Vulkan

Starting

Versions

-

Versions and Features Breakdown .

-

Patch notes.

-

-

Why not use Vulkan 1.0? {12:57 -> end} .

-

1.0 is harder, with missing features and clunky interfaces.

-

The video is pretty nice. I listed the problems it explained about 1.0 and placed them in the documentation below.

-

It was well explained and I came to appreciate using Vulkan 1.3+.

-

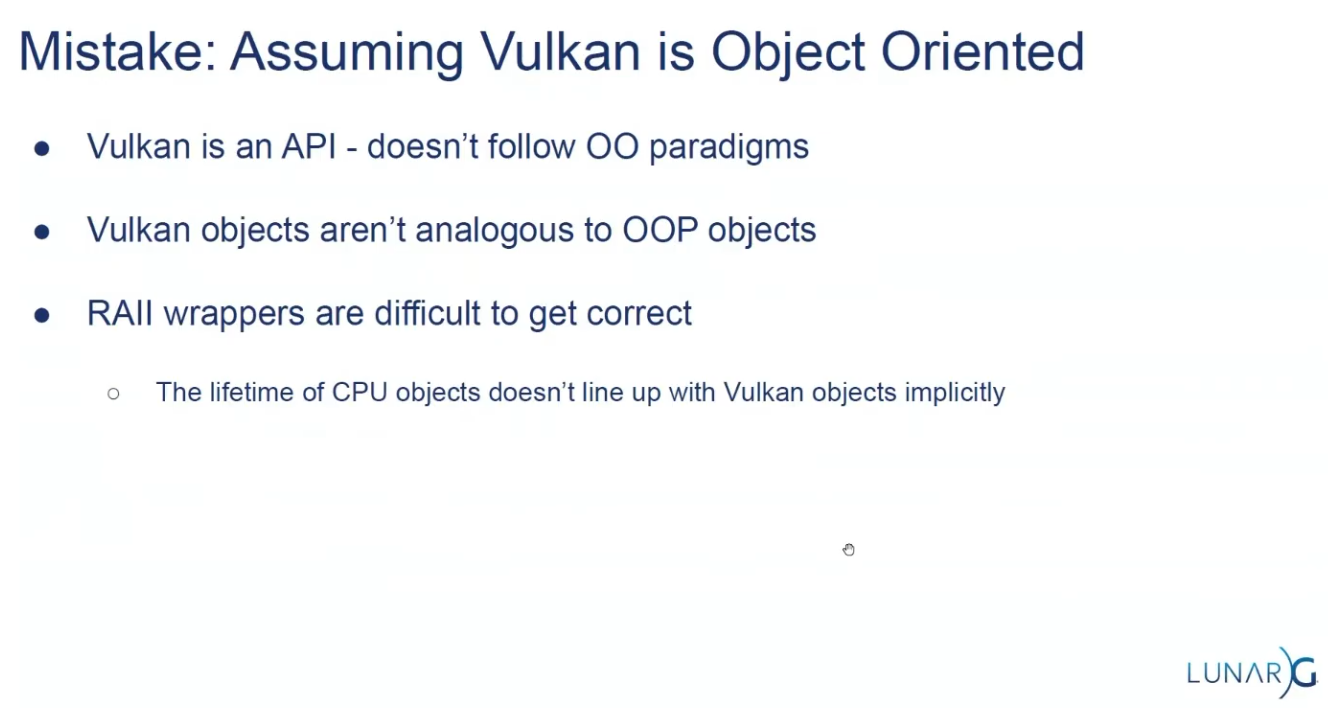

Is OOP?

-

Version 1.3, (2024-02-22).

-

.

.

API Structs

-

Many structures in Vulkan require you to explicitly specify the type of structure in the

sTypemember. -

Functions that create or destroy an object will have a

VkAllocationCallbacksparameter that allows you to use a custom allocator for driver memory, which will also be leftnullptrin this tutorial. -

Almost all functions return a

VkResultthat is eitherSUCCESSor an error code. The specification describes which error codes each function can return and what they mean. -

The

KHRpostfix, which means that these objects are part of a Vulkan extension. -

The

pNextmember can point to an extension structure.

Compatibility

Support

-

Windows (7 and later)

-

Yes, via the official SDK and drivers.

-

-

Linux

-

Yes. Native support via Mesa and vendor drivers.

-

-

Android (5.0+)

-

Yes, most devices from Android 7.0+ support Vulkan.

-

-

macOS

-

No native support — requires MoltenVK (Vulkan-to-Metal wrapper).

-

-

iOS

-

No native support — requires MoltenVK.

-

-

Web

-

No native support — experimental via WebGPU or Emscripten with translation layers.

-

-

Consoles.

-

Partially supported; depends on platform SDKs and NDAs (e.g., Nintendo Switch uses a Vulkan-like API).

-

Driver support

-

Vulkan requires updated GPU drivers.

-

Older or integrated GPUs (especially pre-2013) may lack Vulkan support.

-

Vendor support varies: NVIDIA, AMD, and Intel generally support Vulkan on most modern hardware.

Compatibility Layers

-

To increase compatibility.

-

MoltenVK :

-

Runs Vulkan on Metal (required for macOS/iOS).

-

-

gfx-rs / wgpu / bgfx :

-

Abstraction layers to use Vulkan when available, fallback to other APIs.

-

-

ANGLE / Zink :

-

Can translate other APIs (e.g., OpenGL) to Vulkan and vice-versa.

-

Tutorials

Tutorials in Docs

-

-

I already read everything before the memory allocation section.

-

-

-

Based on the vulkan-tutorial, with differences:

-

Vulkan 1.4 as a baseline

-

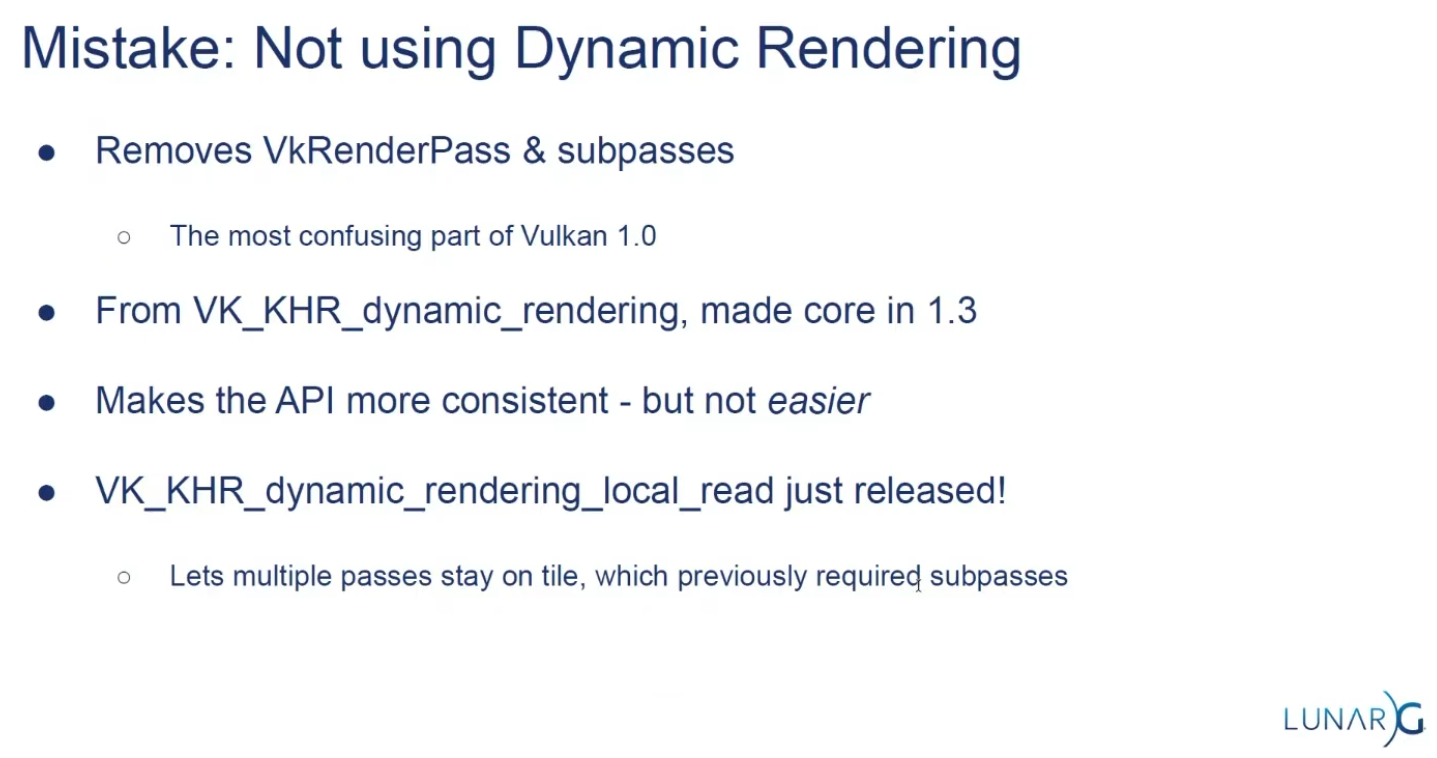

Dynamic rendering instead of render passes

-

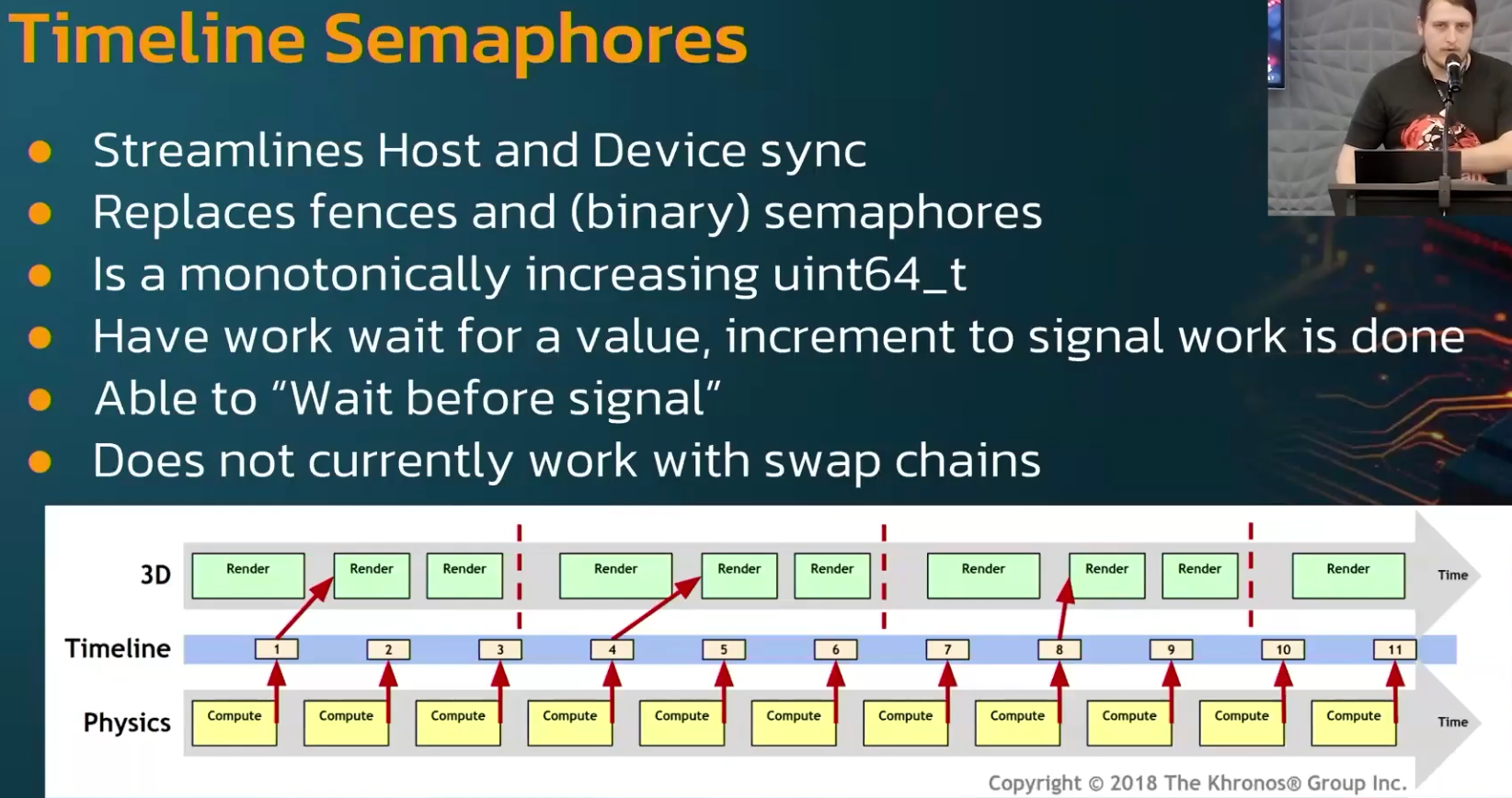

Timeline semaphores

-

Slang as the primary shading language

-

Modern C++ (20) with modules

-

Vulkan-Hpp with RAII

-

It also contains Vulkan usage clarifications, improved synchronization and new content.

-

"This tutorial will use RAII with smart pointers and it will endeavor to demonstrate the latest methods and extensions which should hopefully make Vulkan a joy to use."

-

-

Does not require knowledge of previous APIs, but you need to know C++ and graphics math.

-

Impressions :

-

Holy moly the new C++ API is a pain.

-

I preferred to go back to the vulkan-tutorial several times and check how it's used in the C API.

-

I used this tutorial only as a base to consider the new features.

-

I didn't use Slang, I didn't like it; I stayed with GLSL.

-

-

-

-

Does not require knowledge of previous APIs, but you need to know C++ and graphics math.

-

You can use C, but the tutorial is in C++.

-

Vulkan 1.0; shown here .

-

Uses GLSL for shaders.

-

-

~ Vulkan Guide .

-

For people with previous experience with Graphics APIs.

-

I'm not a big fan of this guide.

-

Uses :

-

Vulkan 1.3.

-

C++, Visual Studio, CMake.

-

SDL to create a window.

-

-

Abstracts a big amount of boilerplate that Vulkan has when setting up. Most of that code is written once and never touched again, so we will skip most of it using this library. This library simplifies instance creation, swapchain creation, and extension loading. It will be removed from the project eventually in an optional chapter that explains how to initialize that Vulkan boilerplate the “manual” way.

-

-

-

Implements memory allocators for Vulkan, header only. In Vulkan, the user has to deal with the memory allocation of buffers, images, and other resources on their own. This can be very difficult to get right in a performant and safe way. Vulkan Memory Allocator does it for us and allows us to simplify the creation of images and other resources. Widely used in personal Vulkan engines or smaller scale projects like emulators. Very high end projects like Unreal Engine or AAA engines write their own memory allocators.

-

-

-

Impressions :

-

The tutorial gives you a project with many things already done, and holds your hand for every syntax, file, folder, methodology, etc.

-

It simply throws a lot of stuff at you.

-

It's a pretty bloated experience, for sure.

-

I consider that a pain.

-

-

-

Playlists

-

Playlist Vulkan with Odin - Nadako .

-

Vulkan 1.3, with Dynamic Rendering.

-

I watched videos 1 through 11.

-

They are good videos.

-

I do not recommend them to someone who has never seen anything before, because they are not exactly for beginners and their explanations lack some foundation.

-

I recommend them as a reference for how to set up in Odin.

-

-

-

C++, with Visual Studio.

-

Assumes you have seen another GPU API before.

-

Video 1:

-

Window with GLFW, not explained.

-

-

Video 8:

-

Theory explanation ok; code explanation meh.

-

-

Video 12:

-

Synchronization with 1 frame in-flight.

-

Good video.

-

-

Video 16:-

Descriptor Sets.

-

Nope. See the spec, guides, or other videos on the subject, I think it's better.

-

-

Video 21:

-

Dynamic Rendering.

-

{0:00 -> 12:14}

-

Explanation of the code to obtain the EXT for Vulkan 1.2, and ignore it for Vulkan 1.3

-

-

The rest of the video is irrelevant, it does not explain anything beyond what to change if someone is following his code line by line.

-

-

-

Playlist Vulkan 2024 - GetIntoGameDev.-

Overall :

-

The person seems nice and I like when he draws things.

-

Unfortunately 95% of the series videos are code in C++ and he does not do a good job explaining the code.

-

I listed some videos below that I considered interesting.

-

-

Vulkan 1.3.

-

Video 12:

-

Synchronization, with 1 frame in-flight.

-

The drawings are nice.

-

-

~Video 13:

-

Multithreaded rendering.

-

Nope. See the Multithreading Rendering section to understand why "nope".

-

-

Video 26:

-

Barycentric coordinates.

-

-

Only code, so nope :

-

Videos: 9, 10, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 27, 28, 29.

-

-

Playlist Vulkan - GetIntoGameDev.-

Vulkan 1.2, (2022-01-22).

-

Watch the new 2024 version of the tutorials.

-

The person sometimes explains on a sheet of paper, which is nice.

-

-

-

Playlist Vulkan - Computer Graphics at TU Wien.-

Vulkan 1.2.

-

Video 1:

-

SDK, Instances, extensions, physical devices, logical devices.

-

Ok.

-

-

Video 2:

-

Presentation Modes, Swapchain.

-

{10:20 -> 21:45}

-

Explanation of all Presentation Modes.

-

-

-

Video 3:

-

Explanation of Buffers and Images.

-

The explanation seemed s a bit rushed and the definition is poorly established.

-

I can return and rewatch the video after reading the documentation.

-

-

Video 4:

-

Commands, Command Pools, Command Buffers.

-

Ok, sure.

-

I skipped the descriptor sets part.

-

-

Video 5:

-

Pipelines.

-

I skipped it.

-

-

Video 6:

-

Synchronization.

-

Skipped.

-

-

Impressions :

-

I don't like the illustrations, nor the tone of the explanation.

-

I simply feel I learn more and feel more confident reading the documentation or the spec.

-

The videos are "more technical", but when that is the case documentation is better.

-

I prefer a simpler playlist to learn some basic concepts, and to read the documentation for advanced topics.

-

-

-

Playlist Vulkan - Brendan Galea.-

Vulkan 1.0.

-

C++, with Visual Studio.

-

It's a pain to see C++ code.

-

The sketch explanations in the middle of the videos are ok, but the rest is very bad; all code-related parts are unpleasant and with a LOT of mess in C++.

-

Video 1:

-

Window with GLFW.

-

-

Video 2:

-

Light explanation of the graphics pipeline.

-

{9:54}

-

Shader compilation, to SPIR-V.

-

-

-

Video 20:

-

Descriptor Sets

-

{0:00 -> 5:35} Nice explanation.

-

The rest of the video is nah.

-

-

-

-

C++

-

Starts by teaching how to install Visual Studio and Git...

-

Does not use GLFW, instead creates its own platform layer on Windows to create a window.

-

-

Vulkan playlist - Francesco Piscani.-

He uses the vulkan-tutorial.

-

Spends the first 4 episodes doing basically nothing, just setting up CMake and Linux.

-

Nope, it sounds bad as tutorials.

-

Talks

-

-

Use RenderDoc extensively.

-

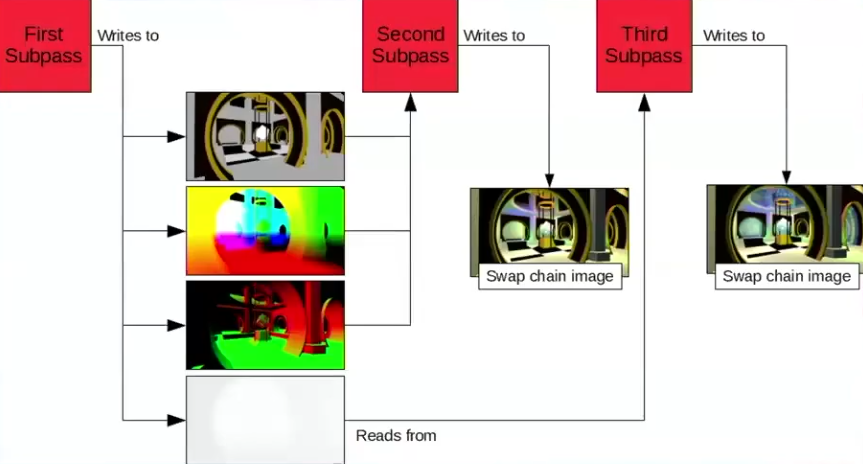

1 Render Pass, 1 subpass, 3 attachments.

-

.

.

-

-

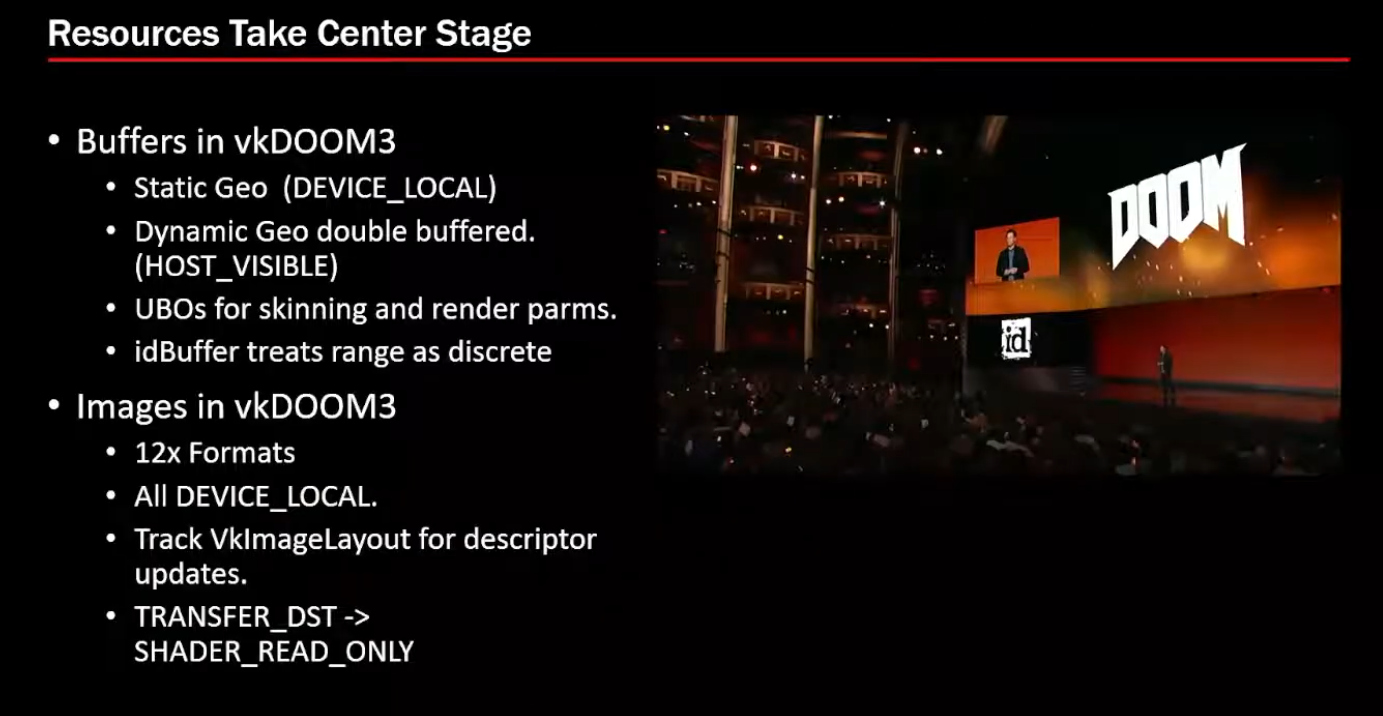

Buffers and Images

-

.

.

-

-

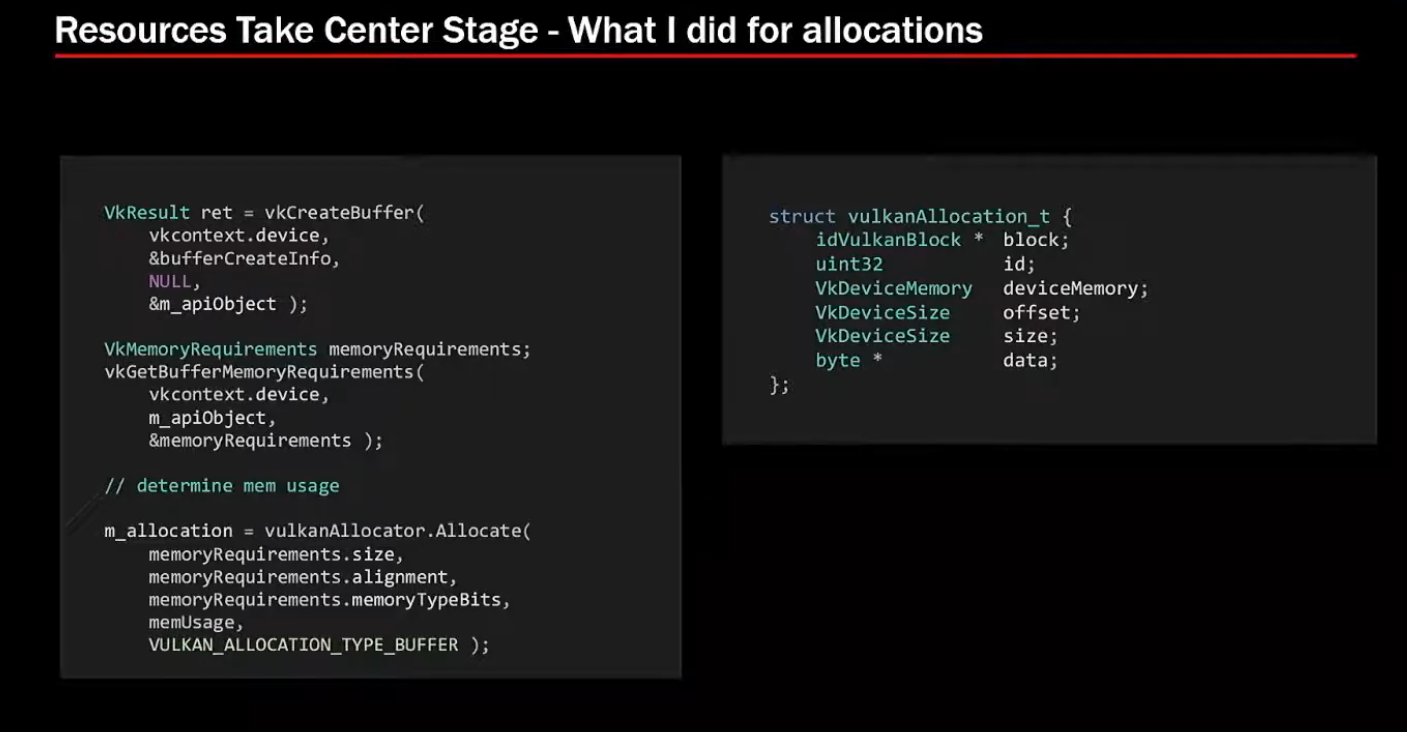

Allocations:

-

VMA for allocators.

-

.

.

-

.

.

-

-

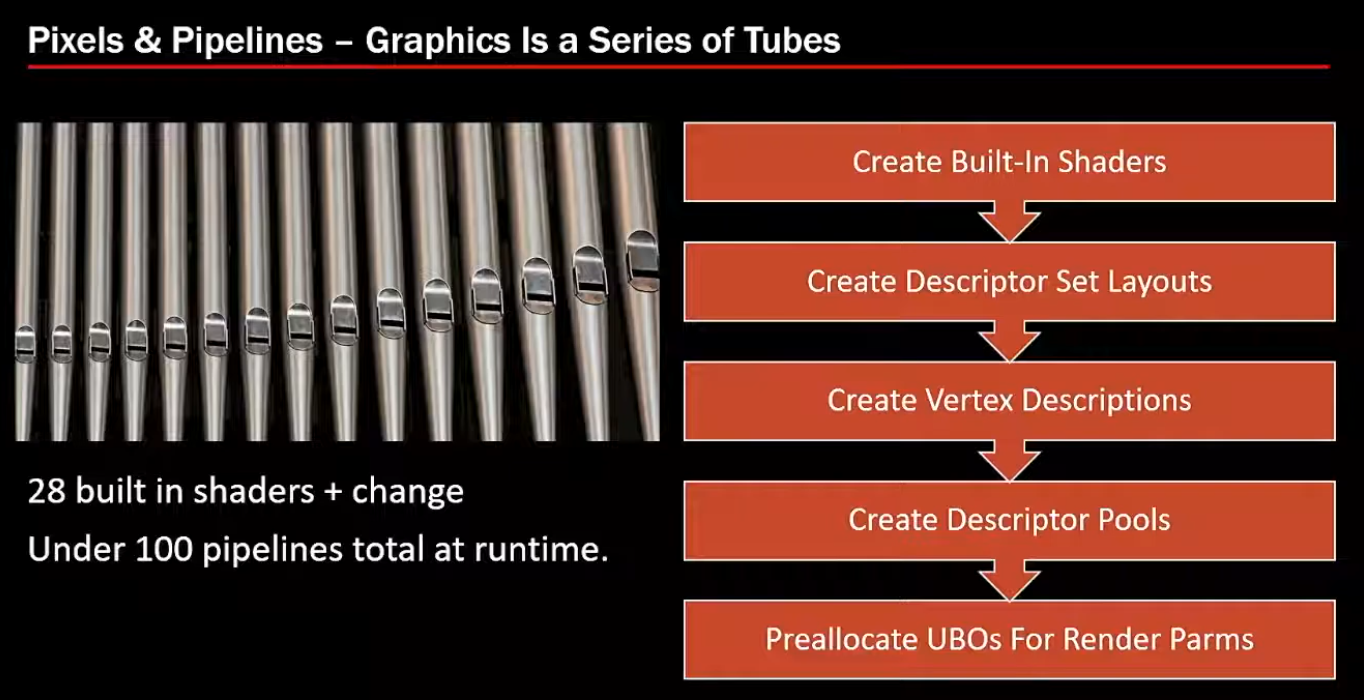

28 shaders + changes => 100 pipelines total at runtime.

-

.

.

-

-

Synchronization:

-

Not much of it. Doom 3 was single-threaded, it didn't require multithreading.

-

-

Samples

-

To run :

-

Git clone recursively the repo.

-

Build the entire solution.

-

Vulkan-Samples\build\windows\app\bin\debug\AMD64. -

Copy the

shadersandassetsfolders fromVulkan-Samplesto the folder above. -

Type

.\vulkan_samples sample sample_name.

-

-

Note :

-

Normal and hpp have the same performance; or whatever, it does not matter.

-

-

Impressions :

-

The extension samples were more visually "uninteresting".

-

I saw all API samples, but I didn't see all Extensions.

-

There were still other folders besides these two, but I was lazy to check.

-

API

-

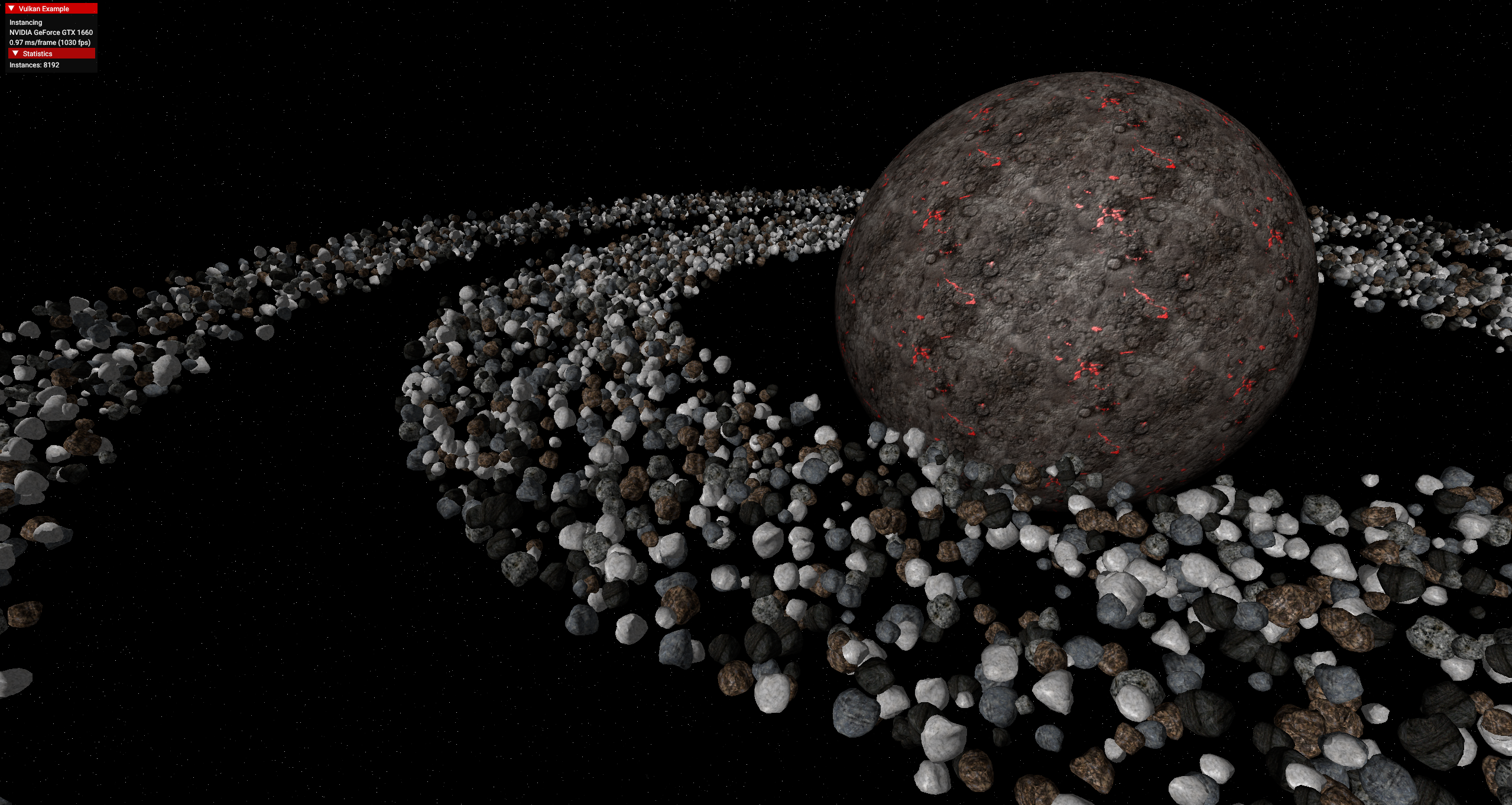

instancing

-

.

.

-

Wow, awesome.

-

The fps is very high.

-

-

oit_linked_lists (Order Independent Transparency)

-

.

.

-

-

oit_depth_peeling (Order Independent Transparency)

-

The object in the center rotates with the mouse.

-

.

.

-

-

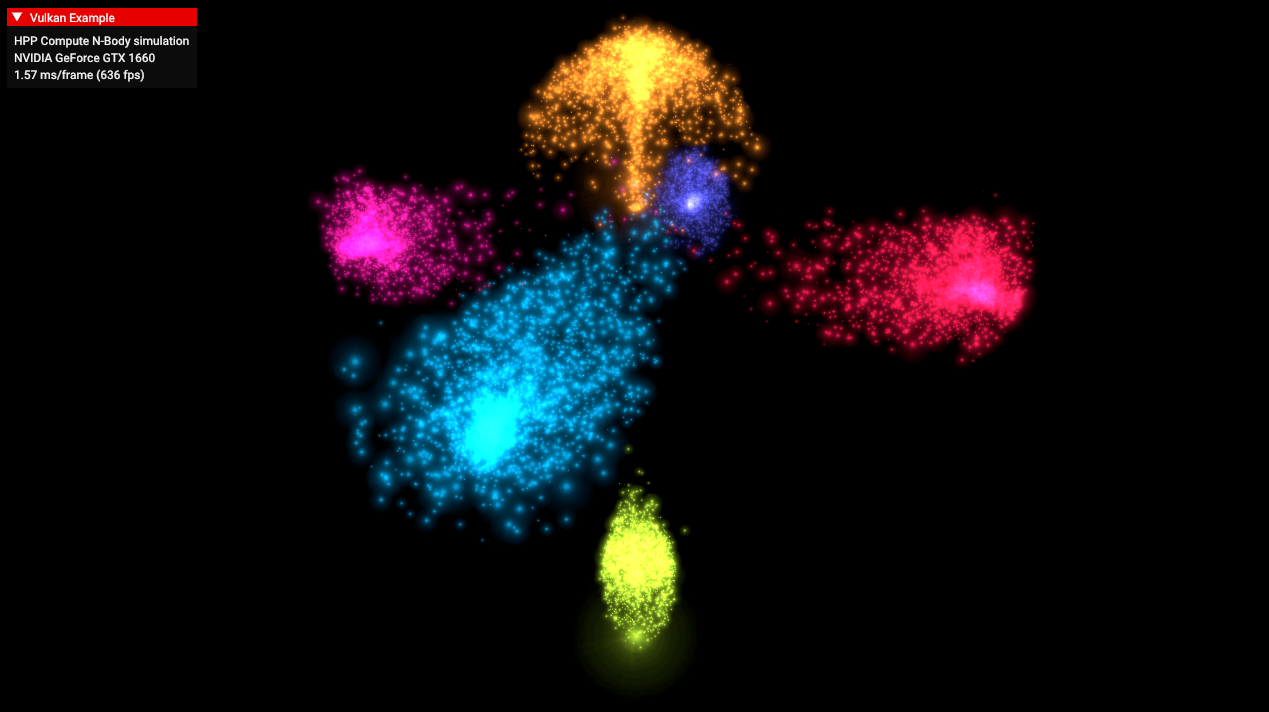

compute_nbody

-

.

.

-

-

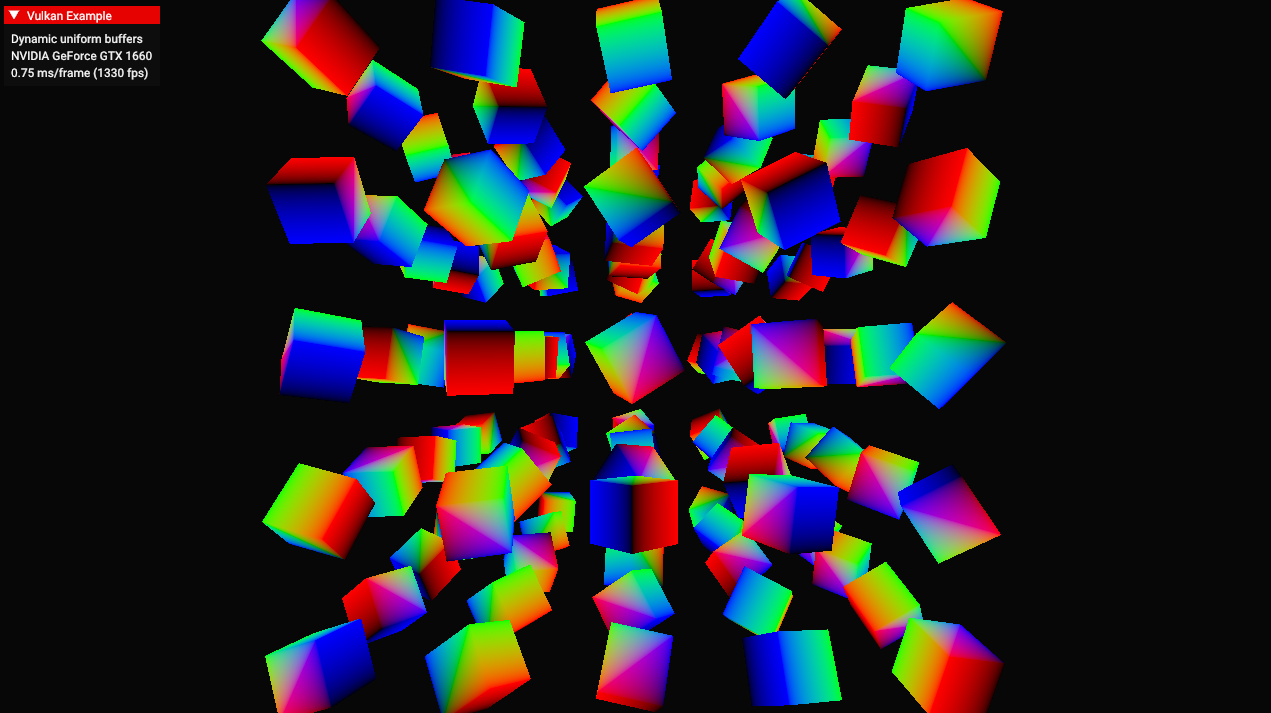

dynamic_uniform_buffers.

-

.

.

-

-

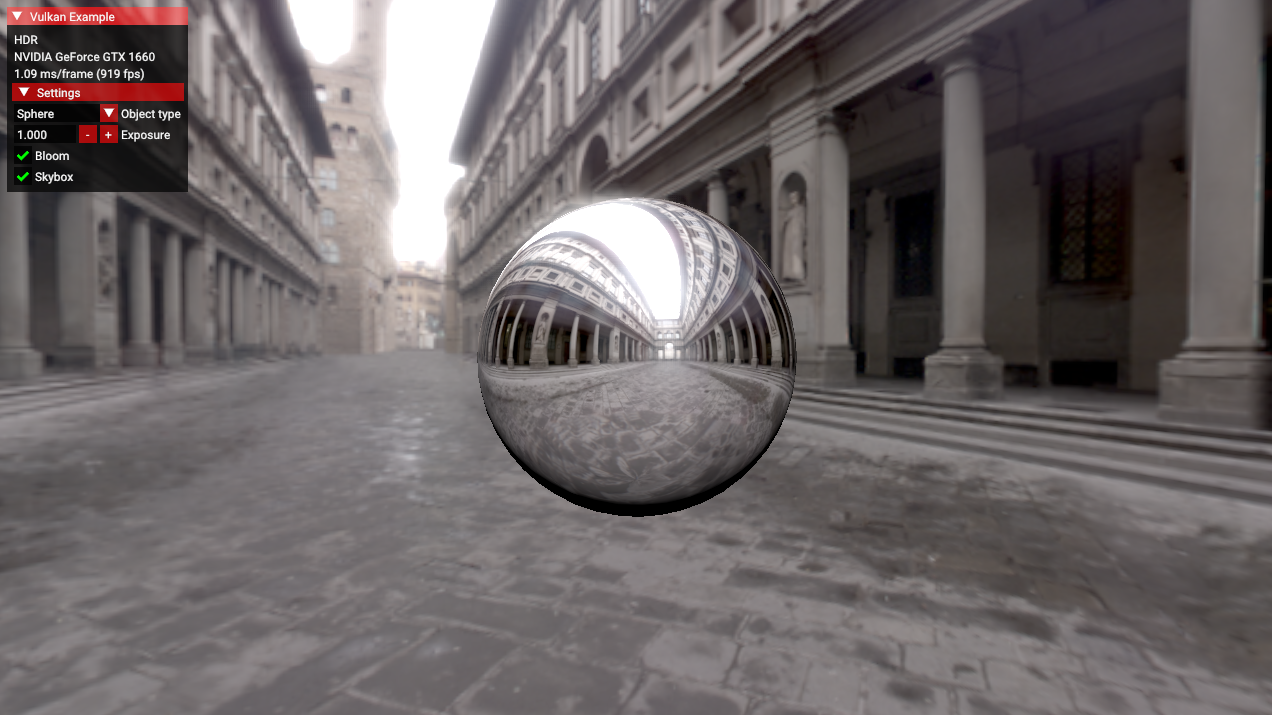

hdr

-

.

.

-

Allows changing the object, toggling the skybox, changing the exposure, toggling bloom.

-

-

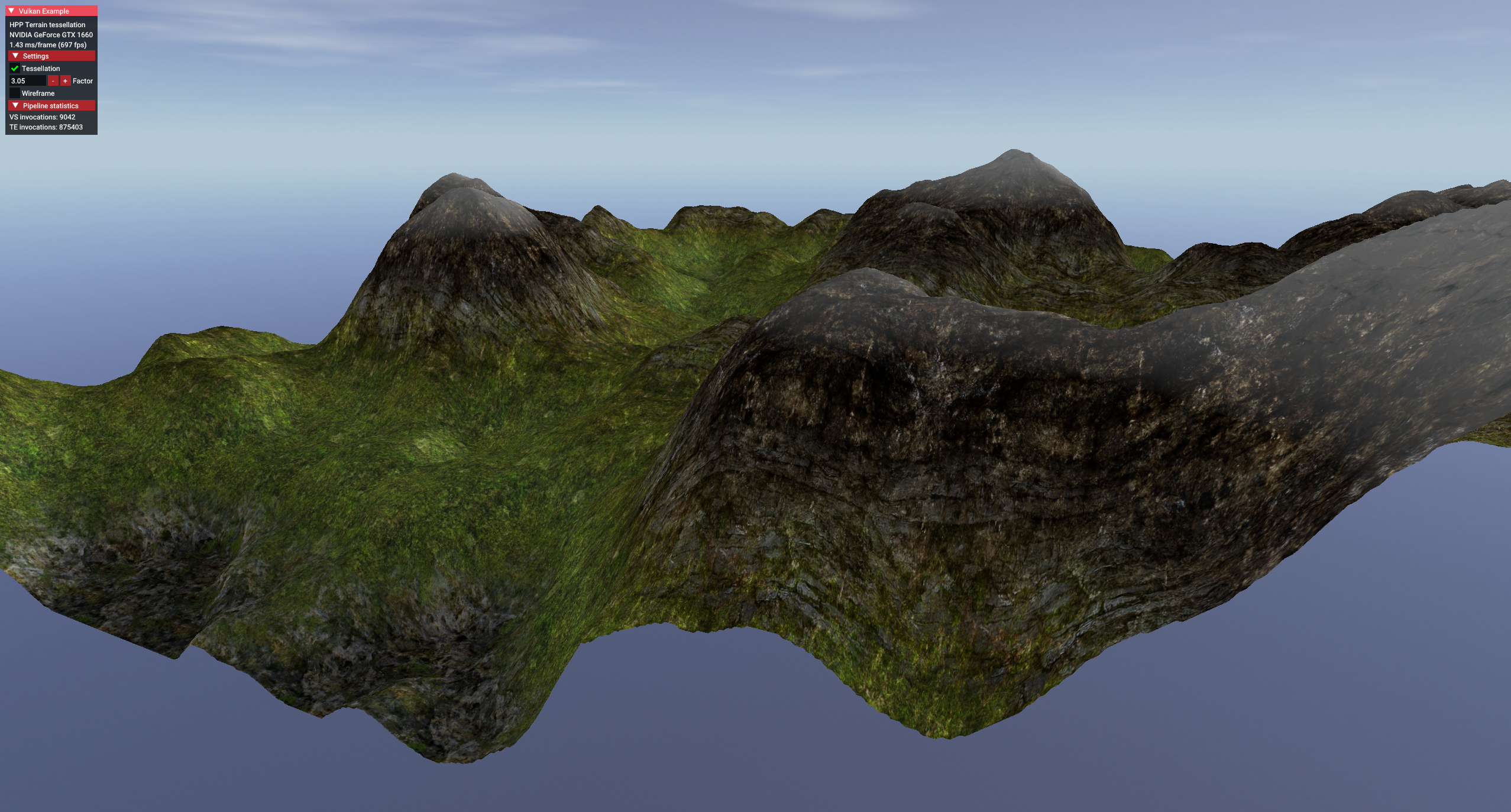

terrain_tessellation

-

.

.

-

Increasing the tessellation factor made it look like the terrain polycount increased.

-

-

timestamp_queries

-

.

.

-

Allows changing the object, toggling the skybox, changing the exposure, toggling bloom.

-

-

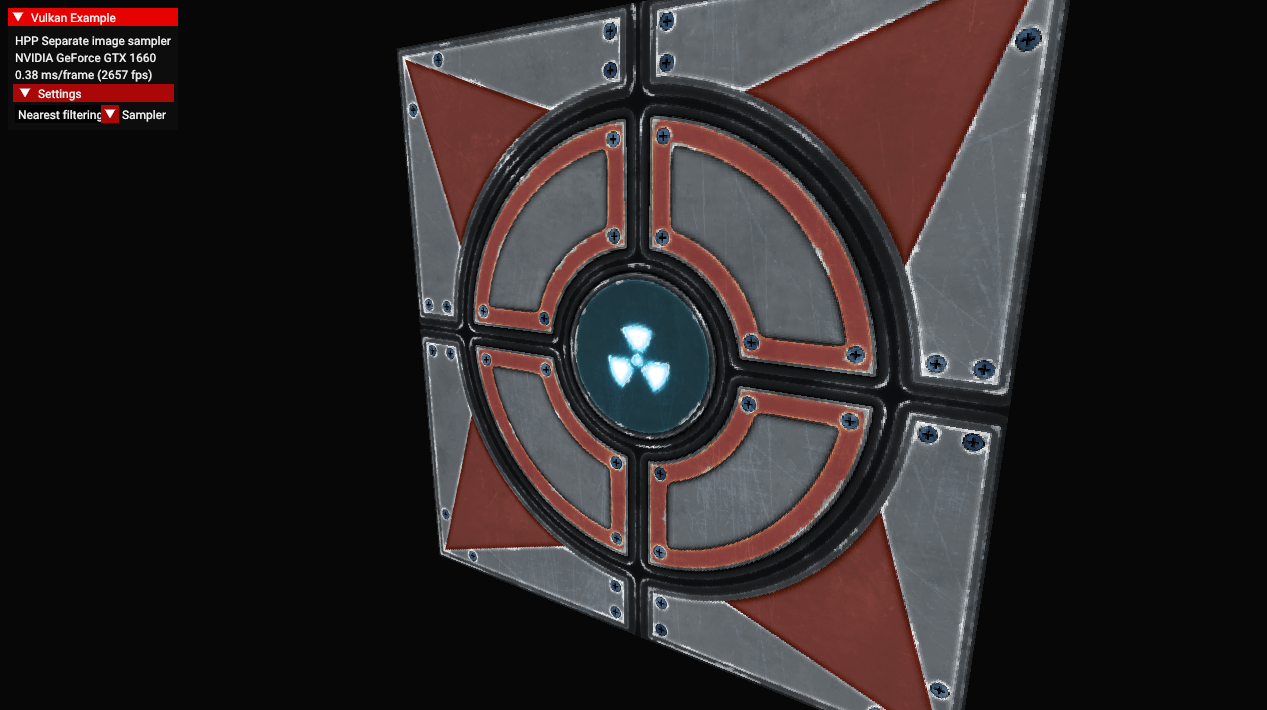

separate_image_sampler

-

.

.

-

Allows selecting linear or nearest filtering.

-

-

texture_loading

-

.

.

-

Allows increasing the LOD bias, reducing image quality.

-

-

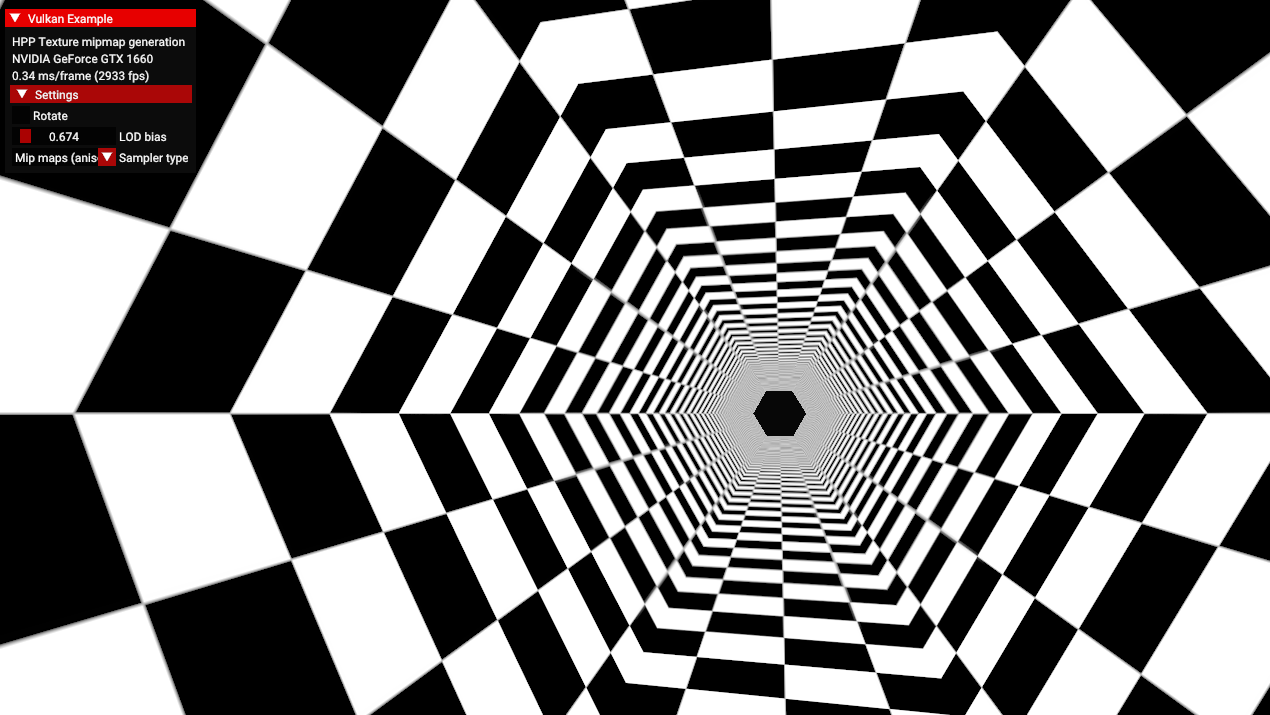

texture_mipmap_generation

-

.

.

-

Allows calibrating the LOD bias, and choosing between mipmap off, bilinear and anisotropic.

-

-

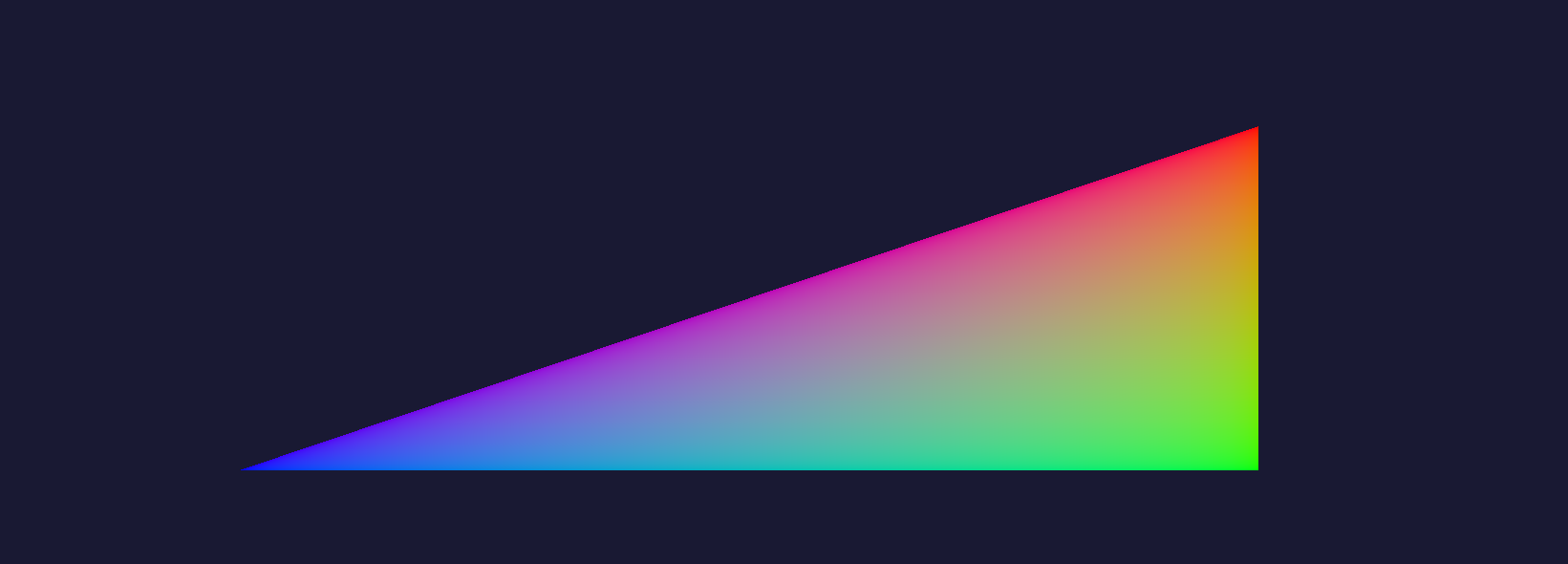

hello_triangle_1_3 / hello_triangle

-

.

.

-

Nothing special

-

No dynamic resize.

-

Extensions

-

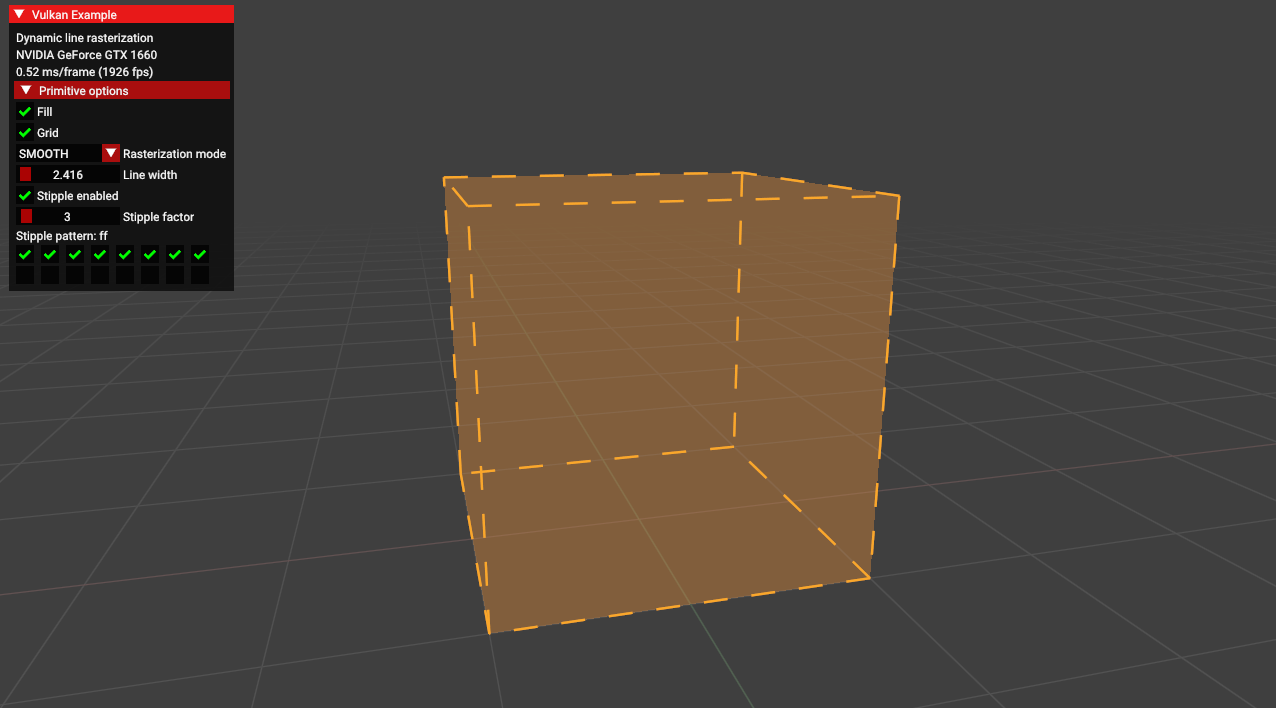

dynamic_line_rasterization

-

.

.

-

This sample demonstrates functions from various extensions related to dynamic line rasterization.

-

These functions can be useful for developing CAD applications.

-

From the

EXT_line_rasterizationextension.-

vkCmdSetLineStippleEXT- sets the stipple pattern.

-

-

From the

EXT_extended_dynamic_state3extension:-

vkCmdSetPolygonModeEXT- sets how defined primitives should be rasterized. -

vkCmdSetLineRasterizationModeEXT- sets the algorithm for line rasterization. -

vkCmdSetLineStippleEnableEXT- toggles stippling for lines.

-

-

And also from core Vulkan:

-

vkCmdSetLineWidth- sets the line width. -

vkCmdSetPrimitiveTopologyEXT- defines which type of primitives is being drawn.

-

-

-

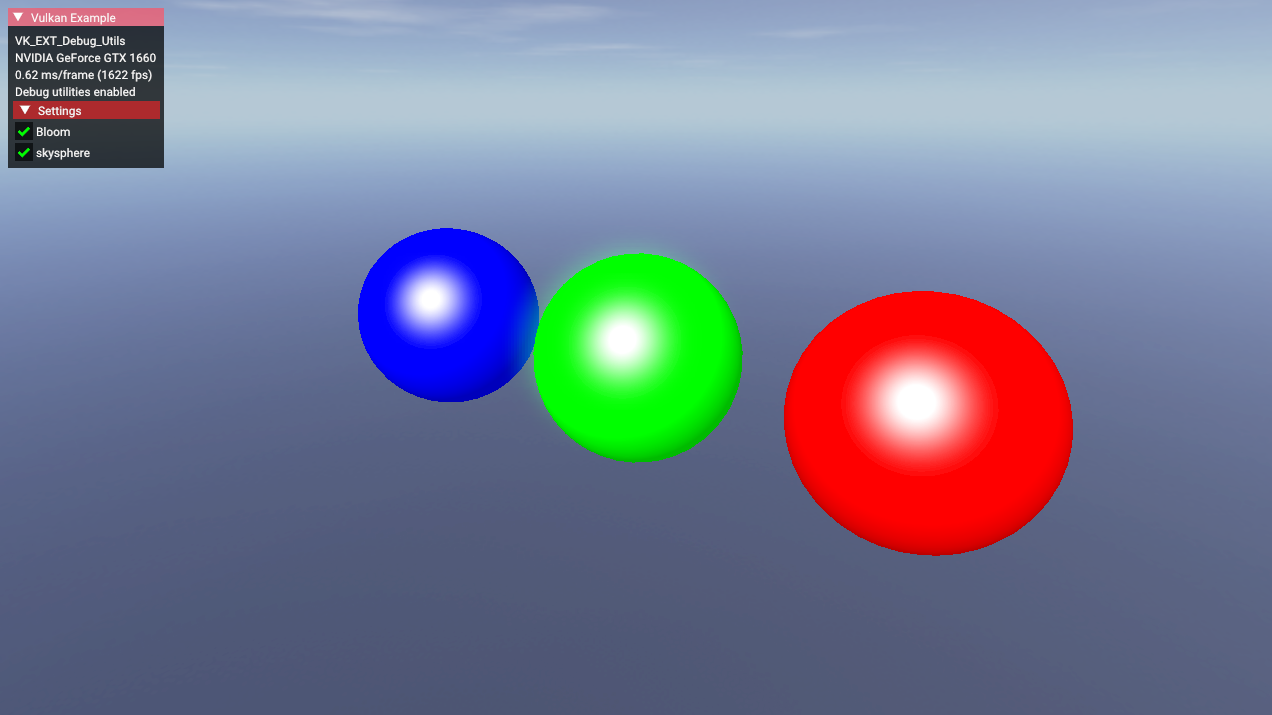

debug utils

-

.

.

-

Toggle bloom, toggle skybox.

-

The

EXT_debug_utilsextension to setup a validation layer messenger callback and pass additional debugging information to debuggers like RenderDoc. -

EXT_debug_utilshas been introduced based on feedback for the initial Vulkan debugging extensionsEXT_debug_reportandEXT_debug_marker, combining these into a single instance extension with some added functionality. -

Procedure examples :

-

vkCmdBeginDebugUtilsLabelEXT -

vkCmdInsertDebugUtilsLabelEXT -

vkCmdEndDebugUtilsLabelEXT -

vkQueueBeginDebugUtilsLabelEXT -

vkQueueInsertDebugUtilsLabelEXT -

vkQueueEndDebugUtilsLabelEXT -

vkSetDebugUtilsObjectNameEXT -

vkSetDebugUtilsObjectTagEXT

-

-

-

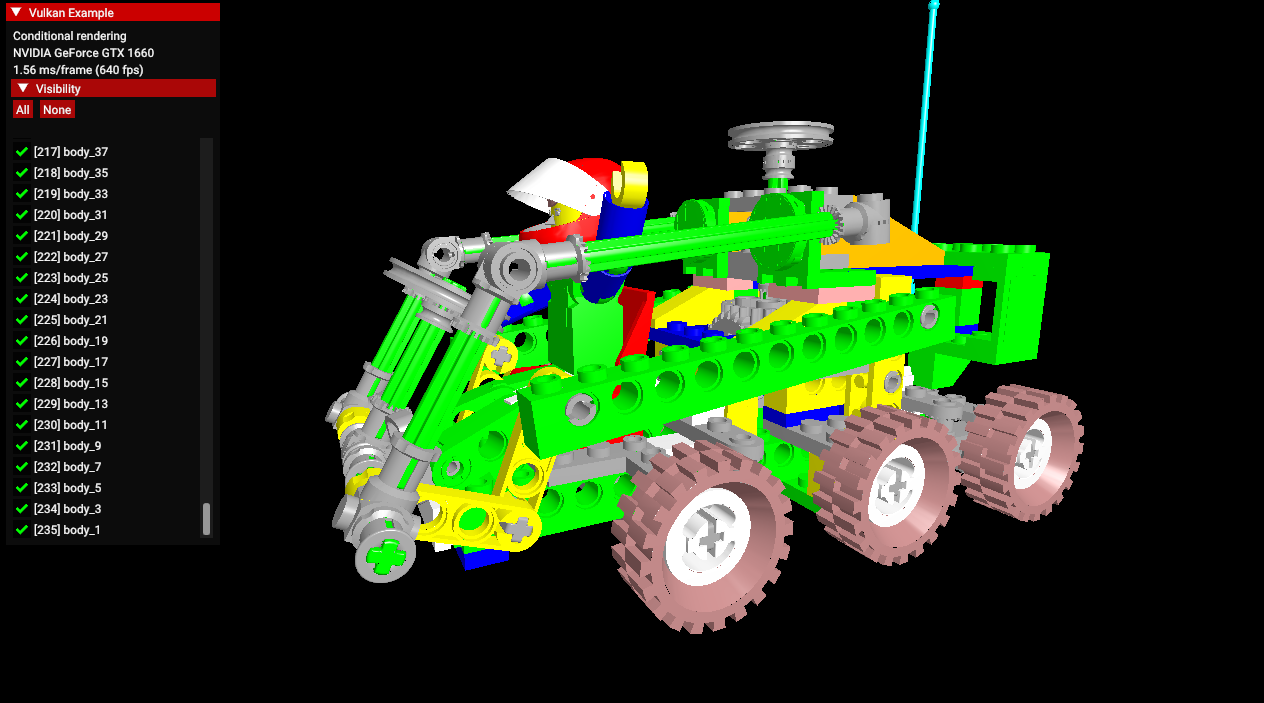

conditional_rendering

-

.

.

-

A list of 235 parts of the car, which can be disabled to not render.

-

The

EXT_conditional_renderingextension allows the execution of rendering commands to be conditional based on a value taken from a dedicated conditional buffer. -

This may help an application reduce latency by conditionally discarding rendering commands without application intervention.

-

This sample demonstrates usage of this extension for conditionally toggling the visibility of sub-meshes of a complex glTF model.

-

Instead of having to update command buffers, this is done by updating the aforementioned buffer.

-

-

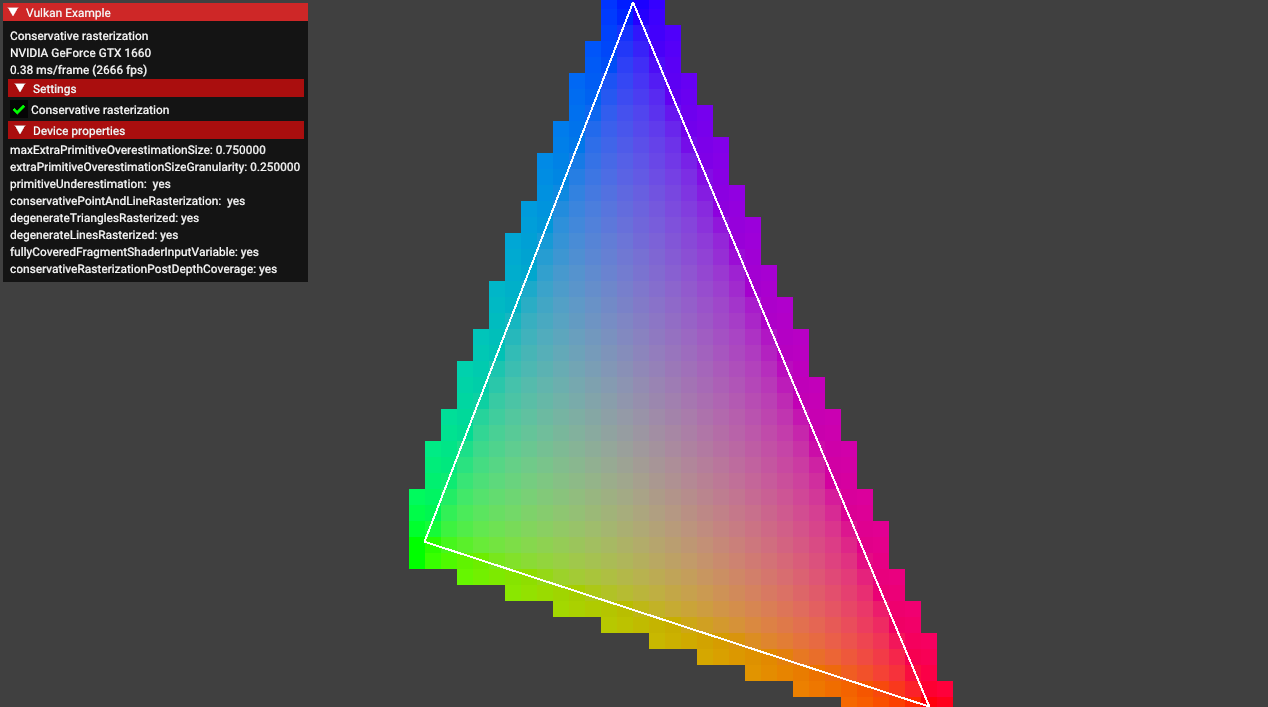

conservative_rasterization

-

.

.

-

Enabling the conservative rasterization option causes this blending effect.

-

EXT_conservative_rasterizationchanges the way fragments are generated. -

Enables overestimation to generate fragments for every pixel touched instead of only pixels that are fully covered.

-

-

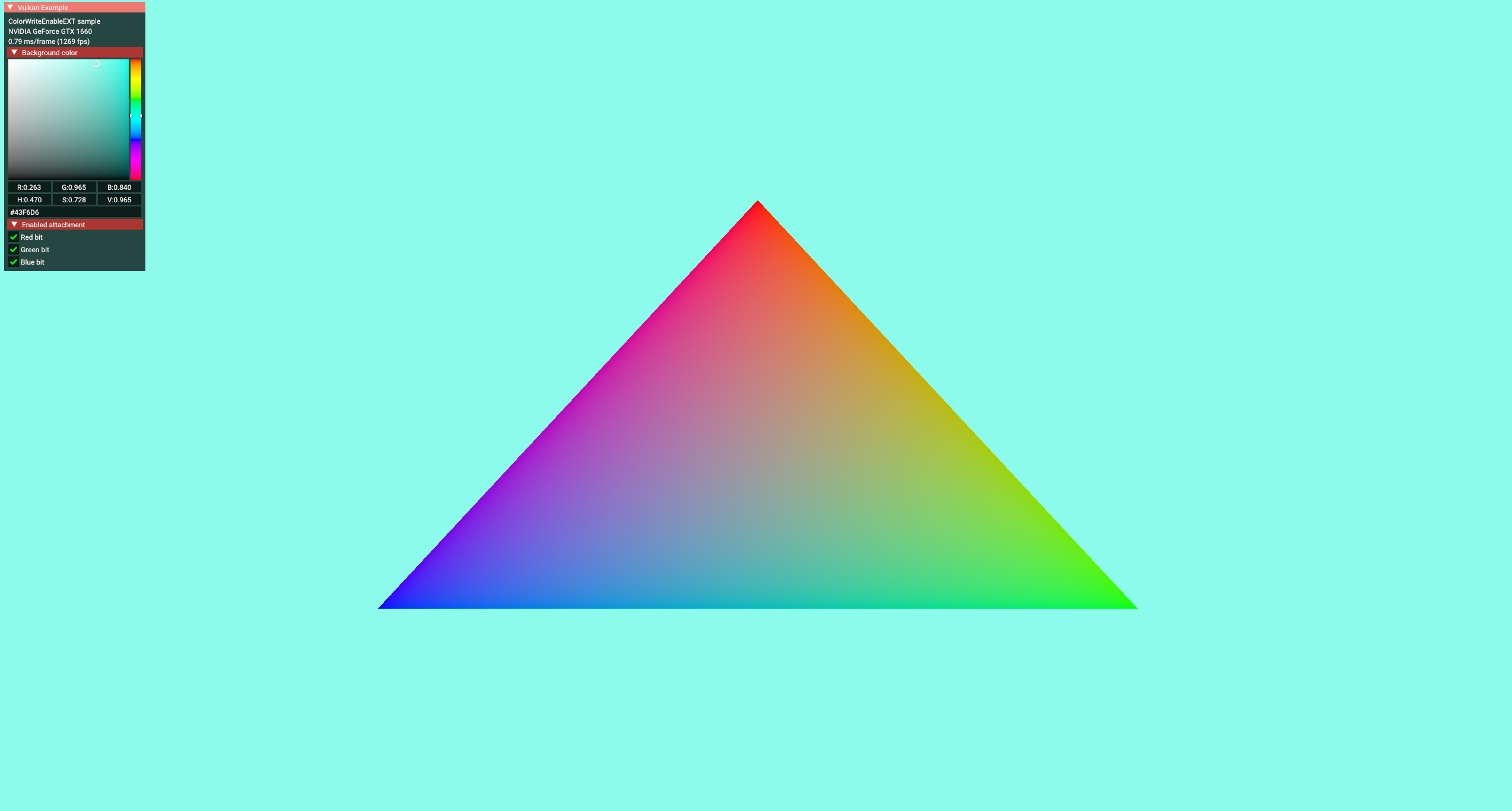

color_write_enable

-

.

.

-

Color picker to change the background color.

-

Some options for "bit", changing the triangle color.

-

The

EXT_color_write_enableextension allows toggling the output color attachments using a pipeline dynamic state. -

It allows the program to prepare an additional framebuffer populated with the data from a defined color blend attachment which can be blended dynamically to the final scene.

-

The final results are comparable to those obtained with

vkCmdSetColorWriteMaskEXT, but it does not require the GPU driver to supportEXT_extended_dynamic_state3.

-

-

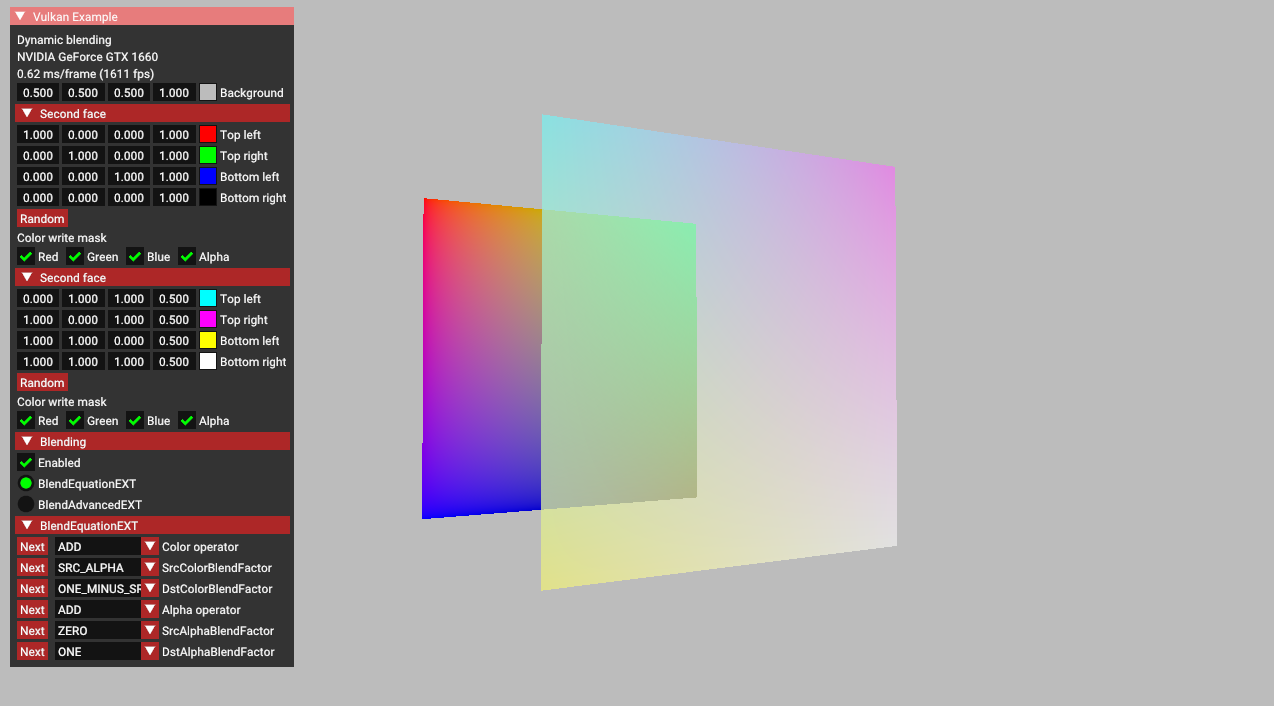

dynamic_blending

-

-

This sample demonstrates the functionality of

EXT_extended_dynamic_state3related to blending. -

It includes the following features:

-

vkCmdSetColorBlendEnableEXT: toggles blending on and off. -

vkCmdSetColorBlendEquationEXT: modifies blending operators and factors. -

vkCmdSetColorBlendAdvancedEXT: utilizes more complex blending operators. -

vkCmdSetColorWriteMaskEXT: toggles individual channels on and off.

-

-

-

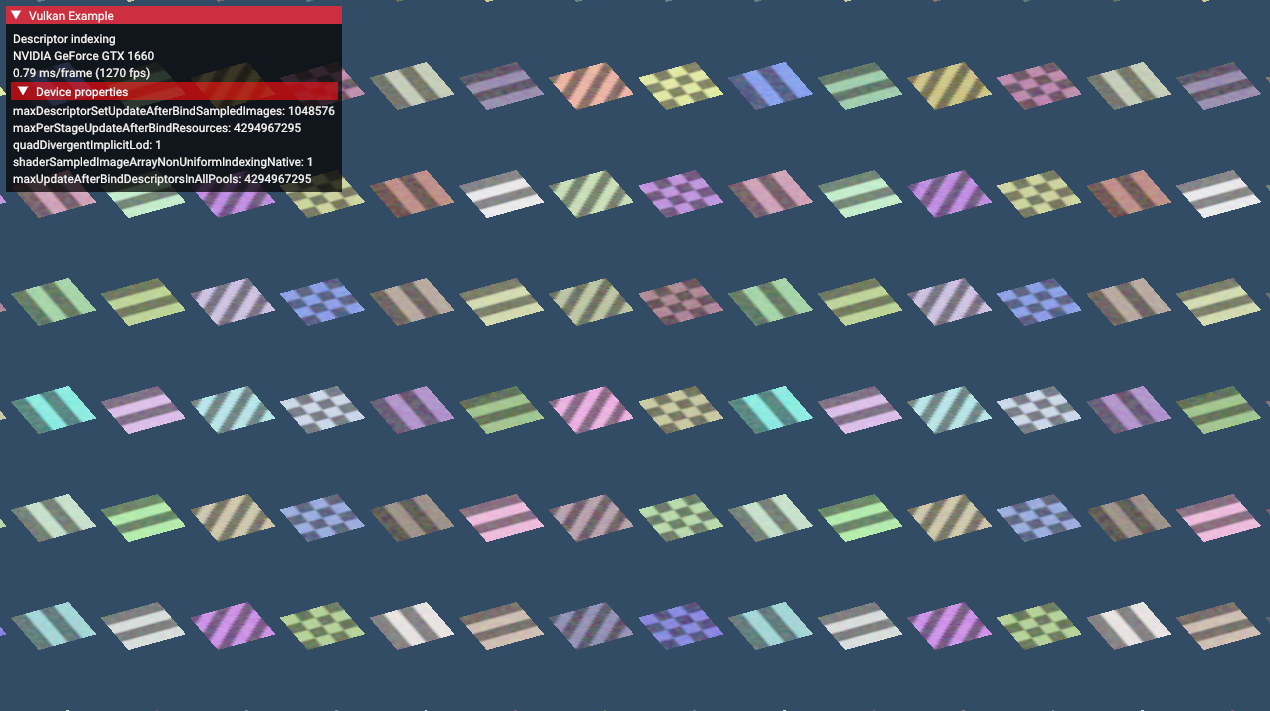

descriptor_indexing

-

.

.

-

-

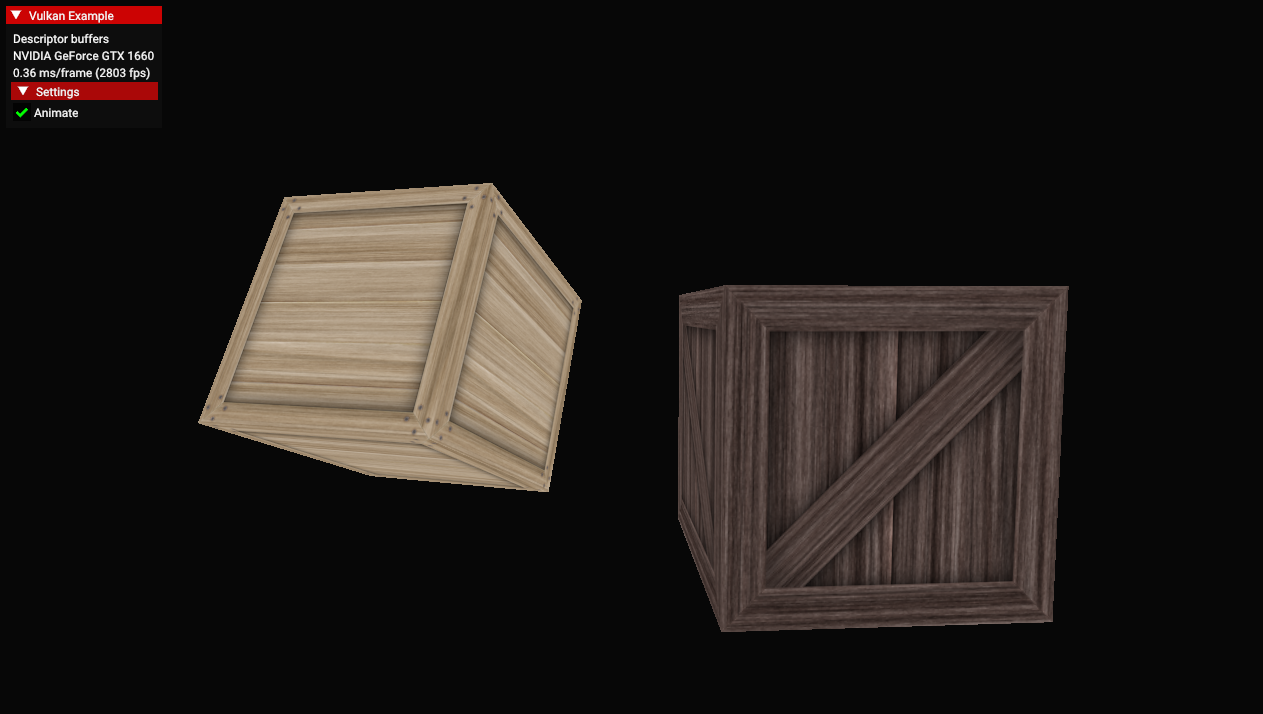

~descriptor_buffer_basic

-

.

.

-

Just boxes rotating, I didn't understand.

-

Just textures rotating, I didn't understand.

-

-

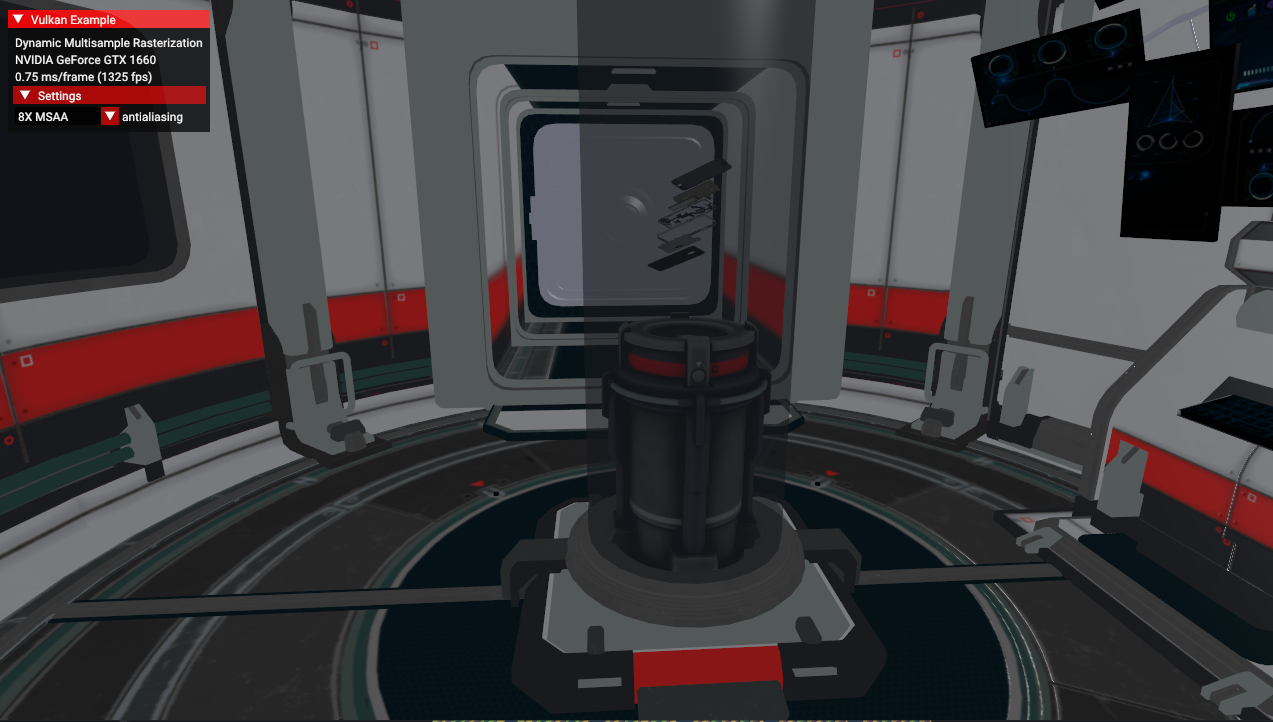

dynamic_multisample_rasterization

-

This sample demonstrates one of the functionalities of

EXT_extended_dynamic_state3related to rasterization samples. -

The extension can be used to dynamically change sampling without the need to swap pipelines.

-

.

.

-

This thing took quite a while to open, generating binary files, etc.

-

-

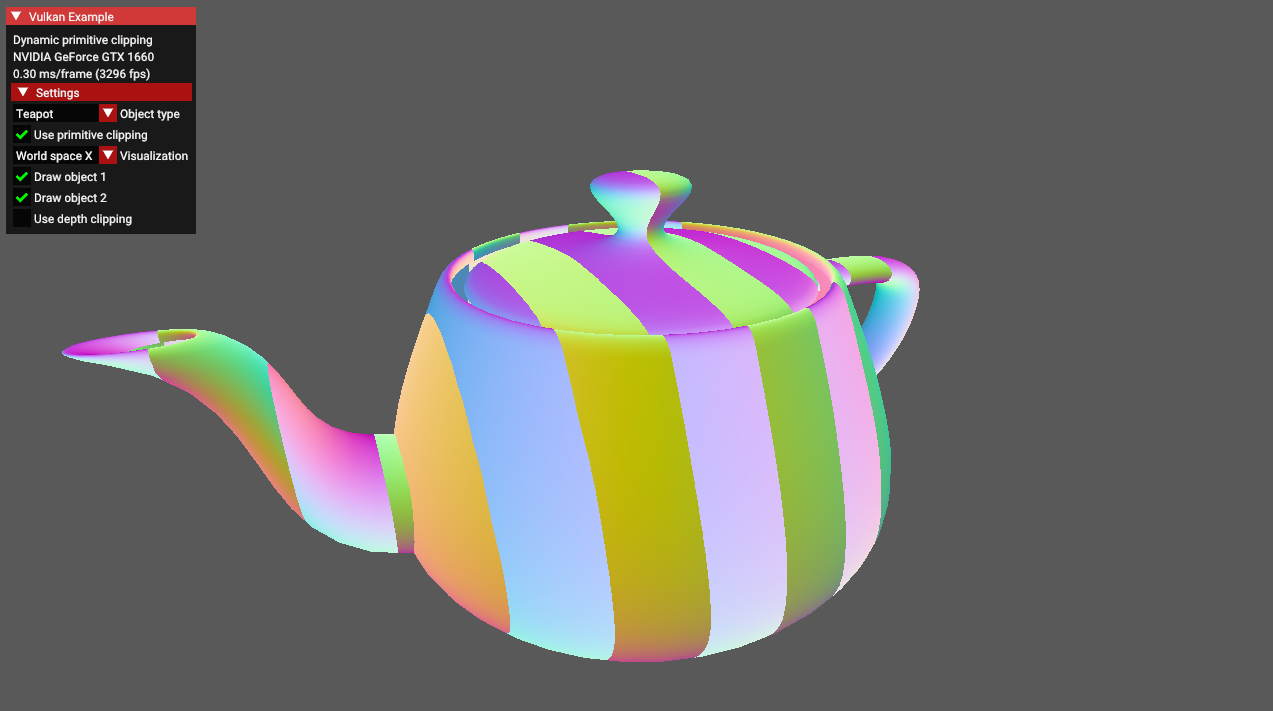

dynamic_primitive_clipping

-

.

.

-

This sample demonstrates how to apply depth clipping using the

vkCmdSetDepthClipEnableEXT()command which is a part of theEXT_extended_dynamic_state3extension. -

Additionally it also shows how to apply primitive clipping using the

gl_ClipDistance[]builtin shader variable. -

It is worth noting that primitive clipping and depth clipping are two separate features of the fixed-function vertex post-processing stage.

-

They're both described in the same chapter of the Vulkan specification (chapter 27.4, "Primitive clipping").

-

What is primitive clipping

-

Primitives produced by vertex/geometry/tessellation shaders are sent to fixed-function vertex post-processing.

-

Primitive clipping is a part of post-processing pipeline in which primitives such as points/lines/triangles are culled against the cull volume and then clipped to the clip volume.

-

And then they might be further clipped by results stored in the

gl_ClipDistance[]array - values in this array must be calculated in a vertex/geometry/tessellation shader. -

In the past, the fixed-function version of the OpenGL API provided a method to specify parameters for up to 6 clipping planes (half-spaces) that could perform additional primitive clipping. Fixed-function hardware calculated proper distances to these planes and made a decision - should the primitive be clipped against these planes or not (for historical study - search for the

glClipPlane()description). -

Vulkan inherited the idea of primitive clipping, but with one important difference: the user has to calculate the distance to the clip planes on their own in the vertex shader.

-

And - because the user does it in a shader - they do not have to use clip planes at all. It can be any kind of calculation, as long as the results are put in the

gl_ClipDistance[]array. -

Values that are less than 0.0 cause the vertex to be clipped. In the case of a triangle primitive the whole triangle is clipped if all of its vertices have values stored in

gl_ClipDistance[]below 0.0. When some of these values are above 0.0 - the triangle is split into new triangles as described in the Vulkan specification.

-

-

What is depth clipping

-

When depth clipping is disabled then effectively there is no near or far plane clipping.

-

Depth values of primitives that are behind the far plane are clamped to the far plane depth value (usually 1.0).

-

Depth values of primitives that are in front of the near plane are clamped to the near plane depth value (by default it's 0.0, but may be set to -1.0 if we use settings defined in

VkPipelineViewportDepthClipControlCreateInfoEXTstructure. This requires the presence of theEXT_depth_clip_controlextension which is not part of this tutorial). -

In this sample the result of depth clipping (or lack of it) is not clearly visible at first. Try to move the viewer position closer to the object and see how the "use depth clipping" checkbox changes object appearance.

-

-

-

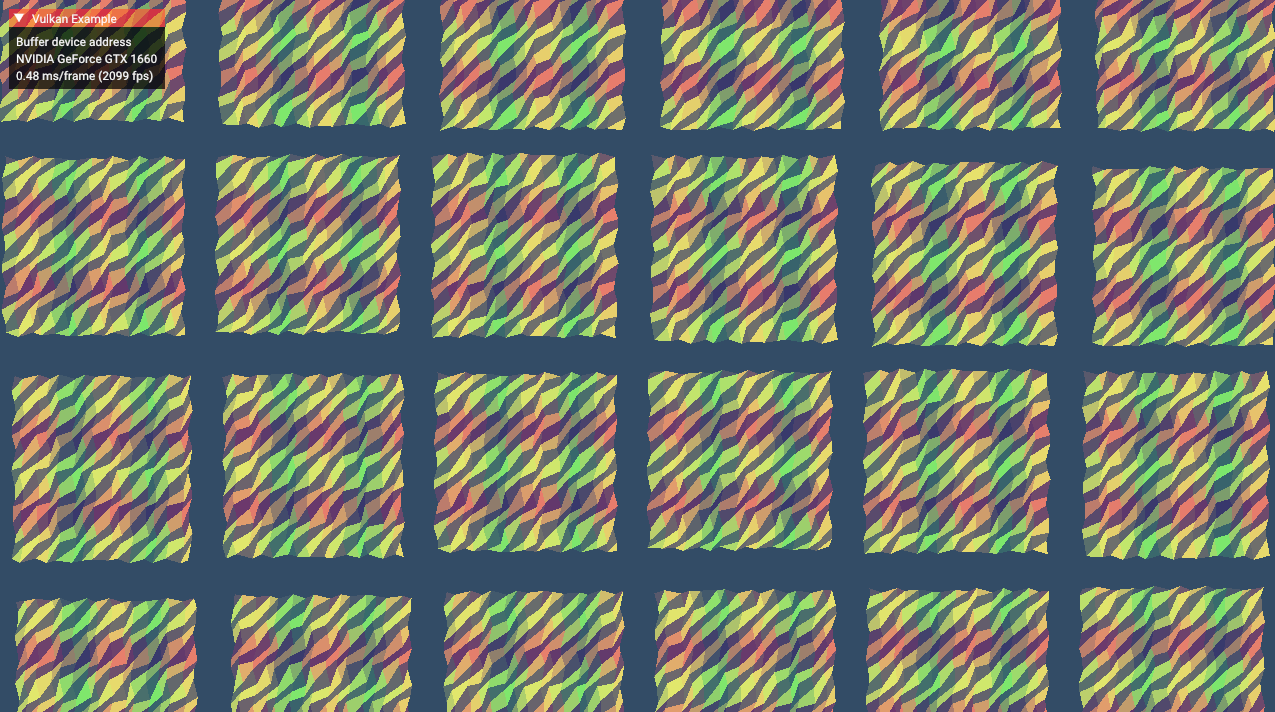

~buffer_device_address.

-

.

.

-

I didn't understand. It's just things moving.

-

-

~calibrated_timestamps

-

timestamp_queries, but with other timings.

-

Core

Instance / Extensions

Instance

-

VkInstance-

The Vulkan context, used to access drivers.

-

-

The instance is the connection between your application and the Vulkan library.

-

-

Optional, but it may provide some useful information to the driver to optimize our specific application.

-

-

-

Tells the Vulkan driver which global extensions and validation layers we want to use.

-

Instance Level Extensions

-

vkEnumerateInstanceExtensionProperties()-

Retrieve a list of supported extensions before creating an instance.

-

Each

VkExtensionPropertiesstruct contains the name and version of an extension.

-

Debugging

Validation Layers

-

Layers .

-

Vulkan is designed for high performance and low driver overhead, therefore, it will include very limited error checking and debugging capabilities by default.

-

The driver will often crash instead of returning an error code if you do something wrong, or worse, it will appear to work on your graphics card and completely fail on others.

-

Vulkan allows you to enable extensive checks through a feature known as validation layers .

-

Validation layers are pieces of code that can be inserted between the API and the graphics driver to do things like running extra checks on function parameters and tracking memory management problems.

-

The nice thing is that you can enable them during development and then completely disable them when releasing your application for zero overhead. Anyone can write their own validation layers, but the Vulkan SDK by LunarG provides a standard set of validation layers. You also need to register a callback function to receive debug messages from the layers.

-

Because Vulkan is so explicit about every operation and the validation layers are so extensive, it can actually be a lot easier to find out why your screen is black compared to OpenGL and Direct3D!

-

Common operations in validation layers are:

-

Checking the values of parameters against the specification to detect misuse

-

Tracking the creation and destruction of objects to find resource leaks

-

Checking thread safety by tracking the threads that calls originate from

-

Logging every call and its parameters to the standard output

-

Tracing Vulkan calls for profiling and replaying

-

-

There were formerly two different types of validation layers in Vulkan: instance and device specific.

-

The idea was that instance layers would only check calls related to global Vulkan objects like instances, and device-specific layers would only check calls related to a specific GPU.

-

Device-specific layers have now been deprecated , which means that instance validation layers apply to all Vulkan calls.

-

We don’t really need to check for the existence of this extension because it should be implied by the availability of the validation layers.

-

vkEnumerateInstanceLayerProperties -

RenderDoc :

-

Do not run validation at the same time as RenderDoc, otherwise you'll also be validating RenderDoc.

-

-

Vulkan Configurator :

-

Overwrites the normal Layer setup.

-

Implicitly loads layers.

-

How to use :

-

RIGHT-CLICK.

-

-

-

Performance :

-

Ensure validation layers and debug callbacks are off for performance runs. Use pipeline cache objects to avoid repeated pipeline creation cost.

-

I notice how each 'push', 'descriptor set bind', 'vertex bind', 'indices bind' and 'draw' were a lot slower with validations on.

-

Message Callback

-

The validation layers will print debug messages to the standard output by default, but we can also handle them ourselves by providing an explicit callback in our program.

-

This will also allow you to decide which kind of messages you would like to see.

-

messageSeverity -

messageType -

pfnUserCallback-

messageSeverity-

DEBUG_UTILS_MESSAGE_SEVERITY_VERBOSE_EXT-

Diagnostic message

-

-

DEBUG_UTILS_MESSAGE_SEVERITY_INFO_EXT-

Informational message like the creation of a resource

-

-

DEBUG_UTILS_MESSAGE_SEVERITY_WARNING_EXT-

Message about behavior that is not necessarily an error, but very likely a bug in your application

-

-

DEBUG_UTILS_MESSAGE_SEVERITY_ERROR_EXT-

Message about behavior that is invalid and may cause crashes.

-

-

-

messageType-

DEBUG_UTILS_MESSAGE_TYPE_GENERAL_EXT-

Some event has happened that is unrelated to the specification or performance

-

-

DEBUG_UTILS_MESSAGE_TYPE_VALIDATION_EXT-

Something has happened that violates the specification or indicates a possible mistake

-

-

DEBUG_UTILS_MESSAGE_TYPE_PERFORMANCE_EXT-

Potential non-optimal use of Vulkan

-

-

-

pCallbackData-

Refers to a

VkDebugUtilsMessengerCallbackDataEXTstruct containing the details of the message itself, with the most important members being: -

pMessage-

The debug message as a null-terminated string

-

-

pObjects-

Array of Vulkan object handles related to the message

-

-

objectCount-

Number of objects in the array

-

-

-

pUserData-

Contains a pointer specified during the setup of the callback and allows you to pass your own data to it.

-

-

Debug Utils (

VK_EXT_debug_utils

)

must(

vk.SetDebugUtilsObjectNameEXT(

dev,

&vk.DebugUtilsObjectNameInfoEXT {

sType = .DEBUG_UTILS_OBJECT_NAME_INFO_EXT,

objectType = obj,

objectHandle = handle,

pObjectName = strings.clone_to_cstring(name, context.temp_allocator),

},

),

)

Window / Surface / GLFW

Window

-

The Vulkan API itself is completely platform-agnostic, which is why we need to use the standardized WSI (Window System Interface) extension to interact with the window manager.

-

Windows can be created with the native platform APIs or libraries like GLFW and SDL .

-

Some platforms allow you to render directly to a display without interacting with any window manager through the

KHR_displayandKHR_display_swapchainextensions. -

These allow you to create a surface that represents the entire screen and could be used to implement your own window manager, for example.

GLFW

-

The very first call in

initWindowshould beglfwInit(), which initializes the GLFW library. Because GLFW was originally designed to create an OpenGL context, we need to tell it to not create an OpenGL context with a later call: -

Because handling resized windows takes special care that we’ll look into later, disable it for now with another window hint call:

glfwWindowHint(GLFW_CLIENT_API, GLFW_NO_API);

glfwWindowHint(GLFW_RESIZABLE, GLFW_FALSE);

-

All that’s left now is creating the actual window. Add a

GLFWwindow* window;private class member to store a reference to it and initialize the window with:

window = glfwCreateWindow(WIDTH, HEIGHT, "Vulkan", nullptr, nullptr);

-

The first three parameters specify the width, height and title of the window. The fourth parameter allows you to optionally specify a monitor to open the window on, and the last parameter is only relevant to OpenGL.

-

Init:

void initWindow() {

glfwInit();

glfwWindowHint(GLFW_CLIENT_API, GLFW_NO_API);

glfwWindowHint(GLFW_RESIZABLE, GLFW_FALSE);

window = glfwCreateWindow(WIDTH, HEIGHT, "Vulkan", nullptr, nullptr);

}

-

Main loop:

void mainLoop() {

while (!glfwWindowShouldClose(window)) {

glfwPollEvents();

}

}

-

Destroy:

void cleanup() {

glfwDestroyWindow(window);

glfwTerminate();

}

-

Blocking the Thread :

Surface

-

A

VkSurfaceKHRis an opaque handle representing a platform-specific presentation target (for example, a window on Windows, an X11 window on Linux, or a UIView on iOS). It is created directly from the Vulkan instance together with a native window handle. Conceptually, a surface is:-

Instance-level: it lives above any physical or logical device.

-

Window abstraction: it wraps the OS window or drawable so that Vulkan knows where to submit images for display.

-

Device-agnostic: you can create a surface before choosing which GPU you will use.

-

-

Once created, the surface is used by a chosen physical device to query presentation support, formats and capabilities, and then by the logical device to build a Swapchain.

-

A surface itself is not intrinsically tied to any particular physical or logical device, because:

-

Creation: you call

vkCreateSurfaceKHR(instance, …)without involving aVkPhysicalDeviceorVkDevicehandle. -

Lifetime: it exists even before you pick or create a device, and you destroy it with

vkDestroySurfaceKHR(instance, surface, …).

-

-

Lifetime :

-

The surface is tied to the GLFW window's lifecycle.

-

It does not change when the window is resized, minimized, or restored.

-

The same surface handle remains valid until you destroy it (e.g., when closing the window).

-

-

"Window surfaces are part of the larger topic of render targets and presentation".

Extensions

-

To establish the connection between Vulkan and the window system to present results to the screen, we need to use the WSI (Window System Integration) extensions.

-

The

KHR_surfaceexposes aVkSurfaceKHRobject that represents an abstract type of surface to present rendered images to. -

The surface in our program will be backed by the window that we’ve already opened with GLFW.

-

The

KHR_surfaceextension is an instance level extension, and we’ve actually already enabled it, because it’s included in the list returned byglfwGetRequiredInstanceExtensions. The list also includes some other WSI extensions that we’ll use in the next couple of chapters. -

The window surface needs to be created right after the instance creation, because it can actually influence the physical device selection.

-

It should also be noted that window surfaces are an entirely optional component in Vulkan if you just need off-screen rendering.

-

Vulkan allows you to do that without hacks like creating an invisible window (necessary for OpenGL).

-

-

Vulkan also allows you to remotely render from a non-presenting GPU or remotely over the internet, or run compute acceleration for AI without a render or presentation target.

-

Although the

VkSurfaceKHRobject and its usage is platform-agnostic, its creation isn’t because it depends on window system details. For example, it needs theHWNDandHMODULEhandles on Windows. Therefore, there is a platform-specific addition to the extension, which on Windows is calledKHR_win32_surfaceand is also automatically included in the list fromglfwGetRequiredInstanceExtensions. -

GLFW actually has

glfwCreateWindowSurfacethat handles the platform differences for us.

Blocking the thread

-

A callback

glfw.SetWindowRefreshCallbackallows the swapchain to be recreated while resizing.-

See [[#Swapchain Recreation]].

-

Physical Device / Logical Device

Physical Device

-

VkPhysicalDevice -

A GPU. Used to query physical GPU details, like features, capabilities, memory size, etc.

Device Level Extensions

Queue Families

-

Most operations performed with Vulkan, like draw commands and memory operations, are asynchronously executed by submitting them to a

VkQueue. -

Queues are allocated from queue families, where each queue family supports a specific set of operations in its queues.

-

For example, there could be separate queue families for graphics, compute and memory transfer operations.

-

-

The availability of queue families could also be used as a distinguishing factor in physical device selection.

-

It is possible for a device with Vulkan support to not offer any graphics functionality; however, all graphics cards with Vulkan support today will generally support all queue operations that we’re interested in.

-

-

We need to check which queue families are supported by the device and which one of these supports the commands that we want to use.

Presentation support

-

Although the Vulkan implementation may support window system integration, that does not mean that every device in the system supports it. Therefore, we need to extend

createLogicalDeviceto ensure that a device can present images to the surface we created. -

Since the presentation is a queue-specific feature, the problem is actually about finding a queue family that supports presenting to the surface we created.

-

It’s actually possible that the queue families supporting drawing commands and the queue families supporting presentation do not overlap.

-

It’s very likely that these end up being the same queue family after all, but throughout the program we will treat them as if they were separate queues for a uniform approach.

-

Nevertheless, you could add logic to explicitly prefer a physical device that supports drawing and presentation in the same queue for improved performance.

-

-

Therefore, we have to take into account that there could be a distinct presentation queue.

-

We’ll look for a queue family that has the capability of presenting to our window surface. The function to check for that is

vkGetPhysicalDeviceSurfaceSupportKHR, which takes the physical device, queue family index and surface as parameters. -

It should be noted that the availability of a presentation queue, as we checked in the previous chapter, implies that the Swapchain extension must be supported. However, the extension does have to be explicitly enabled.

-

Not all graphics cards are capable of presenting images directly to a screen for various reasons, for example, because they are designed for servers and don’t have any display outputs. Secondly, since image presentation is heavily tied into the window system and the surfaces associated with windows, it is not part of the Vulkan core. You have to enable the

KHR_swapchaindevice extension after querying for its support.

Surface Capabilities

-

The extents can change when resizing and you should requery the surface properties. Note that if it says the current extent is

{UINT32_MAX, UINT32_MAX}(happens on some platforms) then you'll need to ask the windowing system for an appropriate new size (but I don't know GLFW well enough to know ifGetFramebufferSizeis the right function for that purpose)

Logical Device

-

VkDevice -

The “logical” GPU context that you actually execute things on.

-

Where you describe more specifically which VkPhysicalDeviceFeatures you will be using, like multi viewport rendering and 64-bit floats.

-

You also need to specify which queue families you would like to use.

Queues

-

Queues .

-

VkQueue-

Execution “port” for commands.

-

GPUs will have a set of queues with different properties.

-

Some allow only graphics commands, others only allow memory commands, etc.

-

-

Command buffers are executed by submitting them into a queue, which will copy the rendering commands onto the GPU for execution.

-

-

The queues are automatically created along with the logical device, but we don’t have a handle to interface with them yet.

-

Device queues are implicitly cleaned up when the device is destroyed.

-

We can use the

vkGetDeviceQueuefunction to retrieve queue handles for each queue family. The parameters are the logical device, queue family, queue index and a pointer to the variable to store the queue handle in. Because we’re only creating a single queue from this family, we’ll simply use index0. -

Vulkan Guide:

-

It is common to see engines using 3 queue families:

-

One for drawing the frame, other for async compute, and other for data transfer.

-

-

In this tutorial, we use a single queue that will run all our commands for simplicity.

-

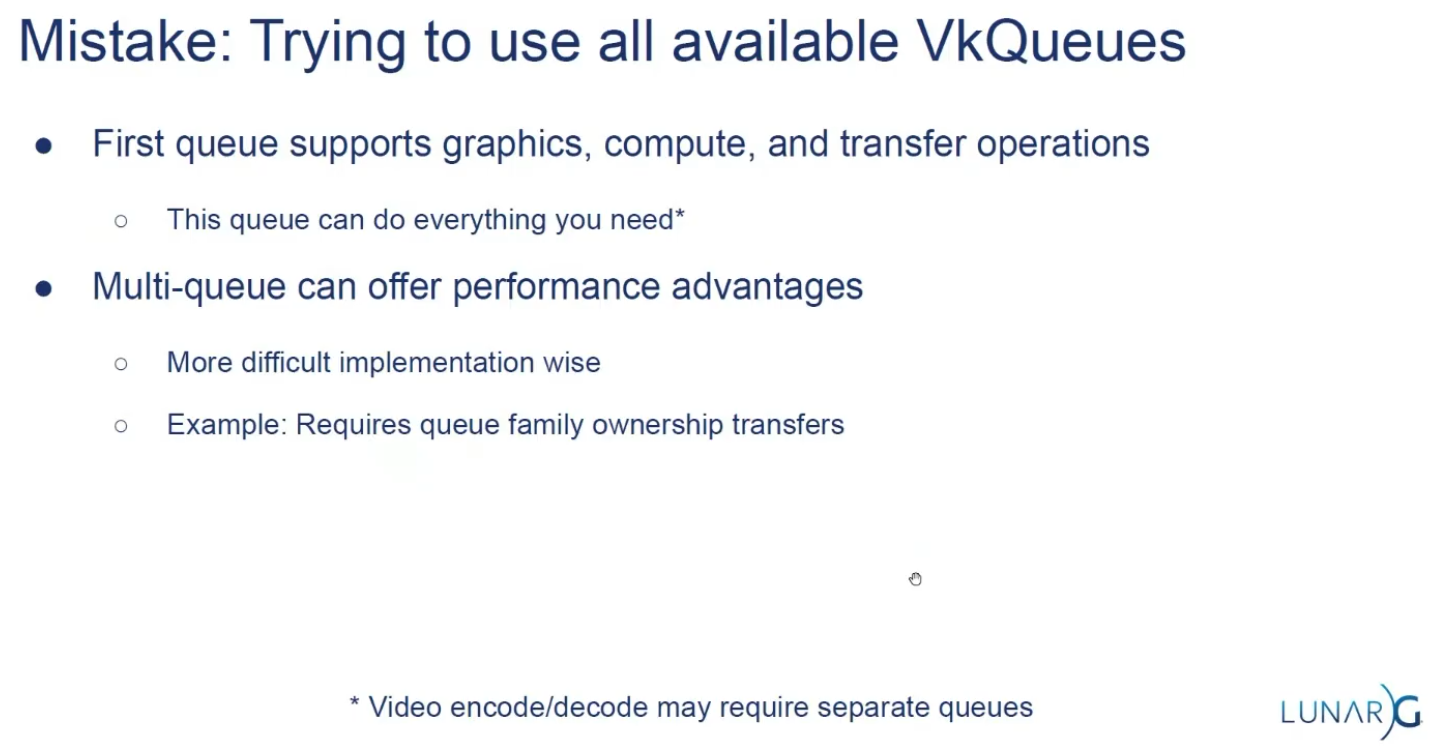

Multi-queue

-

.

.

-

Some hardware only has one queue.

Render Loop

-

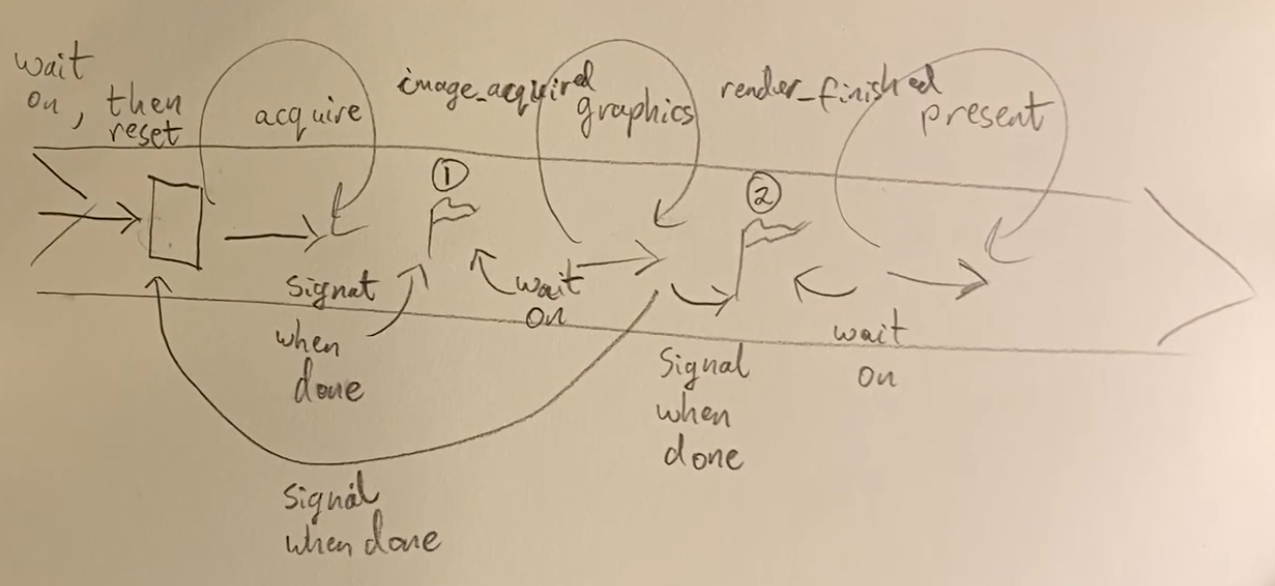

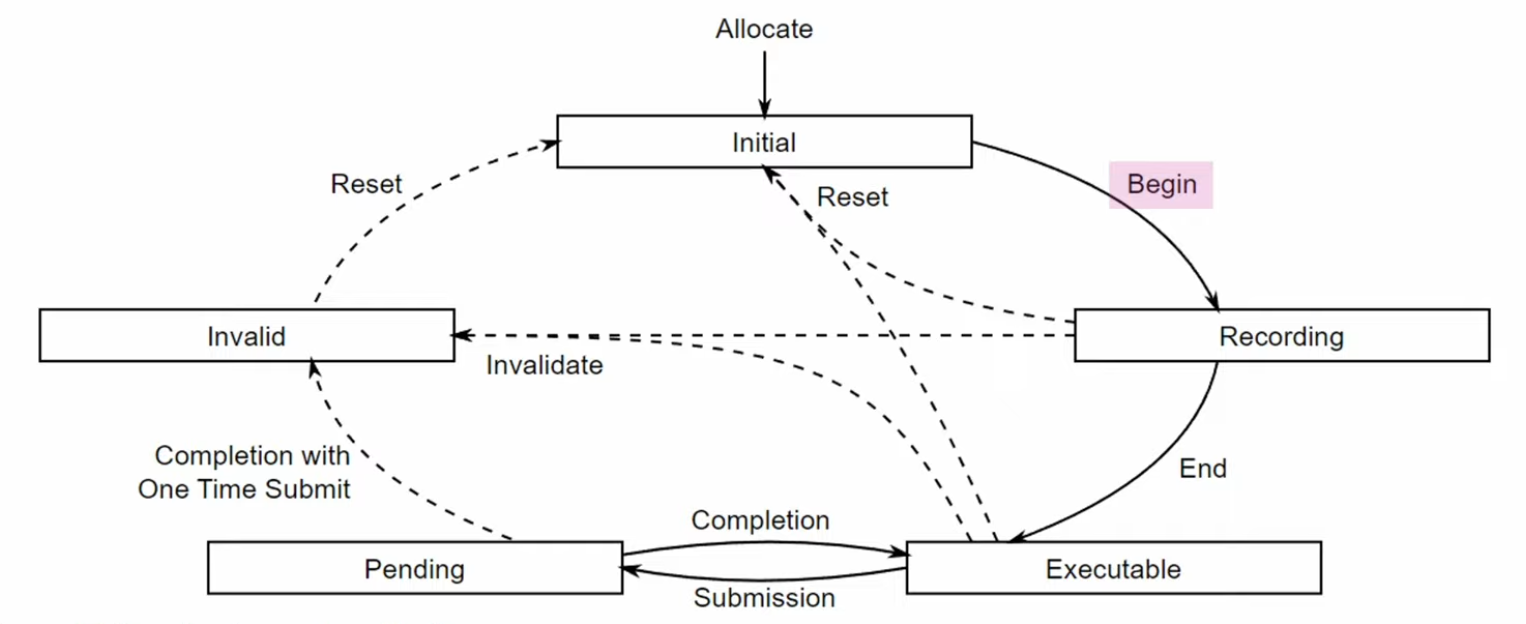

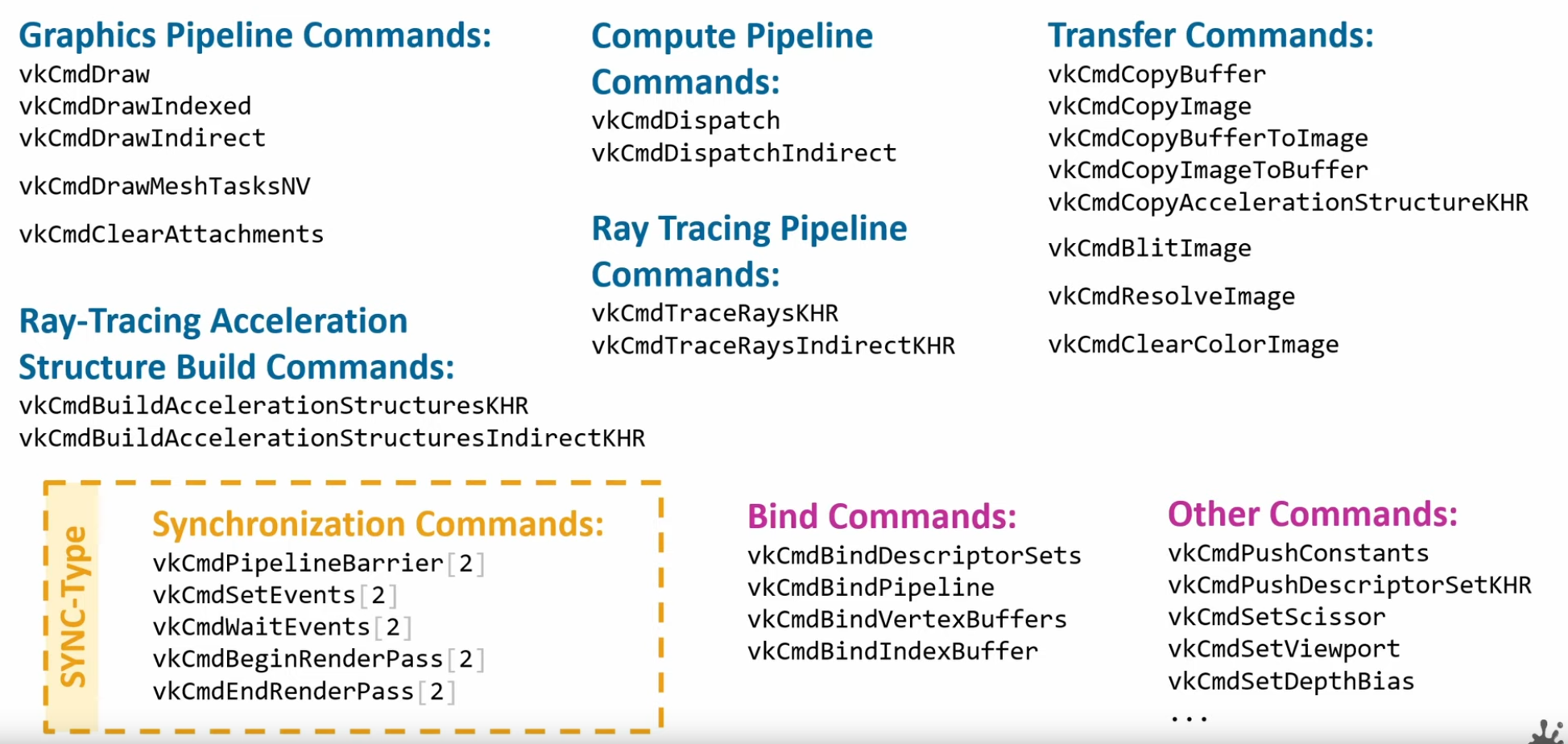

Now that everything is ready for rendering, you first ask the

VkSwapchainKHRfor an image to render to. Then you allocate aVkCommandBufferfrom aVkCommandBufferPoolor reuse an already allocated command buffer that has finished execution, and “start” the command buffer, which allows you to write commands into it. -

Next, you begin rendering by using Dynamic Rendering.

-

Then create a loop where you bind a

VkPipeline, bind someVkDescriptorSetresources (for the shader parameters), bind the vertex buffers, and then execute a draw call. -

If there is nothing more to render, you end the

VkCommandBuffer. Finally, you submit the command buffer into the queue for rendering. This will begin execution of the commands in the command buffer on the gpu. If you want to display the result of the rendering, you “present” the image you have rendered to to the screen. Because the execution may not have finished yet, you use a semaphore to make the presentation of the image to the screen wait until rendering is finished. -

At a high level, rendering a frame in Vulkan consists of a common set of steps:

-

Wait for the previous frame to finish

-

Acquire an image from the Swapchain

-

Record a command buffer which draws the scene onto that image

-

Re-recording every frame doesn't really take up performance.

-

-

Submit the recorded command buffer

-

Takes performance.

-

-

Present the Swapchain image

-

Puts it up on the screen.

-

-

Swapchain

-

Vulkan does not have the concept of a "default framebuffer," hence it requires an infrastructure that will own the buffers we will render to before we visualize them on the screen.

-

This infrastructure is known as the swapchain and must be created explicitly in Vulkan.

-

The Swapchain is essentially a queue of images that are waiting to be presented to the screen.

-

Our application will acquire such an image to draw to it, and then return it to the queue.

-

The conditions for presenting an image from the queue depend on how the Swapchain is set up.

-

The general purpose of the Swapchain is to synchronize the presentation of images with the refresh rate of the screen.

-

This is important to make sure that only complete images are shown.

-

-

Every time we want to draw a frame, we have to ask the Swapchain to provide us with an image to render to. When we’ve finished drawing a frame, the image is returned to the Swapchain for it to be presented to the screen at some point.

-

"Is a collection of render targets".

-

Render Targets is not a well-defined term.

-

-

The number of render targets and conditions for presenting finished images to the screen depends on the present mode.

-

VkSwapchainKHR-

Holds the images for the screen.

-

It allows you to render things into a visible window.

-

The

KHRsuffix shows that it comes from an extension, which in this case isKHR_swapchain.

-

-

-

Good video.

-

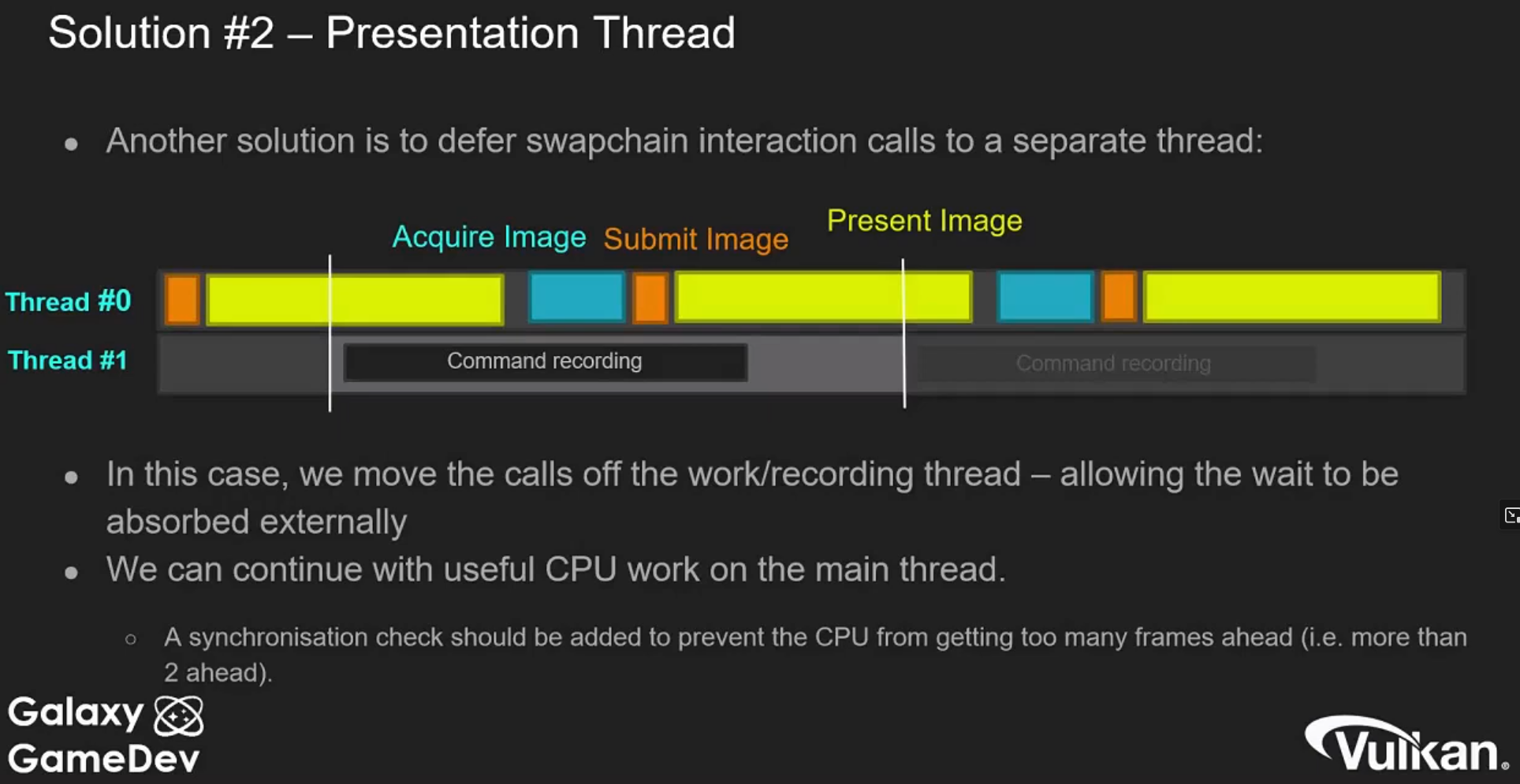

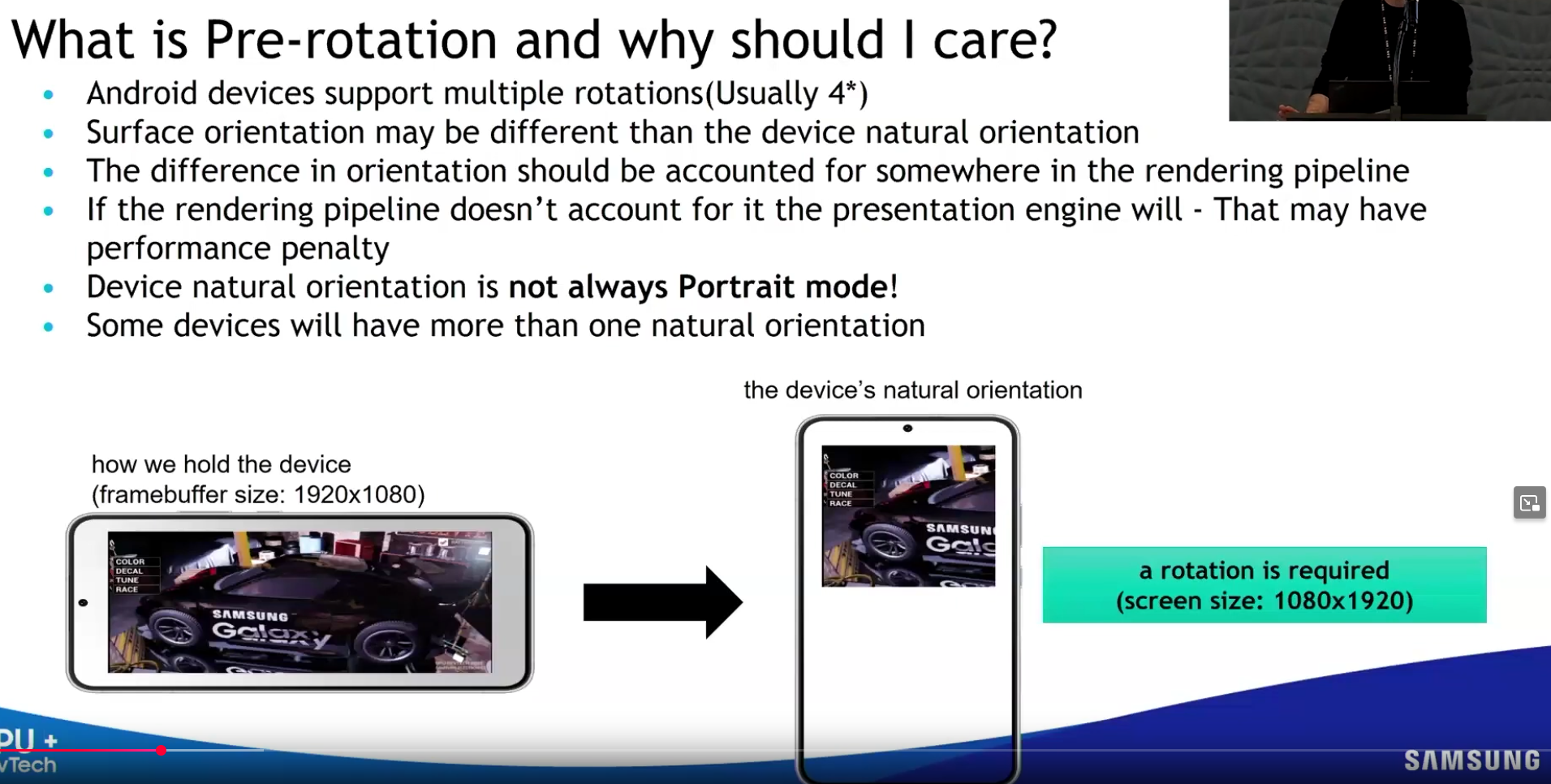

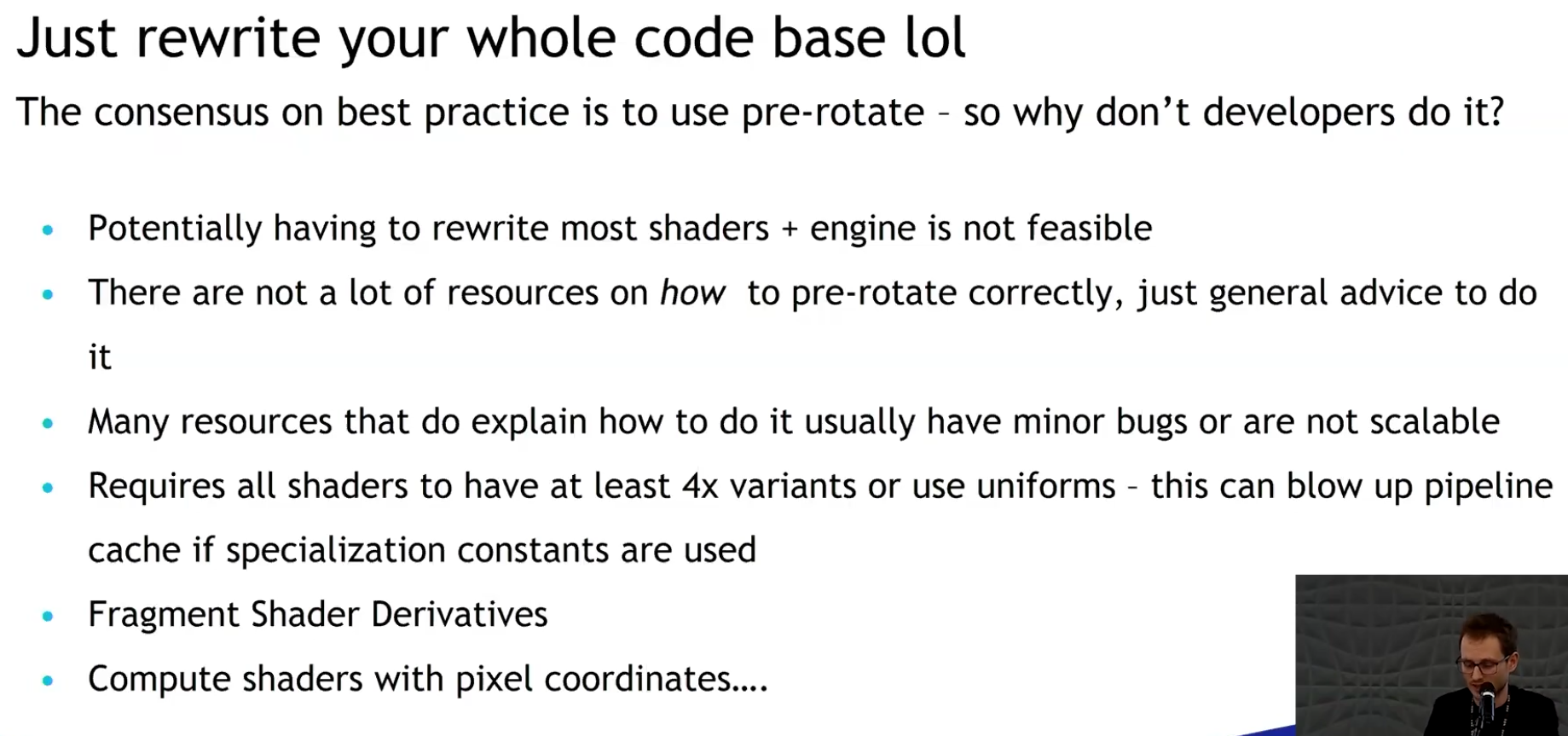

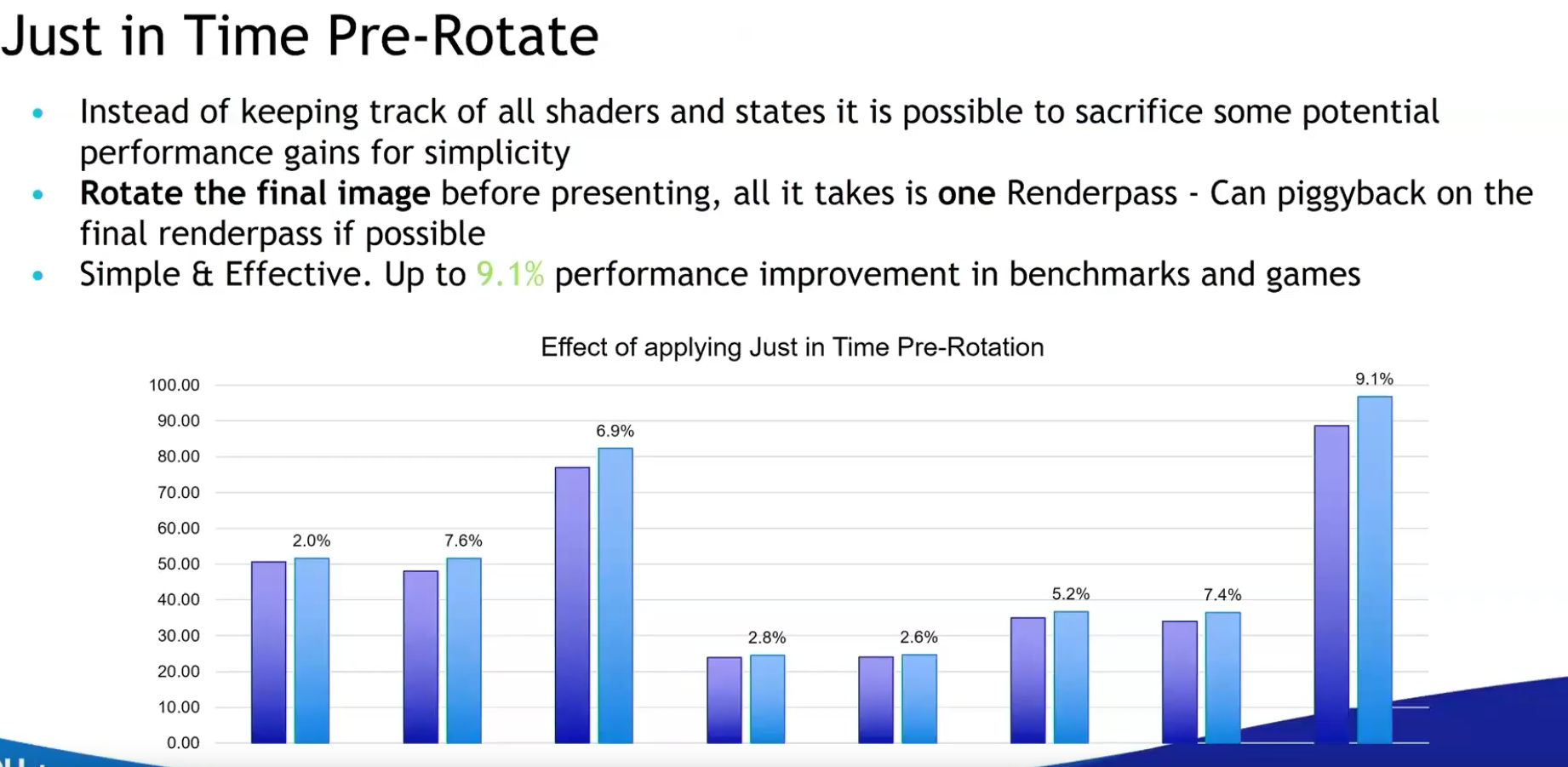

Pre-rotate on mobile.

-

When to recreate, recreation problems, recreation strategies, maintenance.

-

Present modes.

-

-

Support :

-

There are basically three kinds of properties we need to check:

-

Basic surface capabilities (min/max number of images in Swapchain, min/max width and height of images)

-

Surface formats (pixel format, color space)

-

Available presentation modes

-

-

It is important that we only try to query for Swapchain support after verifying that the extension is available.

-

Swapchain Creation

-

-

surface-

Is the surface onto which the swapchain will present images. If the creation succeeds, the swapchain becomes associated with

surface.

-

-

minImageCount-

we also have to decide how many images we would like to have in the Swapchain. However, simply sticking to the minimum means that we may sometimes have to wait on the driver to complete internal operations before we can acquire another image to render to. Therefore, it is recommended to request at least one more image than the minimum:

uint32_t imageCount = surfaceCapabilities.minImageCount + 1;-

We should also make sure to not exceed the maximum number of images while doing this, where

0is a special value that means that there is no maximum

if (surfaceCapabilities.maxImageCount > 0 && imageCount > surfaceCapabilities.maxImageCount) { imageCount = surfaceCapabilities.maxImageCount; } -

-

imageFormat-

For the color space we’ll use SRGB if it is available, because it results in more accurate perceived colors . It is also pretty much the standard color space for images, like the textures we’ll use later on.

-

Because of that we should also use an SRGB color format, of which one of the most common ones is

FORMAT_B8G8R8A8_SRGB.

-

-

imageColorSpace-

Is a VkColorSpaceKHR value specifying the way the swapchain interprets image data.

-

-

imageExtent-

Is the size (in pixels) of the swapchain image(s).

-

The swap extent is the resolution of the Swapchain images. It’s almost always exactly equal to the resolution of the window that we’re drawing to in pixels .

-

The range of the possible resolutions is defined in the

VkSurfaceCapabilitiesKHRstructure. -

On some platforms, it is normal that

maxImageExtentmay become(0, 0), for example when the window is minimized. In such a case, it is not possible to create a swapchain due to the Valid Usage requirements , unless scaling is selected through VkSwapchainPresentScalingCreateInfoKHR , if supported . -

We’ll pick the resolution that best matches the window within the

minImageExtentandmaxImageExtentbounds. But we must specify the resolution in the correct unit. -

GLFW uses two units when measuring sizes: pixels and screen coordinates . For example, the resolution

{WIDTH, HEIGHT}that we specified earlier when creating the window is measured in screen coordinates. But Vulkan works with pixels, so the Swapchain extent must be specified in pixels as well. -

Unfortunately, if you are using a high DPI display (like Apple’s Retina display), screen coordinates don’t correspond to pixels. Instead, due to the higher pixel density, the resolution of the window in pixel will be larger than the resolution in screen coordinates. So if Vulkan doesn’t fix the swap extent for us, we can’t just use the original

{WIDTH, HEIGHT}. Instead, we must useglfwGetFramebufferSizeto query the resolution of the window in pixel before matching it against the minimum and maximum image extent. -

The surface capabilities changes every time the window resizes, and it's only used for creating the Swapchain, so it doesn't make sense to cache.

-

-

imageUsage -

imageSharingMode(Handling multiple queues):-

We need to specify how to handle Swapchain images that will be used across multiple queue families. That will be the case in our application if the graphics queue family is different from the presentation queue. We’ll be drawing on the images in the Swapchain from the graphics queue and then submitting them on the presentation queue. There are two ways to handle images that are accessed from multiple queues:

-

SHARING_MODE_EXCLUSIVE:-

An image is owned by one queue family at a time, and ownership must be explicitly transferred before using it in another queue family.

-

This option offers the best performance.

-

-

SHARING_MODE_CONCURRENT:-

Images can be used across multiple queue families without explicit ownership transfers.

-

Concurrent mode requires you to specify in advance between which queue families ownership will be shared using the

queueFamilyIndexCountandpQueueFamilyIndicesparameters.

-

-

-

If the queue families differ, then we’ll be using the concurrent mode in this tutorial to avoid having to do the ownership chapters, because these involve some concepts that are better explained at a later time.

-

If the graphics queue family and presentation queue family are the same, which will be the case on most hardware, then we should stick to exclusive mode. Concurrent mode requires you to specify at least two distinct queue families.

-

-

queueFamilyIndexCount-

Is the number of queue families having access to the image(s) of the swapchain when

imageSharingModeisSHARING_MODE_CONCURRENT.

-

-

pQueueFamilyIndices-

Is a pointer to an array of queue family indices having access to the images(s) of the swapchain when

imageSharingModeisSHARING_MODE_CONCURRENT.

-

-

imageArrayLayers-

Is the number of views in a multiview/stereo surface. For non-stereoscopic-3D applications, this value is 1.

-

-

presentMode -

preTransform-

We can specify that a certain transform should be applied to images in the Swapchain if it is supported (

supportedTransformsincapabilities), like a 90-degree clockwise rotation or horizontal flip. To specify that you do not want any transformation, simply specify the current transformation. -

IDENTITY-

This would not be optimal on devices that support rotation and will lead to measurable performance loss.

-

It is strongly recommended that

surface_properties.currentTransformbe used instead. However, the application is required to handlepreTransformelsewhere accordingly.

-

-

-

compositeAlpha-

Specifies if the alpha channel should be used for blending with other windows in the window system.

-

You’ll almost always want to simply ignore the alpha channel, hence

OPAQUE.

-

-

clipped-

If set to

TRUE, then that means that we don’t care about the color of pixels that are obscured, for example, because another window is in front of them. -

Unless you really need to be able to read these pixels back and get predictable results, you’ll get the best performance by enabling clipping.

-

-

oldSwapChain-

Can be an existing non-retired swapchain currently associated with

surface, orNULL_HANDLE. -

If the

oldSwapchainisNULL_HANDLE:-

And if the native window referred to by

pCreateInfo->surfaceis already associated with a Vulkan swapchain,ERROR_NATIVE_WINDOW_IN_USEmust be returned.

-

-

If the

oldSwapchainis valid:-

This may aid in the resource reuse, and also allows the application to still present any images that are already acquired from it.

-

And the

oldSwapchainhas exclusive full-screen access, that access is released frompCreateInfo->oldSwapchain. If the command succeeds in this case, the newly created swapchain will automatically acquire exclusive full-screen access frompCreateInfo->oldSwapchain. -

And there are outstanding calls to

vkWaitForPresent2KHR, thenvkCreateSwapchainKHRmay block until those calls complete. -

Any images from

oldSwapchainthat are not acquired by the application may be freed by the implementation, upon callingvkCreateSwapchainKHR, which may occur even if creation of the new swapchain fails. -

The

oldSwapchainwill be retired upon callingvkCreateSwapchainKHR, even if creation of the new swapchain fails.-

After

oldSwapchainis retired, the application can pass tovkQueuePresentKHRany images it had already acquired fromoldSwapchain.-

An application may present an image from the old swapchain before an image from the new swapchain is ready to be presented.

-

As usual,

vkQueuePresentKHRmay fail ifoldSwapchainhas entered a state that causesERROR_OUT_OF_DATEto be returned.

-

-

-

The application can continue to use a shared presentable image obtained from

oldSwapchainuntil a presentable image is acquired from the new swapchain, as long as it has not entered a state that causes it to returnERROR_OUT_OF_DATE. -

The application can destroy

oldSwapchainto free all memory associated witholdSwapchain.

-

-

Regardless if the

oldSwapchainis valid or not:-

The new swapchain is created in the non-retired state.

-

-

-

flags-

Is a bitmask of

VkSwapchainCreateFlagBitsKHRindicating parameters of the swapchain creation. -

SWAPCHAIN_CREATE_DEFERRED_MEMORY_ALLOCATION_EXT-

When

EXT_swapchain_maintenance1is available, you can optionally amortize the cost of swapchain image allocations over multiple frames. -

When this is used, image views cannot be created until the first time the image is acquired.

-

The idea is that normally the images and image views are acquired when a Swapchain recreation happens, but if this flag is enabled it is necessary to acquire them after

result == SUCCESS || result == SUBOPTIMAL_KHRas the result ofvkAcquireNextImageKHR.

-

-

-

-

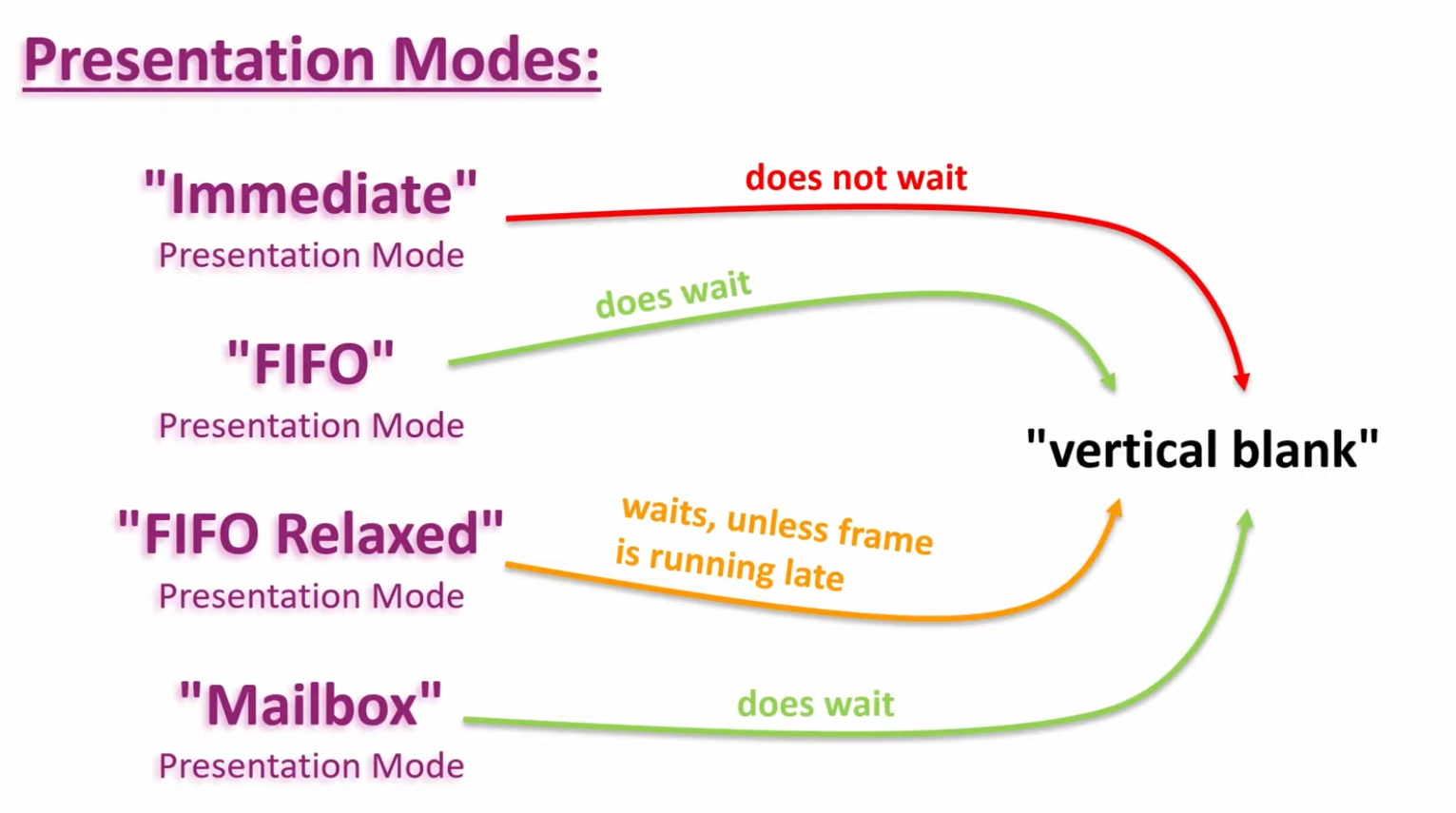

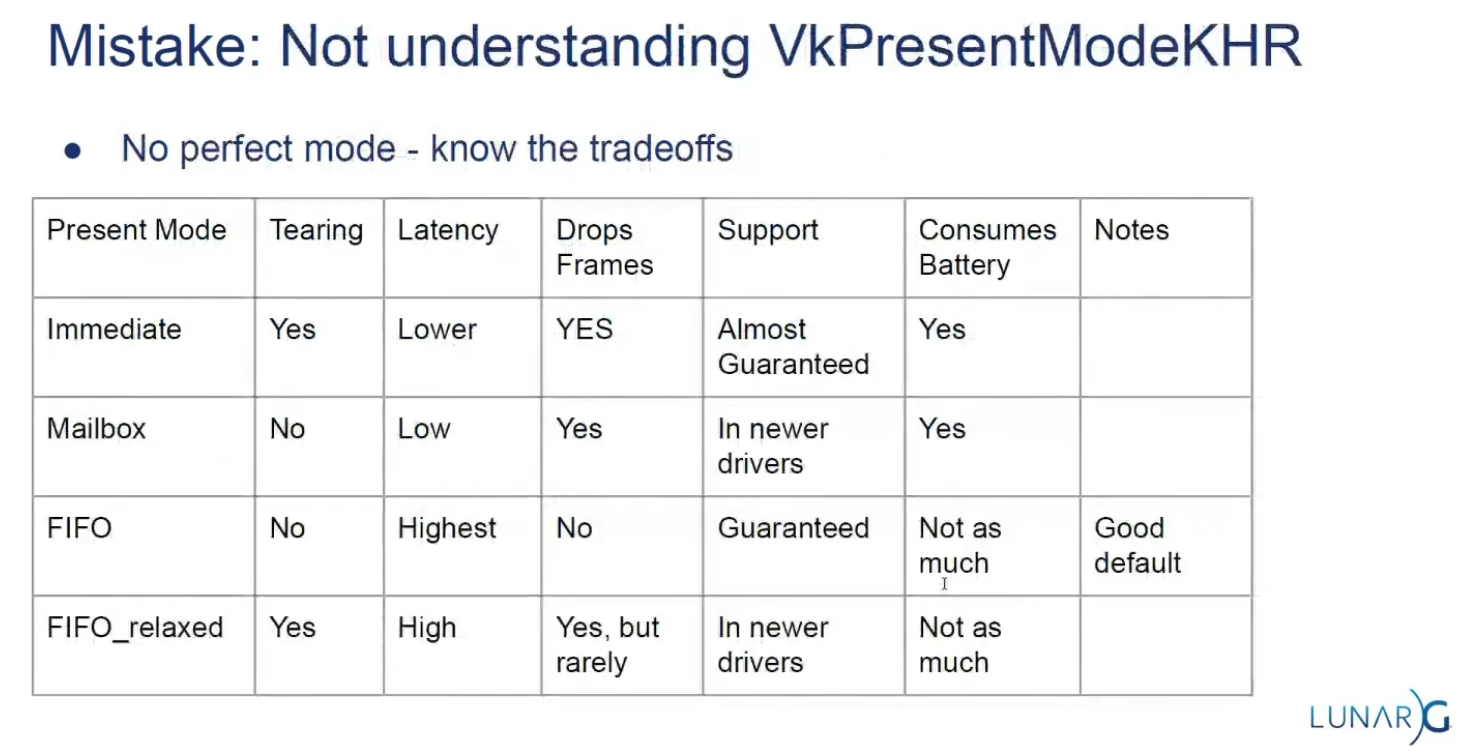

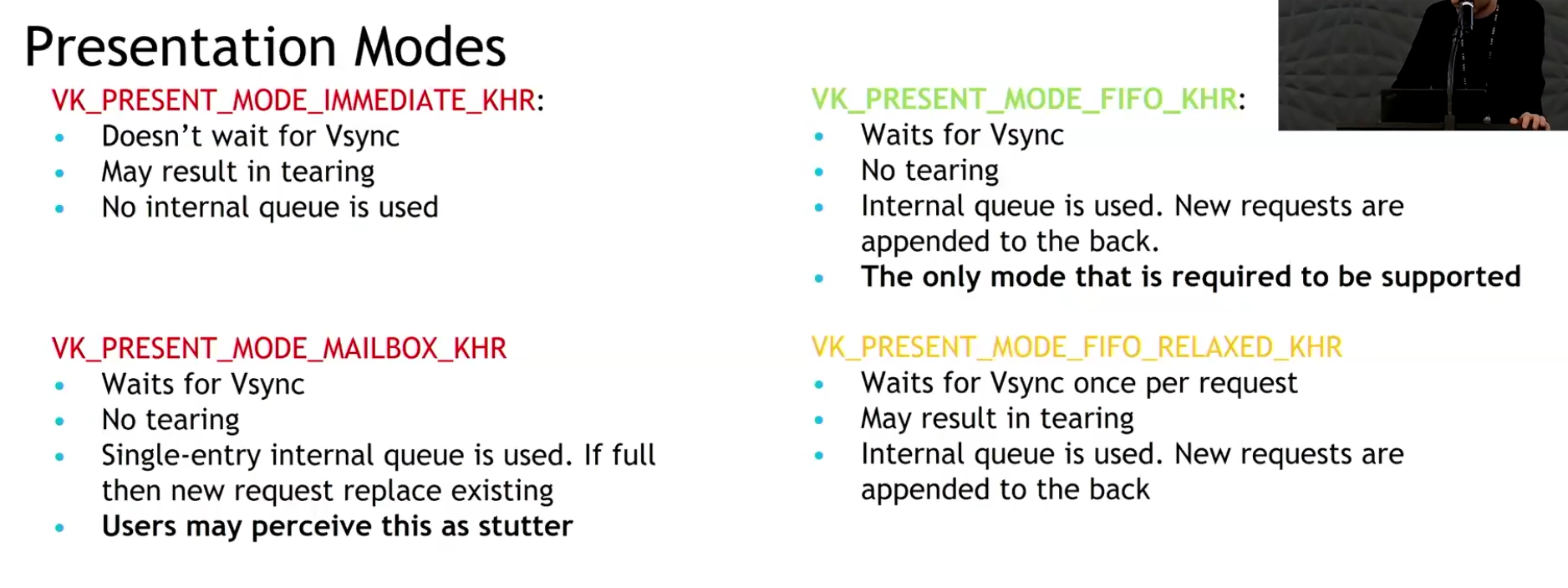

Present Modes

-

Common present modes are double buffering (vsync) and triple buffering.

-

The presentation mode is arguably the most important setting for the Swapchain, because it represents the actual conditions for showing images to the screen. There are four possible modes available in Vulkan:

-

PRESENT_MODE_IMMEDIATE_KHR-

Images submitted by your application are transferred to the screen right away, which may result in tearing.

-

-

PRESENT_MODE_FIFO_KHR-

The Swapchain is a queue where the display takes an image from the front of the queue when the display is refreshed, and the program inserts rendered images at the back of the queue. If the queue is full, then the program has to wait. This is most similar to vertical sync as found in modern games. The moment that the display is refreshed is known as "vertical blank".

-

-

PRESENT_MODE_FIFO_RELAXED_KHR-

This mode only differs from the previous one if the application is late and the queue was empty at the last vertical blank. Instead of waiting for the next vertical blank, the image is transferred right away when it finally arrives. This may result in visible tearing.

-

-

PRESENT_MODE_MAILBOX_KHR-

This is another variation of the second mode. Instead of blocking the application when the queue is full, the images that are already queued are simply replaced with the newer ones. This mode can be used to render frames as fast as possible while still avoiding tearing, resulting in fewer latency issues than standard vertical sync. This is commonly known as "triple buffering," although the existence of three buffers alone does not necessarily mean that the framerate is unlocked.

-

-

-

Only the

PRESENT_MODE_FIFO_KHRmode is guaranteed to be available, so we’ll again have to write a function that looks for the best mode that is available: -

.

.

-

Options :

-

I think that

PRESENT_MODE_MAILBOX_KHRis a very nice trade-off if energy usage is not a concern. It allows us to avoid tearing while still maintaining fairly low latency by rendering new images that are as up to date as possible right until the vertical blank. -

On mobile devices, where energy usage is more important, you will probably want to use

PRESENT_MODE_FIFO_KHRinstead. -

.

.

-

.

.

-

Slide from the Samsung talk on (2025-02-25).

-

It recommends FIFO and says that mailbox is not as good as it seems because it induces a lot of stutter.

-

-

Drawing directly to the Swapchain vs Blitting to the Swapchain

-

Source .

-

Drawing directly into the swapchain :

-

Is fine for many projects, and it can even be optimal in some cases such as phones.

-

Restrictions :

-

Their resolution is fixed to whatever your window size is.

-

If you want to have higher or lower resolution, and then do some scaling logic, you need to draw into a different image.

-

Swapchain image size (imageExtent / surface extent) is part of swapchain creation and is tied to the surface. If you want an internal render at a different resolution (supersampling, dynamic resolution, lower-res upscaling), you create an offscreen image/render-target at the desired size and then copy/blit/resolve/tone-map into the swapchain image for presentation. The spec and WSI notes treat imageExtent as the surface-presentable size.

-

-

The formats of the image used in the swapchain are not guaranteed.

-

Different OS, drivers, and windowing modes can have different optimal swapchain formats.

-

The WSI model exposes the surface’s supported formats to the application via

vkGetPhysicalDeviceSurfaceFormatsKHR(or equivalent WSI queries); the returned list is implementation- and surface-dependent, so you must choose from what the platform/driver exposes. That means formats available for swapchains vary by OS, driver, and surface. -

Vulkan explicitly states this via

VkSurfaceFormatKHRandvkGetPhysicalDeviceSurfaceFormatsKHR. The specification (Section 30.5 "WSI Swapchain", Vulkan 1.3.275) and tutorials emphasize that the application must query and choose from available formats supported by the surface/device combination. Android documentation (Vulkan on Android) and Windows (DXGI_FORMAT) similarly highlight platform-specific format requirements and HDR needs (e.g.,FORMAT_A2B10G10R10_UNORM_PACK32orDXGI_FORMAT_R10G10B10A2_UNORMfor HDR10). This variability makes direct rendering inflexible.

-

-

HDR support needs its own very specific formats.

-

HDR output requires specific color formats and color-space metadata (examples: 10-bit packed UNORM formats or explicit HDR color-space support such as ST2084/Perceptual Quantizer). WSI and sample repos treat HDR as a distinct case (e.g. A2B10G10 formats and HDR color spaces). Support is platform- and driver-dependent.

-

-

Swapchain formats are, for the most part, low precision.

-

Some platforms with High Dynamic Range rendering have higher precision formats, but you will often default to 8 bits per color.

-

So if you want high precision light calculations, systems that would prevent banding, or to be able to go past 1.0 on the normalized color range, you will need a separate image for drawing.

-

HDR/high-dynamic-range lighting typically uses floating-point or extended-range render targets (e.g.

R16G16B16A16_SFLOATor higher) for intermediate lighting accumulation; final tonemapping reduces values into the presentable format. Because presentable swapchain images are often limited (8-bit), the offscreen high-precision image plus a conversion/tonemap pass is the usual pattern.

-

-

Many surfaces expose 8-bit UNORM or sRGB formats (e.g.

B8G8R8A8_UNORM/SRGB) as commonly returned swapchain formats. Higher-precision formats (16-bit float per channel or 10-bit packed) exist and are used for HDR/high-precision pipelines, but they are not guaranteed by every surface/driver. Therefore applications that need high-precision lighting/accumulation commonly render into a 16-bit-float render target and tonemap/convert for presentation. -

Banding artifacts in gradients or low-light scenes are a well-known consequence of limited precision. High-precision rendering (HDR, complex lighting, deferred shading G-Buffers) requires formats like

FORMAT_R16G16B16A16_SFLOAT(RGBA16F) to store values outside the [0.0, 1.0] range and prevent banding. While some swapchains can support HDR formats (e.g., 10:10:10:2), they are less universally available and not the default. Using RGBA16F directly in a swapchain is often unsupported or inefficient for presentation.

-

-

-

-

Drawing to a different image and copying/blitting to the swapchain image :

-

Advantages :

-

Decouples tonemapping from presentation timing

-

Tonemap into an intermediate LDR image that you control. You can finish the tonemap pass earlier and defer the actual transfer/present of the swapchain image to a later point, reducing risk of stalling the present path or blocking on swapchain ownership.

-

-

Avoids writing directly to the swapchain

-

Writing directly into the swapchain can introduce stalls (wait-for-acquire or present-time synchronization). Using an intermediate LDR image lets you do the heavy work off-swapchain and only do a cheap transfer/present step when convenient.

-

-

Enables batching / chaining of postprocesses without touching the swapchain

-

If you need further LDR processing (dithering, temporal AA, UI composite, overlays, readback for screenshots, or additional filters), do those against the intermediate image. This allows composing multiple passes without repeatedly transitioning the swapchain.

-

-

Easier support for multiple outputs or different sizes/formats

-

You can tonemap once to an LDR image and then blit/copy to different-size or different-format targets (screenshots, streaming encoder, secondary displays) without re-running tonemap.

-

-

Allows use of transient/optimized memory for the intermediate

-

The intermediate image can be created as transient (e.g.,

MEMORY_PROPERTY_LAZILY_ALLOCATEDor tiled transient attachment) to reduce memory pressure and bandwidth compared with always keeping a full persistent LDR buffer.

-

-

Better control over final conversion semantics

-

In shader you control quantization, gamma conversion, ordered/temporal dithering, and color-space tagging. After producing the controlled LDR image you can choose the transfer method (exact copy vs scaled blit) that matches target capabilities, improving visual consistency across vendors.

-

-

Improved cross-queue / async workflows

-

You can produce the LDR image on a graphics/compute queue and then perform a transfer on a transfer-only queue (or use a dedicated present queue) with explicit ownership transfers, possibly improving throughput if hardware supports it.

-

-

Facilitates deterministic screenshots / capture

-

Saving an intermediate LDR image for file export is safer (format/bit-depth known) than capturing the swapchain which may have platform-specific transforms applied.

-

-

-

Trade-offs :

-

Extra GPU memory usage

-

You need memory for the intermediate LDR image (unless you use transient attachments), which increases resident memory footprint.

-

-

Extra GPU bandwidth and a copy step

-

Creating an LDR image then copying/blitting to the swapchain costs memory bandwidth and GPU cycles. This can increase frame time if the transfer is on the critical path.

-

-

More layout transitions and synchronization complexity

-

You must manage transitions and possibly ownership transfers (if different queues are used). Incorrect synchronization can cause stalls or correctness bugs.

-

-

Potential increased latency if done poorly

-

If the copy/blit is done synchronously right before present, it can add latency compared with rendering directly to the swapchain; the intended decoupling only helps if scheduling is arranged to avoid the critical path.

-

-

Implementation complexity

-

Managing an extra render target, transient allocation, and copy logic is more code than rendering directly to the swapchain.

-

-

-

Swapchain Recreation

When to recreate

-

If the window surface changed such that the Swapchain is no longer compatible with it.

-

If the window resizes.

-

If the window minimizes.

-

This case is special because it will result in a framebuffer size of

0. -

We can handle by waiting for the framebuffer size to be back to something greater than

0, indicating that the window is no longer minimized.

-

-

If the swapchain image format changed during an application's lifetime, for example, when moving a window from a standard range to a high dynamic range monitor.

Finding out that a recreation is needed

-

The

vkAcquireNextImageKHRandvkQueuePresentKHRfunctions can return the following special values to indicate this.-

ERROR_OUT_OF_DATE_KHR-

The Swapchain has become incompatible with the surface and can no longer be used for rendering. Usually happens after a window resize.

-

-

SUBOPTIMAL_KHR-

The Swapchain can still be used to successfully present to the surface, but the surface properties are no longer matched exactly.

-

You should ALWAYS recreate the swapchain if the result is suboptimal.

-

This result means that it's a "success" but there will be performance penalties.

-

Both

SUCCESSandSUBOPTIMAL_KHRare considered "success" return codes.

-

-

-

If the Swapchain turns out to be out of date when attempting to acquire an image, then it is no longer possible to present to it. Therefore, we should immediately recreate the Swapchain and try again in the next

drawFramecall. -

You could also decide to do that if the Swapchain is suboptimal, but I’ve chosen to proceed anyway in that case because we’ve already acquired an image.

result = presentQueue.presentKHR( presentInfoKHR );

if (result == vk::Result::eErrorOutOfDateKHR || result == vk::Result::eSuboptimalKHR) {

framebufferResized = false;

recreateSwapChain();

} else if (result != vk::Result::eSuccess) {

throw std::runtime_error("failed to present Swapchain image!");

}

currentFrame = (currentFrame + 1) % MAX_FRAMES_IN_FLIGHT;

-

The

vkQueuePresentKHRfunction returns the same values with the same meaning. In this case, we will also recreate the Swapchain if it is suboptimal, because we want the best possible result. -

Finding out explicitly :

-

Although many drivers and platforms trigger

ERROR_OUT_OF_DATE_KHRautomatically after a window resize, it is not guaranteed to happen. -

That’s why we’ll add some extra code to also handle resizes explicitly:

glfw.SetWindowUserPointer(vulkan_context.glfw_window, vulkan_context) glfw.SetFramebufferSizeCallback(vulkan_context.glfw_window, proc "c" (window: glfw.WindowHandle, _, _: i32) {s vulkan_context := cast(^Vulkan_Context)glfw.GetWindowUserPointer(window) vulkan_context.glfw_framebuffer_resized = true }) -

"Usually it's not the best idea to depend on this".

-

Problems with multithreading.

-

You depend on the windowing system to notify changes correctly; this can be really tricky on mobile.

-

-

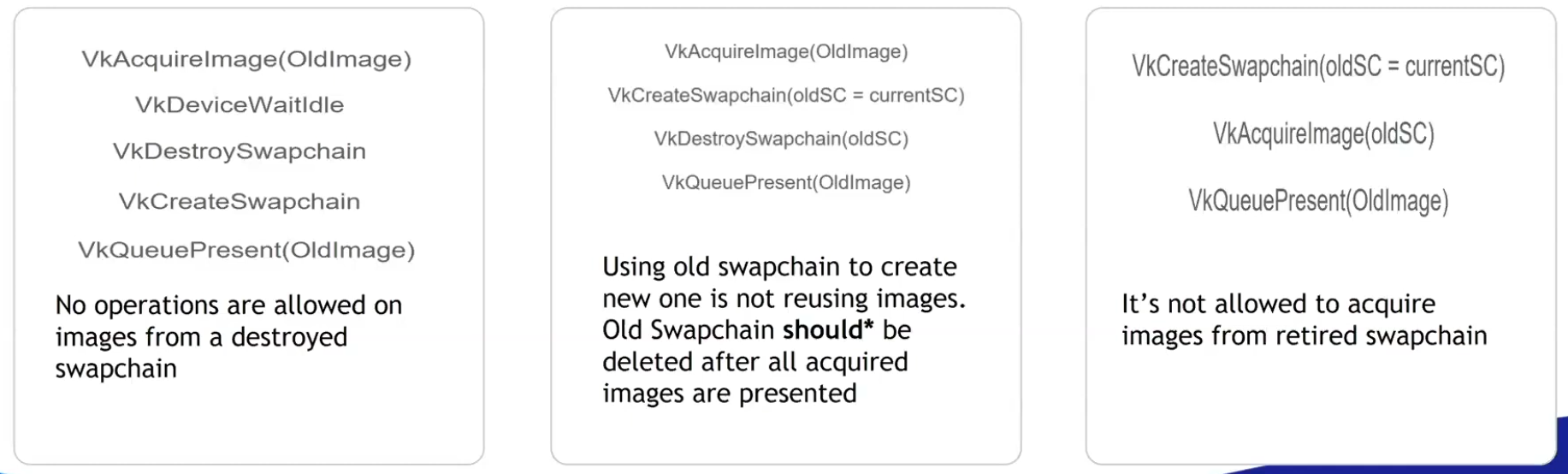

Recreating

void recreateSwapChain() {

device.waitIdle();

cleanupSwapChain();

createSwapChain();

createImageViews();

}

-

Synchronization :

-

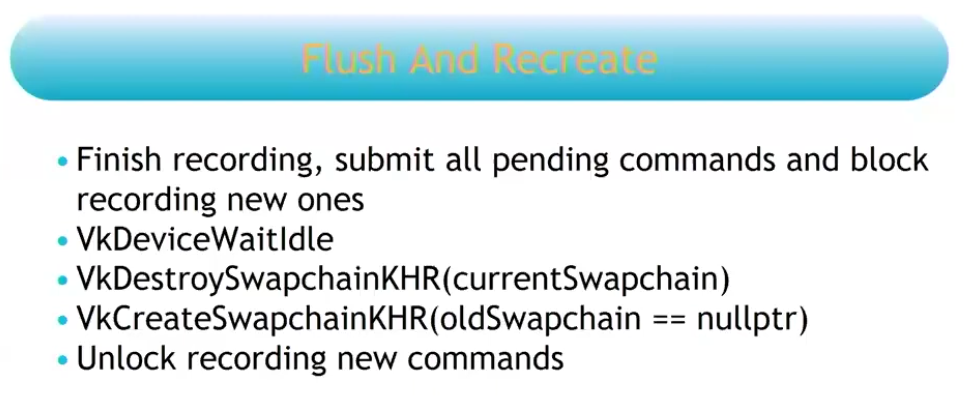

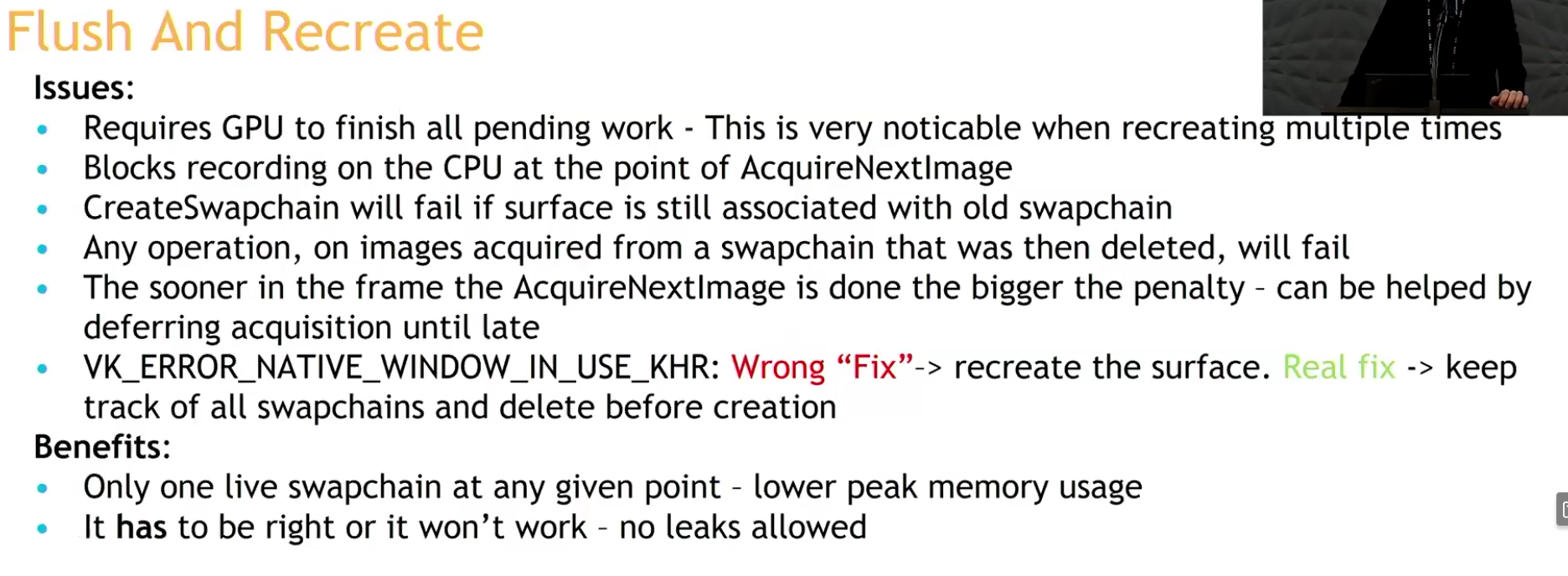

~Flush and Recreate:

-

"We first call

vkDeviceWaitIdle, because just like in the last chapter, we shouldn’t touch resources that may still be in use."-

This is not enough.

-

.

.

-

-

The whole app has to stop and wait for synchronization.

-

.

.

-

.

.

-

-

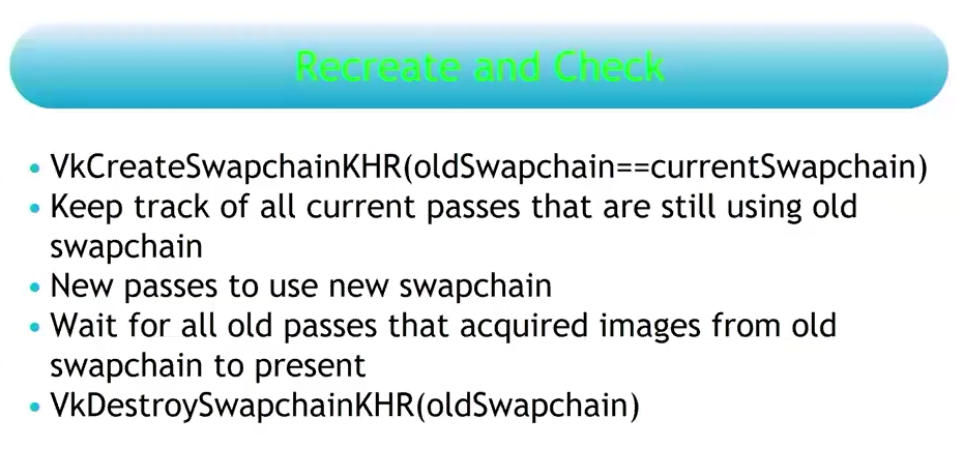

Recreate and check:

-

.

.

-

You do not need to stop your rendering at any given point.

-

The reason why you are allowed to pass the old swapchain when recreating the new swapchain, is due to this strategy.

-

This is the recommendation.

-

Strategy .

-

This issue is resolved by deferring the destruction of the old swapchain and its remaining present semaphores to the time when the semaphore corresponding to the first present of the new swapchain can be destroyed. Because once the first present semaphore of the new swapchain can be destroyed, the first present operation of the new swapchain is done, which means the old swapchain is no longer being presented.

-

The destruction of both old swapchains must now be deferred to when the first QP of the new swapchain has been processed. If an application resizes the window constantly and at a high rate, we would keep accumulating old swapchains and not free them until it stops.

-

This potentially accumulates a lot of memory, I think.

-

-

So what's the correct moment then? Only after the new swapchain has completed one full cycle of presentations, that is, when I acquire image index

0for the second time.

-

-

Analysis :

-

(2025-08-19)

-

Holy, now I understand the problem.

-

I cannot delete anything from the old swapchain until I am sure that everything from the previous one has been presented. I thought that by acquiring the first image of the new swapchain, that would already indicate that it was safe to delete the old swapchain, but that's not true; by doing that, I only guarantee that 1 (ONE) image from the old swapchain has been presented, but the old swapchain may have several images in the queue.

-

However, as made clear, that is not the case.

-

Dealing with this can be a nightmare. Potentially having to handle multiple old swapchains at the same time in case of very frequent resizes (smooth swapchain).

-

-

-

-

"You should always use this extension if available".

-

Support :

-

Introduced in 2023.

-

(2025-02-25)

-

Only 25% of Android devices and 20% of desktop GPUs use it.

-

It was added on Android 14.

-

-

-

Adds a collection of window system integration features that were intentionally left out or overlooked in the original

KHR_swapchainextension. -

Features :

-

Allow applications to release previously acquired images without presenting them.

-

Allow applications to defer swapchain memory allocation for improved startup time and memory footprint.

-

Specify a fence that will be signaled when the resources associated with a present operation can be safely destroyed.

-

Allow changing the present mode a swapchain is using at per-present granularity.

-

Allow applications to define the behavior when presenting a swapchain image to a surface with different dimensions than the image.

-

Using this feature may allow implementations to avoid returning

ERROR_OUT_OF_DATE_KHRin this situation.

-

-

This extension makes

vkQueuePresentKHRmore similar tovkQueueSubmit, allowing it to specify a fence that the application can wait on.

-

-

The problem with

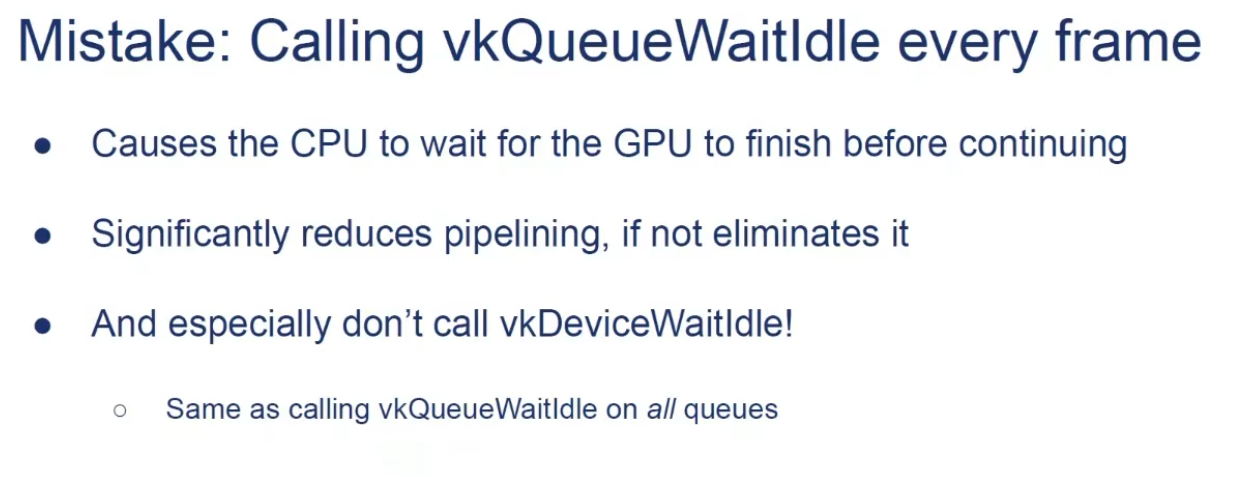

vkDeviceWaitIdleorvkQueueWaitIdle:-

Typically, applications call these functions and assume it’s safe to delete swapchain semaphores and the swapchain itself.

-

The problem is that

WaitIdlefunctions are defined in terms of fences - they only wait for workloads submitted through functions that accept a fence. -

Unextended

vkQueuePresentdoes not provide a fence parameter. -

The

vkDeviceWaitIdlecan’t guarantee that it’s safe to delete swapchain resources.-

The validation layers don't trigger errors in this case, but it's just because so many people use it and there's no good alternative.

-

When

EXT_swapchain_maintenance1is enabled the validation layer will report an error if the application shutdown sequence relies onvkDeviceWaitIdleorvkQueueWaitIdleto release swapchain resources instead of using a presentation fence.

-

-

The extension fixes this problem.

-

By waiting on the presentation fence, the application can safely release swapchain resources.

-

-

-

To avoid a deadlock, only reset the fence if we are submitting work:

-

If reset is made right after wait for the fence, but the window was resized, then it will happen a deadlock.

-

The fence is opened by the signaling of

QueueSubmit, and closed by theResetFences.

vkWaitForFences(device, 1, &inFlightFences[currentFrame], TRUE, UINT64_MAX); uint32_t imageIndex; VkResult result = vkAcquireNextImageKHR(device, swapChain, UINT64_MAX, imageAvailableSemaphores[currentFrame], NULL_HANDLE, &imageIndex); if (result == ERROR_OUT_OF_DATE_KHR) { recreateSwapChain(); return; } else if (result != SUCCESS && result != SUBOPTIMAL_KHR) { throw std::runtime_error("failed to acquire Swapchain image!"); } // Only reset the fence if we are submitting work vkResetFences(device, 1, &inFlightFences[currentFrame]); -

-

-

What to recreate :

-

The image views need to be recreated because they are based directly on the Swapchain images.

-

-

Smooth Swapchain Resizing :

-

"Don't bother with smooth swapchain resizing, it's not worth it".

-

My experience :

-

(2025-08-04)

-

A callback

glfw.SetWindowRefreshCallbackallows the swapchain to be recreated while resizing. -

Synchronization :

-

Since the swapchain is recreated all the time, it becomes difficult to manage when the old swapchain should be destroyed along with its resources.

-

At the moment I'm handling the old_swapchain in a "bad" way, and I feel that recreating it every resize frame only worsens synchronization.-

It is not necessary to deal with the old_swapchain when using

vkDeviceWaitIdle().

-

-

-

My current implementation:

eng.window_init(1280, 720, "Expedicao Hover", proc "c" (window: glfw.WindowHandle) { context = eng.global_context // fmt.printfln("REFRESHED") eng.swapchain_resize() game_draw(&game, game.cycle_draw.dt_cycles_s) })

-

-

Updating resources after recreating

-

Destroy every image and view created from the old swapchain (the swapchain destroys its own images).

-

Update everything that holds a reference to either of those.

-

If anything was created using the swapchain's size you also have to destroy and recreate those and update anything that references them.

-

There's no getting around it.

-

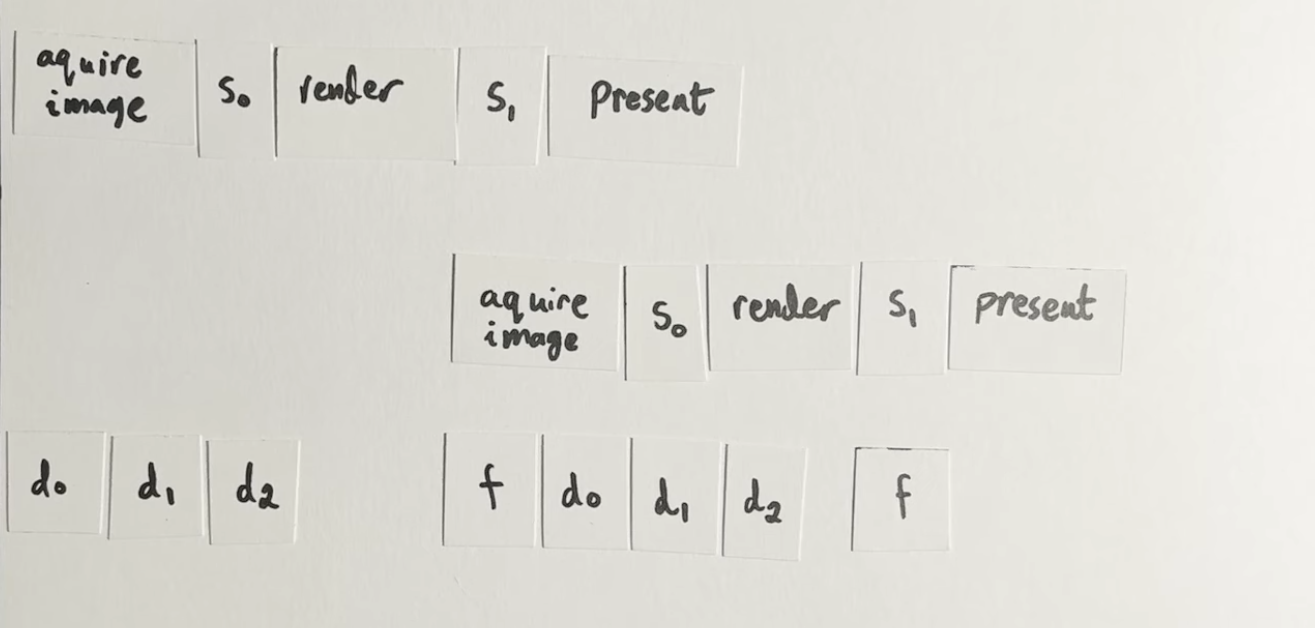

Frames In-Flight

Motivation

-

The render loop has one glaring flaw: unnecessary idling of the host. We are required to wait on the previous frame to finish before we can start rendering the next.

-

To fix this we allow multiple frames to be in-flight at once, allowing the rendering of one frame to not interfere with the recording of the next.

-

This control over the number of frames in flight is another example of Vulkan being explicit.

Frame

-

There is no concept of a frame in Vulkan. This means that the way you render is entirely up to you. The only thing that matters is when you have to display the frame to the screen, which is done through a swapchain. But there is no fundamental difference between rendering and then sending the images over the network, or saving the images into a file, or displaying it on the screen through the swapchain.

-

This means it is possible to use Vulkan in an entirely headless mode, where nothing is displayed to the screen. You can render the images and then store them on disk (very useful for testing) or use Vulkan as a way to perform GPU calculations such as a raytracer or other compute tasks.

How many Frames In-Flight

-

We choose the number 2 because we don’t want the CPU to get too far ahead of the GPU.

-

With two frames in flight, the CPU and the GPU can be working on their own tasks at the same time. If the CPU finishes early, it will wait till the GPU finishes rendering before submitting more work.

-

With three or more frames in flight, the CPU could get ahead of the GPU, adding frames of latency. Generally, extra latency isn’t desired.

-

One Per Frame In-Flight

-

Duplicate :

-

Resources :

-

Uniform Buffers.

-

If modified while a previous frame uses it, corruption occurs.

-

-

Dynamic Storage Buffers.

-

GPU-computed results (e.g., particle positions). Writing to a buffer while an older frame reads it causes hazards.

-

-

Color/Depth Attachments.

-

Staging Buffers

-

If updated per frame (e.g.,

vkMapMemory), duplication avoids overwriting mid-transfer.

-

-

Compute Shader Output Buffers:

-

If frame

Nwrites, and frameN+1reads, duplicate to prevent read-before-write. -

Use ping-pong buffers (count = frames in-flight).

-

-

-

Command pool.

-

I have doubts about this; some people do it differently.

-

-

Command buffer.

-

'present_finished_semaphore'.

-

'render_finished_fence'.

-

-

Don't duplicate :

-

Resources :

-

Static Vertex/Index Buffers:

-

Initialized once, read-only. No per-frame updates.

-

-

Immutable Textures

-

Loaded once (e.g., via

VkDeviceMemory). -

Not mapped for change.

-

It's device local.

-

-

-

Static BRDF LUTs.

-

Initialized once, read by all frames.

-

-

Advancing a frame

void drawFrame() {

...

currentFrame = (currentFrame + 1) % MAX_FRAMES_IN_FLIGHT;

}

-

By using the modulo (

%) operator, we ensure that the frame index loops around after everyMAX_FRAMES_IN_FLIGHTenqueued frames.

Acquire Next Image

-

vkWaitForFences()-

Waits on the previous frame.

-

Takes an array of fences and waits on the host for either any or all of the fences to be signaled before returning.

-

The

TRUEwe pass here indicates that we want to wait for all fences, but in the case of a single one it doesn’t matter. -

This function also has a timeout parameter that we set to the maximum value of a 64 bit unsigned integer,

UINT64_MAX, which effectively disables the timeout.

-

-

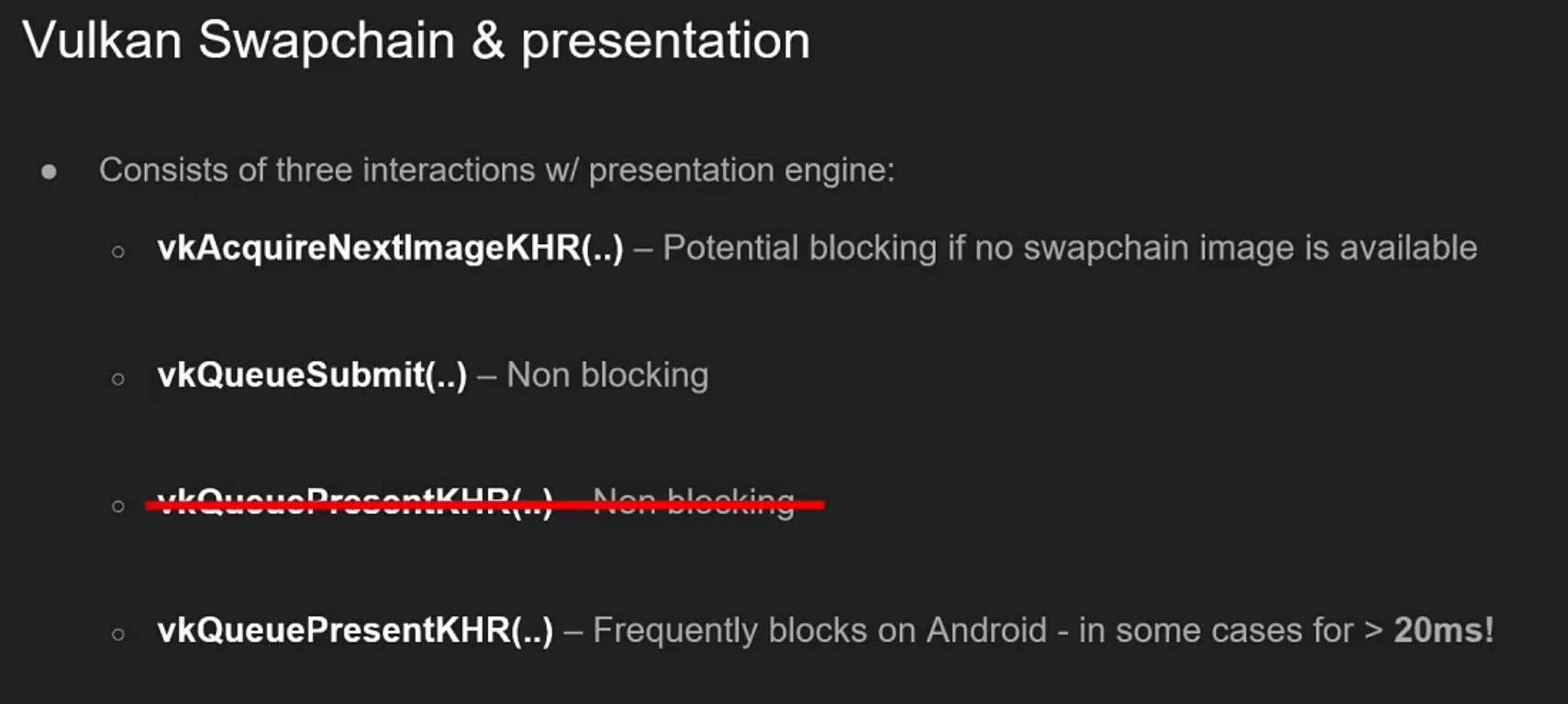

vkAcquireNextImageKHR()-

Acquire the index of an available image from the swapchain for rendering .

-

If an image was acquired, then it means that this image is idle (i.e., not currently being displayed or written to).

-

If no image is ready, the call blocks (or returns an error if non-blocking).

-

The returned image index is now " owned " by your app for rendering.

-

We only get a swapchain image index from the windowing present system.

-

A semaphore/fence is signaled when the image is safe to use.

-

timeout-

If the swapchain doesn’t have any image we can use, it will block the thread with a maximum for the timeout set.

-

The measurement unit is nanoseconds.

-

1 second is fine:

1_000_000_000.

-