Render Engineering

APIs

Graphics APIs

Vulkan

-

Vulkan .

-

Open Source Open Standard.

-

Type:

-

Low-level graphics API

-

-

Platforms:

-

Windows, Linux, Android.

-

No native support for Web, needs WebAssembly.

-

-

Backend:

-

Vulkan

-

-

Focus:

-

High-performance games, advanced 3D graphics

-

-

Advantages:

-

Cross-platform (Windows, Linux, Android)

-

Better performance than OpenGL due to control over the GPU

-

Better management of multiple threads and parallel rendering

-

-

Disadvantages:

-

Complex and difficult to program (similar to DX12).

-

Requires more code and manual memory management

-

More recent support on some platforms (e.g., on macOS, only via layers like MoltenVK)

-

WebGPU

-

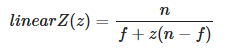

WebGPU is an open standard created by the W3C to offer GPU-accelerated graphics and computation within browsers.

-

It is designed to replace WebGL, offering a more modern and efficient API based on Vulkan, Metal, and DirectX 12.

-

Currently, it is being implemented natively in Chrome, Edge, and Firefox.

-

Platforms :

-

Web.

-

Only in browsers compatible with WebGPU.

-

It is not an independent library, but a standard that browsers implement.

-

-

-

Who maintains it :

-

The W3C, in collaboration with major companies like Google, Mozilla, Microsoft, and Apple.

-

-

wgpu :

-

Open-source MIT.

-

It is a native implementation of the WebGPU standard, designed to work both in the browser and in desktop applications.

-

It serves as a cross-platform wrapper that can use different graphics APIs depending on the operating system.

-

Therefore, although WebGPU is a standard for the Web, wgpu is an implementation of that standard that can also run natively outside of browsers.

-

Written in Rust, C/C++, etc.

-

Type:

-

Mid-level graphics API

-

-

Platforms:

-

Windows, Linux, macOS.

-

Web, via WebGPU.

-

-

Supported Backends:

-

Vulkan, DX12, Metal, OpenGL (selected automatically)

-

-

Focus:

-

Cross-platform, WebGPU, ease of use (Rust, C/C++)

-

-

Advantages:

-

Cross-platform and compatible with WebGPU

-

Easier to use than Vulkan/DX12

-

Memory safety and stability

-

-

Disadvantages:

-

Less control over GPU optimizations

-

Still in development, fewer tools than Vulkan/DX12

-

-

-

wgpu vs WebGPU :

-

If you are developing for the Web, you will use WebGPU directly.

-

If you want to use WebGPU also in native apps, wgpu is the right choice, as it allows running the same code both in the browser and on desktops.

-

-

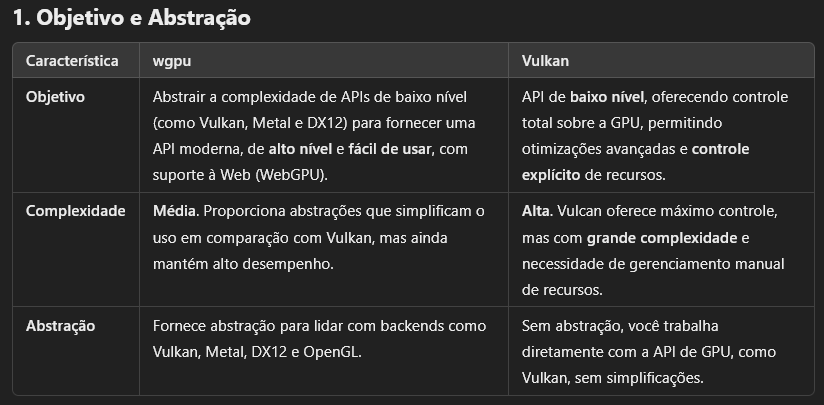

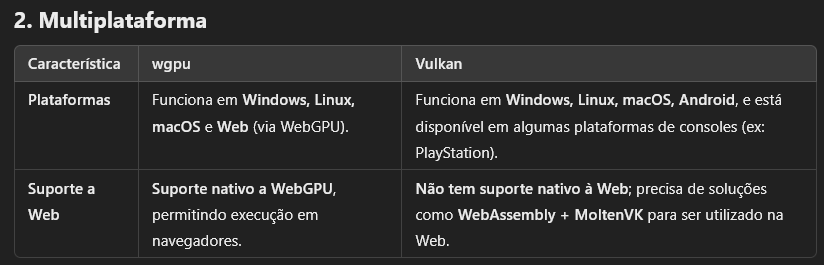

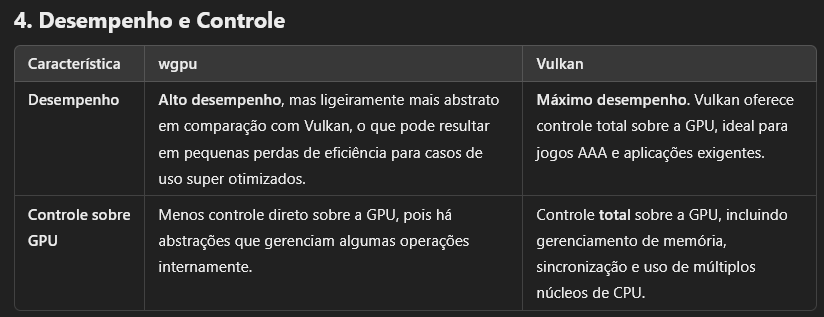

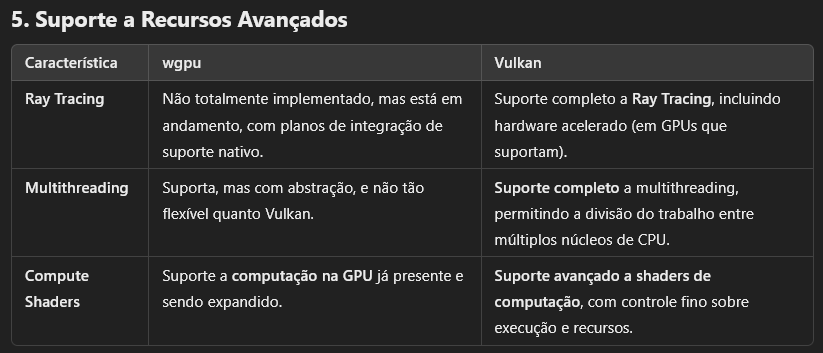

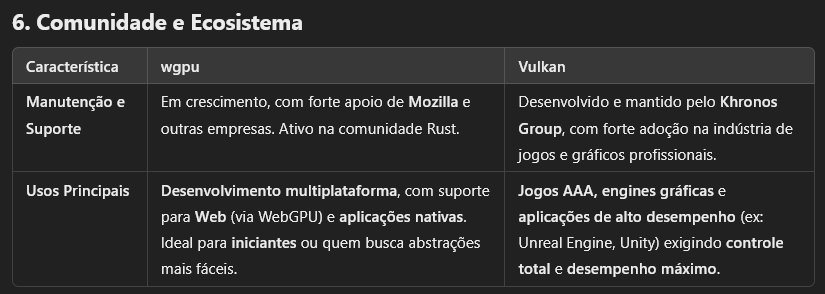

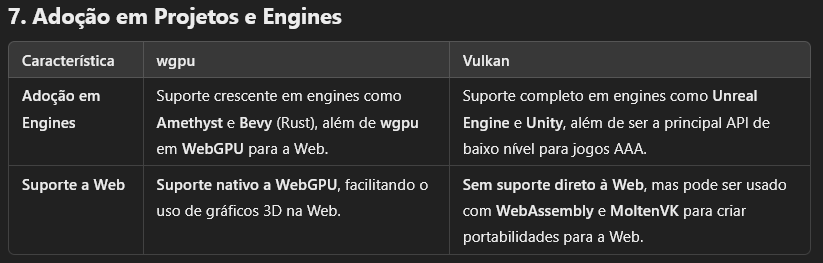

wgpu vs Vulkan :

-

.

.

-

.

.

-

.

.

-

.

.

-

.

.

-

.

.

-

OpenGL

-

OpenGL .

-

Open Source Open Standard.

-

OpenGL itself has not been officially "deprecated" globally, but it is obsolete in many contexts and being replaced by more modern APIs, such as Vulkan.

-

ES 3.x versions are still used on mobile, but Vulkan is the future.

-

-

Type:

-

Mid-level graphics API

-

-

Platforms:

-

Windows, Linux, macOS.

-

Support for Web via WebGL.

-

-

Backend:

-

OpenGL

-

-

Focus:

-

3D graphics for games, graphics engines, scientific applications

-

-

Advantages:

-

Cross-platform and widely supported

-

Easy to use compared to DX12/Vulkan

-

Good documentation and strong community

-

-

Disadvantages:

-

Old API, not optimized for modern GPUs

-

Less control over memory and graphics pipeline

-

Limited support on macOS (Apple uses Metal)

-

WebGL

-

Open Source Open Standard.

-

It is based on OpenGL ES 2.0, which is a simplified version of OpenGL for mobile and embedded devices.

-

WebGPU is the official successor to WebGL.

-

WebGL is still functional, but it is not recommended for new projects.

-

(2025-03-09) There is no official date for removal from browsers.

-

Type:

-

High-level graphics API

-

-

Platforms:

-

Web

-

-

Backend:

-

Based on OpenGL ES 2.0

-

-

Focus:

-

3D rendering on the web

-

-

Advantages:

-

Works directly in the browser, without the need for plugins

-

Easy to learn and integrate into web applications

-

Wide support, compatible with almost all browsers

-

-

Disadvantages:

-

Based on OpenGL ES 2.0, older and less efficient technology

-

No native support for modern features like Ray Tracing and Compute Shaders

-

May have lower performance than WebGPU

-

DirectX 12

-

Closed-source, from Microsoft.

-

Type:

-

Low-level graphics API.

-

-

Platforms:

-

Windows and Xbox

-

-

Backend:

-

Direct3D 12

-

-

Focus:

-

AAA games, high-performance applications

-

-

Advantages:

-

Direct control over the GPU

-

Support for Ray Tracing via DXR

-

Advanced Microsoft tools (PIX, RenderDoc)

-

-

Disadvantages:

-

Only for Windows and Xbox

-

High complexity, requires manual memory management and synchronization.

-

Metal

-

Closed-source, from Apple.

-

Low level and high performance :

-

Reduces CPU overhead, allowing better use of the GPU.

-

-

Support for parallel computation :

-

Includes an API for general computation on the GPU (similar to CUDA or OpenCL).

-

-

Platforms :

-

Exclusive to the Apple ecosystem.

-

Apple discontinued official support for OpenGL and encourages the use of Metal.

-

-

-

Support for Ray Tracing (since Metal 3).

Shader Languages

SPIR-V

GLSL (OpenGL Shader Language)

-

GLSL .

Slang

-

Slang .

WGSL (WebGPU)

-

Modern, safe, and cross-platform (WebGPU standard).

-

Works in browsers (WebGPU) and native (via

wgpu). -

No corporate lock-in (developed by W3C & Mozilla/Google/Apple).

-

Newer, less mature than GLSL/SPIR-V.

-

"Explicitly designed to avoid C++/OOP cruft. Rust-inspired syntax but purely for GPU work."

~HLSL (High-level Shader Language)

-

HLSL .

-

Proprietary to Microsoft.

-

Made for DirectX 9.

-

HLSL programs come in six forms: pixel shaders (fragment in GLSL), vertex shaders, geometry shaders, compute shaders, tessellation shaders (Hull and Domain shaders), and ray tracing shaders.

-

Where it can be used :

-

Is mainly used in DirectX-based environments (Windows/Xbox games, Unity, Unreal Engine). If you're targeting other platforms (macOS, Linux, mobile), you might need to use GLSL, MSL, or SPIR-V instead.

-

Direct3D (DirectX)

-

DirectX 9/10/11/12: HLSL is the standard shading language for Microsoft's Direct3D API.

-

Used in:

-

PC games (Windows)

-

Xbox console development (Xbox One, Xbox Series XS)

-

-

-

Unity (via Direct3D)

-

Unity supports HLSL when using:

-

Built-in Render Pipeline (Legacy)

-

Universal Render Pipeline (URP)

-

High Definition Render Pipeline (HDRP)

-

-

Shader Graph in Unity also uses HLSL-like syntax.

-

-

Unreal Engine (UE4/UE5)

-

Unreal Engine uses a custom shading language (Unreal Shader System, USS) but allows HLSL snippets in Custom HLSL Nodes.

-

HLSL is also used in Ray Tracing shaders in UE5.

-

-

NVIDIA OptiX (Ray Tracing)

-

HLSL can sometimes be used alongside CUDA/PTX for ray tracing effects.

-

-

Vulkan (via SPIR-V Cross)

-

HLSL shaders can be converted to SPIR-V (Vulkan's intermediate format) using tools like:

-

glslangValidator (from Khronos)

-

DXC (DirectX Shader Compiler)

-

SPIRV-Cross (converts HLSL to GLSL/SPIR-V)

-

-

-

Shader Model 6.0+ (Advanced Features)

-

HLSL supports modern GPU features like:

-

Ray Tracing (DXR)

-

Mesh & Amplification Shaders

-

Wave Operations.

-

-

-

Compute Shaders

-

(GPGPU programming in DirectX).

-

-

AI/ML Acceleration

-

(Some frameworks allow HLSL-based compute shaders for GPU acceleration).

-

-

-

Where it's not used :

-

OpenGL / WebGL → Uses GLSL instead.

-

Vulkan (Native) → Uses GLSL or SPIR-V.

-

Metal (Apple) → Uses MSL (Metal Shading Language).

-

-

Ex :

// Vertex Shader struct VSInput { float3 position : POSITION; float3 color : COLOR; }; struct VSOutput { float4 position : SV_POSITION; float3 color : COLOR; }; VSOutput VS_main(VSInput input) { VSOutput output; output.position = float4(input.position, 1.0); output.color = input.color; return output; } // Fragment Shader struct PSInput { float4 position : SV_POSITION; float3 color : COLOR; }; float4 PS_main(PSInput input) : SV_TARGET { return float4(input.color, 1.0); } -

Comparing the syntax of HLSL to GLSL :

-

Inputs :

-

HLSL:

-

The parameter for the

VS_mainandPS_maindescribe the inputs for each step. -

Uses a struct with semantics (

: POSITION,: COLOR).

-

-

GLSL:

-

Uses

in/outvariables. -

Uses

layout(location)to bind vertex attributes.

-

-

-

Outputs :

-

HLSL:

Returns a struct, for passing values from the Vertex Shader to the Fragment Shader;VSOutput. -

GLSL:

-

Uses a

outvariable.

-

-

-

MSL (Metal Shading Language)

-

Apple’s official shader language (optimized for M1/M2).

-

Required for iOS/macOS Metal apps.

-

Apple-only (no Windows/Linux).

-

Use with MoltenVK if you want Vulkan → Metal compatibility.

-

Ex :

// Vertex Shader #include <metal_stdlib> using namespace metal; struct VertexIn { float3 position [[attribute(0)]]; float3 color [[attribute(1)]]; }; struct VertexOut { float4 position [[position.md]]; float3 color; }; vertex VertexOut vertex_main(VertexIn in [[stage_in]]) { VertexOut out; out.position = float4(in.position, 1.0); out.color = in.color; return out; } // Fragment Shader fragment float4 fragment_main(VertexOut in [[stage_in]]) { return float4(in.color, 1.0); }

Tools

Capture

GFXReconstruct

-

Captures commands to a file and allows you to replay them.

-

Linux, Android, Linux.

-

Vulkan, but also other APIs.

-

(2025-10-04)

-

I tested it and it worked nicely, super simple.

-

set VK_INSTANCE_LAYERS=VK_LAYER_LUNARG_gfxreconstruct

set GFXRECON_CAPTURE_FILE=C:\captures\capture.gfxr

set GFXRECON_CAPTURE_FRAMES=1000-2200

my_game.exe

-

gfxrecon-replay-

Tool to replay GFXReconstruct capture files.

gfxrecon-replay --pause-frame 1200 capture.gfxr-

Also supports

--screenshotsand--screenshot-allif you want a quick visual scan of frames. -

While

gfxrecon-replayis paused, attach RenderDoc or Nsight Graphics to the replay process (or launch the replay from RenderDoc/Nsight) and use RenderDoc’s per-draw inspection / pixel history / depth buffer views. The GFXReconstruct docs explicitly say capture files “can be replayed inside other tools (RenderDoc, Nsight, AMD tools, etc.)”.

-

-

gfxrecon-info-

Tool to print information describing GFXReconstruct capture files.

-

-

gfxrecon-compress-

Tool to compress/decompress GFXReconstruct capture files.

-

The gfxrecon-compress tool requires LZ4, Zstandard, and/or zlib, which are currently optional build dependencies.

-

-

gfxrecon-extract-

Tool to extract SPIR-V binaries from GFXReconstruct capture files.

-

-

gfxrecon-convert-

Tool to convert GFXReconstruct capture files to a JSON Lines listing of API calls. (experimental for D3D12 captures)

-

-

gfxrecon-optimize-

Tool to produce new capture files with improved replay performance.

-

Debuggers

RenderDoc

-

-

API loader.

-

-

Cross-platform.

-

Open-source.

-

Not a profiler.

-

RenderDoc is not designed as a continuous multi-second timeline tracer; its strength is detailed single-frame analysis.

Nvidia Nsight Graphics

-

Capture :

-

(2025-10-04)

-

Exceptionally similar to RenderDoc.

-

-

-

GPU Trace :

PIX

-

.

Profilers

Choosing the Space to compute Lighting

-

For more information about precision, check Graphics and Shaders#Precision .

View Space

-

Advantages :

-

Since view space places the camera at the origin, numbers tend to stay small and preserve precision. This is an important consideration when rendering large worlds. When coordinates become large (e.g. 1000km, they cannot represent small differences; details smaller than ~6 cm are lost.

-

View-space normals allow efficient compression: since the camera always looks down the −Z axis, we only need to store x and y components and reconstruct z, saving G-buffer bandwidth. This is a reason why several engines prefer view-space normals for deferred shading. With smaller positions, depth reconstruction is also more stable. This compression does not work for world-space normals, where all directions are equally likely.

-

The view vector in specular/PBR models is simply the negative fragment position in view space. The camera position is (0,0,0), so no extra subtraction is needed. LearnOpenGL notes that this makes the specular term easy to compute and is why many tutorials prefer view-space lighting.

-

LearnOpenGL:

-

We chose to do the lighting calculations in world space, but most people tend to prefer doing lighting in view space.

-

An advantage of view space is that the viewer's position is always at

(0,0,0)so you already got the position of the viewer for free. -

However, I find calculating lighting in world space more intuitive for learning purposes.

-

-

-

Since the fragment’s z value directly represents the distance from the camera, effects like fog, attenuation and cluster slicing in clustered shading become easier to implement.

-

-

Disadvantages :

-

Environment maps and image-based lighting (IBL) are usually defined in world space. To sample them, view-space normals must be converted to world space; repeated conversions can add instructions and risk precision loss. Some encoding techniques only support world-space normals.

-

Because view-space normals rotate with the camera, specular highlights and reflections may appear to wobble, especially when using normal maps.

-

Light positions and directions stored in world space must be transformed into view space each frame. For many lights, this transformation may be non-negligible (though compute shaders can handle it efficiently).

-

World Space

-

Advantages :

-

World-space normals do not change when the camera moves, so specular highlights from normal maps remain stable. This is useful for physically based rendering (PBR) with image-based lighting, where a consistent normal is needed to look up from environment maps.

-

Krzysztof Narkowicz (UE5) notes that world-space normals have beneficial properties: they do not depend on the camera, so specular highlights and reflections on static objects will not wobble when the camera moves. Because their precision does not depend on the camera direction, they remain accurate even for surfaces pointing away from the viewer. In contrast, view-space normals change orientation when the camera rotates, which can make environment map lookups or specular highlights less stable.

-

Since most lights and environment maps are defined in world space, you avoid per-fragment transformations. In forward renderers this can simplify the shader because light direction can be computed once in world space and reused.

-

Some post-processing effects (SSAO, screen-space reflections) and global illumination techniques operate in world space and expect world-space positions and normals. Having them already in world space can avoid conversions.

-

-

Disadvantages :

-

Using world-space positions directly means adding large translations (e.g., the camera is at 1 km while shading a small object). The article “The Perils of World Space” explains that 32-bit floats only have high resolution near the origin; far from the origin, sub-centimetre differences cannot be represented. Thus shading calculations (e.g., diffuse or specular dot products) may wobble due to imprecision. This can manifest as flickering when the camera moves.

-

World-space normals require all three components to be stored, and world-space positions might need 3×32-bit floats. This increases memory bandwidth in deferred renderers compared with view-space normals, which can be compressed.

-

For specular reflections relative to the camera, you must compute the view vector by subtracting the camera position from the fragment position, adding per-fragment cost.

-

-

Trick to improve precision: Fixing the camera and moving the world :

-

Instead of letting the camera roam far from the origin, move the world in the opposite direction so that the camera stays near the origin. Pharr describes decomposing the view matrix into rotation and translation, then moving the translation into the model matrices while keeping the camera at the origin;

worldPos – cameraPosis computed in double-precision on the CPU and passed to the GPU as a small value. Many engines (e.g., Cryengine) perform lighting in world space but shift the origin per frame; this provides the stability of view-space lighting while retaining the advantages of world-space normals

-

BSDF (Bidirectional Scattering Distribution Function)

Sources

-

-

"Filament pbr paper is nice and readable and even has some pseudo code examples. the renderer is open source (which is also a good reference)".

-

"it just cuts right through the BS and just gives you the math you need".

-

-

Moving Frostbite to Physically Based Rendering 3.0 - Siggraph 2014 .

-

PBR Book.-

Award academy winning book.

-

Basically created the meaning of PBR.

-

"I'm finding myself pretty annoyed at how OOP-y it is so far (I think I'm at 4 levels of inheritancs now for the integrators?)".

-

-

(2025-09-10)

-

The reading sounds sloooow and seems to have the CMake mentality.

-

Nah fok off.

-

-

-

-

It's a super short and not enlightening explanation. The Filament PBR is better.

-

Samples

-

-

"wrap lighting" →

max((dot(N,L) + wrap) / (1+wrap), 0)creates softer shading. -

Can quantize light intensity into bands for toon-like shading.

-

Rendering Equation

BSDF (Bidirectional Scattering Distribution Function)

-

A material model is described mathematically by a BSDF (Bidirectional Scattering Distribution Function), which is itself composed of two other functions:

-

BRDF (Bidirectional Reflectance Distribution Function)

-

BTDF (Bidirectional Transmittance Function).

-

-

Since we aim to model commonly encountered surfaces, our standard material model will focus on the BRDF and ignore the BTDF, or approximate it greatly.

-

-

{9:57}

-

Implementation.

-

-

-

Rendering Equation and BRDFs .

-

Great video, super important to understand rendering and Physics Based Rendering (PBR).

-

Everything is based on the abstract equation, as being the basis for Based Physics Rendering:

-

outgoing_light = emitted_light + reflected_light.

-

-

{10:19}

-

The 'reflected_light' equation is shown

-

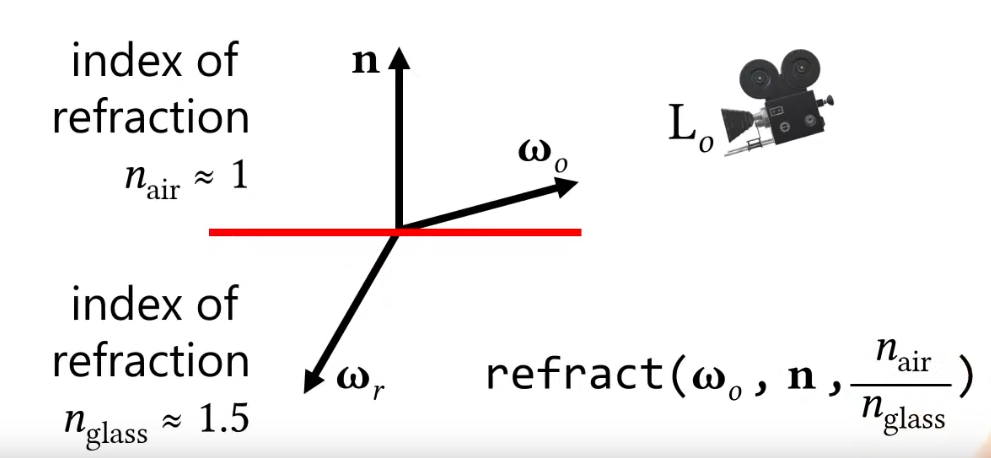

$f_r(x, \omega_i, \omega_o)$ is the 'Bidirectional Reflectance Distribution Function (BRDF)'

-

$L_i$ is the 'color of light'.

-

$cos(\theta_i)$ is the representation of the 'Surface Normal'

-

~Then an integral is used to calculate this at different angles, ~I don't know.

-

Engines don't use the integral.

-

-

-

{10:53}

-

Rendering Equation.

-

-

{22:58 -> end}

-

It's the most interesting part of the video, although everything is interesting.

-

Each parameter of the shader used by Disney is explained.

-

-

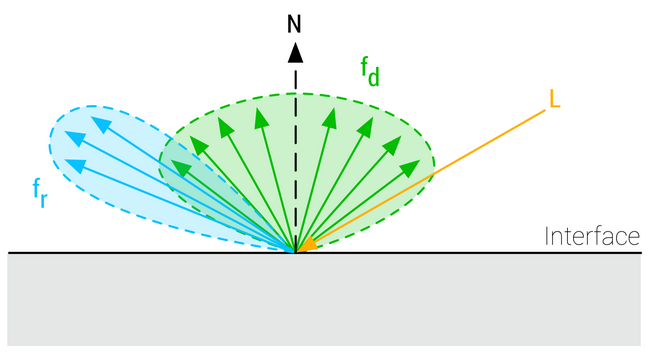

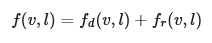

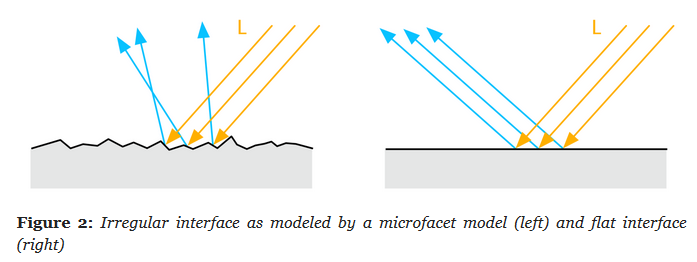

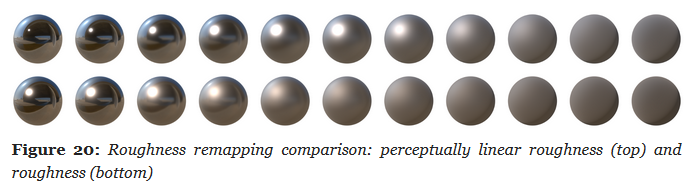

BRDF (Bidirectional Reflectance Distribution Function)

-

The BRDF describes the surface response of a standard material as a function made of two terms:

-

A diffuse component ($f_d$).

-

A specular component ($f_r$).

-

-

.

.

-

The complete surface response can be expressed as such:

-

.

.

-

This equation characterizes the surface response for incident light from a single direction. The full rendering equation would require to integrate $l$ over the entire hemisphere.

-

Energy conservation is one of the key components of a good BRDF for physically based rendering. An energy conservative BRDF states that the total amount of specular and diffuse reflectance energy is less than the total amount of incident energy. Without an energy conservative BRDF, artists must manually ensure that the light reflected off a surface is never more intense than the incident light.

General Terms

-

$v$

-

View unit vector.

-

-

$h$

-

Half unit vector between $l$ and $v$.

-

-

$l$

-

Incident light unit vector.

-

-

$n$

-

Normal surface unit vector.

-

-

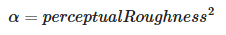

$\alpha$

-

Roughness, remapped from using input

perceptualRoughness.

-

TLDR

-

Specular Term :

-

A Cook-Torrance specular microfacet model

-

A GGX normal distribution function

-

A Smith-GGX height-correlated visibility function.

-

A Schlick Fresnel function.

-

-

Diffuse Term :

-

A Lambertian diffuse model.

-

float D_GGX(float NoH, float a) {

float a2 = a * a;

float f = (NoH * a2 - NoH) * NoH + 1.0;

return a2 / (PI * f * f);

}

vec3 F_Schlick(float u, vec3 f0) {

return f0 + (vec3(1.0) - f0) * pow(1.0 - u, 5.0);

}

float V_SmithGGXCorrelated(float NoV, float NoL, float a) {

float a2 = a * a;

float GGXL = NoV * sqrt((-NoL * a2 + NoL) * NoL + a2);

float GGXV = NoL * sqrt((-NoV * a2 + NoV) * NoV + a2);

return 0.5 / (GGXV + GGXL);

}

float Fd_Lambert() {

return 1.0 / PI;

}

void BRDF(...) {

// >> Standard Model

vec3 h = normalize(v + l);

float NoV = abs(dot(n, v)) + 1e-5;

float NoL = clamp(dot(n, l), 0.0, 1.0);

float NoH = clamp(dot(n, h), 0.0, 1.0);

float LoH = clamp(dot(l, h), 0.0, 1.0);

// perceptually linear roughness to roughness (see parameterization)

float roughness = perceptualRoughness * perceptualRoughness;

float D = D_GGX(NoH, roughness);

vec3 F = F_Schlick(LoH, f0);

float V = V_SmithGGXCorrelated(NoV, NoL, roughness);

// specular BRDF

float D = distributionCloth(roughness, NoH); // From the Cloth BRDF.

float V = visibilityCloth(NoV, NoL); // From the Cloth BRDF.

vec3 F = sheenColor; // From the Cloth BRDF.

vec3 Fr = (D * V) * F;

vec3 energyCompensation = 1.0 + f0 * (1.0 / dfg.y - 1.0);

// Scale the specular lobe to account for multiscattering

Fr *= pixel.energyCompensation;

// Without Cloth BRDF

// diffuse BRDF

// Conversion of base color to diffuse:

vec3 diffuseColor = (1.0 - metallic) * baseColor.rgb;

vec3 Fd = diffuseColor * Fd_Lambert();

// With Cloth BRDF

float diffuse = diffuse(roughness, NoV, NoL, LoH);

#if defined(MATERIAL_HAS_SUBSURFACE_COLOR)

// energy conservative wrap diffuse

diffuse *= saturate((dot(n, light.l) + 0.5) / 2.25);

#endif

vec3 Fd = diffuse * pixel.diffuseColor;

// <<

// >> Cloth BRDF

#if defined(MATERIAL_HAS_SUBSURFACE_COLOR)

// cheap subsurface scatter

Fd *= saturate(subsurfaceColor + NoL);

vec3 color = Fd + Fr * NoL;

color *= (lightIntensity * lightAttenuation) * lightColor;

#else

vec3 color = Fd + Fr;

color *= (lightIntensity * lightAttenuation * NoL) * lightColor;

#endif

// <<

// >> Clear Coat

// remapping and linearization of clear coat roughness

clearCoatPerceptualRoughness = clamp(clearCoatPerceptualRoughness, 0.089, 1.0);

clearCoatRoughness = clearCoatPerceptualRoughness * clearCoatPerceptualRoughness;

// clear coat BRDF

float Dc = D_GGX(clearCoatRoughness, NoH);

float Vc = V_Kelemen(clearCoatRoughness, LoH);

float Fc = F_Schlick(0.04, LoH) * clearCoat; // clear coat strength

float Frc = (Dc * Vc) * Fc;

// <<

// account for energy loss in the base layer

return color * ((Fd + Fr * (1.0 - Fc)) * (1.0 - Fc) + Frc);

}

void main() {

// I believe this is completely geared towards Directional Lights.

vec3 l = normalize(-lightDirection);

float NoL = clamp(dot(n, l), 0.0, 1.0);

// lightIntensity is the illuminance

// at perpendicular incidence in lux

float illuminance = lightIntensity * NoL;

vec3 luminance = BSDF(v, l) * illuminance;

}

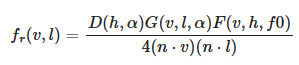

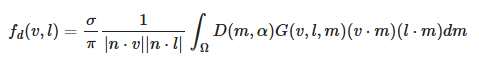

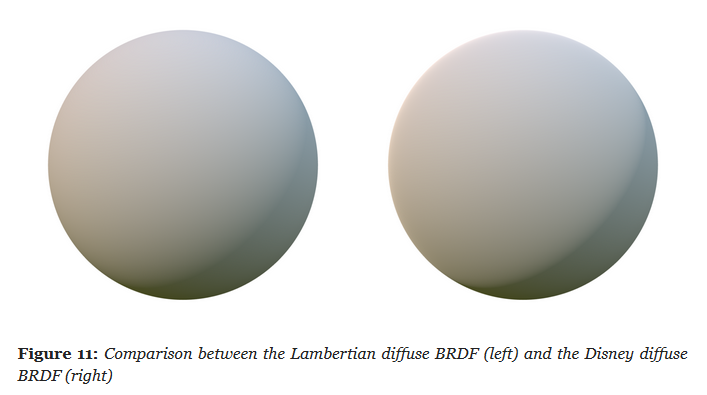

Specular BRDF

-

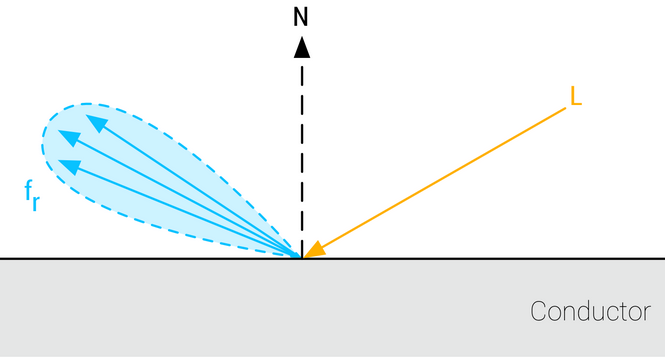

For the specular term, $f_r$ is a mirror BRDF that can be modeled with the Fresnel law , noted in the Cook-Torrance approximation of the microfacet model integration:

-

.

.

-

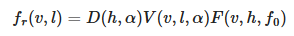

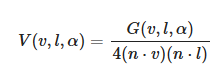

This function can be simplified by introducing a Visibility Function.

-

.

.

-

.

.

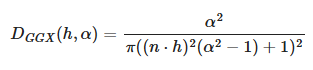

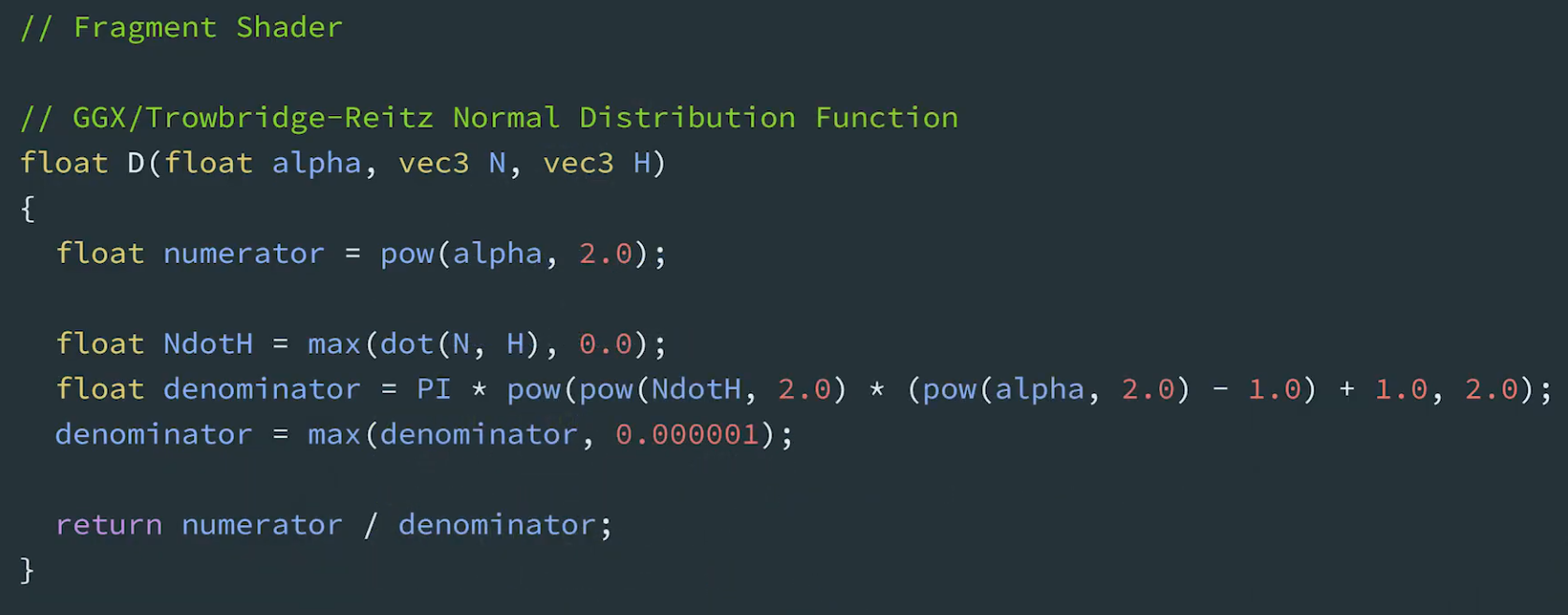

Normal distribution function (Specular D)

-

Burley observed that long-tailed normal distribution functions (NDF) are a good fit for real-world surfaces.

-

The GGX distribution is a distribution with long-tailed falloff and short peak in the highlights, with a simple formulation suitable for real-time implementations. It is also a popular model, equivalent to the Trowbridge-Reitz distribution, in modern physically based renderers.

-

.

.

-

Specular D term :

float D_GGX(float NoH, float roughness) { float a = NoH * roughness; float k = roughness / (1.0 - NoH * NoH + a * a); return k * k * (1.0 / PI); } -

Specular D term, optimized for fp16 :

#define MEDIUMP_FLT_MAX 65504.0 #define saturateMediump(x) min(x, MEDIUMP_FLT_MAX) float D_GGX(float roughness, float NoH, const vec3 n, const vec3 h) { vec3 NxH = cross(n, h); float a = NoH * roughness; float k = roughness / (dot(NxH, NxH) + a * a); float d = k * k * (1.0 / PI); return saturateMediump(d); } -

.

.

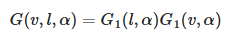

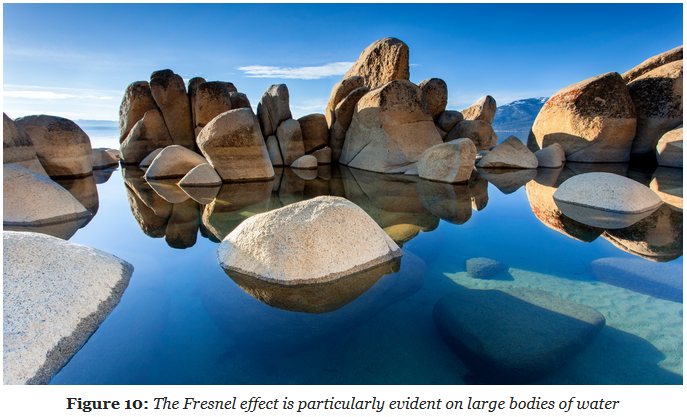

Geometric Shadowing / Visibility Function (Specular G / Specular V)

-

Eric Heitz showed in that the Smith geometric shadowing function is the correct and exact term to use.

-

The Smith formulation is the following:

-

.

.

-

Consider:

-

Specular V term :

-

The GLSL implementation of the visibility term, is a bit more expensive than we would like since it requires two

sqrtoperations.

float V_SmithGGXCorrelated(float NoV, float NoL, float roughness) { float a2 = roughness * roughness; float GGXV = NoL * sqrt(NoV * NoV * (1.0 - a2) + a2); float GGXL = NoV * sqrt(NoL * NoL * (1.0 - a2) + a2); return 0.5 / (GGXV + GGXL); } -

-

Approximated specular V term :

-

This approximation is mathematically wrong but saves two square root operations and is good enough for real-time mobile applications

float V_SmithGGXCorrelatedFast(float NoV, float NoL, float roughness) { float a = roughness; float GGXV = NoL * (NoV * (1.0 - a) + a); float GGXL = NoV * (NoL * (1.0 - a) + a); return 0.5 / (GGXV + GGXL); }-

(2025-09-13) Note:

-

If roughness is 0, then the final result is

1 / (4 * NoL * NoV). -

I tested this, it's correct.

-

-

-

.

.

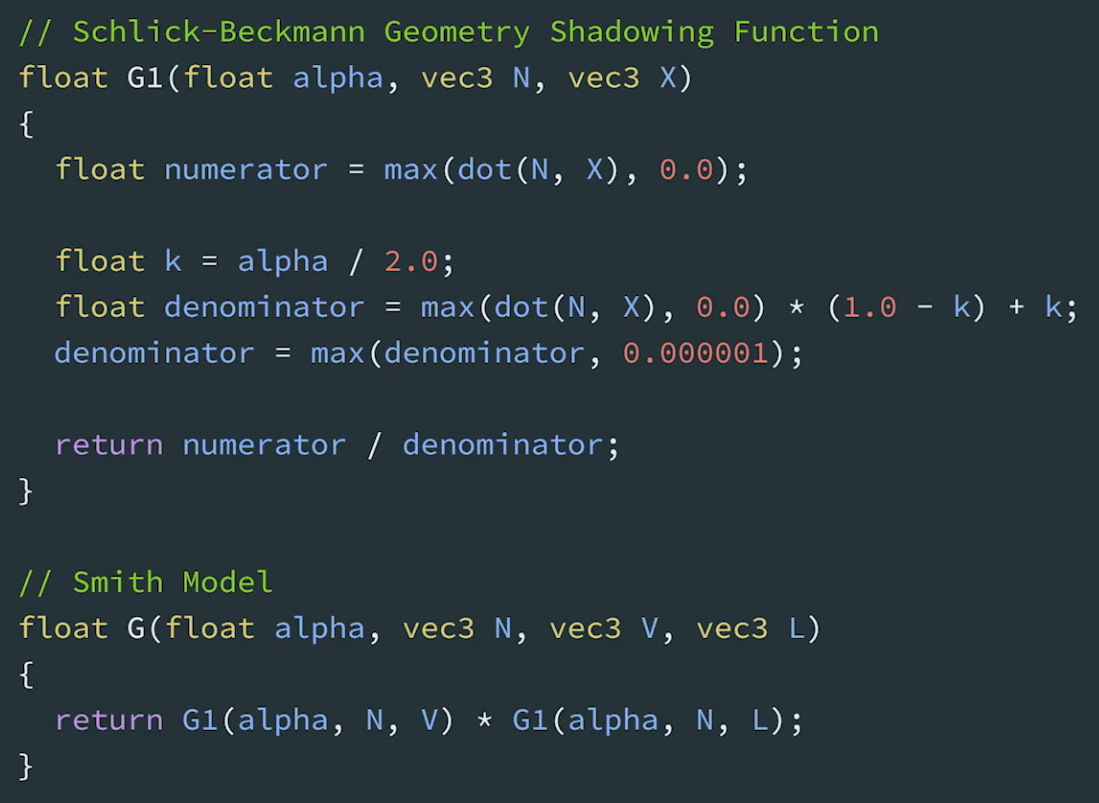

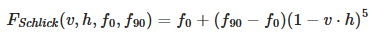

Fresnel (Specular F)

-

This effect models the fact that the amount of light the viewer sees reflected from a surface depends on the viewing angle and on the index of refraction (IOR) of the material.

-

.

.

-

When looking at the water straight down (at normal incidence) you can see through the water. However, when looking further out in the distance (at grazing angle, where perceived light rays are getting parallel to the surface), you will see the specular reflections on the water become more intense.

-

-

Schlick describes an inexpensive approximation of the Fresnel term for the Cook-Torrance specular BRDF:

-

.

.

-

This Fresnel function can be seen as interpolating between the incident specular reflectance and the reflectance at grazing angles.

-

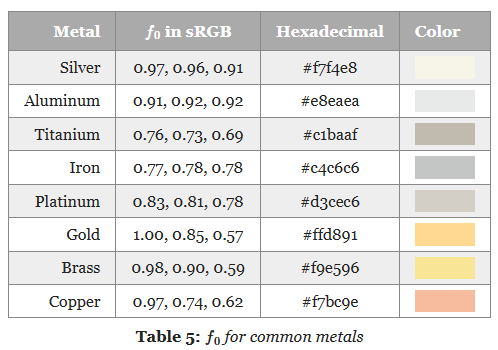

$f_0$ (Base Reflectance or Base Reflectivity) :

-

Is a constant that represents the specular reflectance at normal incidence and is achromatic for dielectrics, and chromatic for metals.

-

The actual value depends on the index of refraction of the interface.

-

If dia-electric: use base reflectivity of 0.04; else: is a metal, use albedo as base reflectivity.

-

n (Index of Refraction) (IOR) :

-

base_reflectivity of 0.04 is the same as IOR = 1.5.

-

IOR 1.5 is the default for blender.

-

.

.

-

-

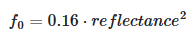

Calculating $f_0$ and Remapping :

-

The Fresnel term relies on $f_0$ , the specular reflectance at normal incidence angle, and is achromatic for dielectrics.

-

Remapping :

vec3 f0 = 0.16 * reflectance * reflectance-

See the Material -> Reflectance part to understand the remapping.

-

-

Computing $f_0$ for dielectric and metallic materials in GLSL

vec3 f0 = 0.16 * reflectance * reflectance * (1.0 - metallic) + baseColor * metallic; -

-

-

$f_{90}$.

-

Reflectance at grazing angles.

-

Approaches 100% for smooth materials.

-

Observation of real world materials show that both dielectrics and conductors exhibit achromatic specular reflectance at grazing angles and that the Fresnel reflectance is 1.0 at 90°.

-

-

Specular F term :

vec3 F_Schlick(float u, vec3 f0, float f90) { return f0 + (vec3(f90) - f0) * pow(1.0 - u, 5.0); }-

Using $f_{90}$ set to 1, the Schlick approximation for the Fresnel term can be optimized for scalar operations by refactoring the code slightly.

vec3 F_Schlick(float u, vec3 f0) { float f = pow(1.0 - u, 5.0); return f + f0 * (1.0 - f); }-

.

.

-

Godot Code Snippet :

float fresnel(float amount, vec3 normal, vec3 view) { return pow((1.0 - clamp(dot(normalize(normal), normalize(view)), 0.0, 1.0 )), amount); } void fragment() { vec3 base_color = vec3(0.0); float basic_fresnel = fresnel(3.0, NORMAL, VIEW); ALBEDO = base_color + basic_fresnel; }-

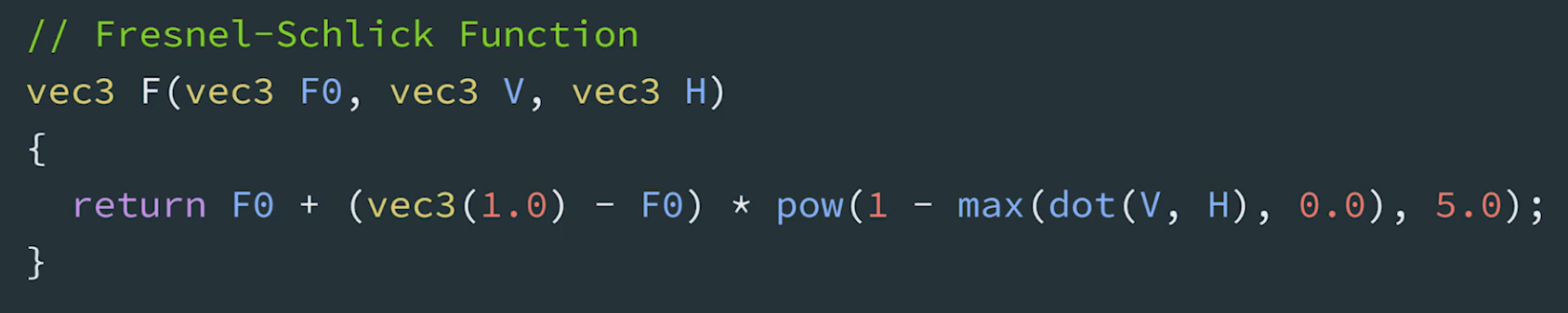

Colorful Fresnel:

-

This snippet lets you colorize the fresnel by multiplying it with an RGB-value and set the intensity to either tone down the effect or, if you crank it up, make it glow. You need to enable Glow in the Environment node. (The

clamp()has been removed allowing the fresnel to go beyond 1.0). You can also make the fresnel glow by assigning it to EMISSION. -

.

.

-

Not-colorful / colorful + glow.

-

vec3 fresnel_glow(float amount, float intensity, vec3 color, vec3 normal, vec3 view) { return pow((1.0 - dot(normalize(normal), normalize(view))), amount) * color * intensity; } void fragment() { vec3 base_color = vec3(0.5, 0.2, 0.9); vec3 fresnel_color = vec3(0.0, 0.7, 0.9); vec3 fresnel = fresnel_glow(4.0, 4.5, fresnel_color, NORMAL, VIEW); ALBEDO = base_color + fresnel; } -

-

-

Energy Compensation

-

.

.

-

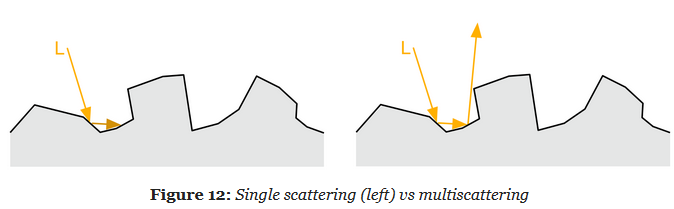

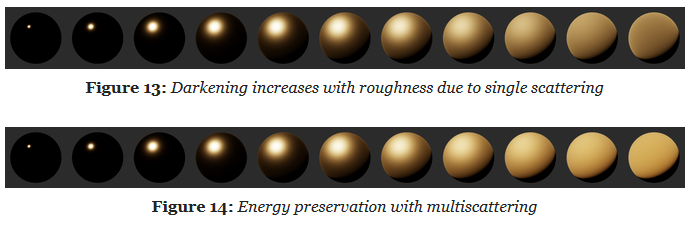

Single Scaterring vs Multiscattering :

-

.

.

-

.

.

-

-

This solution is therefore not suitable for real-time rendering.

-

The idea is to add an energy compensation term as an additional BRDF lobe.

vec3 energyCompensation = 1.0 + f0 * (1.0 / dfg.y - 1.0);

// Scale the specular lobe to account for multiscattering

Fr *= pixel.energyCompensation;

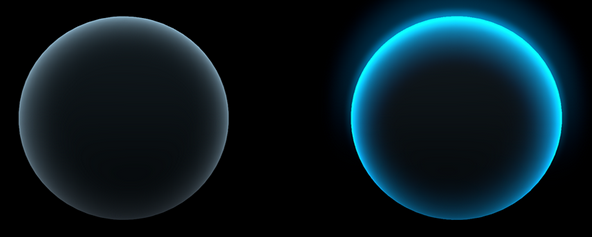

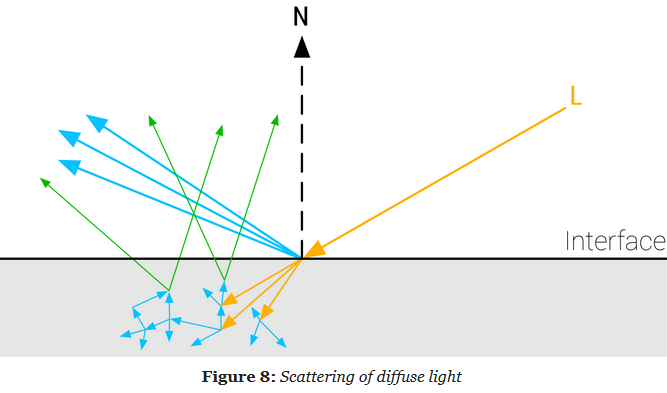

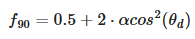

Diffuse BRDF

-

The diffuse term of the BRDF:

-

.

.

-

Our implementation will instead use a simple Lambertian BRDF that assumes a uniform diffuse response over the microfacets hemisphere:

-

.

.

-

Diffuse Lambertian BRDF :

-

In practice, the diffuse reflectance is multiplied later

float Fd_Lambert() { return 1.0 / PI; } vec3 Fd = diffuseColor * Fd_Lambert(); -

-

However, the diffuse part would ideally be coherent with the specular term and take into account the surface roughness. Both the Disney diffuse BRDF and Oren-Nayar model take the roughness into account and create some retro-reflection at grazing angles. Given our constraints we decided that the extra runtime cost does not justify the slight increase in quality. This sophisticated diffuse model also renders image-based and spherical harmonics more difficult to express and implement.

-

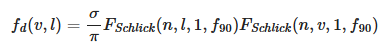

Disney diffuse BRDF :

-

.

.

-

.

.

float F_Schlick(float u, float f0, float f90) { return f0 + (f90 - f0) * pow(1.0 - u, 5.0); } float Fd_Burley(float NoV, float NoL, float LoH, float roughness) { float f90 = 0.5 + 2.0 * roughness * LoH * LoH; float lightScatter = F_Schlick(NoL, 1.0, f90); float viewScatter = F_Schlick(NoV, 1.0, f90); return lightScatter * viewScatter * (1.0 / PI); } -

-

Lambertian diffuse BRDF vs Disney diffuse BRDF :

-

The material used is fully dialetric.

-

The surface response is very similar with both BRDFs but the Disney one exhibits some nice retro-reflections at grazing angles (look closely at the left edge of the spheres).

-

.

.

-

We could allow artists/developers to choose the Disney diffuse BRDF depending on the quality they desire and the performance of the target device. It is important to note however that the Disney diffuse BRDF is not energy conserving as expressed here.

-

Material

Base Color / Albedo

-

Diffuse albedo for non-metallic surfaces, and specular color for metallic surfaces.

-

Linear RGB

[0..1]. -

It should be devoid of lighting information, except for micro-occlusion.

-

For Non-Metallic Materials :

-

Represents the reflected color and should be an sRGB value in the range 50-240 (strict range) or 30-240 (tolerant range).

-

-

For Metallic Materials :

-

Represents both the specular color and reflectance.

-

Use values with a luminosity of 67% to 100% (170-255 sRGB).

-

Oxidized or dirty metals should use a lower luminosity than clean metals to take into account the non-metallic components.

-

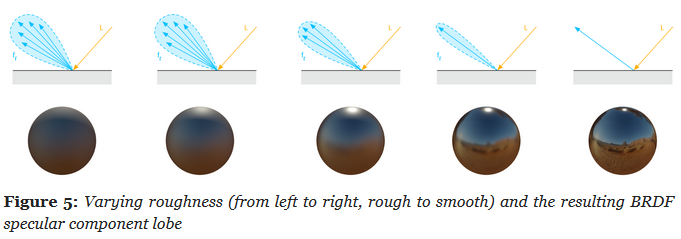

Roughness Value / Roughness Map

-

Perceived smoothness (0.0) or roughness (1.0) of a surface. Smooth surfaces exhibit sharp reflections

-

Scalar

[0..1]. -

.

.

-

Rough (left), smooth (right).

-

-

.

.

-

Remapping :

-

.

.

-

.

.

-

Metallic Map

-

Whether a surface appears to be dielectric (0.0) or conductor (1.0).

-

Scalar

[0..1]. -

Is almost a binary value. Pure conductors have a metallic value of 1 and pure dielectrics have a metallic value of 0. You should try to use values close at or close to 0 and 1. Intermediate values are meant for transitions between surface types (metal to rust for instance).

-

.

.

-

Non-Metallic.

-

-

.

.

-

Metallic.

-

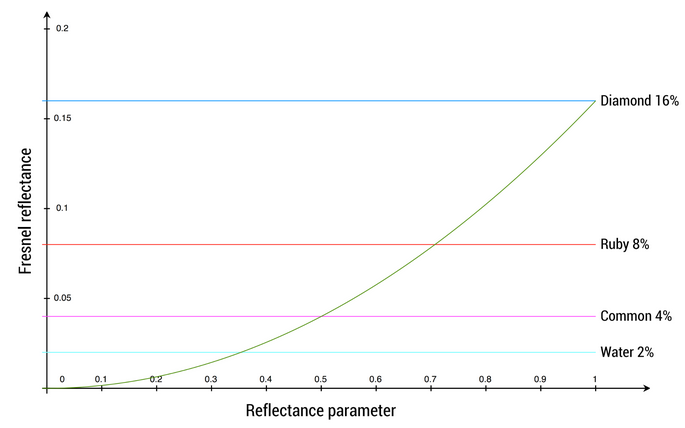

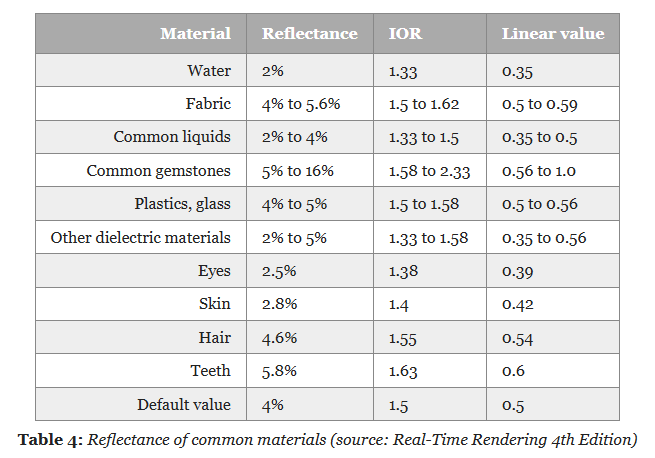

Reflectance

Parameter

-

Fresnel reflectance at normal incidence for dielectric surfaces. This replaces an explicit index of refraction

-

Scalar

[0..1]. -

For Non-Metallic Materials :

-

Should be set to 127 sRGB (0.5 linear, 4% reflectance) if you cannot find a proper value. Do not use values under 90 sRGB (0.35 linear, 2% reflectance).

-

-

For Metallic Materials :

-

Is ignored (calculated from the base color).

-

-

We will use the remapping for dielectric surfaces:

-

.

.

-

For

reflectance = 0.5,f0 = 0.04.

-

-

The goal is to map onto a range that can represent the Fresnel values of both common dielectric surfaces (4% reflectance) and gemstones (8% to 16%).

-

.

.

-

The mapping function is chosen to yield a 4% Fresnel reflectance value for an input reflectance of 0.5 (or 128 on a linear RGB gray scale).

-

.

.

-

No real world material has a value under 2%.

-

-

.

.

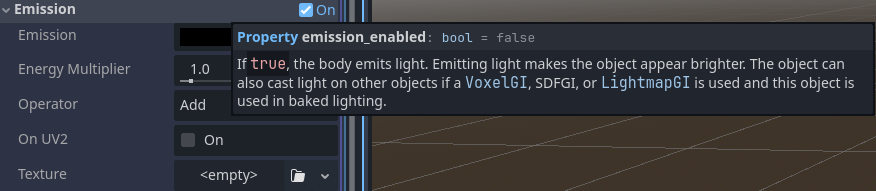

Emission

-

Additional diffuse albedo to simulate emissive surfaces (such as neons, etc.) This parameter is mostly useful in an HDR pipeline with a bloom pass

-

Linear RGB

[0..1]+ exposure compensation.

Normal Map, Displacement Map

-

Bump, Normal, Displacement, and Parallax Mapping .

-

Great video. No formulas or implementation, though.

-

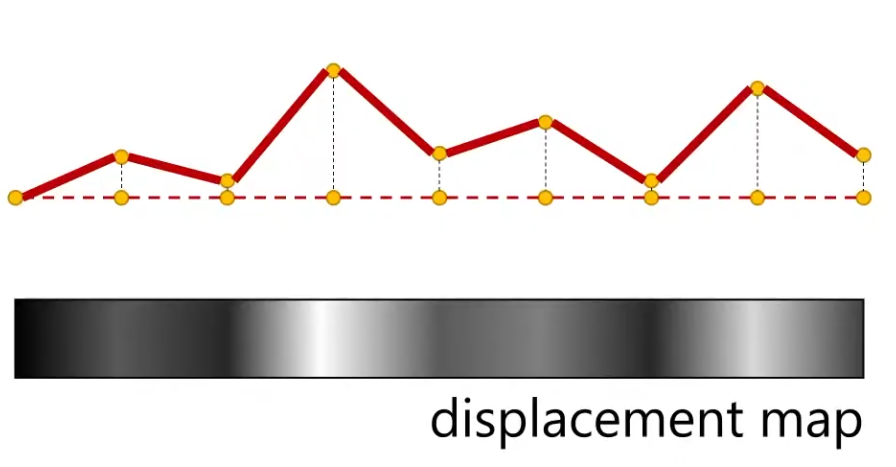

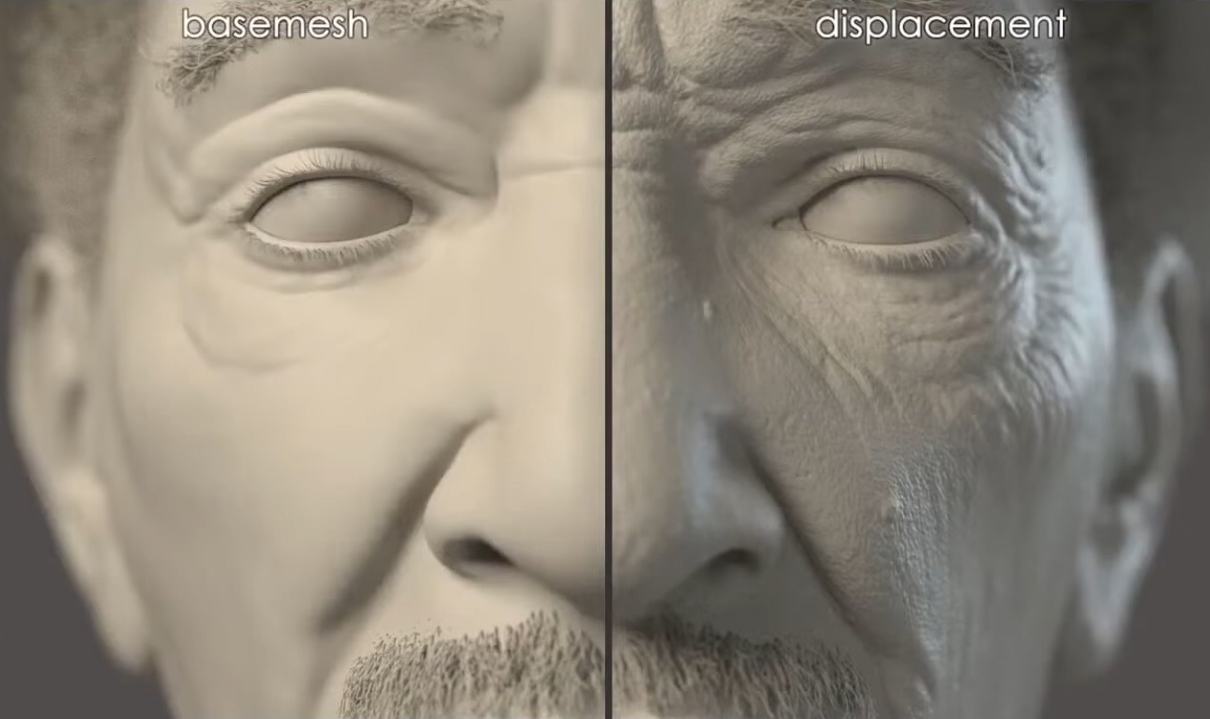

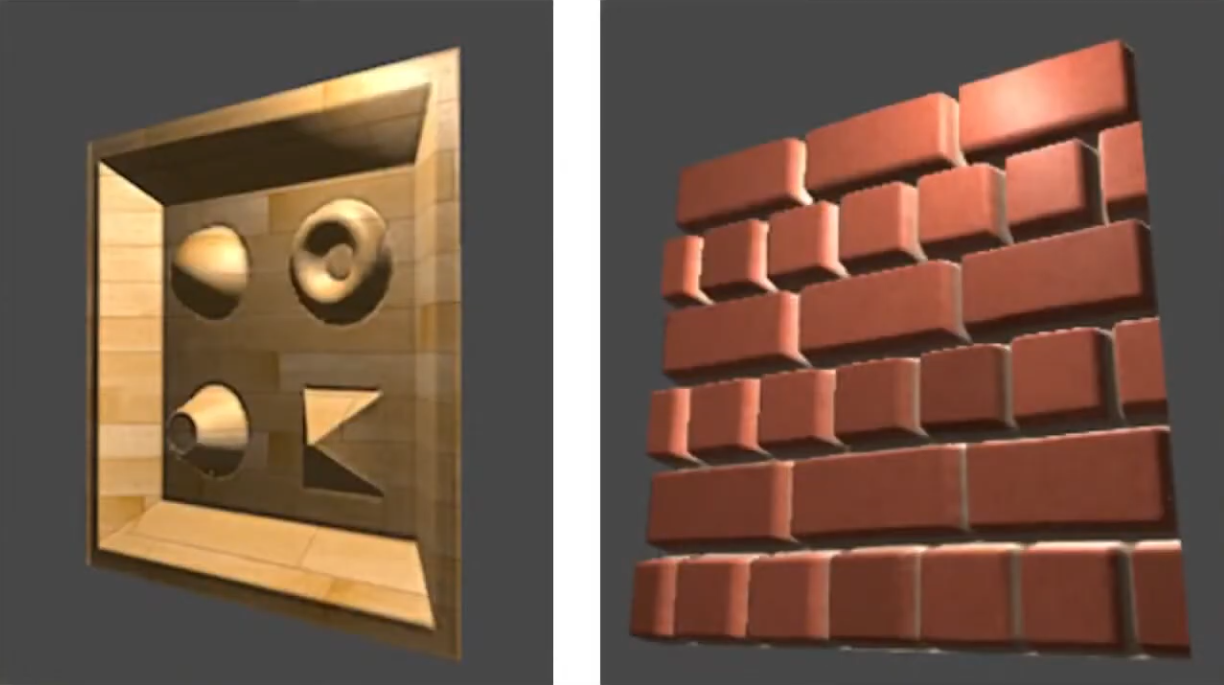

Displacement Map

-

Actually generate the geometry deformation; it's not faking anything.

-

A lot more expensive then Bump Map or Normal Map.

-

.

.

-

Requirements :

-

You can have a really high-res Displacement Map, but if you don't have enough vertices to displace, then you will not get the geometric detail.

-

This is not the same for Normal Maps; a high-res Normal Map give high detail, regardless of the vertex count.

-

-

-

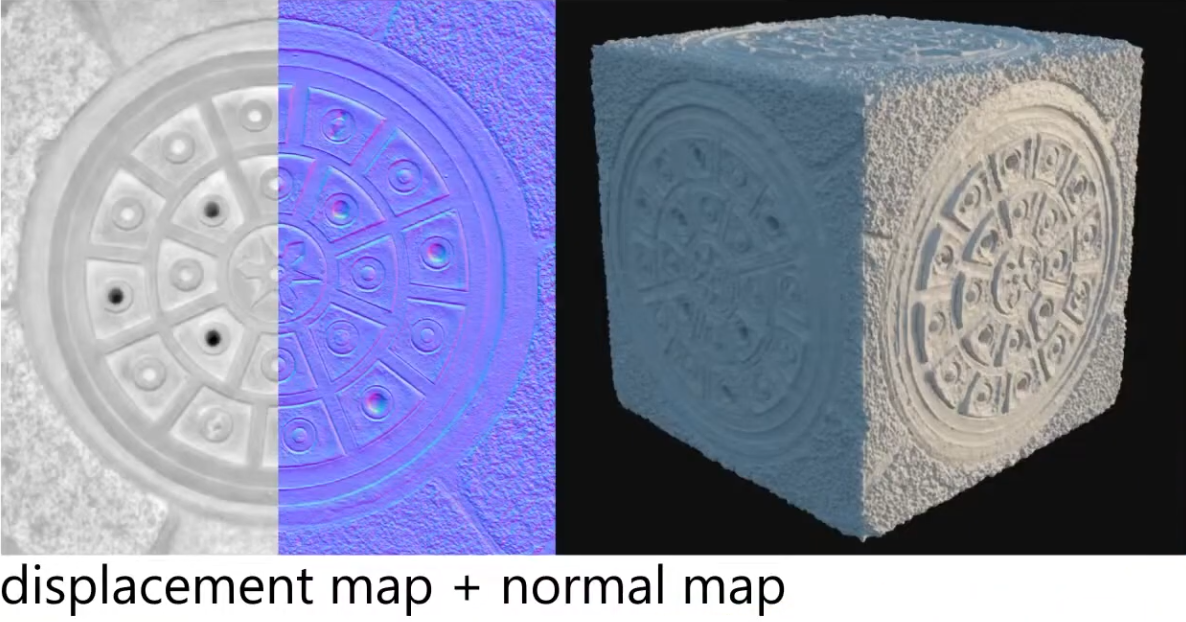

Displacement Map vs Displacement Map + Normal Map

-

The Displacement Map is usually used with a Normal Map.

-

Just by moving vertices around, you are not changing the normals. To see the visual changes, you need the normals that the geometry will have after the displacement.

-

How can I get the Normal Map? You can use the Displacement Map as a Bump Map, which will give you the information you need to get the Normal Map.

-

You should have a Normal Map if you intend to use the Displacement Map at runtime, as it's cheaper then having to calculate the normals on the fly.

-

-

.

.

-

You can use a Displacement Map without a Normal Map, but you need to "apply" the Displacement Map so you calculate the new normals after the Displacement.

-

If you don't apply (and thus re-calculate the surface normals), you will need a Normal Map.

-

In the end, you need the new normals, in one way or another.

-

-

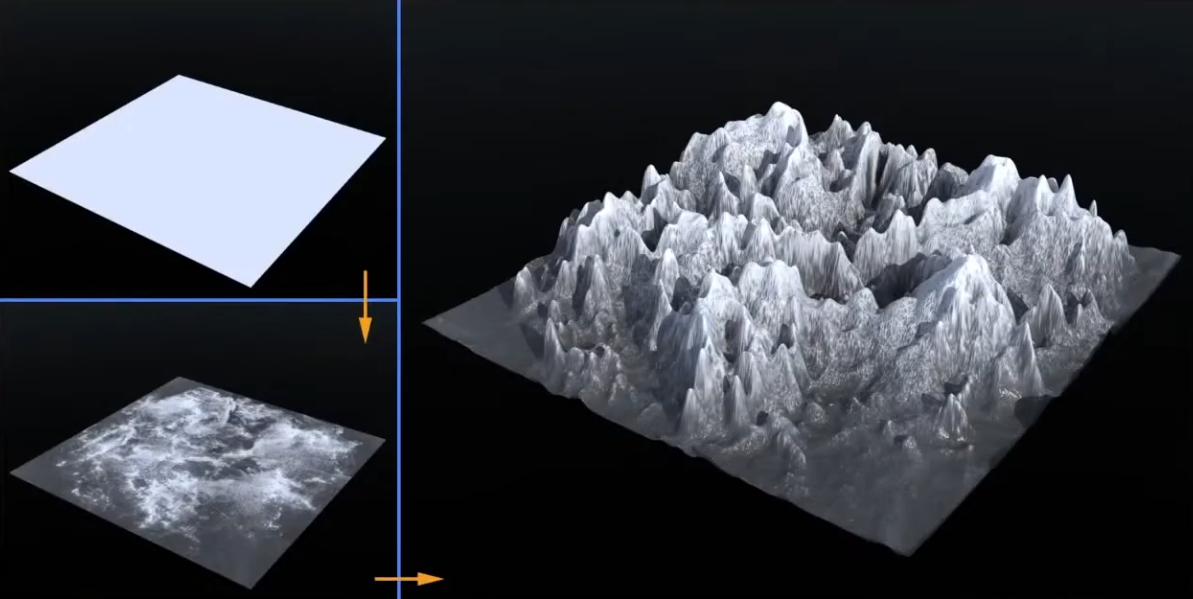

Used for :

-

Offline Terrain Generation.

-

.

.

-

-

Offline Sculptures.

-

Some fine details that are hard to model, and you may want geometry, instead of faking with a Normal Map.

-

.

.

-

-

-

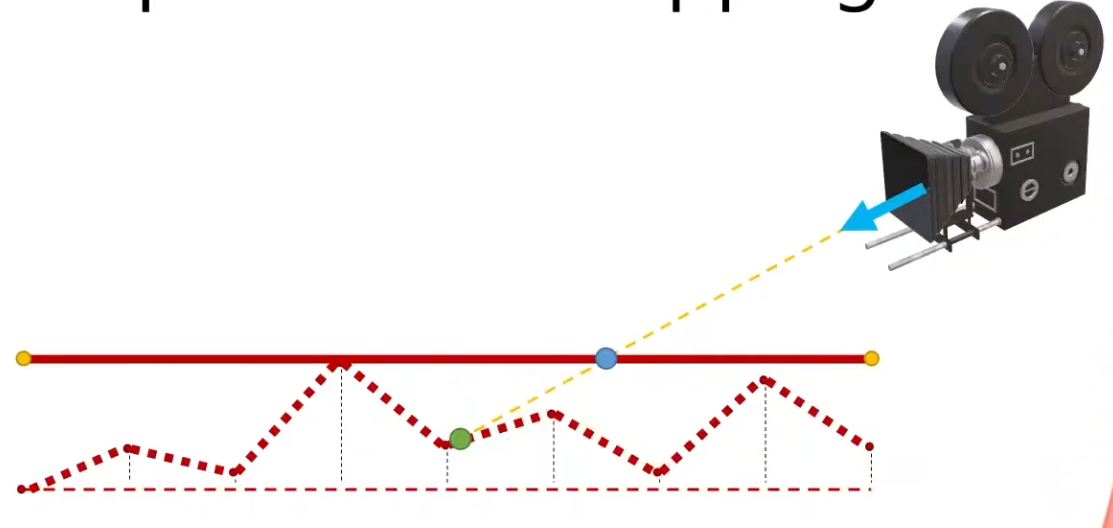

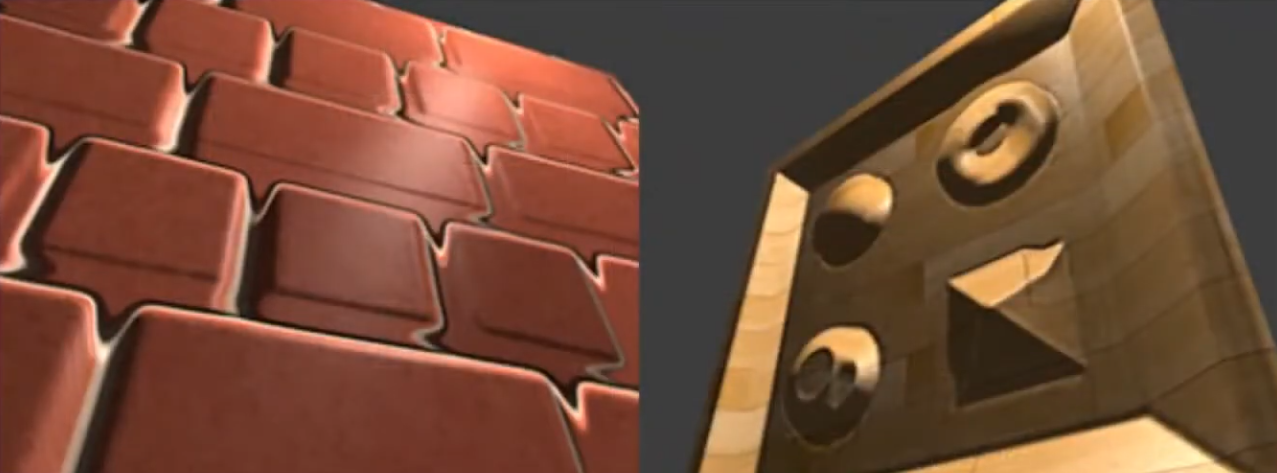

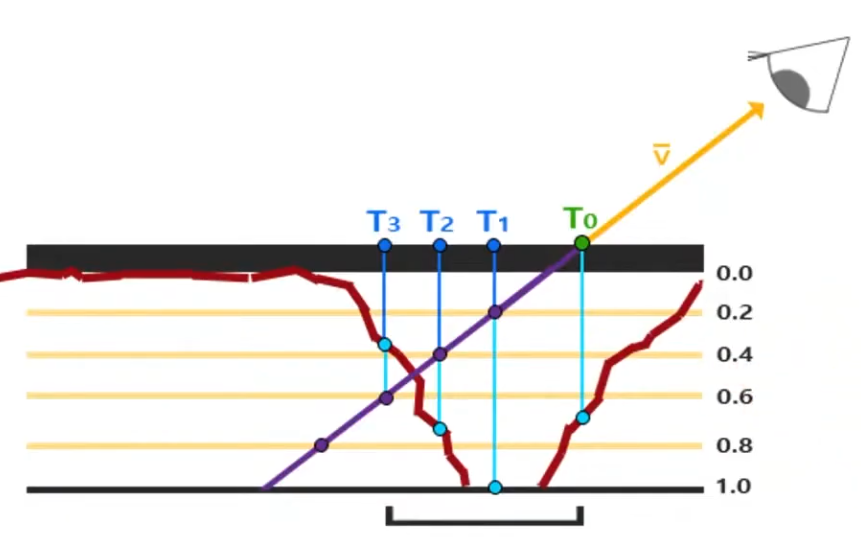

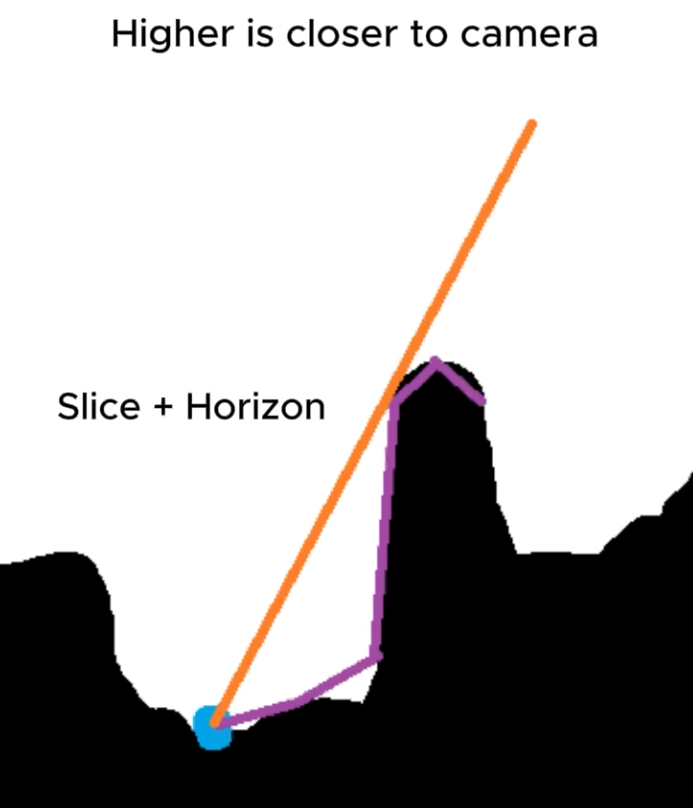

Per-Pixel Displacement Mapping :

-

It's a technique to get the visual of a displacement map, without generating new geometry.

-

The trick is not elevating the geometry with the Displacement Mapping, but "craving" the geometry.

-

.

.

-

Renders the green point, instead of the blue point.

-

-

This is a lot cheaper then having a Displacement Map generating the geometry, but still has a cost, as the fragment shader needs to figure out what pixel to actually shade.

-

-

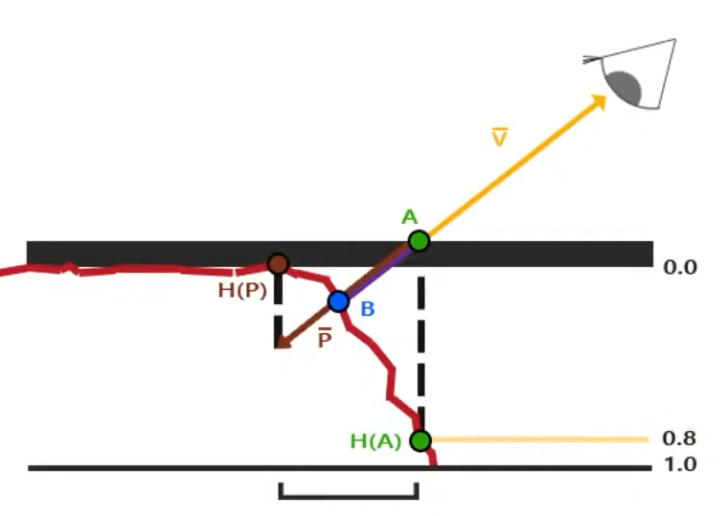

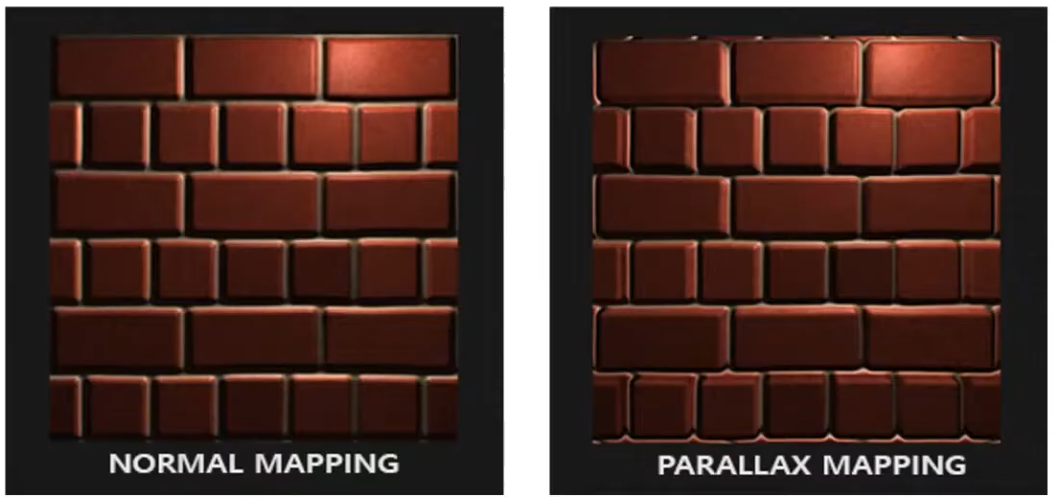

Parallax Mapping :

-

Is a way to approximate the result of Per-Pixel Displacement Mapping .

-

.

.

-

With Per-Pixel Displacement Mapping , the frag shader would have to figure out what's the correct point to shade. It will look for the blue point B.

-

With Parallax Mapping , the height between the A point and the correct height H(A) is used as an approximation to determine where the blue point B is. In this example, the technique misses the B point and reaches P, but this is the final pixel that will be drawn.

-

Even tho seems like a "big miss", the final visual looks fine.

-

-

-

.

.

-

.

.

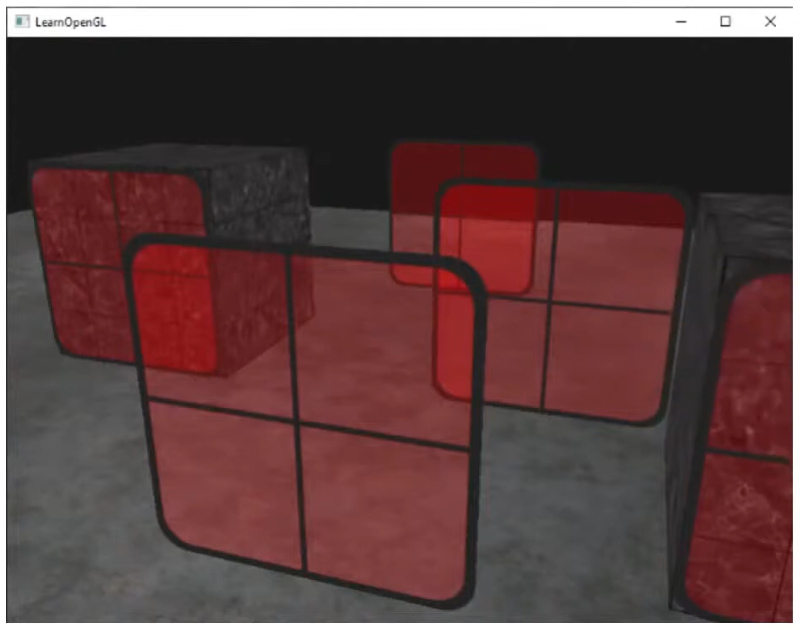

-

If the surface is rotated, things begin to not look so good.

-

Looking head on is better.

-

-

-

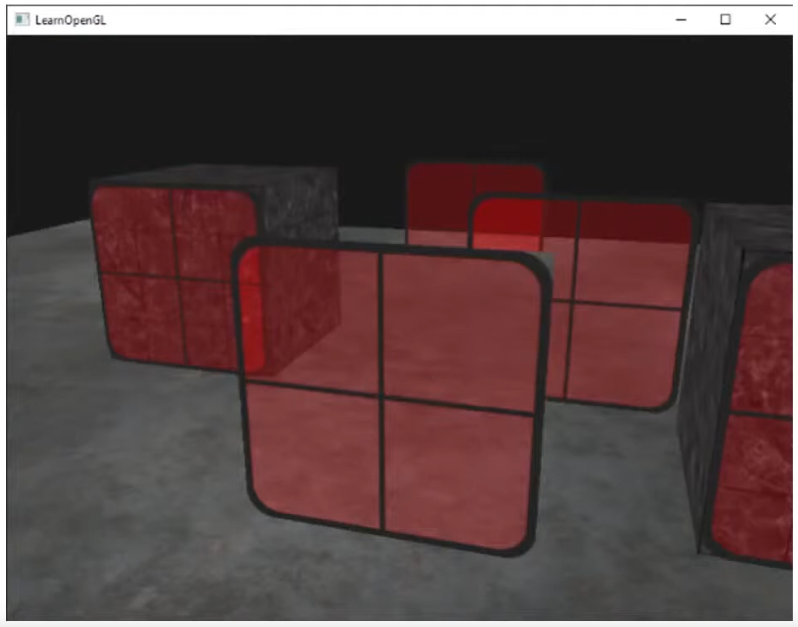

Steep Parallax Mapping :

-

A better approximation for Per-Pixel Displacement Mapping then Parallax Mapping , but a considerable more expensive then Parallax Mapping .

-

.

.

-

It does multiple texture reads instead of just one, in order to determine a better "stopping point" for the 'correct pixel to shade'.

-

-

.

.

-

It's better, but if the angle is too exagerated, the problem returns.

-

-

-

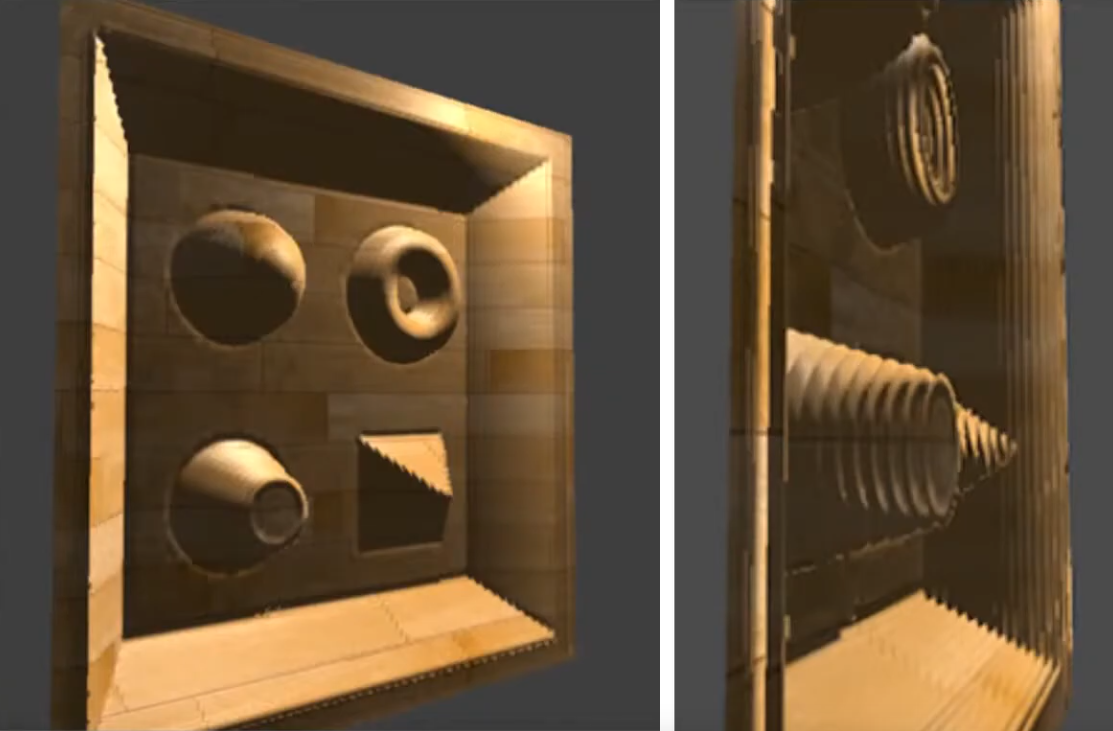

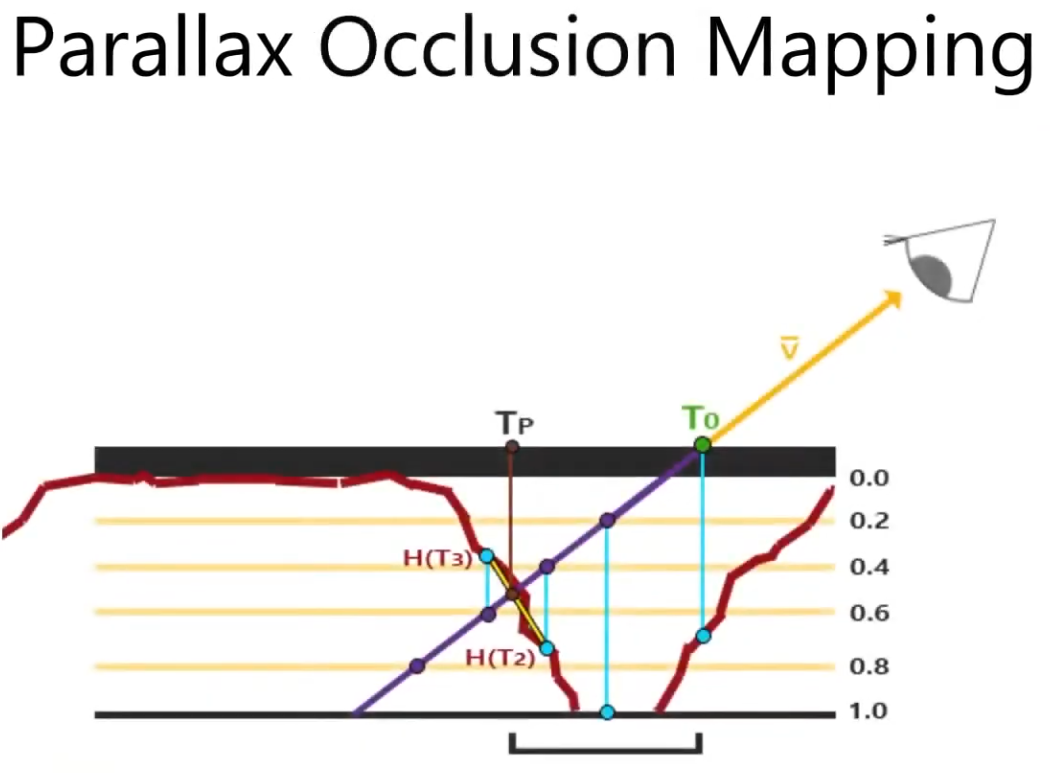

Parallax Occlusion Mapping :

-

A better approximation for Per-Pixel Displacement Mapping then Step Parallax Mapping , but a bit more expensive then Step Parallax Mapping .

-

.

.

-

It adds one extra step at the end of the Step Parallax Mapping evaluation.

-

This extra step doesn't perform any new texture read, it just better guesses the 'correct pixel to shade' based on the position of the previous step and the final step.

-

-

It gives more "continuity" for the guesses. It's smoother.

-

.

.

-

.

.

-

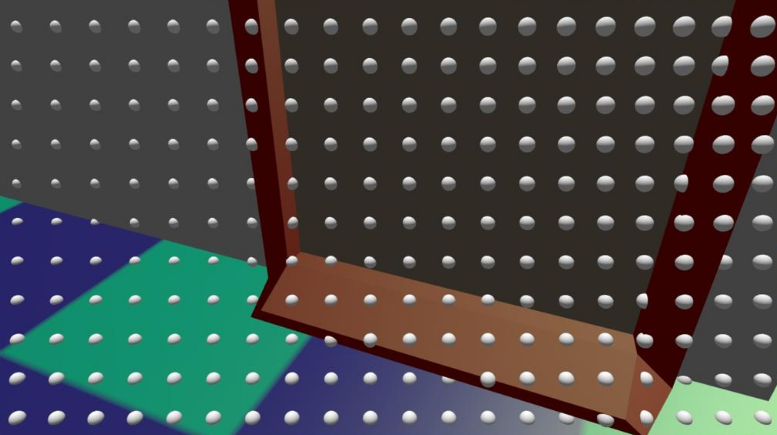

The stairs uses the Parallax Occlusion Mapping; it's just a flat plain.

-

-

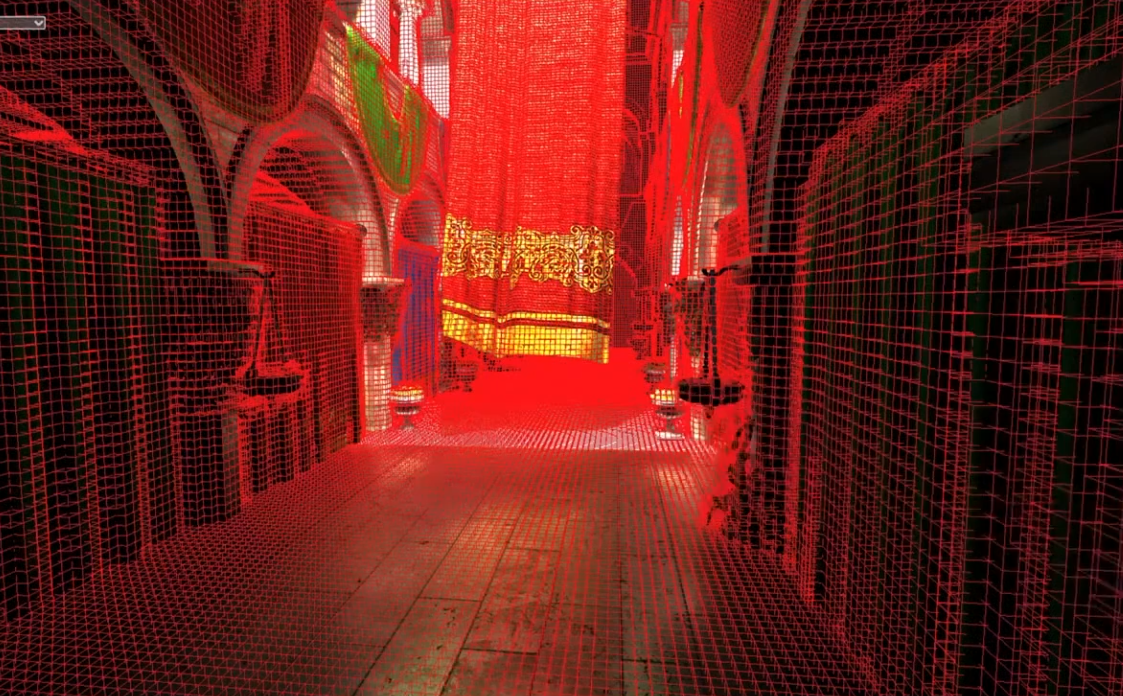

.

.

-

All the walls uses the Parallax Occlusion Mapping.

-

-

.

.

-

.

.

-

-

What about shadow casting?

-

Displacement Mapping and all techniques that approximate the result of Displacement Mapping can cast shadows.

-

.

.

-

-

WebGL Demo of different displacement techniques .

-

(2025-09-12) Didn't work on Firefox, Brave, or Chrome.

-

Normal Map

-

Tangent Space :

-

Tangent Space - Eric Lengyel .

-

(2025-09-15)

-

This is the one I chose to use.

-

RayLib does this same implementation in

GenMeshTangents.

-

-

-

-

The implementation is designed, specifically, to make the generation of tangent space as resilient as possible to a 3D model being moved from one application to another. That is generate the same tangent spaces even if there is a change in index list(s), ordering of faces/vertices of a face, and/or the removal of degenerate primitives. Both triangles and quads are supported.

-

This makes it easy for anyone to integrate the implementation into their own application and thus reproduce the same tangent spaces. This also makes the code a perfect candidate for an implementation standard. We hope the standard will be adopted by as many developers as possible.

-

The standard is used in Blender 2.57 and is also used by default in xNormal since version 3.17.5 in the form of a built-in tangent space plugin (binary and code).

-

-

-

Example .

-

-

Smooth Shading / Flat Shading :

-

Apparently my procedural Meshes are smooth by default, due to my implementation.

-

If adjacent faces share the same vertex a

-

If triangles do not share vertex normals (i.e., each triangle has its own vertex normal equal to the face normal), lighting will be flat (sharp edges between faces).nd that vertex has a single normal (an average), shading will be smooth across the faces.

-

-

Meshes coming from models may or may not be smooth, depending on how it was imported.

-

-

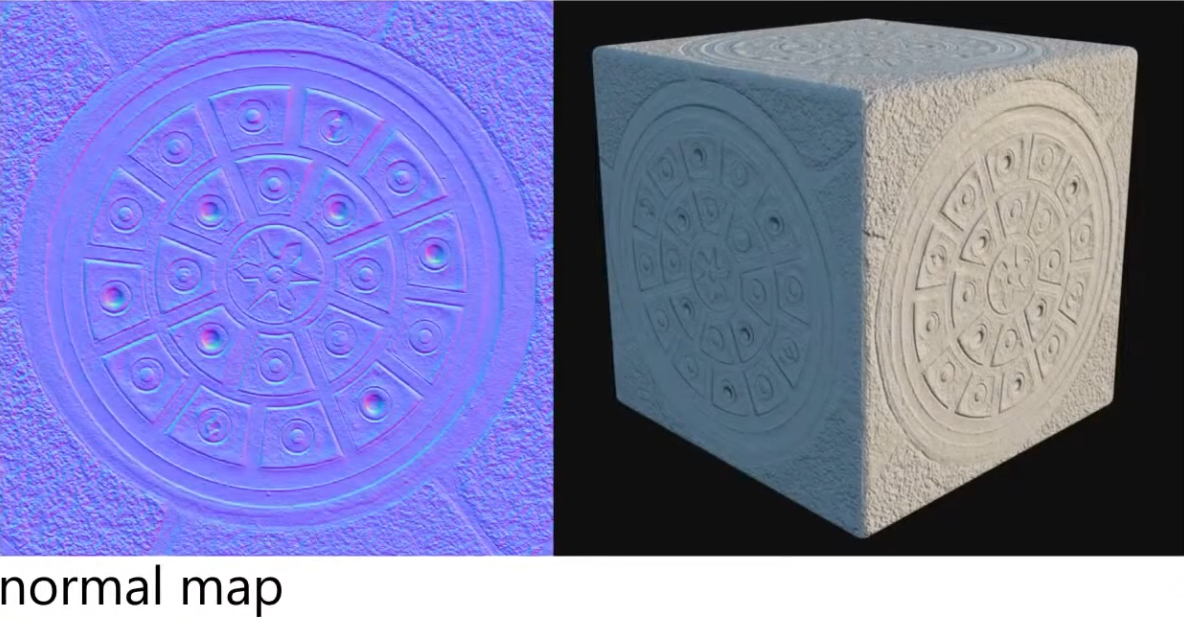

A RGB texture encoding surface normals (X, Y, Z).

-

Overrides per-pixel normal vectors, giving the illusion of complex surface detail under lighting without changing geometry.

-

Used for small details/deformations; doesn't work well for something that is too deep or elevated; the illusion breaks.

-

The coordinates from the Normal Map are actually in local coordinates from the point evaluated.

-

This make sense, as we want the normals to make sense, even if the character is moving.

-

This is why there's a lof of blue in a normal map. The more blue the map is, the less disturbed the normal of a point is.

-

-

Color intuition :

-

Red: inclining the normal towards the X direction (X+ == right).

-

Green: inclining towards the Y direction (Y+ == up).

-

Blue: not inclining.

-

-

.

.

-

Blending normal maps :

-

Reoriented normal mapping

vec3 t = texture(baseMap, uv).xyz * vec3( 2.0, 2.0, 2.0) + vec3(-1.0, -1.0, 0.0); vec3 u = texture(detailMap, uv).xyz * vec3(-2.0, -2.0, 2.0) + vec3( 1.0, 1.0, -1.0); vec3 r = normalize(t * dot(t, u) - u * t.z); return r;-

.

.

-

UDN Blending :

-

Its main advantage is the low number of shader instructions it requires.

-

While it leads to a reduction in details over flat areas, UDN blending is interesting if blending must be performed at runtime.

vec3 t = texture(baseMap, uv).xyz * 2.0 - 1.0; vec3 u = texture(detailMap, uv).xyz * 2.0 - 1.0; vec3 r = normalize(t.xy + u.xy, t.z); return r; -

-

~Height Map

-

Usually referred to as a Bump Map, but they aren't really the same.

-

Grayscale texture, encoding relative surface elevation; it represents the actual height/elevation values, not the variations as a Bump Map would do.

-

It can be used in different contexts:

-

To generate normals (essentially turning it into a bump/normal map).

-

To drive parallax mapping (screen-space depth illusion).

-

To drive displacement mapping (real geometric change).

-

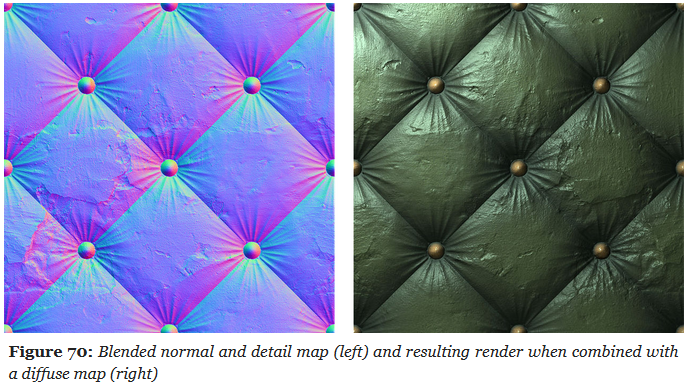

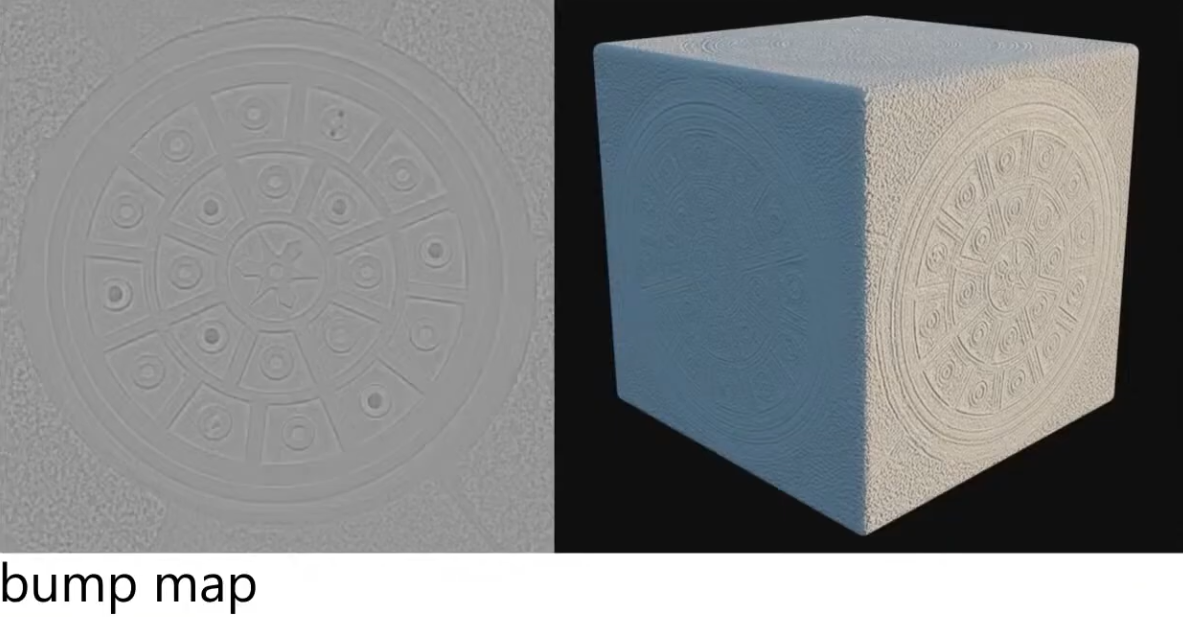

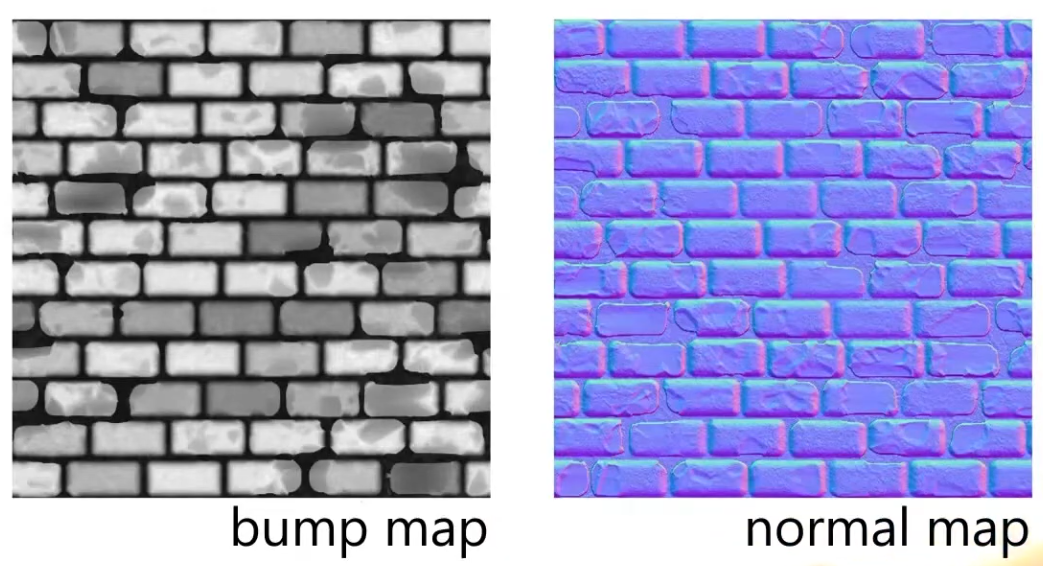

Bump Map

-

Grayscale texture where brightness represents surface height variations . White = high, black = low.

-

It does not store absolute "height," but only brightness variations that are sampled to compute a local slope.

-

No depth, no parallax.

-

Very lightweight (single-channel grayscale).

-

Typically used for adding simple surface detail in older or performance-constrained engines.

-

.

.

-

Requires additional texture reads. You have to know how the height is changing in regions around the current point.

-

"Would be nice to just pre-record the normals (as that what we actually want), instead of having to compute the normals through a Bump Map? Yes! That's why a Normal Map exists".

-

The Normal Map stores the normals, instead of the variation of the normals, like a Bump Map.

-

.

.

-

Visually, at runtime, they will look exactly the same; not always, but close enough; the parameters need to be the same.

-

-

Normal Map is the modernized version of a Bump Map.

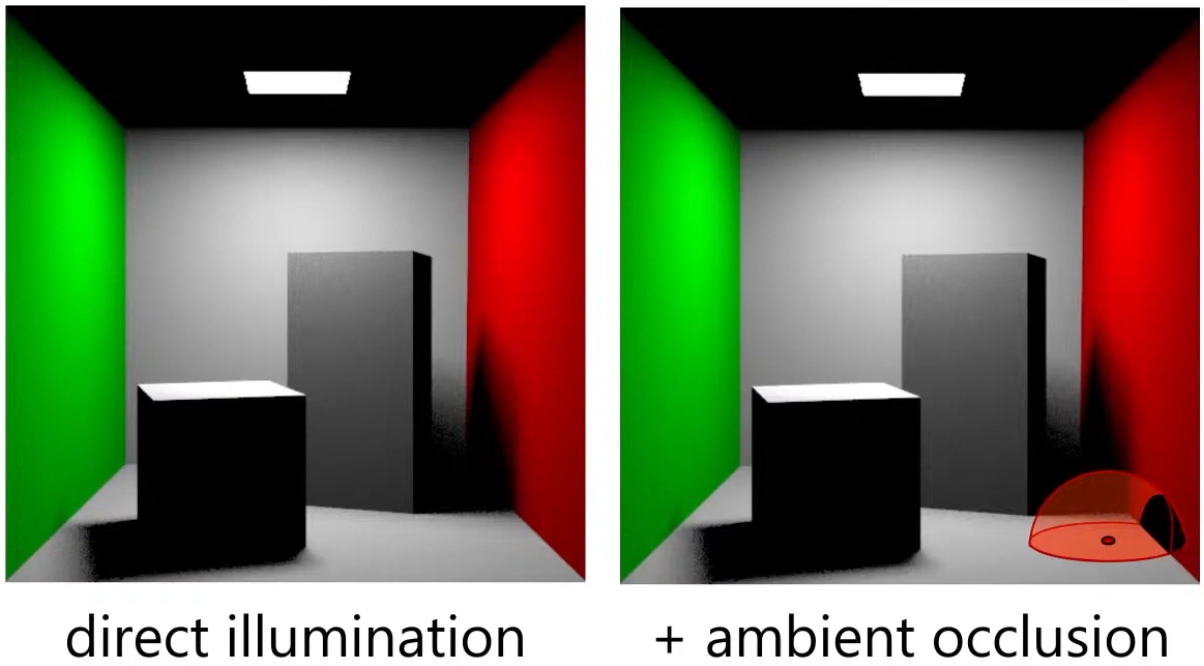

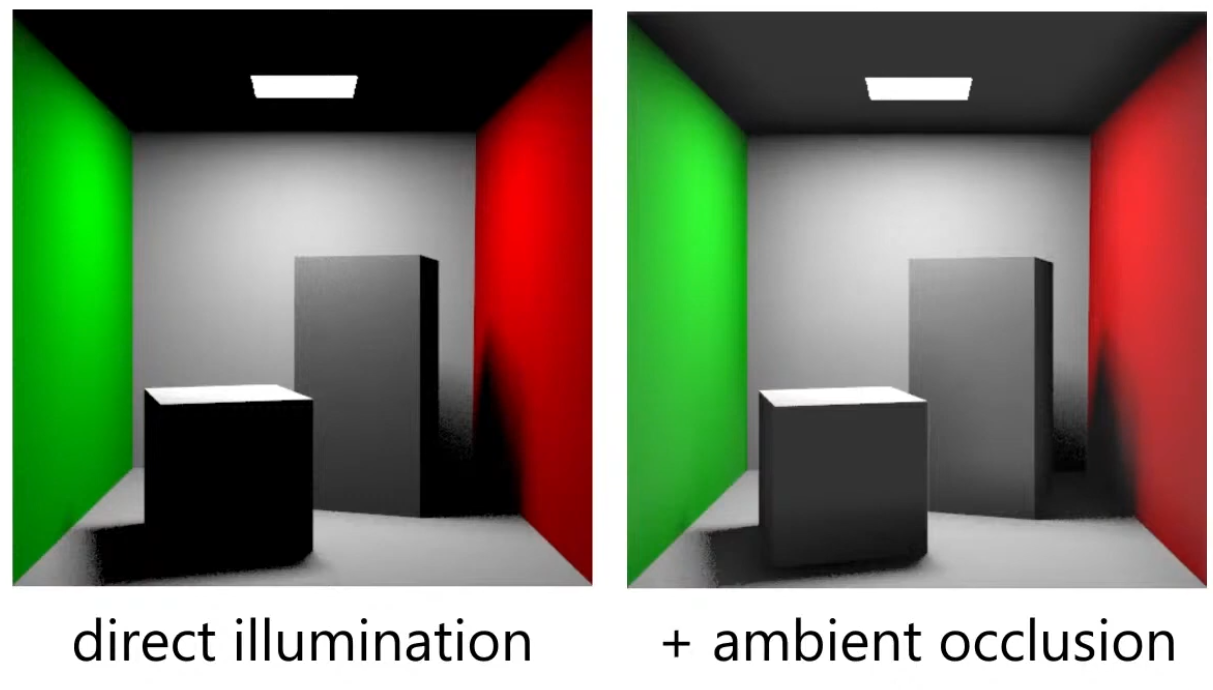

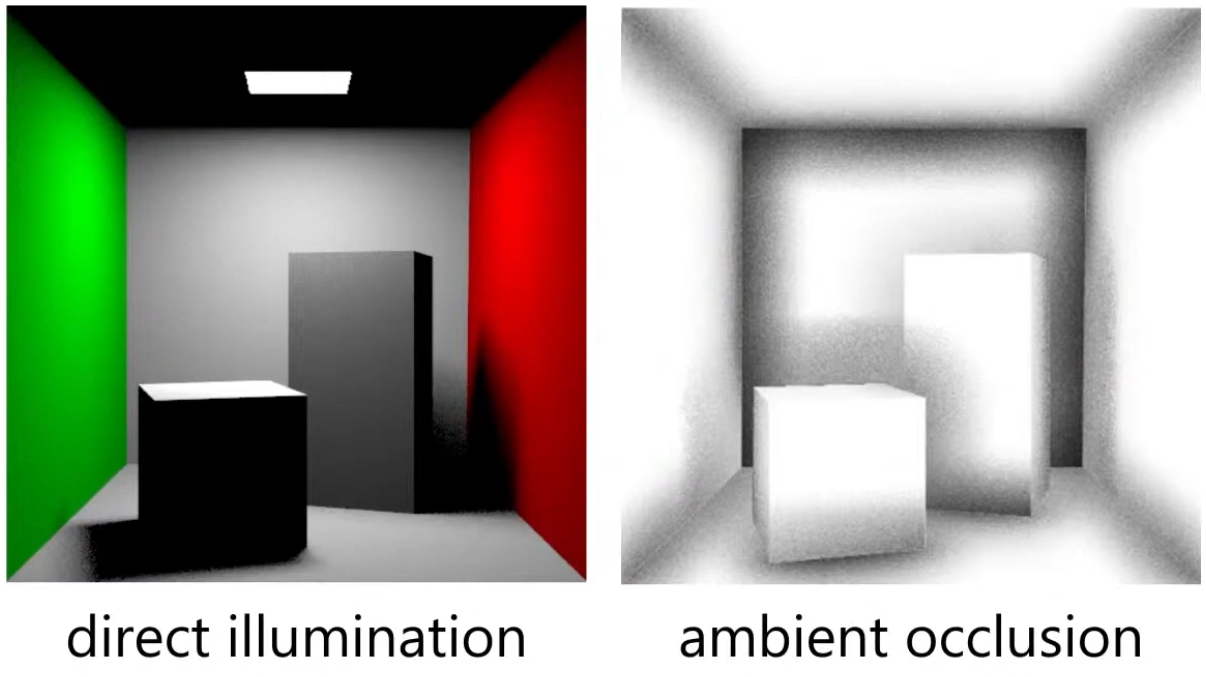

Ambient Occlusion Map

Parameter

-

Defines how much of the ambient light is accessible to a surface point. It is a per-pixel shadowing factor between 0.0 and 1.0.

-

Scalar

[0..1]. -

AO is an approximation of diffuse global illumination , focusing purely on occlusion from nearby geometry, approximating how exposed each point in a scene is to ambient lighting.

-

More specifically, it estimates the amount of indirect light that reaches a surface point by considering occlusion from nearby geometry.

-

Areas that are tightly enclosed or near other surfaces (e.g., corners, creases) receive less ambient light and appear darker.

-

Areas that are more open or exposed receive more ambient light and appear brighter.

-

-

AO is not full global illumination; it ignores directional light transport and color bleeding—it’s a simplified model to capture the general “shadowing” effect of ambient light.

-

The idea for Ambient Occlusion is to determine how bright or dark a region should be based on what is occluding it.

-

.

.

-

.

.

-

.

.

Ambient Occlusion Map

-

Diffuse :

// diffuse indirect vec3 indirectDiffuse = max(irradianceSH(n), 0.0) * Fd_Lambert(); // ambient occlusion indirectDiffuse *= texture2D(aoMap, outUV).r; -

Specular :

float f90 = clamp(dot(f0, 50.0 * 0.33), 0.0, 1.0); // cheap luminance approximation float f90 = clamp(50.0 * f0.g, 0.0, 1.0);float computeSpecularAO(float NoV, float ao, float roughness) { return clamp(pow(NoV + ao, exp2(-16.0 * roughness - 1.0)) - 1.0 + ao, 0.0, 1.0); } // specular indirect vec3 indirectSpecular = evaluateSpecularIBL(r, perceptualRoughness); // ambient occlusion float ao = texture2D(aoMap, outUV).r; indirectSpecular *= computeSpecularAO(NoV, ao, roughness); -

Horizon :

// specular indirect vec3 indirectSpecular = evaluateSpecularIBL(r, perceptualRoughness); // horizon occlusion with falloff, should be computed for direct specular too float horizon = min(1.0 + dot(r, n), 1.0); indirectSpecular *= horizon * horizon; -

Suggestions :

-

TLDR : Option 4 is the correct one, 3 is acceptable in the absence of IBL, and 1 is a non-physics-based hack.

-

~multiply only the albedo.

-

Blender does it this way.

-

I felt that it greatly increases the contrast in the object. Even in the most illuminated points, there are dark regions.

-

If you multiply the base color texture directly by AO (texture-level multiplication), you may inadvertently change the metallic F0 appearance. Avoid multiplying the base color used for F0 in the specular path; instead apply AO only to the diffuse/indirect parts.

-

-

~multiply only the diffuse.

-

I felt that it greatly increases the contrast in the object. Even in the most illuminated points, there are dark regions.

-

Do not multiply light_accumulation by AO — that would darken direct lighting and specular highlights.

-

-

~multiply the ambient_final

-

If you have no IBL yet, multiply the AO into ambient_final. Once you add IBL, multiply AO into the indirect diffuse term (the irradiance / ambient diffuse) and apply a reduced or rougness-weighted AO to the indirect specular if you want occlusion to affect glossy reflections.

-

-

apply only to ambient indirect:

// -> Indirect diffuse (irradiance) vec3 irradiance; // from diffuse irradiance probe / spherical harmonics / ambient color // the diffuse response (Lambertian) is usually: irradiance * albedo / PI vec3 ambient_diffuse = irradiance * (albedo * (1.0 / PI)); // apply AO to indirect diffuse (AO modulates the irradiance * albedo term) ambient_diffuse *= ao; // ->Indirect specular (IBL) // prefilteredSpecular and a BRDF LUT give you the specular IBL contribution: vec3 prefilteredColor; // sample prefiltered environment map with roughness vec2 brdfLUT; // result from split-sum integration: (scale, bias) vec3 ambient_specular = prefilteredColor * (brdfLUT.x * F0 + brdfLUT.y); // Optionally attenuate indirect specular by AO depending on roughness. // Rationale: very smooth surfaces reflect far-away environment less affected by local occluders. float specularAO = mix(1.0, ao, clamp(1.0 - roughness, 0.0, 1.0)); // lerp: smooth -> less AO effect ambient_specular *= specularAO; // -> Combine vec3 ambient_indirect = ambient_diffuse + ambient_specular; // final (linear) vec3 final_color = light_accumulation + ambient_indirect + emissive; return final_color;

-

Anisotropy

Parameter

-

Amount of anisotropy.

-

Scalar

[-1..1]. -

Note that negative values will align the anisotropy with the bitangent direction instead of the tangent direction.

-

.

.

-

For a rough metallic surface.

-

Anisotropy Specular BRDF

-

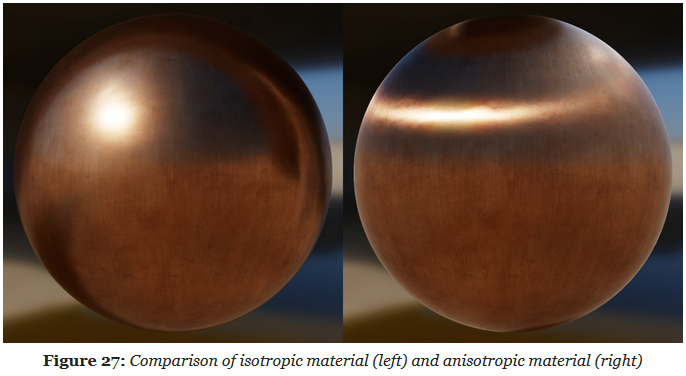

The standard material model described previously can only describe isotropic surfaces, that is, surfaces whose properties are identical in all directions. Many real-world materials, such as brushed metal, can, however, only be replicated using an anisotropic model.

-

.

.

-

The Isotropic Specular BRDF described previously can be modified to handle anisotropic materials.

-

Burley's anisotropic NDF :

float at = max(roughness * (1.0 + anisotropy), 0.001); float ab = max(roughness * (1.0 - anisotropy), 0.001); float D_GGX_Anisotropic(float NoH, const vec3 h, const vec3 t, const vec3 b, float at, float ab) { float ToH = dot(t, h); float BoH = dot(b, h); float a2 = at * ab; highp vec3 v = vec3(ab * ToH, at * BoH, a2 * NoH); highp float v2 = dot(v, v); float w2 = a2 / v2; return a2 * w2 * w2 * (1.0 / PI); } -

Anisotropic visibility function

float at = max(roughness * (1.0 + anisotropy), 0.001);

float ab = max(roughness * (1.0 - anisotropy), 0.001);

float V_SmithGGXCorrelated_Anisotropic(float at, float ab, float ToV, float BoV,

float ToL, float BoL, float NoV, float NoL) {

float lambdaV = NoL * length(vec3(at * ToV, ab * BoV, NoV));

float lambdaL = NoV * length(vec3(at * ToL, ab * BoL, NoL));

float v = 0.5 / (lambdaV + lambdaL);

return saturateMediump(v);

}

Clear Coat

Parameter

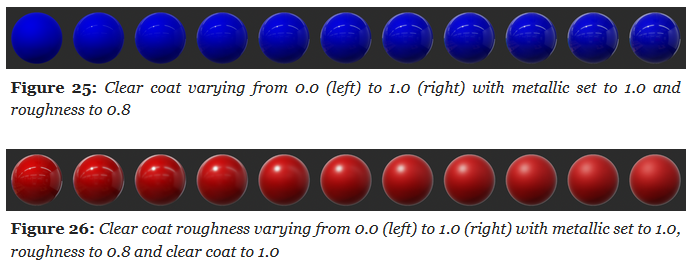

-

Clear Coat :

-

Strength of the clear coat layer.

-

Scalar

[0..1].

-

-

Clear Coat Roughness :

-

Perceived smoothness or roughness of the clear coat layer.

-

Scalar

[0..1].

-

-

.

.

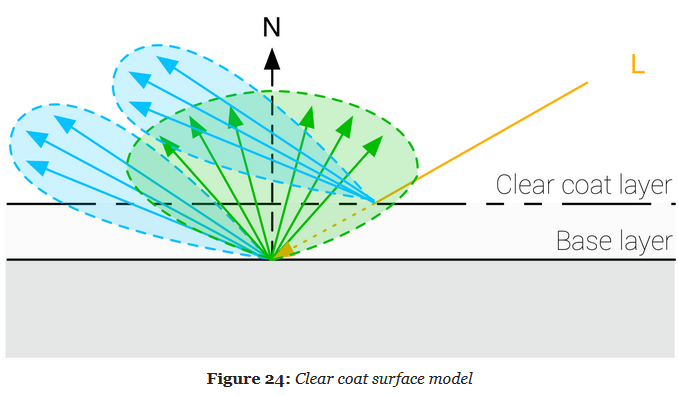

Clear Coat Specular BRDF

-

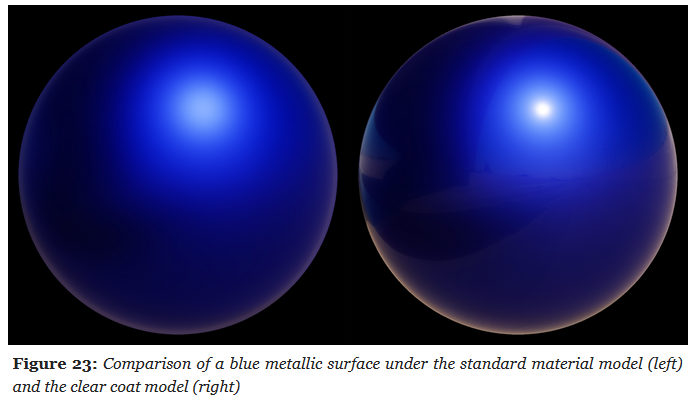

The standard material model is a good fit for isotropic surfaces made of a single layer.

-

Multi-layer materials are fairly common, particularly materials with a thin translucent layer over a standard layer.

-

Examples :

-

Car paints, soda cans, lacquered wood, acrylic, etc.

-

-

.

.

-

A clear coat layer can be simulated as an extension of the standard material model by adding a second specular lobe, which implies evaluating a second specular BRDF.

-

To simplify the implementation and parameterization, the clear coat layer will always be isotropic and dielectric.

-

Our model will however not simulate inter reflection and refraction behaviors.

-

.

.

-

It's a Cook-Torrance specular microfacet model, with a GGX normal distribution function, a Kelemen visibility function, and a Schlick Fresnel function.

-

Kelemen visibility term :

float V_Kelemen(float LoH) { return 0.25 / (LoH * LoH); }

Sheen

Parameters

-

Color :

-

Specular tint to create two-tone specular fabrics (defaults to 0.04 to match the standard reflectance).

-

-

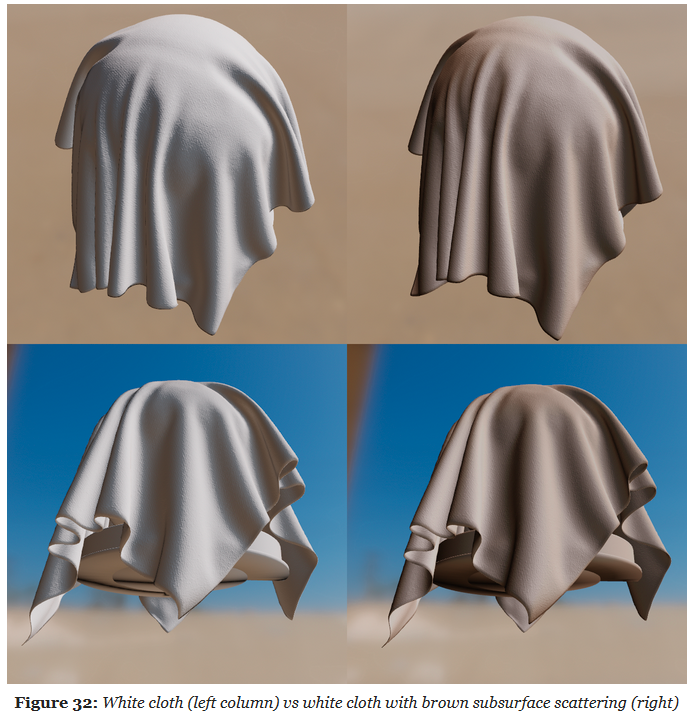

Subsurface Color :

-

Tint for the diffuse color after scattering and absorption through the material.

-

To create a velvet-like material, the base color can be set to black (or a dark color). Chromaticity information should instead be set on the sheen color. To create more common fabrics such as denim, cotton, etc. use the base color for chromaticity and use the default sheen color or set the sheen color to the luminance of the base color.

-

Cloth Specular BRDF

-

All the material models described previously are designed to simulate dense surfaces, both at a macro and at a micro level. Clothes and fabrics are however often made of loosely connected threads that absorb and scatter incident light. The microfacet BRDFs presented earlier do a poor job of recreating the nature of cloth due to their underlying assumption that a surface is made of random grooves that behave as perfect mirrors. When compared to hard surfaces, cloth is characterized by a softer specular lobe with a large falloff and the presence of fuzz lighting, caused by forward/backward scattering. Some fabrics also exhibit two-tone specular colors (velvets for instance).

-

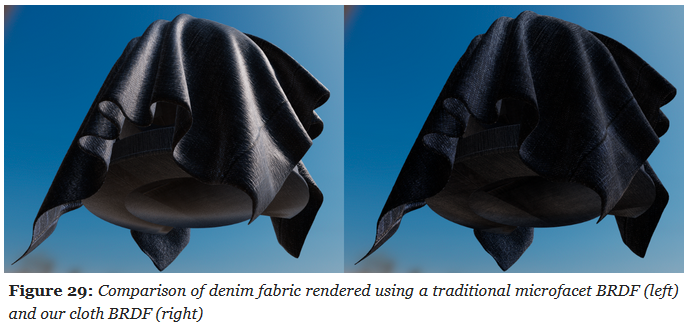

A traditional microfacet BRDF fails to capture the appearance of a sample of denim fabric. The surface appears rigid (almost plastic-like), more similar to a tarp than a piece of clothing. This figure also shows how important the softer specular lobe caused by absorption and scattering is to the faithful recreation of the fabric.

-

.

.

-

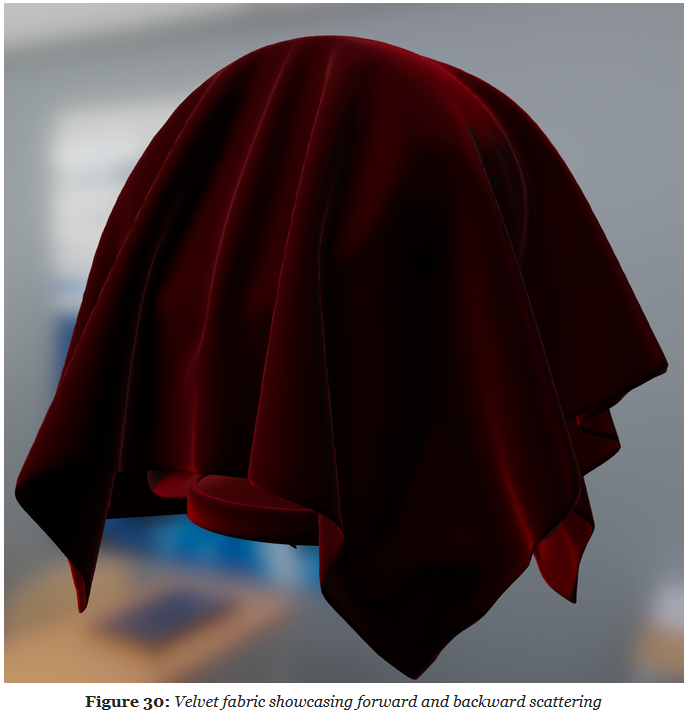

Velvet is an interesting use case for a cloth material model. As shown below, this type of fabric exhibits strong rim lighting due to forward and backward scattering. These scattering events are caused by fibers standing straight at the surface of the fabric. When the incident light comes from the direction opposite to the view direction, the fibers will forward-scatter the light. Similarly, when the incident light from the same direction as the view direction, the fibers will scatter the light backward.

-

.

.

-

The cloth specular BRDF we use is a modified microfacet BRDF as described by Ashikhmin and Premoze .

-

Ashikhmin's Velvet NDF :

float D_Ashikhmin(float roughness, float NoH) { // Ashikhmin 2007, "Distribution-based BRDFs" float a2 = roughness * roughness; float cos2h = NoH * NoH; float sin2h = max(1.0 - cos2h, 0.0078125); // 2^(-14/2), so sin2h^2 > 0 in fp16 float sin4h = sin2h * sin2h; float cot2 = -cos2h / (a2 * sin2h); return 1.0 / (PI * (4.0 * a2 + 1.0) * sin4h) * (4.0 * exp(cot2) + sin4h); } -

Charlie NDF :

-

Optimized to properly fit in half float formats.

float D_Charlie(float roughness, float NoH) { // Estevez and Kulla 2017, "Production Friendly Microfacet Sheen BRDF" float invAlpha = 1.0 / roughness; float cos2h = NoH * NoH; float sin2h = max(1.0 - cos2h, 0.0078125); // 2^(-14/2), so sin2h^2 > 0 in fp16 return (2.0 + invAlpha) * pow(sin2h, invAlpha * 0.5) / (2.0 * PI); } -

Cloth Diffuse BRDF

-

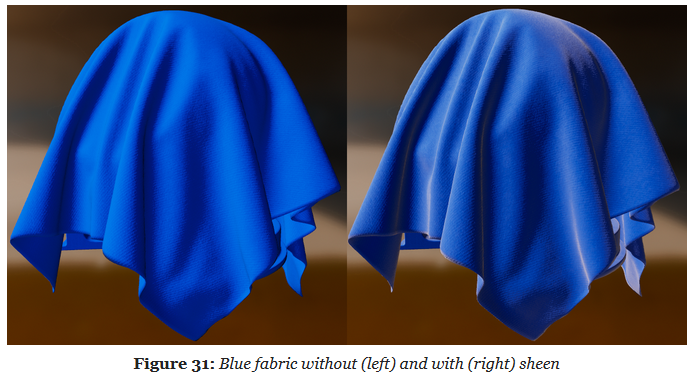

Sheen :

-

To offer better control over the appearance of cloth and to give users the ability to recreate two-tone specular materials, we introduce the ability to directly modify the specular reflectance.

-

.

.

-

-

Subsurface Scattering :

-

.

.

-

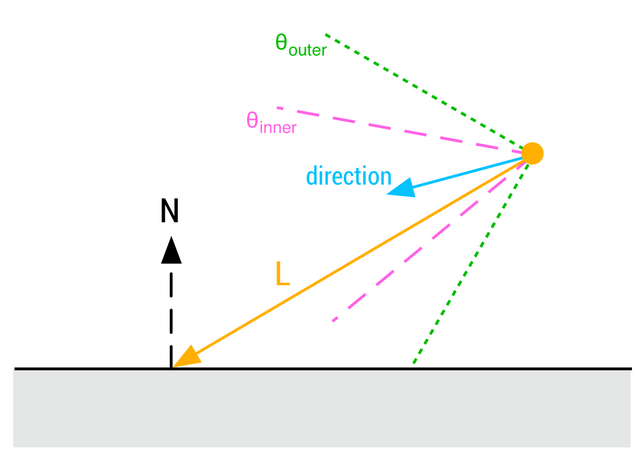

Direct Lighting

Parametrization

-

To simplify the implementation, all luminous powers will converted to luminous intensities () before being sent to the shader. The conversion is light dependent and is explained in the previous sections.

-

Type :

-

Directional, point, spot or area

-

Can be inferred from other parameters (e.g. a point light has a length, radius, inner angle and outer angle of 0).

-

-

Direction :

-

Used for directional lights, spot lights, photometric point lights, and linear and tubular area lights (orientation)

-

-

Color :

-

The color of emitted light, as a linear RGB color. Can be specified as an sRGB color or a color temperature in the tools

-

-

Intensity :

-

The light's brightness. The unit depends on the type of light

-

-

Falloff radius :

-

Maximum distance of influence

-

-

Inner angle :

-

Angle of the inner cone for spot lights, in degrees

-

-

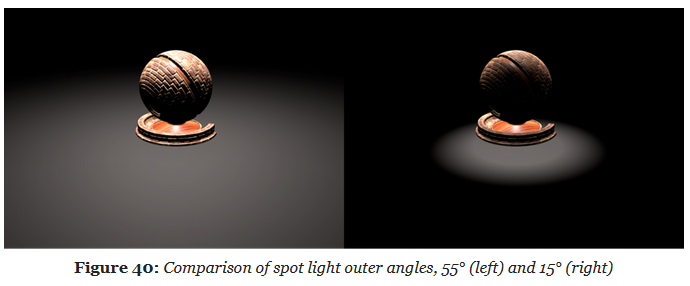

Outer angle :

-

Angle of the outer cone for spot lights, in degrees

-

-

Length :

-

Length of the area light, used to create linear or tubular lights

-

-

Radius :

-

Radius of the area light, used to create spherical or tubular lights

-

-

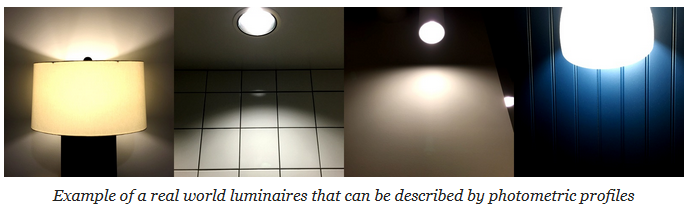

Photometric profile :

-

Texture representing a photometric light profile, works only for punctual lights

-

-

Masked profile :

-

Boolean indicating whether the IES profile is used as a mask or not. When used as a mask, the light's brightness will be multiplied by the ratio between the user specified intensity and the integrated IES profile intensity. When not used as a mask, the user specified intensity is ignored but the IES multiplier is used instead

-

-

Photometric multiplier :

-

Brightness multiplier for photometric lights (if IES as mask is turned off)

-

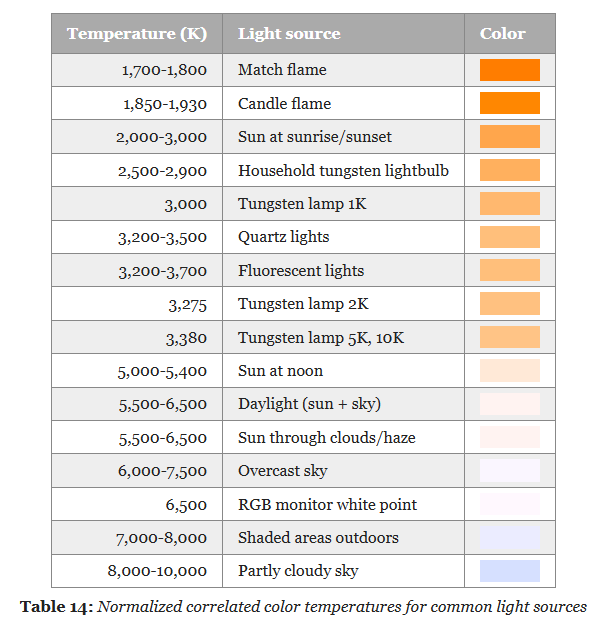

Color Temperature

-

.

.

-

I got a little lost about this. See this session .

-

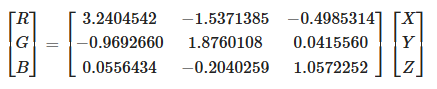

Convert from the XYZ space to linear RGB with a simple 3×3 matrix.

-

Conversion using the inverse matrix for the sRGB color space:

-

.

.

-

-

The result of these operations is a linear RGB triplet in the sRGB color space.

-

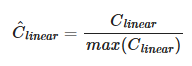

Since we care about the chromaticity of the results, we must apply a normalization step to avoid clamping values greater than 1.0 and distort resulting colors:

-

.

.

-

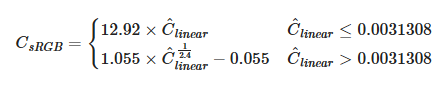

We must finally apply the sRGB opto-electronic conversion function (OECF) to obtain a displayable value (the value should remain linear if passed to the renderer for shading).

-

.

.

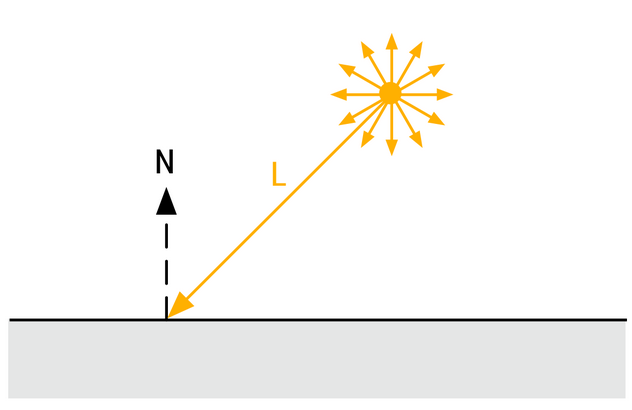

Directional Lights

-

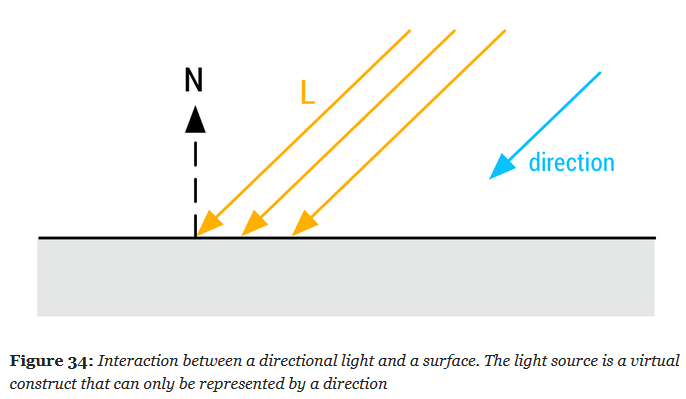

The main purpose of directional lights is to recreate important light sources for outdoor environment, i.e. the sun and/or the moon. While directional lights do not truly exist in the physical world, any light source sufficiently far from the light receptor can be assumed to be directional

-

.

.

-

.

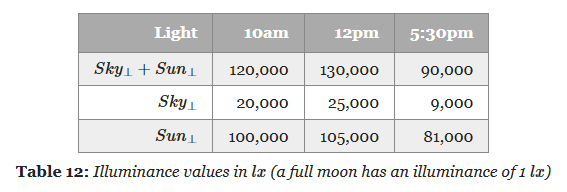

.

-

Illuminance is measured with the unit Lux ($lx$); $lx$ is the symbol, like $W$ for Watts .

.

.

-

-

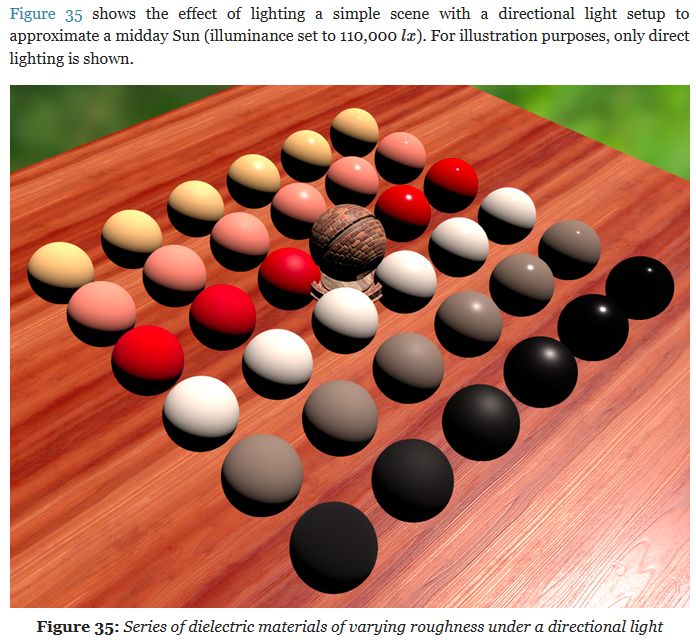

Dynamic directional lights are particularly cheap to evaluate at runtime.

vec3 l = normalize(-lightDirection);

float NoL = clamp(dot(n, l), 0.0, 1.0);

// lightIntensity is the illuminance

// at perpendicular incidence in lux

float illuminance = lightIntensity * NoL;

vec3 luminance = BSDF(v, l) * illuminance;

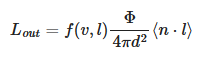

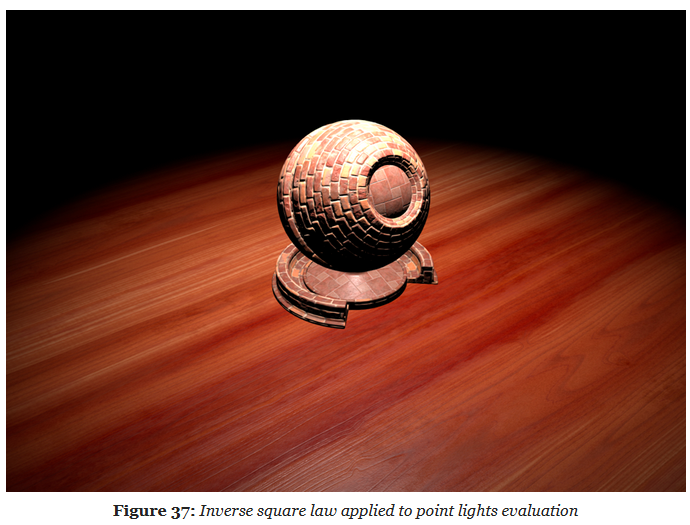

Punctual Lights

-

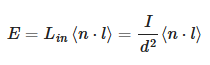

For punctual lights following the inverse square law, we use:

-

.

.

-

Where $d$ is the distance from a point at the surface to the light.

Point Lights

-

.

.

-

.

.

-

.

.

-

Physically based punctual lights :

-

Note that the light intensity used in this piece of code is the luminous intensity in , converted from the luminous power CPU-side. This snippet is not optimized and some of the computations can be offloaded to the CPU (for instance the square of the light's inverse falloff radius, or the spot scale and angle).

float getSquareFalloffAttenuation(vec3 posToLight, float lightInvRadius) { float distanceSquare = dot(posToLight, posToLight); float factor = distanceSquare * lightInvRadius * lightInvRadius; float smoothFactor = max(1.0 - factor * factor, 0.0); return (smoothFactor * smoothFactor) / max(distanceSquare, 1e-4); } float getSpotAngleAttenuation(vec3 l, vec3 lightDir, float innerAngle, float outerAngle) { // the scale and offset computations can be done CPU-side float cosOuter = cos(outerAngle); float spotScale = 1.0 / max(cos(innerAngle) - cosOuter, 1e-4) float spotOffset = -cosOuter * spotScale float cd = dot(normalize(-lightDir), l); float attenuation = clamp(cd * spotScale + spotOffset, 0.0, 1.0); return attenuation * attenuation; } vec3 evaluatePunctualLight() { vec3 l = normalize(posToLight); float NoL = clamp(dot(n, l), 0.0, 1.0); vec3 posToLight = lightPosition - worldPosition; float attenuation; attenuation = getSquareFalloffAttenuation(posToLight, lightInvRadius); attenuation *= getSpotAngleAttenuation(l, lightDir, innerAngle, outerAngle); vec3 luminance = (BSDF(v, l) * lightIntensity * attenuation * NoL) * lightColor; return luminance; } vec3 l = normalize(-lightDirection); float NoL = clamp(dot(n, l), 0.0, 1.0); // lightIntensity is the illuminance // at perpendicular incidence in lux float illuminance = lightIntensity * NoL; vec3 luminance = BSDF(v, l) * illuminance; -

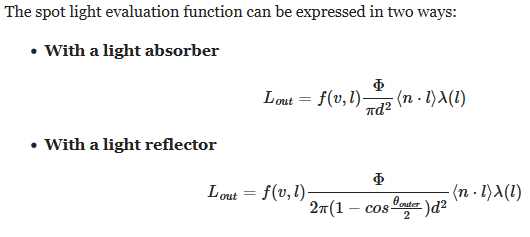

Spot Lights

-

.

.

-

-

.

.

Photometric Lights

-

.

.

-

.

.

-

Implementation:

float getPhotometricAttenuation(vec3 posToLight, vec3 lightDir) {

float cosTheta = dot(-posToLight, lightDir);

float angle = acos(cosTheta) * (1.0 / PI);

return texture2DLodEXT(lightProfileMap, vec2(angle, 0.0), 0.0).r;

}

vec3 evaluatePunctualLight() {

vec3 l = normalize(posToLight);

float NoL = clamp(dot(n, l), 0.0, 1.0);

vec3 posToLight = lightPosition - worldPosition;

float attenuation;

attenuation = getSquareFalloffAttenuation(posToLight, lightInvRadius);

attenuation *= getSpotAngleAttenuation(l, lightDirection, innerAngle, outerAngle);

attenuation *= getPhotometricAttenuation(l, lightDirection);

// This is the addition to the Punctual Light. It requires a lightProfileMap, etc.

float luminance = (BSDF(v, l) * lightIntensity * attenuation * NoL) * lightColor;

return luminance;

}

-

The light intensity is computed CPU-side and depends on whether the photometric profile is used as a mask.

float multiplier;

// Photometric profile used as a mask

if (photometricLight.isMasked()) {

// The desired intensity is set by the artist

// The integrated intensity comes from a Monte-Carlo

// integration over the unit sphere around the luminaire

multiplier = photometricLight.getDesiredIntensity() /

photometricLight.getIntegratedIntensity();

} else {

// Multiplier provided for convenience, set to 1.0 by default

multiplier = photometricLight.getMultiplier();

}

// The max intensity in cd comes from the IES profile

float lightIntensity = photometricLight.getMaxIntensity() * multiplier;

Mobile Adaptations

Pre-Expose Lights

-

"How to store and handle the large range of values produced by the lighting code?"

-

Assuming computations performed at full precision in the shaders, we still want to be able to store the linear output of the lighting pass in a reasonably sized buffer (

RGB16For equivalent). -

The most obvious and easiest way to achieve this is to simply apply the camera exposure before writing out the result of the lighting pass.

-

Pre-exposing lights allows the entire shading pipeline to use half precision floats.

-

In practice we pre-expose the following lights:

-

Punctual lights (point and spot):

-

on the GPU

-

-

Directional light:

-

on the CPU

-

-

IBLs:

-

on the CPU

-

-

Material emissive:

-

on the GPU

-

-

-

This can be easily done with:

fragColor = luminance * camera.exposure;

-

But, this requires intermediate computations to be performed with single precision floats.

-

We would instead prefer to perform all (or at least most) of the lighting work using half precision floats instead.

-

Doing so can greatly improve performance and power usage, particularly on mobile devices. Half precision floats are however ill-suited for this kind of work as common illuminance and luminance values (for the sun for instance) can exceed their range.

-

The solution is to simply pre-expose the lights themselves instead of the result of the lighting pass.

-

This can be done efficiently on the CPU if updating a light's constant buffer is cheap.

-

This can also be done on the GPU , like so:

// The inputs must be highp/single precision, // both for range (intensity) and precision (exposure) // The output is mediump/half precision float computePreExposedIntensity(highp float intensity, highp float exposure) { return intensity * exposure; } Light getPointLight(uint index) { Light light; uint lightIndex = // fetch light index; // the intensity must be highp/single precision highp vec4 colorIntensity = lightsUniforms.lights[lightIndex][1]; // pre-expose the light light.colorIntensity.w = computePreExposedIntensity( colorIntensity.w, frameUniforms.exposure); return light; }

Shadows

Shadow Map

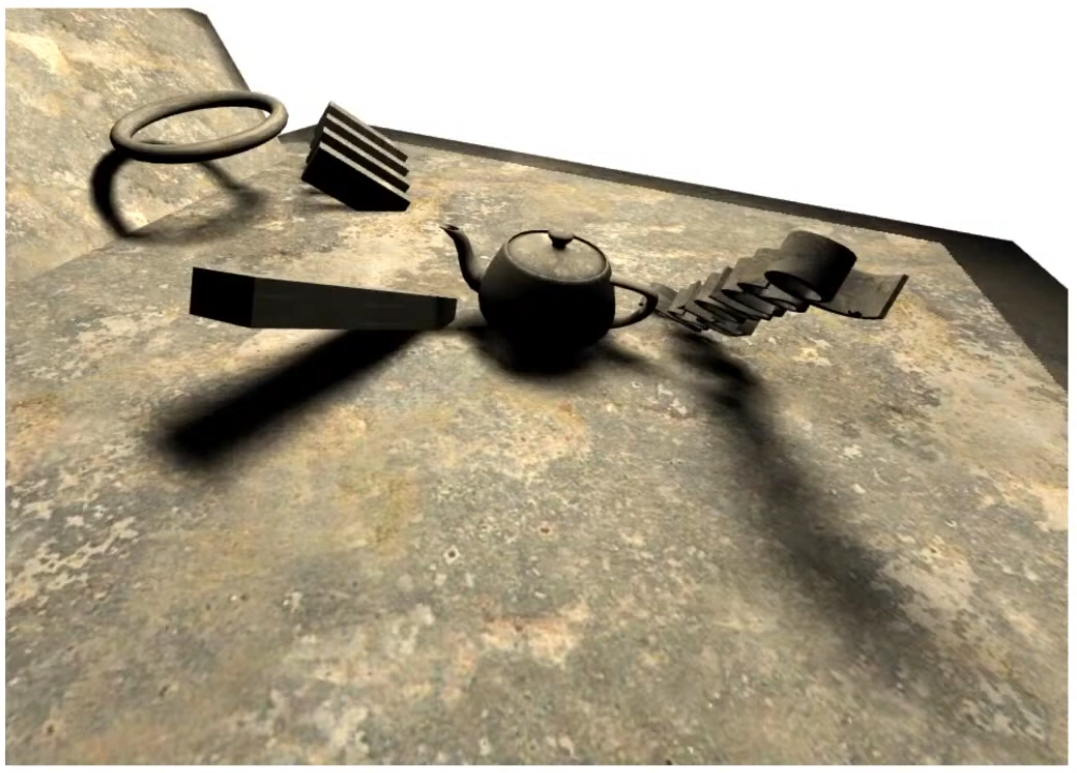

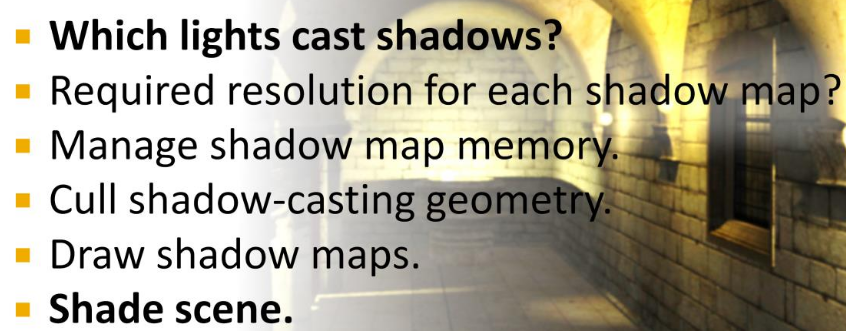

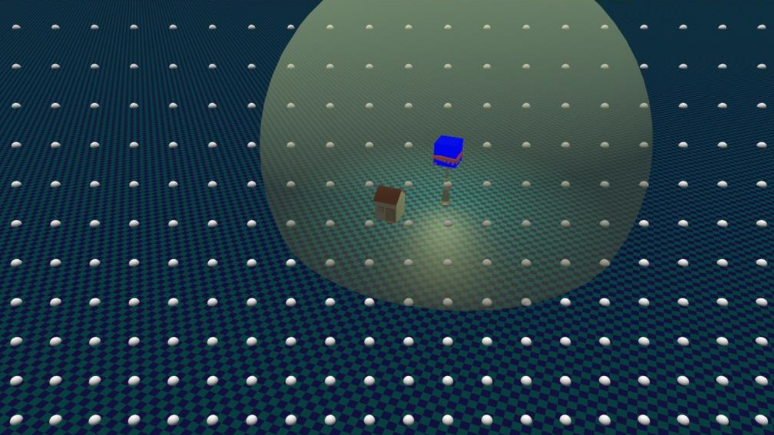

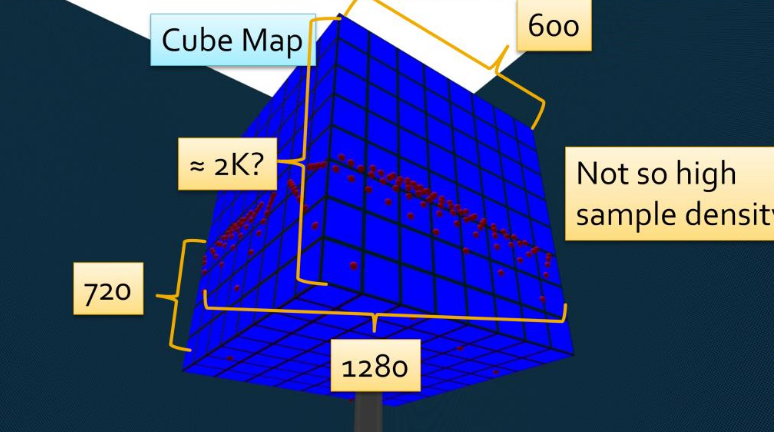

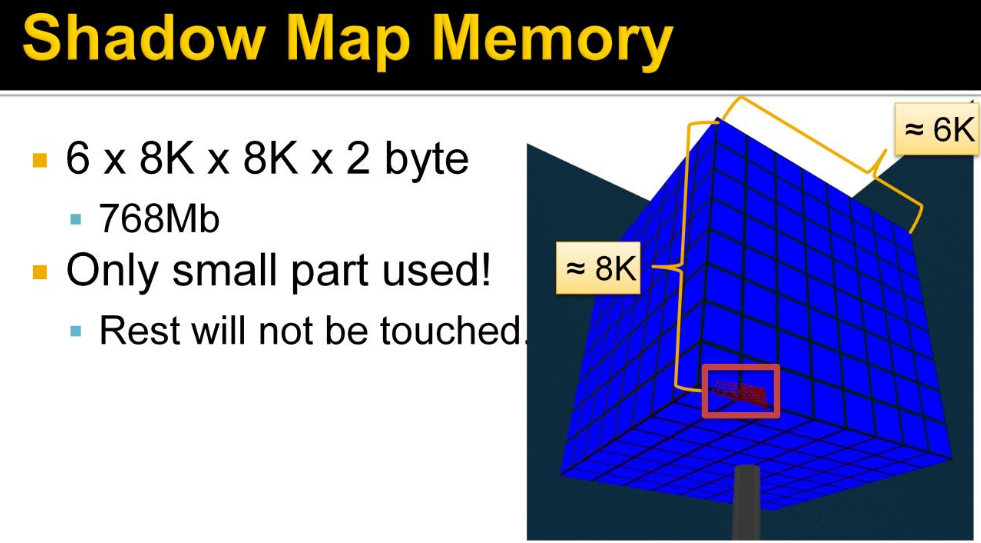

-

"The scene is first rendered at the point of view of the light, and the result of that image is stored in a image called Shadow Map".

-

It stores the depth map.

-

-

"If the light source doesn't 'see' something, then color the pixel dark".

-

The final result is a pixelated shadow. To improve this, PCF (Percentage Closer Filtering) can be done.

-

4x4 PCF is ok, but adding offsets helps in randomness and makes the effect more natural.

-

-

"You want the shadow area to be smallest as possible, while containing all the objects in the camera's view frustum".

-

-

The following techniques are implemented:

-

Cascaded Shadow Maps

-

Stabilized Cascaded Shadow Maps

-

Automatic Cascade Fitting based on depth buffer analysis, as in Sample Distribution Shadow Maps .

-

Various forms of Percentage Closer Filtering

-

Exponential Variance Shadow Maps (EVSM).

-

-

-

Improved shadows using dithering and temporal supersampling .

Percentage Closer Filtering (PCF)

-

.

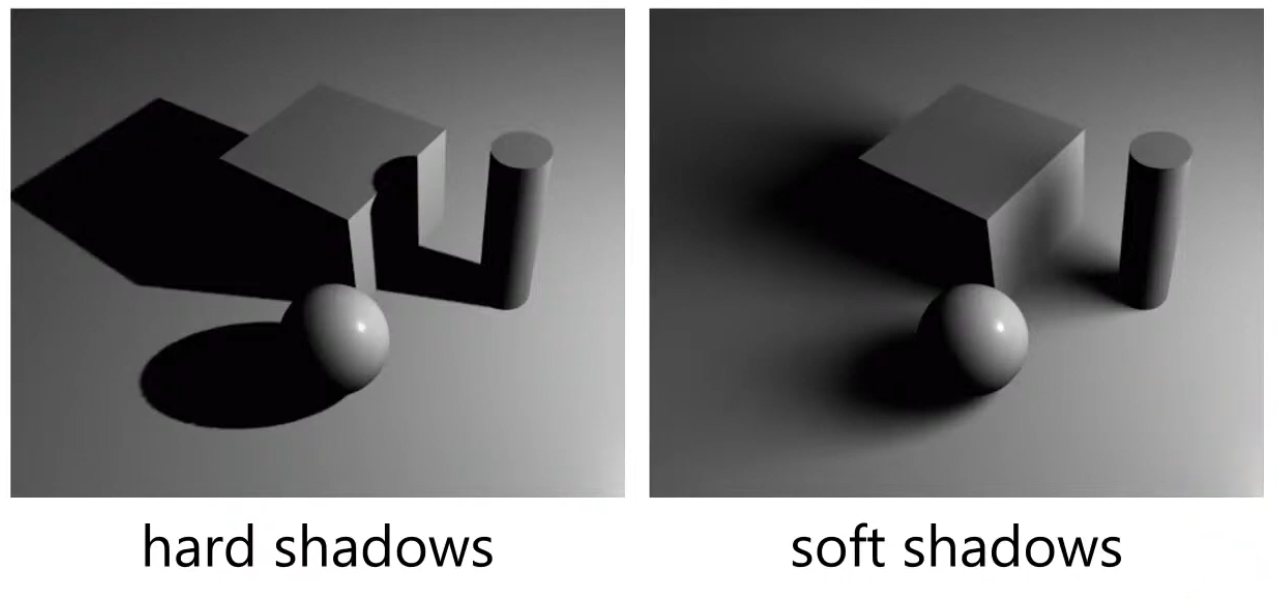

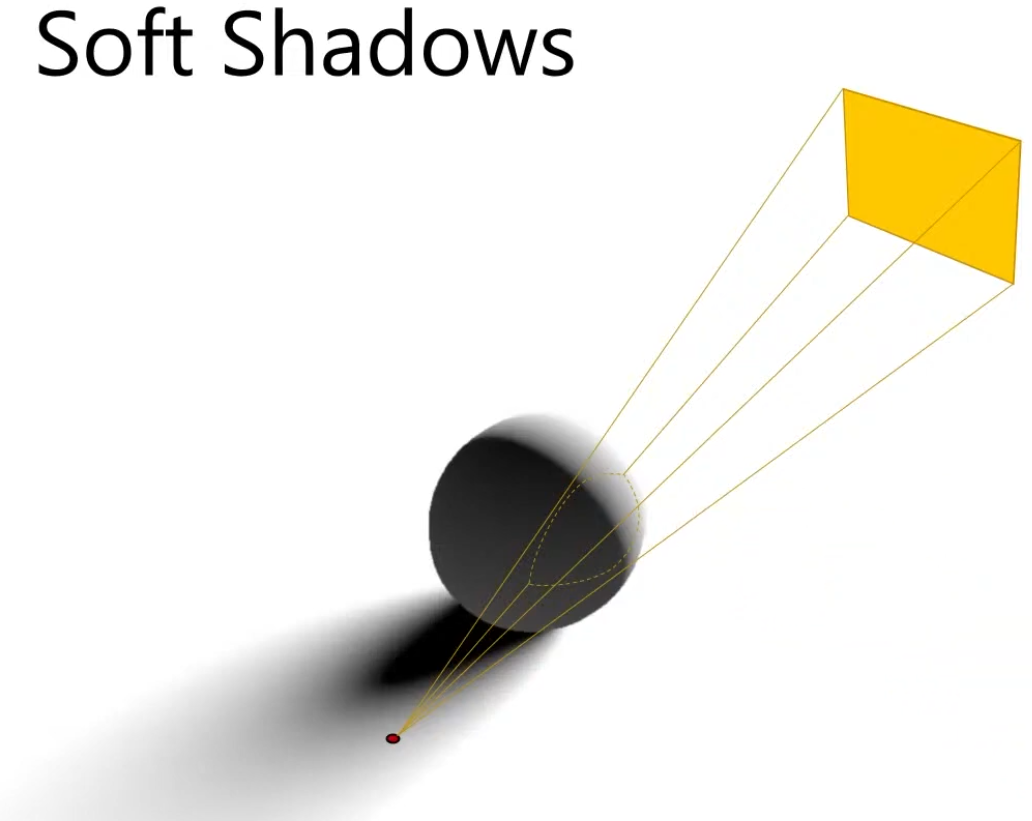

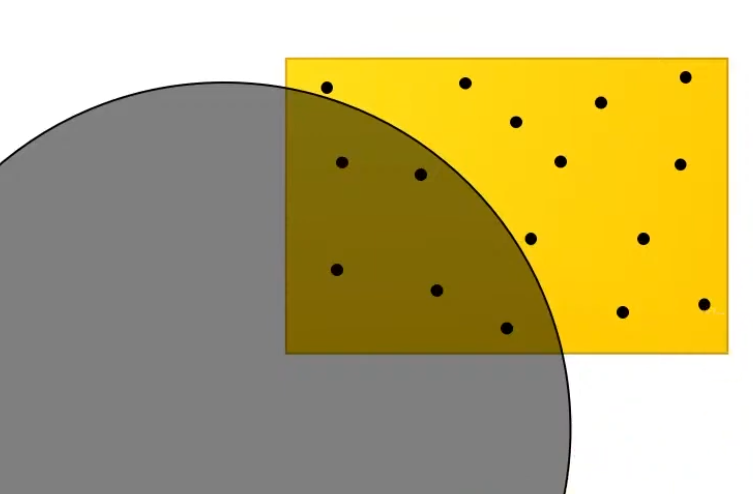

Soft-Shadows

-

Usual for area lights.

-

.

.

-

.

.

-

We will not do proper shading from this light source, but only compute what percentage of the area of the light is covered.

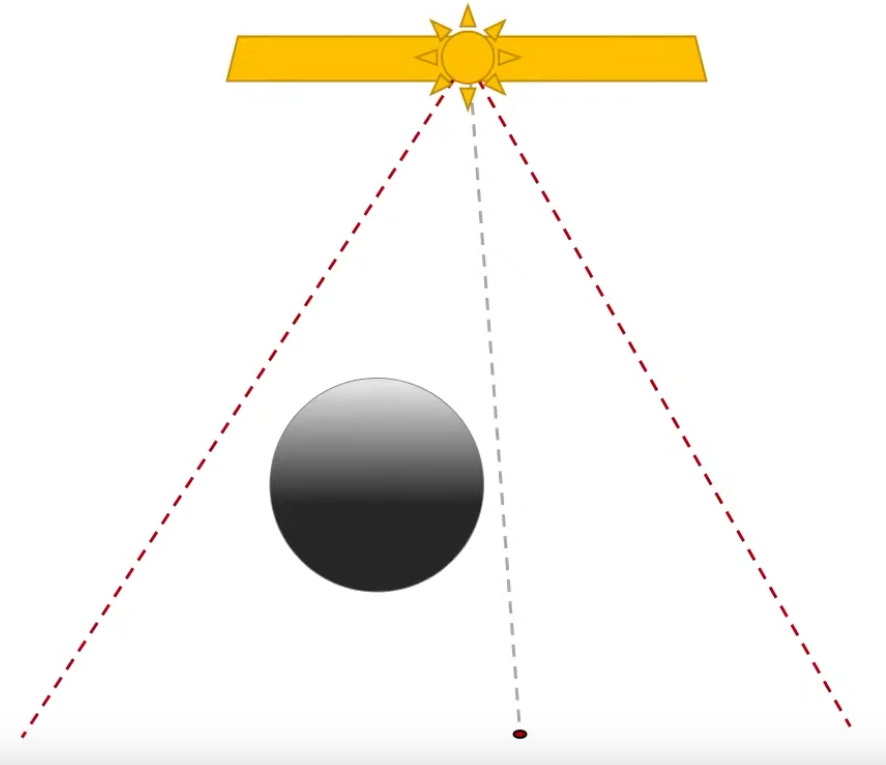

Raytracing

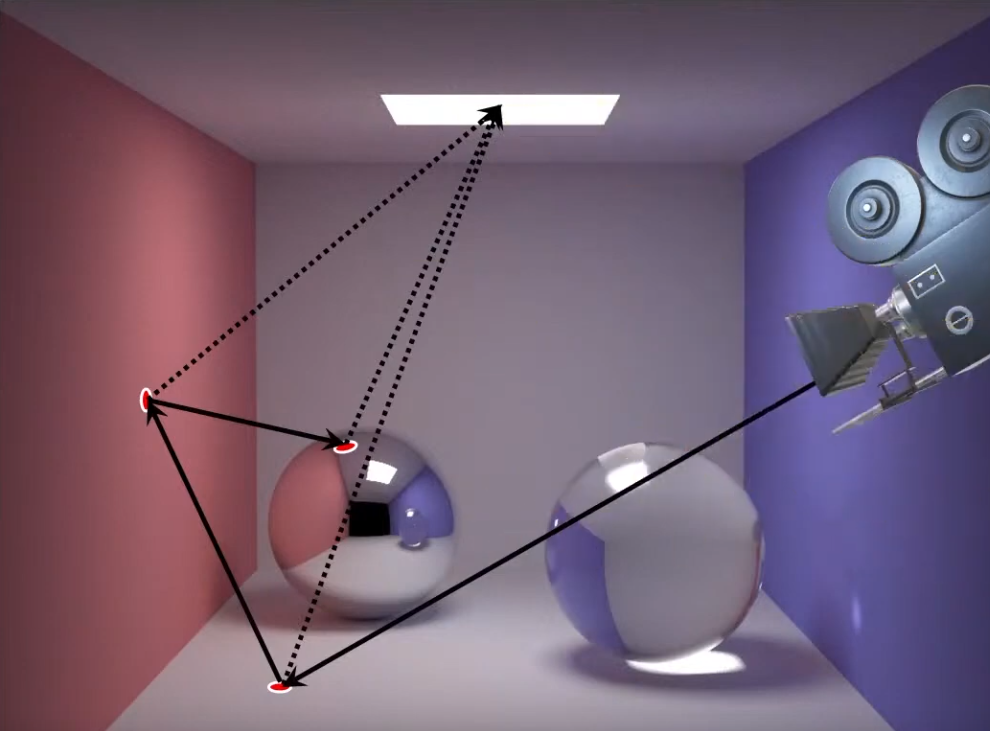

-

It's the proper way to solve it, but it's costly; the other techniques are approximations.

-

Send rays from the point to the light source.

-

.

.

-

Approximate the percentage of the area that is visible to the light source, via the ratio of rays that hit the light vs rays that didn't hit the light.

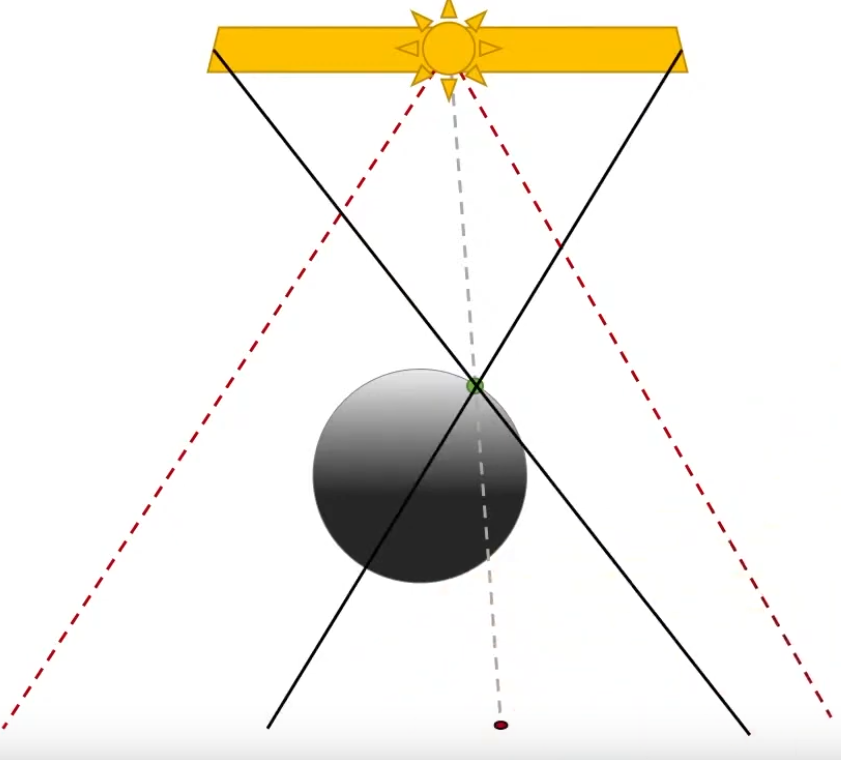

Percentage Closer Soft Shadows (PCSS)

-

Presented at Siggraph in 2005.

-

.

.

-

The PCF sample varies through out the shadow, so the shadows closer to the object look sharp and far away from the object look smooth.

-

Based on the distance to the object from the floor, you decide what filter you should use.

-

How far the occluder is vs how far the point I want to compute is.

-

It also uses the occluder size.

-

.

.

-

-

Requires a 'Occluder Search' so a situation like below correctly indicates that should be shadow in that point.

-

The shadow in that points comes from the fact that the left side of the light source blocks the object.

-

.

.

-

-

As PCSS requires Occluder Search and a PCF with large radius, depending on the situation, this method of soft shadows is not cheap.

-

Is cheaper than tracing rays, tho.

-

-

.

.

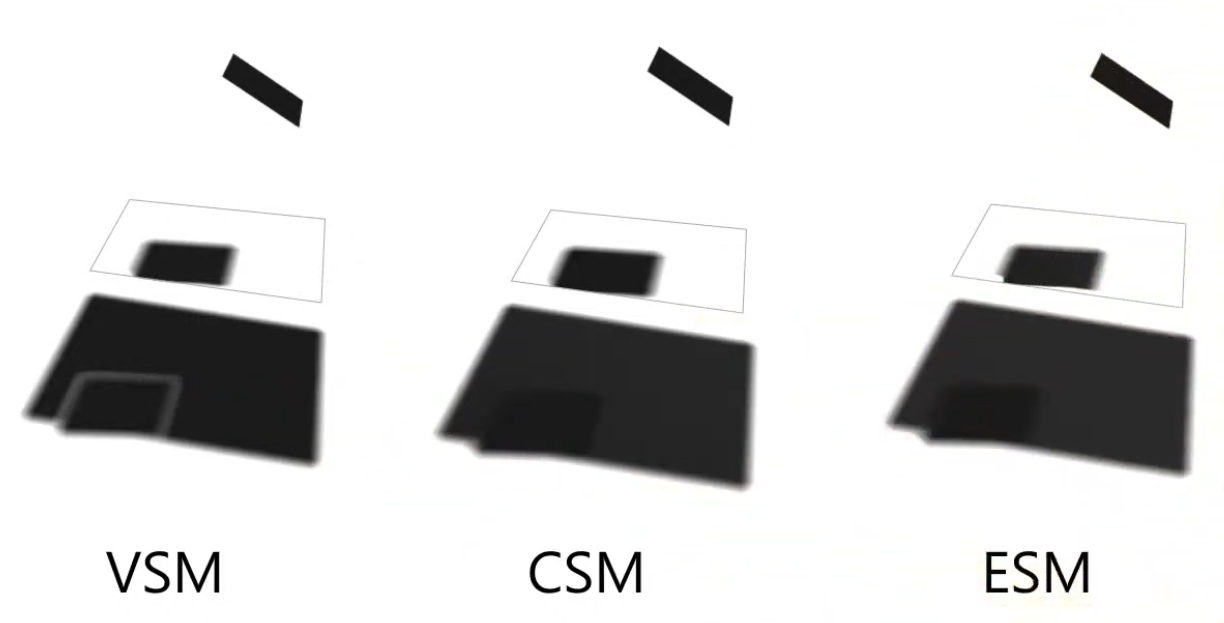

~Other Techiniques

-

Doesn't work with Shadow Maps, as Shadow Maps just gives you a blunt occlusion information.

-

Tries to approximate the PCF / PCSS, for soft-shadows instead of computing it.

-

Variance Shadow Maps (VSM) .

-

-

Convolution Shadow Maps (CSM) .

-

-

Exponential Shadow Maps (ESM) .

-

-

.

.

-

There are improvements to these methods, trying to solve the problems of these approximations.

Radiance Cascades

-

I saw a video demonstrating the usage of radiance cascades for soft-shadows.

Skybox / Skydome

-

Steps to reproduce the Skybox from the Vulkan sample HDR:

-

Load KTX file.

-

See

api_vulkan_sample.cpp->load_texture_cubemap (1202).

-

-

In:

in_pos(vec3). -

Desc Sets: Camera View and Camera Proj in the shader

-

out:

out_uvw(vec3) andout_pos(vec3).

textures.envmap = load_texture_cubemap("textures/uffizi_rgba16f_cube.ktx", vkb::sg::Image::Color); vkb::initializers::write_descriptor_set(descriptor_sets.object, VK_DESCRIPTOR_TYPE_UNIFORM_BUFFER, 0, &matrix_buffer_descriptor), vkb::initializers::write_descriptor_set(descriptor_sets.object, VK_DESCRIPTOR_TYPE_COMBINED_IMAGE_SAMPLER, 1, &environment_image_descriptor),// Vertex layout (location = 0) in vec3 inPos; layout (binding = 0) uniform UBO { mat4 projection; // camera.matrices.perspective; mat4 skybox_modelview; // camera.view } ubo; layout (location = 0) out vec3 outUVW; layout (location = 1) out vec3 outPos; outUVW = inPos; outPos = vec3(mat3(ubo.skybox_modelview) * inPos); gl_Position = vec4(ubo.projection * vec4(outPos, 1.0)); // Frag layout (binding = 1) uniform samplerCube samplerEnvMap; layout (location = 0) in vec3 inUVW; layout (location = 0) out vec4 outColor0; vec3 normal = normalize(inUVW); color = texture(samplerEnvMap, normal); // Color with manual exposure into attachment 0 outColor0.rgb = vec3(1.0) - exp(-color.rgb * ubo.exposure); -

-

See UI and Skybox from Kohi Engine.-

Yea.. The engine is an insane mess.

-

The shaders are confusing and use the camera matrix and model matrix to draw UI, wtf.

-

It's impossible to find out how the graphics pipeline is created. It's an infinite wormhole.

-

Transparency

Alpha

// baseColor has already been premultiplied

vec4 shadeSurface(vec4 baseColor) {

float alpha = baseColor.a;

vec3 diffuseColor = evaluateDiffuseLighting();

vec3 specularColor = evaluateSpecularLighting();

return vec4(diffuseColor + specularColor, alpha);

}

Alpha Blend

-

With Z-Buffer Rasterization, the order matters.

-

.

.

-

If renderer back to front, it works fine.

-

-

.

.

-

With A-Buffer Rasterization, the objects get sorted based on the alpha, so we don't have this problem.

-

GPUs use Z-Buffer, so we are stuck with it.

-

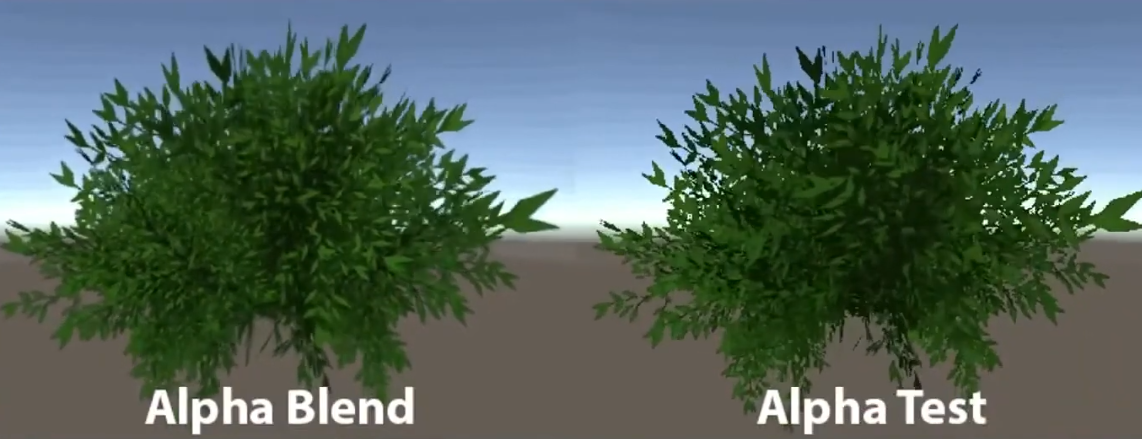

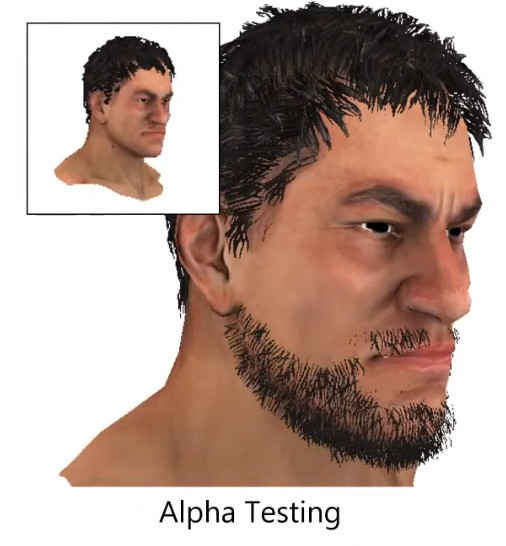

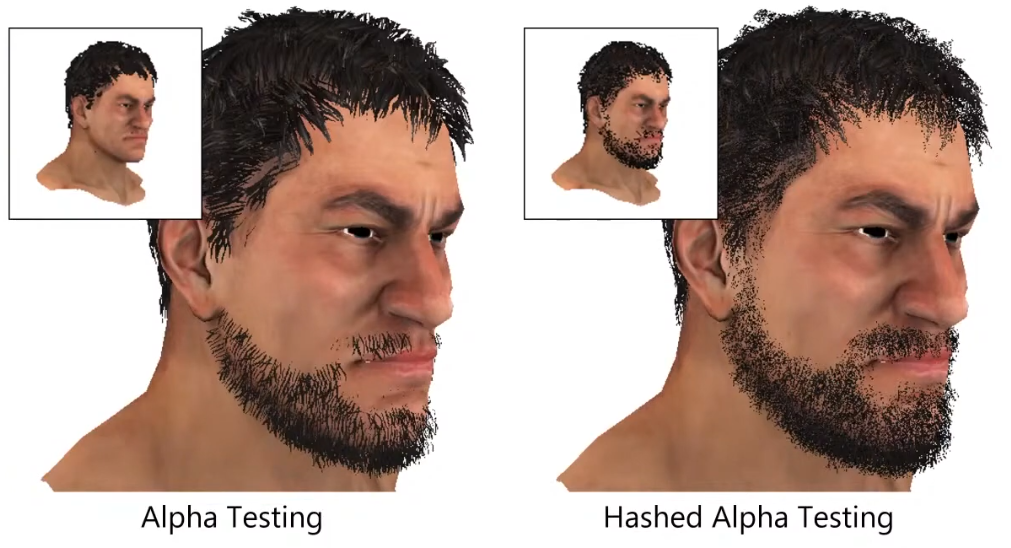

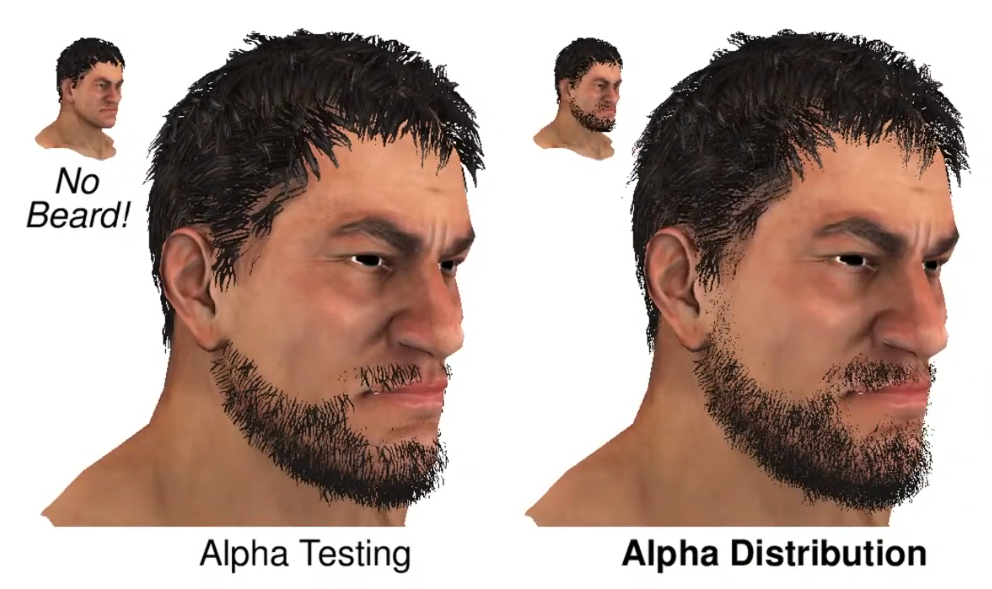

Alpha Testing

-

.

.

-

If the alpha is below a certain threshold, discard.

-

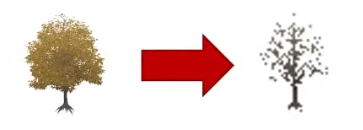

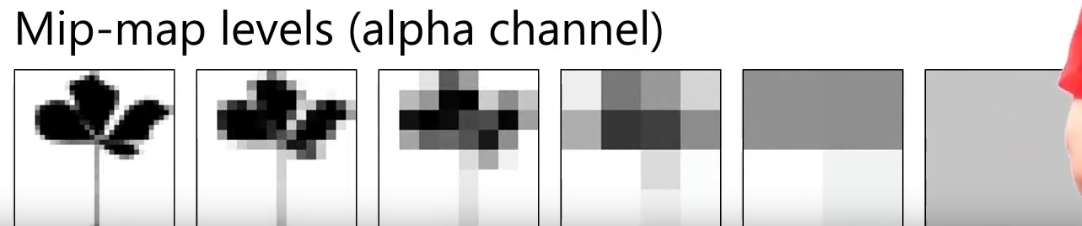

Alpha Testing with mipmapping can have problems:

-

.

.

-

.

.

-

The alpha ends up converging to a value below the threshold

-

.

.

-

At far away, the character loses its beard.

-

-

Hashed Alpha Testing

-

-

.

.

-

Test randomly.

-

The discard is made in software, not in hardware.

-

It's really noise, as it looks like dithering.

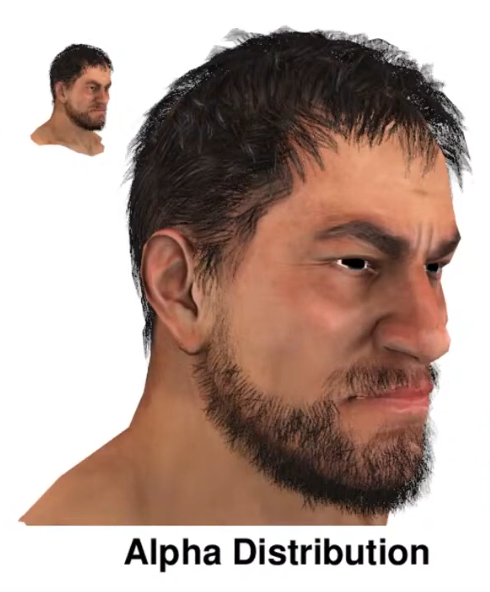

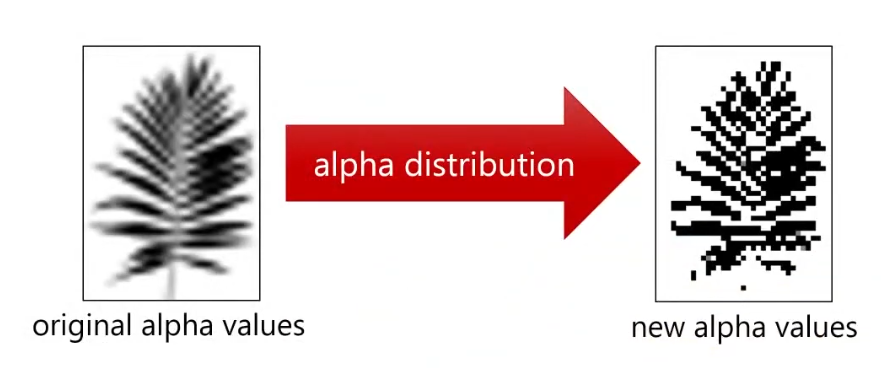

Alpha Distribution

-

-

.

.

-

- Alpha Distribution + Alpha to Coverage.

- Alpha Distribution + Alpha to Coverage.

-

Uses dithering first.

-

.

.

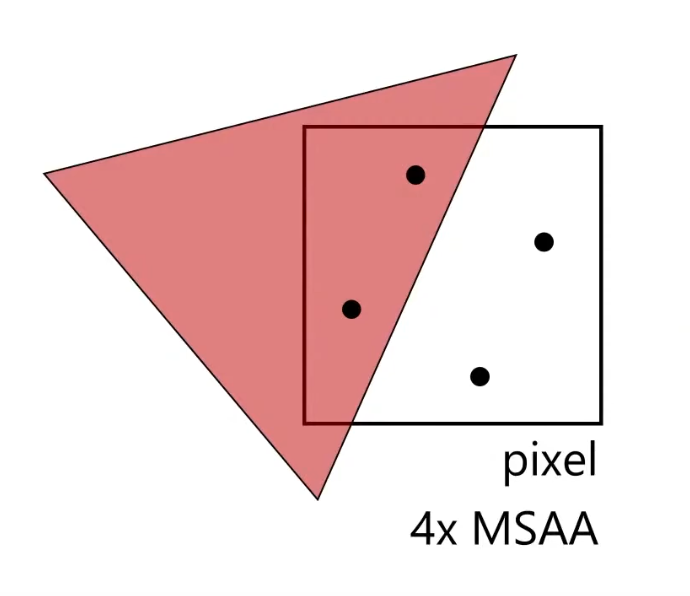

Alpha to Coverage

-

.

.

-

You get smoother pixels than with Alpha Testing.

-

It adds different values of alpha, instead of 0 or 1.

-

With 4x, you can get 4 different values of alpha: 0.0, 0.25, 0.5, 0.75.

-

-

.

.

-

Alpha to Coverage (left), Alpha Testing (right).

-

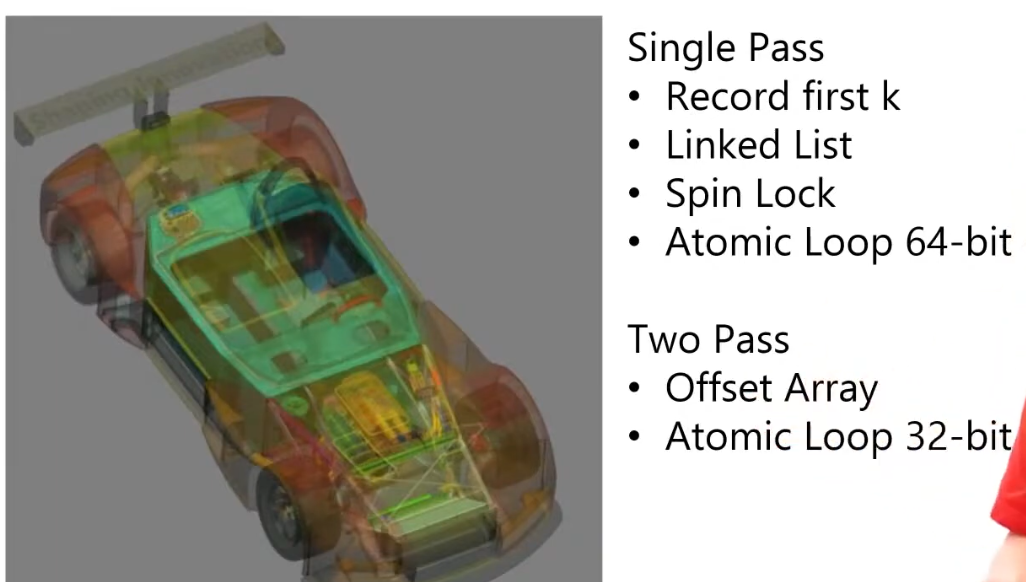

Order-Independent Transparency

-

Godot 4 - Render Limitations .

-

Godot doesn't do OIT.

-

The article shows how to deal with it.

-

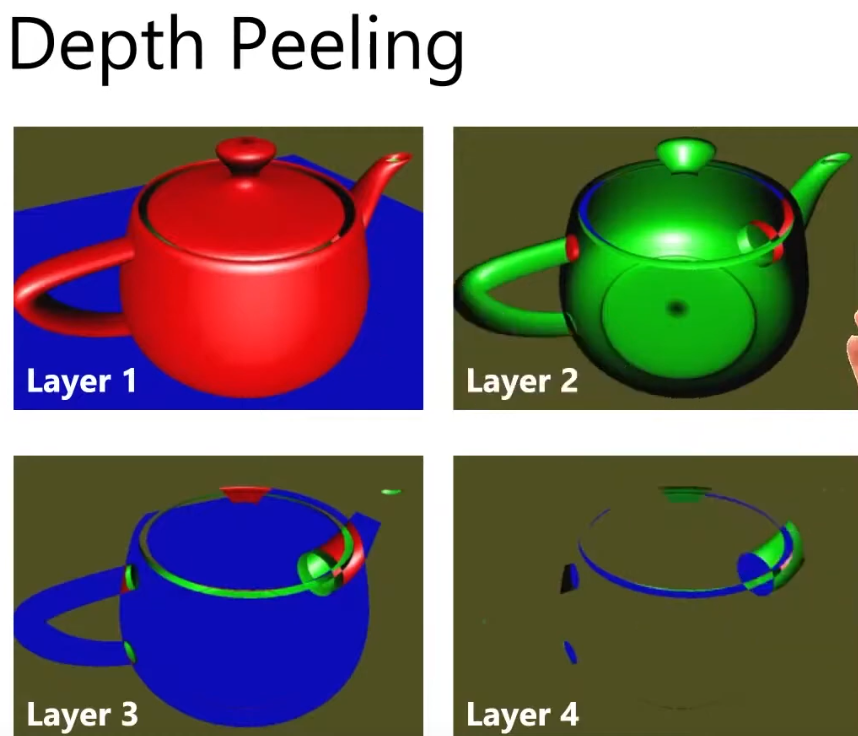

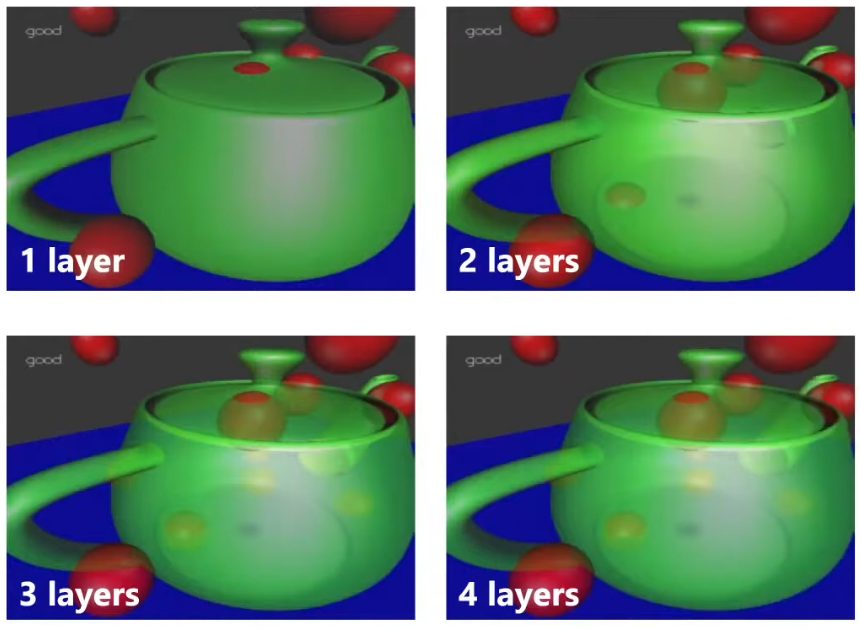

Depth Peeling

-

-

Layer 1:

-

Render as full opaque.

-

-

Layer 2:

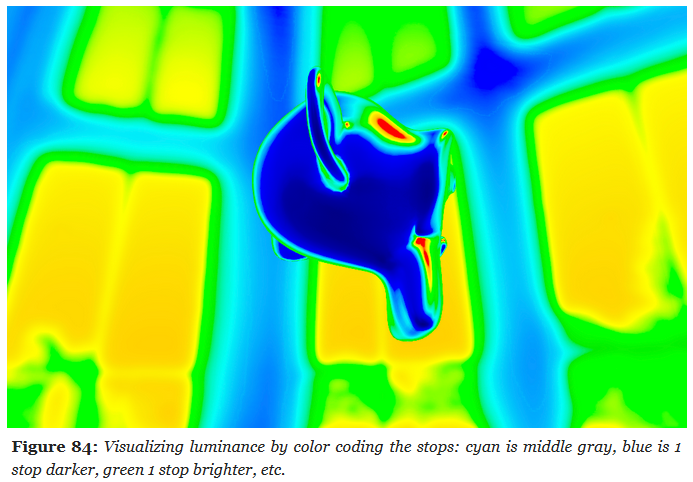

-