-

See Physics Engines#Theory for strategies.

-

Ex : Spin Lockless > Lockless > Sync Primitives.

-

Dedicated Threads / Static Partitioning / Domain-Specific Threads

-

Assign specific long-running tasks to fixed threads.

-

Characteristics:

-

Specialized threads (e.g., physics thread only does physics).

-

No dynamic task assignment.

-

Threads run continuously.

-

-

Used in:

-

Older game engines, embedded systems, latency-critical apps.

-

Older Unreal Engine versions: separate threads for rendering, physics.

-

-

When to stick with dedicated threads:

-

Game performance acceptable.

-

Stability/debug priority over throughput.

-

Workloads not easily parallelizable.

-

-

A dedicated thread may still call into a job system for subtasks.

Pros

-

Simple to implement/debug.

-

Low latency.

-

Predictable (no unrelated contention).

Cons

-

Poor CPU utilization (idle threads waste cores).

-

Hard to scale.

Implementation

-

No scheduler: each thread manages its own work manually.

-

No task queue.
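A minimal sketch of the dedicated-thread idea, assuming a hypothetical `PhysicsThread` that owns its state and runs its own loop. The class name, the fixed step count, and the toy integration step are illustrative assumptions, not taken from any engine.

```python
import threading


class PhysicsThread(threading.Thread):
    """Hypothetical dedicated thread: it only does 'physics' and owns its data."""

    def __init__(self, steps: int, dt: float) -> None:
        super().__init__(name="physics")
        self.steps = steps      # fixed step count so the sketch terminates
        self.dt = dt
        self.position = 0.0     # state touched only by this thread while it runs
        self.velocity = 2.0

    def run(self) -> None:
        # No scheduler and no task queue: the thread decides what to do next.
        for _ in range(self.steps):
            self.position += self.velocity * self.dt


physics = PhysicsThread(steps=100, dt=0.01)
physics.start()
physics.join()  # the result is read only after the thread has finished
print(f"position after {physics.steps} steps: {physics.position:.2f}")
```

Because the thread owns its data, no lock is needed here; the cost is that the core sits idle whenever this one thread has nothing to do.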

Transition to: Job System

-

Technical Hurdles

-

Task Granularity: Dedicated threads handle coarse work (e.g., "whole physics step"), while jobs need fine-grained tasks (e.g., "collision check between A and B").

-

Synchronization: Dedicated threads often use locks; jobs rely on dependencies/atomics.

-

Latency Sensitivity: Jobs introduce scheduling overhead (bad for real-time threads like rendering).

-

-

Architectural Shifts

-

From "Owned Data" to "Shared Data": Dedicated threads often own their data (e.g., physics thread owns physics state), while jobs assume data is transient/immutable.

-

Debugging Complexity: Race conditions in jobs are harder to trace than linear thread execution.

-

Job System

About

-

A job is a unit of work: a task/function executed asynchronously.

-

Scheduled and managed by runtime/thread pool/scheduler.

-

Jobs require execution context and synchronization.

-

Like a thread pool but with:

-

Fine-grained tasks, dependencies, work stealing.

-

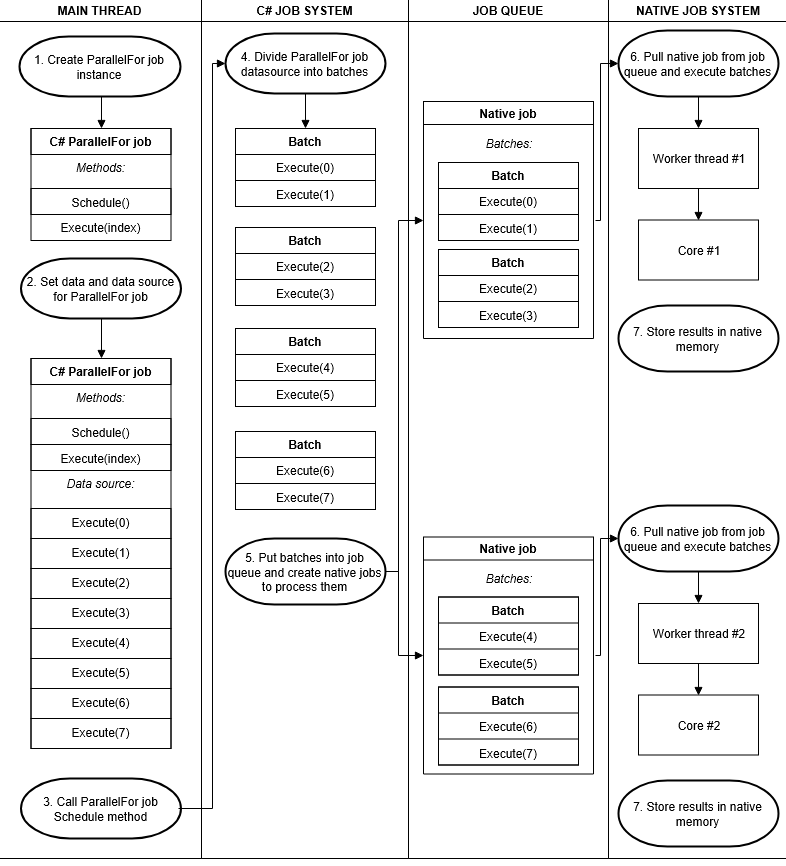

Workflow

-

A job (e.g., compute something, handle request) is created.

-

It's added to a job queue.

-

A scheduler picks jobs from the queue.

-

A worker (thread/fiber/goroutine) executes the job.

-

Synchronization primitives are used inside jobs if they access shared state.
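The workflow above can be sketched roughly as follows. This is a simplified illustration using Python's standard `queue` and `threading` modules; `run_jobs` and `worker` are hypothetical names, and the "scheduler" here is nothing more than workers pulling from one shared FIFO queue.

```python
import queue
import threading


def worker(jobs: queue.Queue, results: list, lock: threading.Lock) -> None:
    """Worker loop: pull a job, run it, store the result."""
    while True:
        job = jobs.get()
        if job is None:          # sentinel: no more work for this worker
            return
        func, args = job
        value = func(*args)
        with lock:               # shared state is guarded by a sync primitive
            results.append(value)


def run_jobs(job_list, worker_count: int = 4) -> list:
    jobs: queue.Queue = queue.Queue()
    results: list = []
    lock = threading.Lock()
    threads = [
        threading.Thread(target=worker, args=(jobs, results, lock))
        for _ in range(worker_count)
    ]
    for thread in threads:
        thread.start()
    for job in job_list:         # jobs are created and added to the queue
        jobs.put(job)
    for _ in threads:            # one sentinel per worker
        jobs.put(None)
    for thread in threads:
        thread.join()
    return results


squares = run_jobs([(pow, (n, 2)) for n in range(8)])
print(sorted(squares))
```

Results arrive in whatever order the workers finish, which is why the example sorts them before printing.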

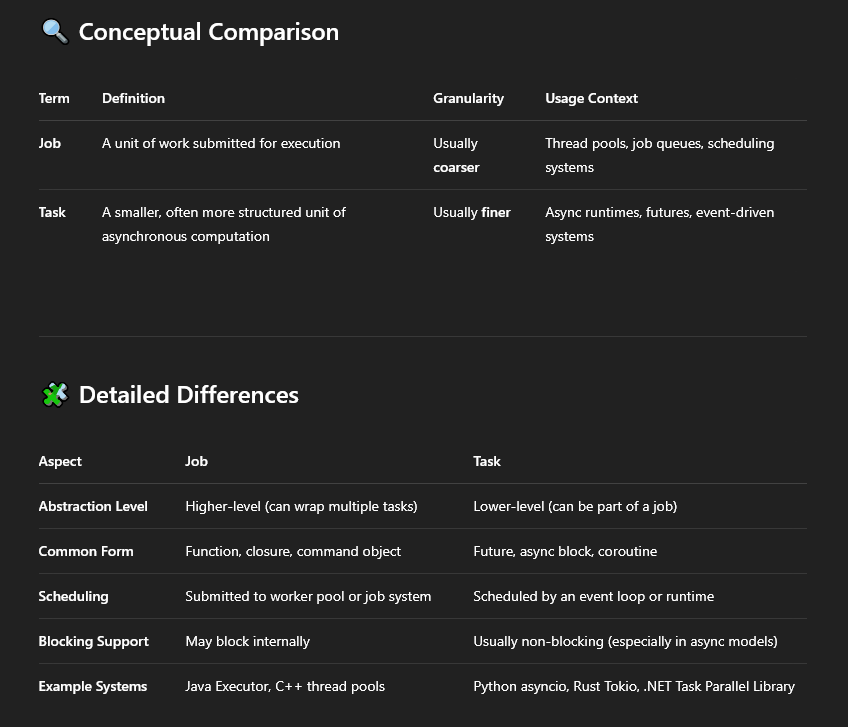

Job or Task?

-

"Job is a self contained task with parameters."

-

They are closely related and often used interchangeably, but there are distinctions depending on context, level of abstraction, and language/runtime.

-

A task can be considered a specialized kind of job.

-

In many libraries and frameworks, the terms are synonymous in practice but may imply different underlying models (threaded vs. async).


Job Queue

-

A job queue is a data structure that holds pending jobs (tasks) to be executed by workers (threads, fibers, goroutines, etc.).

-

It is typically implemented as a FIFO queue, but can vary depending on scheduling strategy.

-

A buffer that stores jobs waiting to be run.

-

A job queue is often implemented using an array or linked list, with concurrency controls.

-

Types:

-

FIFO Queue

-

First In First Out.

-

Basic job queue, executed in submission order

-

-

Priority Queue

-

Jobs with priority levels, higher priority first

-

-

Ring Buffer

-

Fixed-size circular buffer, often for performance

-

-

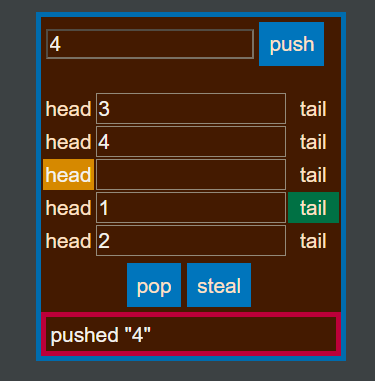

Deque

-

Double-ended queue

-

-

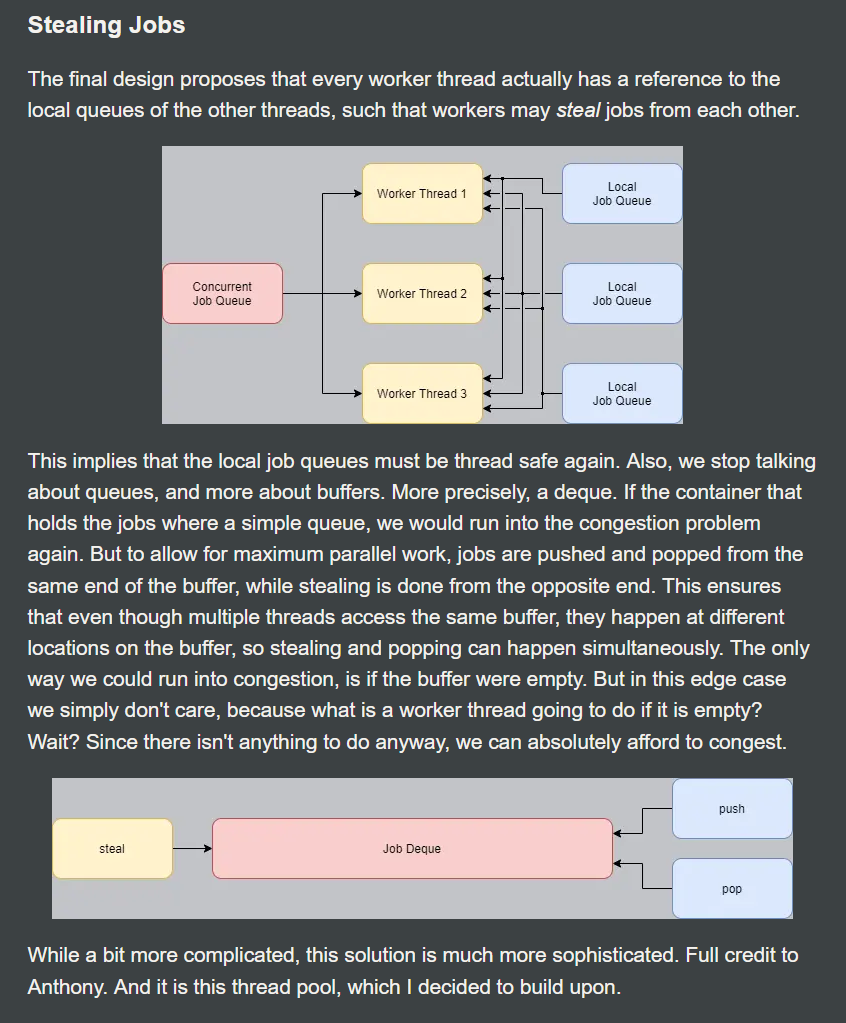
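To make the queue types above concrete, here is a small single-threaded sketch of a FIFO queue, a priority queue, and a fixed-size ring buffer. `PriorityJobQueue` and `RingBuffer` are hypothetical names, and none of this is thread-safe as written; a real job queue would add locking or atomics on top.

```python
import heapq
from collections import deque

# FIFO: jobs run in submission order.
fifo = deque(["load", "decode", "upload"])
fifo_order = [fifo.popleft() for _ in range(3)]


class PriorityJobQueue:
    """Higher priority first; FIFO order within the same priority level."""

    def __init__(self) -> None:
        self._heap = []
        self._seq = 0  # tie-breaker that preserves submission order

    def push(self, priority: int, job: str) -> None:
        # heapq is a min-heap, so the priority is negated.
        heapq.heappush(self._heap, (-priority, self._seq, job))
        self._seq += 1

    def pop(self) -> str:
        return heapq.heappop(self._heap)[2]


class RingBuffer:
    """Fixed-size circular buffer: no allocation after construction."""

    def __init__(self, capacity: int) -> None:
        self._slots = [None] * capacity
        self._head = 0   # next slot to read
        self._tail = 0   # next slot to write
        self._count = 0

    def push(self, job) -> bool:
        if self._count == len(self._slots):
            return False  # full: the caller decides whether to wait or drop
        self._slots[self._tail] = job
        self._tail = (self._tail + 1) % len(self._slots)
        self._count += 1
        return True

    def pop(self):
        if self._count == 0:
            return None
        job = self._slots[self._head]
        self._slots[self._head] = None
        self._head = (self._head + 1) % len(self._slots)
        self._count -= 1
        return job


pq = PriorityJobQueue()
pq.push(1, "log")
pq.push(5, "render")
pq.push(5, "audio")
priority_order = [pq.pop() for _ in range(3)]

ring = RingBuffer(capacity=2)
accepted = [ring.push("a"), ring.push("b"), ring.push("c")]
```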

Load-balancing / Work-stealing

-

Building a Job System in Rust .


-

Useful to understand its Ring Buffer.

-


-

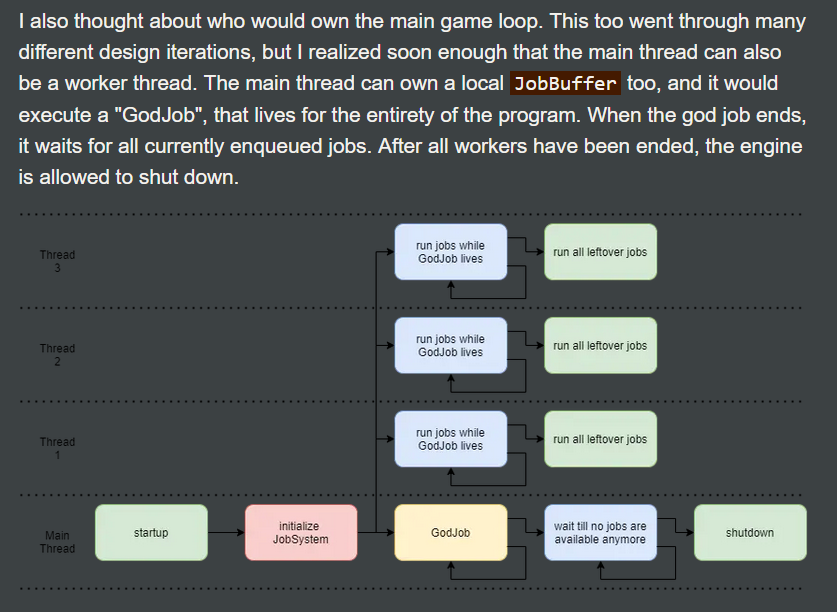
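As a rough illustration of work stealing: each worker owns a deque, pops its own newest job from one end, and an idle worker steals the oldest job from the other end of someone else's deque. `WorkStealingDeque` and `next_job` are hypothetical names, and the lock is an assumption made for simplicity; production implementations are often lock-free.

```python
import threading
from collections import deque


class WorkStealingDeque:
    def __init__(self) -> None:
        self._jobs = deque()
        self._lock = threading.Lock()  # simplification; often lock-free in practice

    def push(self, job) -> None:
        with self._lock:
            self._jobs.append(job)

    def pop(self):
        """Owner side: take the most recently pushed job (LIFO)."""
        with self._lock:
            return self._jobs.pop() if self._jobs else None

    def steal(self):
        """Thief side: take the oldest job from the opposite end (FIFO)."""
        with self._lock:
            return self._jobs.popleft() if self._jobs else None


def next_job(worker_id: int, deques: list):
    """Prefer local work; otherwise try to steal from the other workers."""
    job = deques[worker_id].pop()
    if job is not None:
        return job
    for victim_id, victim in enumerate(deques):
        if victim_id != worker_id:
            job = victim.steal()
            if job is not None:
                return job
    return None


deques = [WorkStealingDeque(), WorkStealingDeque()]
for name in ("a", "b", "c"):
    deques[0].push(name)

own = next_job(0, deques)     # worker 0 takes its newest job
stolen = next_job(1, deques)  # worker 1 is idle and steals the oldest job
print(own, stolen)
```

Taking from opposite ends keeps the owner and the thief from competing for the same job most of the time.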

"Nonetheless, I really want to do what Naughty Dog did with their engine: Jobify the entire thing. EVERYTHING will run on this job system, with a few significant exceptions: Startup, shutdown, logging and IO. Everything that runs on the job system will push local jobs."

-

-

Advantages

-

Fine-Grained Workload Distribution :

-

Jobs are small units of work, allowing better load balancing across threads.

-

Reduces idle time by keeping all threads busy with smaller tasks.

-

-

Scalability :

-

Jobs can be dynamically scheduled across available threads, scaling well with CPU core count.

-

Better suited for heterogeneous workloads than static thread partitioning.

-

-

Reduced Thread Overhead :

-

Avoids frequent thread creation/destruction by reusing a fixed pool of worker threads.

-

More efficient than per-task threading (e.g., spawning a thread for each small task).

-

-

Dependency Management :

-

Jobs can express dependencies (e.g., "Job B runs after Job A"), enabling efficient task graphs.

-

Better than manual synchronization in raw thread-based approaches.

-

-

Cache-Friendly :

-

Jobs can be designed to process localized data, improving cache locality compared to thread-per-task models.

-

-

Flexibility :

-

Supports both parallel and serial execution patterns.

-

Easier to integrate with other systems (e.g., async I/O, game engines, rendering pipelines).
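The dependency management point ("Job B runs after Job A") can be sketched with a small task graph runner. `run_graph` is a hypothetical helper built on `concurrent.futures`; the job names are made up, and a real job system would typically use atomic counters rather than sets.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait


def run_graph(jobs: dict, deps: dict, workers: int = 4) -> list:
    """jobs: name -> callable. deps: name -> names that must finish first."""
    remaining = {name: set(deps.get(name, ())) for name in jobs}
    running = {}          # future -> job name
    finished_order = []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        while remaining or running:
            # Submit every job whose dependencies have all completed.
            ready = [name for name, pending in remaining.items() if not pending]
            for name in ready:
                del remaining[name]
                running[pool.submit(jobs[name])] = name
            if not running:
                raise ValueError("dependency cycle or unknown dependency")
            done, _ = wait(list(running), return_when=FIRST_COMPLETED)
            for future in done:
                name = running.pop(future)
                future.result()  # re-raise any exception from the job
                finished_order.append(name)
                for pending in remaining.values():
                    pending.discard(name)
    return finished_order


log = []
jobs = {
    "animate": lambda: log.append("animate"),
    "physics": lambda: log.append("physics"),
    "audio": lambda: log.append("audio"),
    "render": lambda: log.append("render"),
}
deps = {"physics": {"animate"}, "render": {"physics", "audio"}}
order = run_graph(jobs, deps)
print(order)
```

Independent jobs ("animate" and "audio" here) may run in parallel, so only the relative order imposed by the dependencies is guaranteed.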

-

Disadvantages

-

Scheduling Overhead :

-

Managing a job queue and dependencies introduces some runtime overhead.

-

May not be worth it for extremely small tasks (nanosecond-scale work).

-

-

Complex Debugging :

-

Race conditions and deadlocks can be harder to debug than single-threaded or coarse-grained threading.

-

Job dependencies can create non-linear execution flows.

-

-

Potential for Starvation :

-

Poor job partitioning can lead to some threads being underutilized.

-

Long-running jobs may block others if not split properly.

-

-

Dependency Complexity :

-

While dependencies are powerful, managing complex job graphs can become unwieldy.

-

Requires careful design to avoid bottlenecks.

-

-

Latency for Small Workloads :

-

If job submission and scheduling latency is high, it may not be ideal for ultra-low-latency tasks.

-

Explanations

-

Multithreading with Fibers+Jobs in Naughty Dog engine .

-

Fibers were highly praised and very well received.

-

About libs :

-

"There are so few functions that I wouldn't bother with a library".

-

-

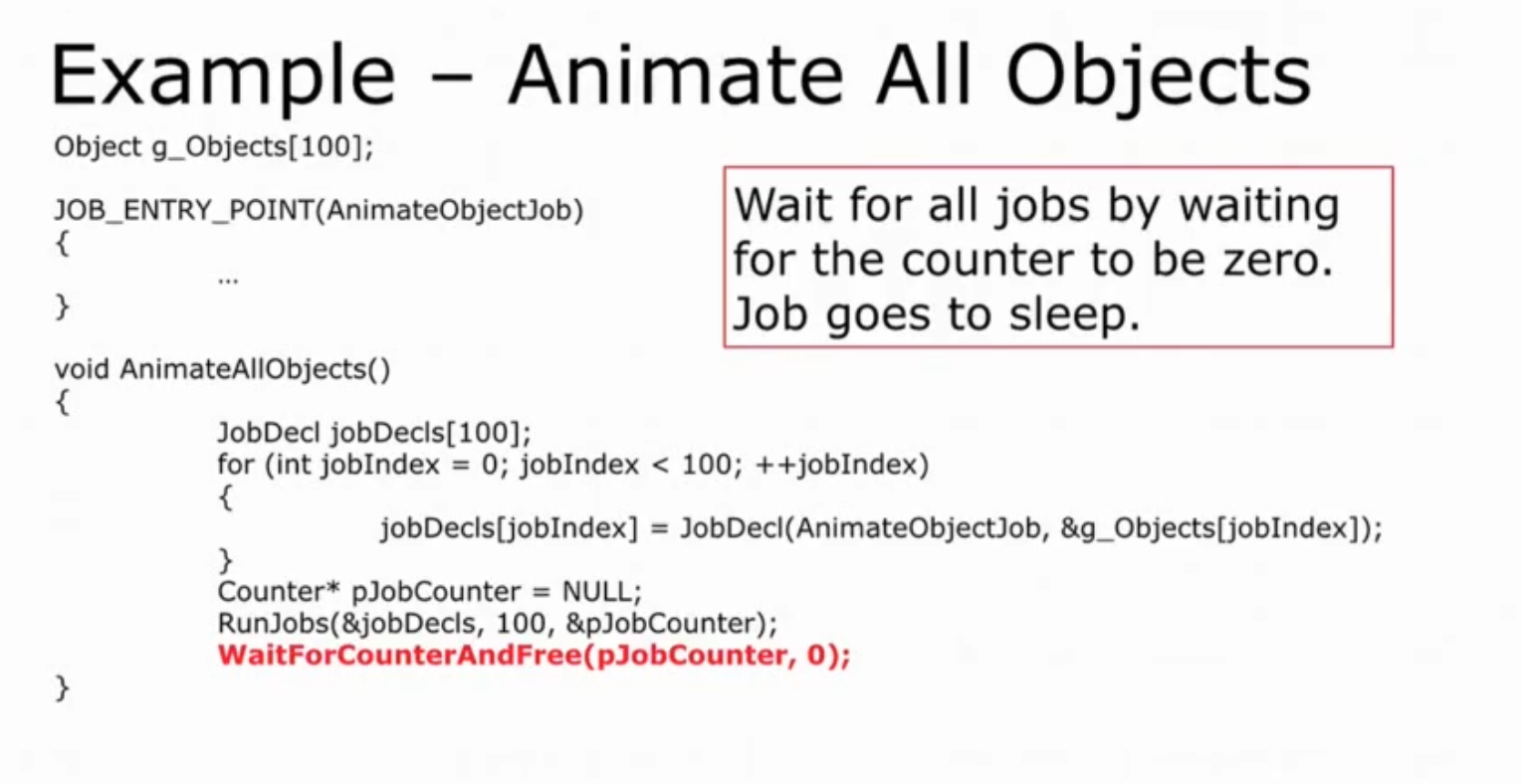

From what I understood of the strategy :

-

Everything is transformed into a job and scheduled to run asynchronously across multiple threads.

-

This is handled automatically by the job system.

-

Newly queued jobs run spread across the worker threads, out of submission order, and interleaved with other jobs.

-

-

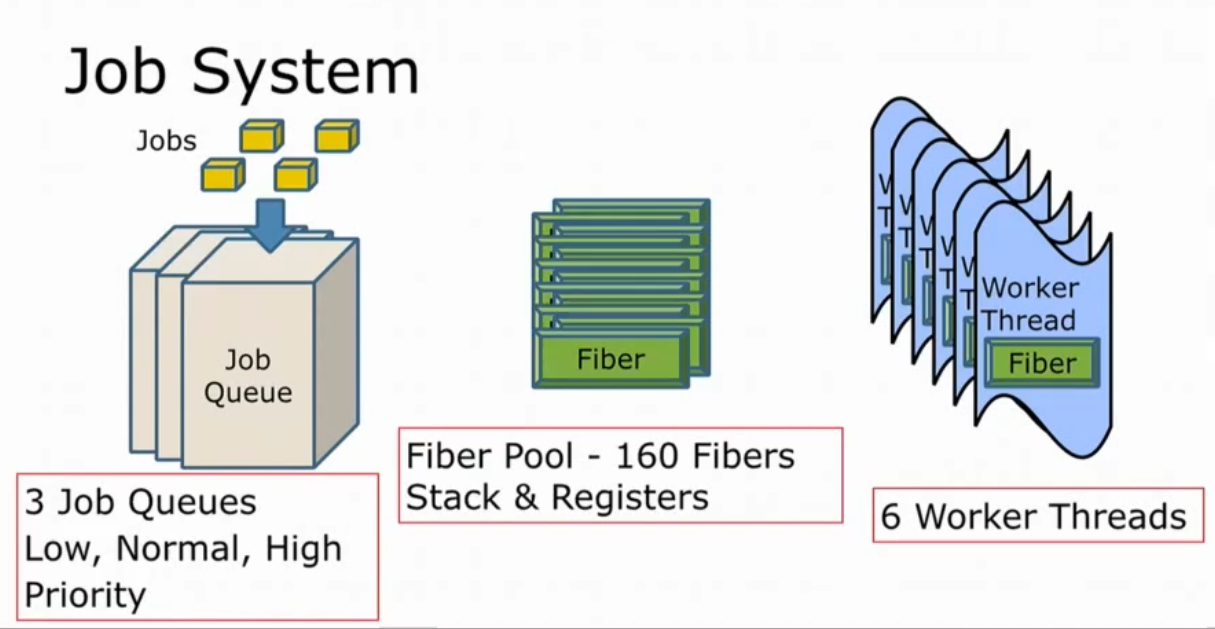

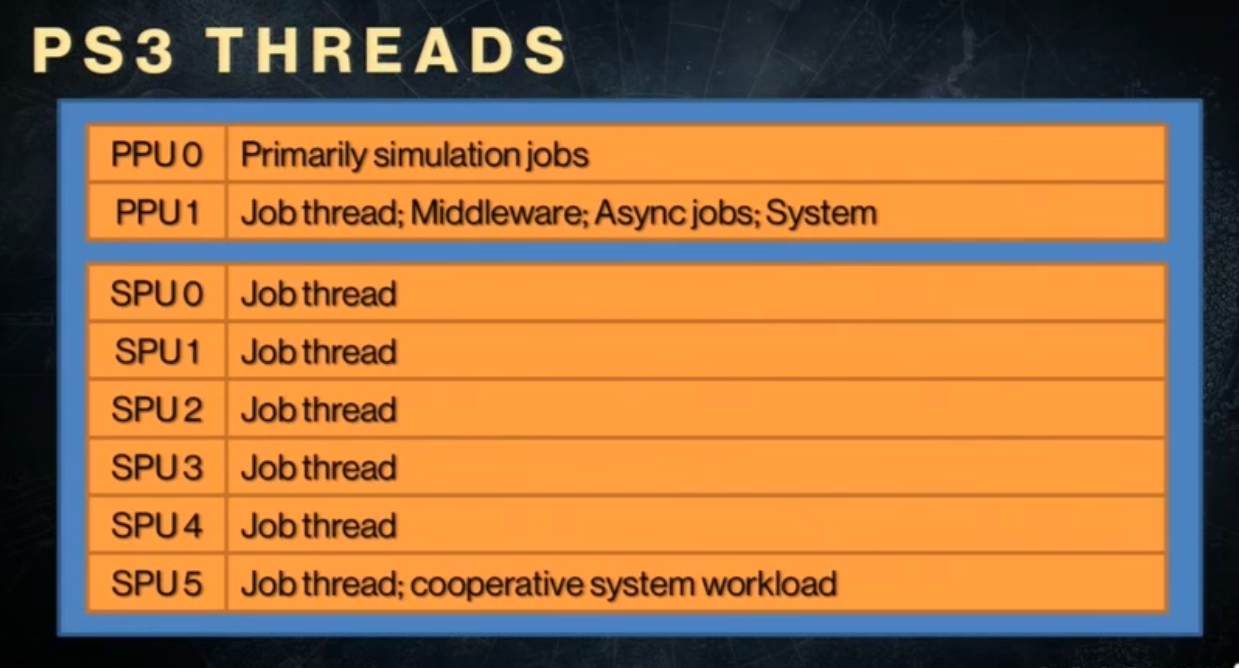

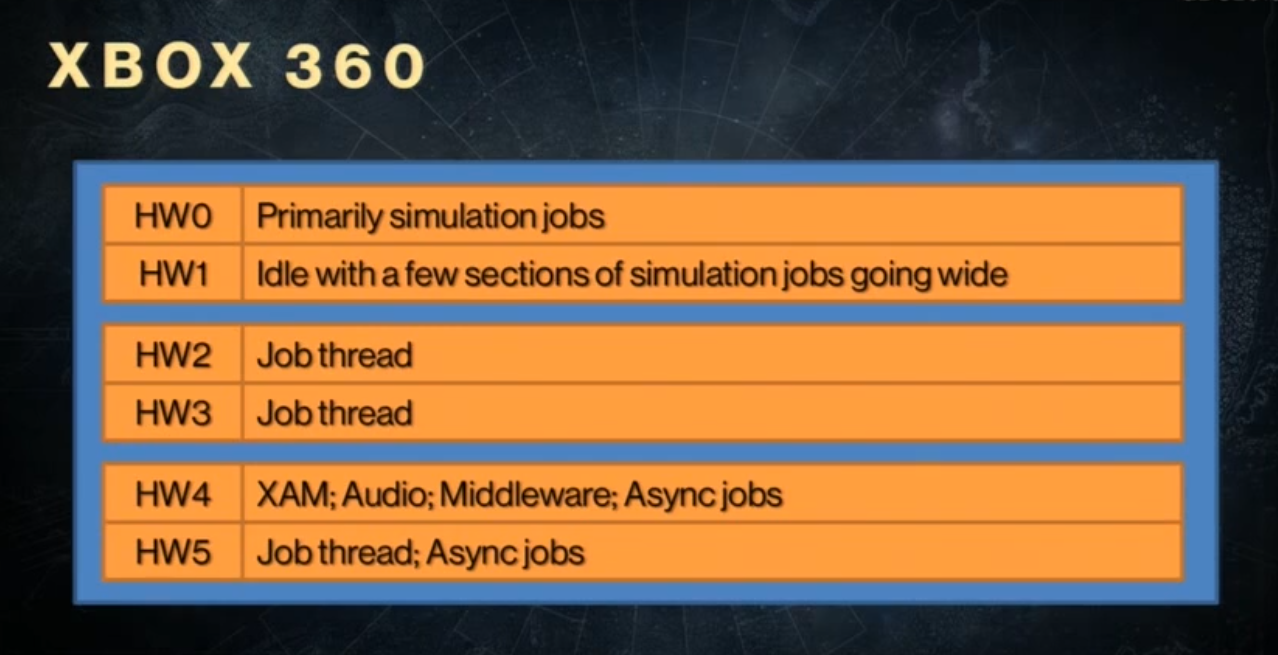

Setup :

-

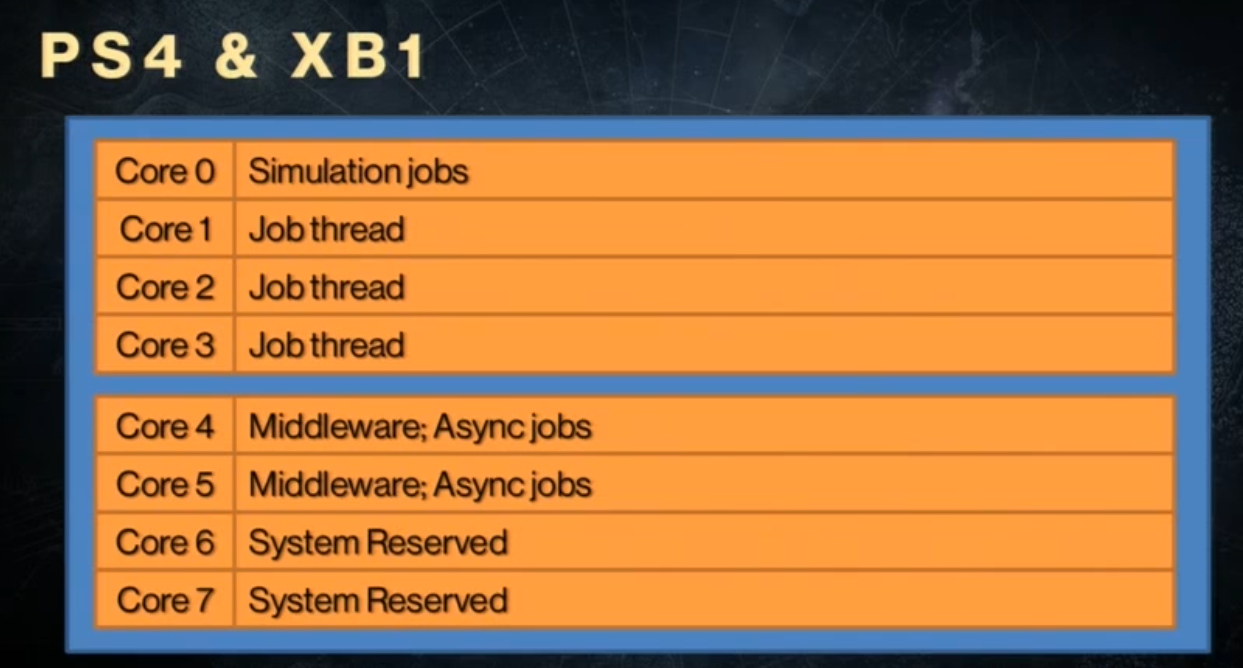

6 worker threads, one for each CPU core.

-

160 fibers.

-

800-1000 jobs per frame in The Last of Us Remastered, at 60Hz.

-

3 global job queues.


-

-

Jobs :

-

"Jobify the entire engine, everything needs to be a job".

-

EXCEPT I/O Threads:

-

sockets, file I/O, system calls, etc.

-

"There are system threads".

-

"Always waiting (sleeping) and never do expensive processing of the data".

-


-

-

Fibers :

-

"It's just a call stack".

-

Pros :

-

Easy to implement in an existing system.

-

-

Cons :

-

"System synchronization primitives can no longer be used"

-

Mutex, semaphore, condition variables, etc.

-

These primitives are locked to a particular thread, but fibers migrate between threads.

-

-

Synchronization has to be done at the hardware level.

-

Atomic spin locks are used almost everywhere.

-

A special job mutex is used for locks that are held longer.

-

It puts the current job to sleep if needed instead of spinning.

-

-

-

-

Fibers and their call stacks are viewable in the debugger, just like threads.

-

Can be named/renamed.

-

Indicates the current job.

-

-

Crash handling

-

Fiber call stacks are saved in the core dumps just like threads.

-

-

"Fiber-safe Thread Local Storage (TLS)".

-

Clang had issues with this in 2015.

-

-

Use adaptive mutexes in the job system.

-

Spin and attempt to grab lock before making a system call.

-

Solves priority inversion deadlocks.

-

Can avoid most system calls due to initial spin.
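The adaptive mutex idea (spin first, block only if spinning fails) can be sketched like this. `AdaptiveMutex` and its `spin_count` parameter are assumptions for illustration. In CPython the spin phase brings no real benefit because of the GIL, so treat this purely as a picture of the control flow, not of the performance.

```python
import threading


class AdaptiveMutex:
    """Try a bounded number of non-blocking acquires, then block."""

    def __init__(self, spin_count: int = 100) -> None:
        self._lock = threading.Lock()
        self._spin_count = spin_count
        # Statistics only; they are updated while the lock is held.
        self.spin_acquires = 0
        self.blocking_acquires = 0

    def acquire(self) -> None:
        for _ in range(self._spin_count):
            if self._lock.acquire(blocking=False):  # cheap attempt, no waiting
                self.spin_acquires += 1
                return
        self._lock.acquire()                        # fall back to a blocking wait
        self.blocking_acquires += 1

    def release(self) -> None:
        self._lock.release()

    def __enter__(self) -> "AdaptiveMutex":
        self.acquire()
        return self

    def __exit__(self, *exc_info) -> None:
        self.release()


mutex = AdaptiveMutex()
totals = {"count": 0}


def bump(times: int) -> None:
    for _ in range(times):
        with mutex:
            totals["count"] += 1


threads = [threading.Thread(target=bump, args=(1000,)) for _ in range(4)]
for thread in threads:
    thread.start()
for thread in threads:
    thread.join()
print(totals["count"], mutex.spin_acquires, mutex.blocking_acquires)
```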

-

-

-

FrameParams :

-

Data for each displayed frame.

-

Contains per-frame state:

-

Frame number.

-

Delta time.

-

Skinning matrices.

-

-

Entry point for each stage to access required data.

-

It's an uncontended resource.

-

State variables are copied into this structure every frame:

-

Delta time.

-

Camera position.

-

Skinning matrices.

-

List of meshes to render.

-

-

Stores start/end timestamps for each stage: game, render, GPU, and flip.

-

HasFrameCompleted(frameNumber)

-

There are 16 FrameParams to check, but they do not take up 16 FrameParams' worth of memory, because the completed ones have already been freed.

-

"The output of one frame is the input of the next".

-
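One way to picture FrameParams is a small ring of per-frame snapshots. The sketch below is an assumption-heavy illustration: only the field names listed in these notes and the count of 16 come from the notes; `FrameRing`, `begin_frame`, the `completed` flag, and the slot-recycling rule are hypothetical.

```python
from dataclasses import dataclass, field

FRAME_SLOTS = 16  # the notes mention 16 FrameParams; the rest is assumed


@dataclass
class FrameParams:
    frame_number: int = -1
    delta_time: float = 0.0
    camera_position: tuple = (0.0, 0.0, 0.0)
    skinning_matrices: list = field(default_factory=list)
    meshes_to_render: list = field(default_factory=list)
    completed: bool = False


class FrameRing:
    def __init__(self) -> None:
        self._slots = [FrameParams() for _ in range(FRAME_SLOTS)]

    def begin_frame(self, frame_number, delta_time, camera_position) -> FrameParams:
        # State is copied in, so later stages read a stable snapshot.
        params = FrameParams(frame_number, delta_time, tuple(camera_position))
        self._slots[frame_number % FRAME_SLOTS] = params
        return params

    def has_frame_completed(self, frame_number: int) -> bool:
        slot = self._slots[frame_number % FRAME_SLOTS]
        if slot.frame_number != frame_number:
            # Assumption: a slot is only recycled after its frame completed,
            # so a newer frame in the slot means the older one is done.
            return frame_number < slot.frame_number
        return slot.completed


ring = FrameRing()
frame = ring.begin_frame(0, delta_time=1 / 60, camera_position=(0.0, 1.8, -5.0))
frame.completed = True
print(ring.has_frame_completed(0), ring.has_frame_completed(1))
```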

-

-

-

Written in C++.


-

Jobs :

-

"Jobs with ideal duration 500us - 2000us".

-

Priority-based FIFO

-

First In First Out.

-

-

-

"Fiber"

-

Set of jobs that always run serially and sequentially with an associated block of memory.

-

"We have 3-4 fibers".

-

They are a convenience, as they are easier to understand than a mess of jobs.

-

"Easier to describe".

-

-

-

Resource

-

Something that can be accessed.

-

Theoretical.

-

Havok World, player profile, AI data, etc.

-

-

Policies

-

>> Asserts << .

-

This was the biggest tip given.

-

It's an insanely difficult job, and without asserts it would be impossible.

-

-

Describe what you can do with a resource.
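A sketch of how asserts can enforce an access policy on a resource. `ResourceGuard` and its methods are hypothetical; the point is only that every access declares its intent and an overlapping read/write trips an assert immediately instead of silently racing.

```python
import threading


class ResourceGuard:
    """Debug helper: asserts that accesses follow a many-readers/one-writer policy."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._lock = threading.Lock()  # protects the bookkeeping only
        self._readers = 0
        self._writer = None

    def begin_read(self, job: str) -> None:
        with self._lock:
            assert self._writer is None, (
                f"{job} reads {self.name} while {self._writer} writes it"
            )
            self._readers += 1

    def end_read(self) -> None:
        with self._lock:
            self._readers -= 1

    def begin_write(self, job: str) -> None:
        with self._lock:
            assert self._writer is None and self._readers == 0, (
                f"{job} writes {self.name} during another access"
            )
            self._writer = job

    def end_write(self) -> None:
        with self._lock:
            self._writer = None


havok_world = ResourceGuard("Havok World")
havok_world.begin_read("ai_job")
try:
    havok_world.begin_write("physics_job")  # overlaps with the read above
    overlap_detected = False
except AssertionError:
    overlap_detected = True
havok_world.end_read()

havok_world.begin_write("physics_job")      # fine once the read has ended
havok_world.end_write()
print(overlap_detected)
```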

-

-

Handles.


-

-

Resolving overlaps :

-

Add job dependencies.

-

Double buffer or cache data.

-

Queue messages to be acted upon later.

-

Restructure your algorithm.

-

{35:04 -> 38:23}
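Of the options for resolving overlaps, double buffering is the easiest to show in a few lines: jobs read last frame's data and write next frame's data, so readers and writers never touch the same buffer. `DoubleBuffer` is a hypothetical helper written for this sketch.

```python
class DoubleBuffer:
    def __init__(self, initial) -> None:
        self._front = list(initial)  # read by this frame's jobs
        self._back = list(initial)   # written by this frame's jobs

    def read(self, index: int):
        return self._front[index]

    def write(self, index: int, value) -> None:
        self._back[index] = value

    def swap(self) -> None:
        """Called once per frame, after all jobs have finished."""
        self._front, self._back = self._back, self._front
        self._back[:] = self._front  # next frame starts from the latest state


positions = DoubleBuffer([0, 10])

# Two "jobs" that each read the other's value. Because both read the front
# buffer, the result does not depend on which job runs first.
positions.write(0, positions.read(1) + 1)
positions.write(1, positions.read(0) + 1)
positions.swap()

print(positions.read(0), positions.read(1))
```

The trade-off is extra memory and one frame of latency on the buffered data.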

-

-

"I don't know if there were issues with the client-server architecture".

-

Job System in Unity


Thread Pools

-

A collection of pre-instantiated reusable threads.

-

A pool of generic threads that pull tasks from a shared queue.

-

Reduces overhead from frequent thread creation/destruction.

-

Thread pools are better than Dedicated Threads for:

-

Bursty workloads (e.g., loading screens, asset decompression).

-

Scaling across CPU cores.

-

TLDR

-

It's a middle ground between Dedicated Threads and a Job System.

-

I feel it's a "soft" version of a Job System.

-

Job systems are not strictly better than a Thread Pool—they’re more specialized.

-

Thread pools win for simplicity and low-latency tasks.

-

For most games, a hybrid approach is optimal.

-

How It Works

-

Tasks (arbitrary functions/lambdas) are pushed to a global queue.

-

Idle threads pick up tasks first-come-first-served.

-

No task dependencies (unlike job systems).
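A minimal thread pool example using Python's standard `concurrent.futures.ThreadPoolExecutor`, assuming a bursty, independent workload (decompressing assets). The asset data is generated on the spot purely for illustration.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor

# Fake "assets": 8 compressed blobs, each 700 bytes when decompressed.
assets = [zlib.compress(f"asset-{i}".encode() * 100) for i in range(8)]

# Tasks are pushed to the pool's shared queue; idle threads pick them up.
with ThreadPoolExecutor(max_workers=4) as pool:
    futures = [pool.submit(zlib.decompress, blob) for blob in assets]
    sizes = [len(future.result()) for future in futures]

print(sizes)
```

There is no way to say "run this task after that one" here other than waiting on a future yourself, which is the main difference from a job system.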

Comparisons

-

Thread Pool :

-

For simple fire-and-forget tasks (e.g., logging, file I/O).

-

-

Job System :

-

For complex, data-parallel workloads (e.g., physics, particle systems).

-

-

Stick with Dedicated Threads if:

-

Your game runs smoothly (no CPU bottlenecks).

-

You prioritize simplicity over peak performance.

-

-

Switch to Thread Pool if:

-

You have ad-hoc parallel tasks (e.g., asset loading).

-

You want better CPU usage but aren’t ready for jobs.

-

You need quick parallelism without refactoring.

-

Tasks are independent and coarse-grained.

-

You’re doing I/O or async operations.

-

-

Adopt a Job System if:

-

Your game is CPU-bound and needs max core utilization (e.g., open-world, RTS).

-

You need fine-grained parallelism (e.g., 10,000 physics objects).

-

Work has complex dependencies.

-

You’re willing to restructure code.

-

TBB / oneTBB (Threading Building Blocks)

-

It's a C++ Template Library.

-

Wiki .

-

oneTBB .

-

About .

-

TBB is a multithreading strategy (or more accurately, a parallel programming framework) rather than just an implementation detail of another strategy.

-

Key Points About TBB:

-

High-Level Parallelism Framework

-

TBB provides a task-based parallel programming model, allowing developers to express parallelism without directly managing threads.

-

It abstracts low-level threading details (like thread creation and synchronization) and instead focuses on tasks scheduled across a thread pool.

-

-

Not Just an Implementation Detail

-

While TBB does implement work-stealing schedulers and thread pools (which could be considered "implementation details"), it is primarily a strategy for parallelizing applications.

-

It competes with other parallelism approaches like OpenMP, raw std::thread, or GPU-based parallelism (CUDA, SYCL).

-

-

Key Features

-

Task-based parallelism (instead of thread-based).

-

Work-stealing scheduler (for dynamic load balancing).

-

High-level parallel algorithms (parallel_for, parallel_reduce, pipeline, etc.).

-

Concurrent containers (e.g., concurrent_queue, concurrent_hash_map).

-

Memory allocator optimizations (e.g., tbb::scalable_allocator for scalable memory allocation).

-

-

Data Parallelism

-

The same operation is applied to different chunks of data (e.g., OpenMP parallel loops).

-

Data Parallelism is a strategy, but SIMD (Single Instruction Multiple Data) is an implementation detail (a CPU feature enabling it).
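A small sketch of data parallelism: the same function is applied to separate chunks and the partial results are combined. `chunked` and `sum_of_squares` are hypothetical helpers. Note that with CPython threads the GIL prevents real speedup for pure-Python arithmetic, so this shows the shape of the technique rather than its performance.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(data: list, chunk_size: int) -> list:
    """Split data into consecutive chunks of at most chunk_size items."""
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def sum_of_squares(chunk: list) -> int:
    return sum(x * x for x in chunk)


data = list(range(1000))

with ThreadPoolExecutor(max_workers=4) as pool:
    # Same operation, different chunk per task.
    partials = list(pool.map(sum_of_squares, chunked(data, 250)))

total = sum(partials)  # combine step (a reduction)
print(total)
```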

Actor Model

-

Independent actors messaging asynchronously.
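A minimal actor sketch: the actor owns private state, runs on its own thread, and is reachable only through messages placed in its mailbox. `CounterActor` and its message names are made up for this example.

```python
import queue
import threading


class CounterActor:
    def __init__(self) -> None:
        self._mailbox: queue.Queue = queue.Queue()
        self._count = 0  # private state, touched only by the actor's thread
        self._thread = threading.Thread(target=self._run)
        self._thread.start()

    def send(self, message: str, reply_to: "queue.Queue | None" = None) -> None:
        """Asynchronous: returns immediately after enqueueing the message."""
        self._mailbox.put((message, reply_to))

    def _run(self) -> None:
        while True:
            message, reply_to = self._mailbox.get()
            if message == "stop":
                return
            if message == "increment":
                self._count += 1
            elif message == "get" and reply_to is not None:
                reply_to.put(self._count)

    def join(self) -> None:
        self._thread.join()


actor = CounterActor()
for _ in range(5):
    actor.send("increment")

reply: queue.Queue = queue.Queue()
actor.send("get", reply_to=reply)
count = reply.get()  # messages are processed in mailbox order

actor.send("stop")
actor.join()
print(count)
```

No lock is needed around `_count` because only the actor's own thread ever reads or writes it.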

Pipeline Parallelism

-

Split work into stages, like CPU instruction pipelines.
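A sketch of pipeline parallelism with one thread per stage and a queue between stages, so different items can be in different stages at the same time. `run_pipeline`, `stage`, and the `DONE` sentinel are hypothetical names for this example.

```python
import queue
import threading

DONE = object()  # sentinel passed down the pipeline to shut each stage down


def stage(func, inbox: queue.Queue, outbox: queue.Queue) -> None:
    while True:
        item = inbox.get()
        if item is DONE:
            outbox.put(DONE)  # tell the next stage to stop as well
            return
        outbox.put(func(item))


def run_pipeline(items, funcs) -> list:
    queues = [queue.Queue() for _ in range(len(funcs) + 1)]
    threads = [
        threading.Thread(target=stage, args=(func, queues[i], queues[i + 1]))
        for i, func in enumerate(funcs)
    ]
    for thread in threads:
        thread.start()
    for item in items:
        queues[0].put(item)
    queues[0].put(DONE)

    results = []
    while True:
        item = queues[-1].get()
        if item is DONE:
            break
        results.append(item)
    for thread in threads:
        thread.join()
    return results


output = run_pipeline([1, 2, 3], [lambda x: x + 1, lambda x: x * 10, str])
print(output)
```

With a single thread per stage, items leave the pipeline in the order they entered; throughput is limited by the slowest stage.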