Shader Alignment

Minimum Dynamic-Offset / CBV Allocation Granularity

-

GPUs and drivers require that when you bind or use a portion of a large buffer as a uniform/constant buffer the start address and/or size line up to an alignment.

-

That alignment is the “minimum dynamic-offset” (Vulkan) or the CBV/constant buffer granularity (D3D12).

-

It lets the driver map many small logical buffers into a single big GPU buffer efficiently.

-

If you bind at an unaligned offset the API/driver will reject it or you will get wrong data or degraded performance.

-

Drivers can report 64, 128, 256, or other powers of two.

-

UBO alignment is usually larger than SSBO alignment because UBO usage and caches are handled differently by the hardware.

-

Value :

-

Many APIs and drivers use 256 bytes as the Minimum Dynamic-Offset on common desktop GPUs.

-

VkGuide:

struct MaterialConstants { // written into uniform buffers later glm::vec4 colorFactors; // multiply the color texture glm::vec4 metal_rough_factors; glm::vec4 extra[14]; /* padding, we need it anyway for uniform buffers it needs to meet a minimum requirement for its alignment. 256 bytes is a good default alignment for this which all the gpus we target meet, so we are adding those vec4s to pad the structure to 256 bytes. */ }; -

-

But not every platform or GPU guarantees 256. Mobile or integrated GPUs may have different values.

-

-

minUniformBufferOffsetAlignment-

Is the minimum required alignment, in bytes, for the

offsetmember of theVkDescriptorBufferInfostructure for uniform buffers. -

When a descriptor of type

DESCRIPTOR_TYPE_UNIFORM_BUFFERorDESCRIPTOR_TYPE_UNIFORM_BUFFER_DYNAMICis updated, theoffsetmust be an integer multiple of this limit. -

Similarly, dynamic offsets for uniform buffers must be multiples of this limit.

-

The value must be a power of two.

-

-

minStorageBufferOffsetAlignment-

Is the minimum required alignment, in bytes, for the

offsetmember of theVkDescriptorBufferInfostructure for storage buffers. -

When a descriptor of type

DESCRIPTOR_TYPE_STORAGE_BUFFERorDESCRIPTOR_TYPE_STORAGE_BUFFER_DYNAMICis updated, theoffsetmust be an integer multiple of this limit. -

Similarly, dynamic offsets for storage buffers must be multiples of this limit.

-

The value must be a power of two.

-

-

minTexelBufferOffsetAlignment-

Is the minimum required alignment, in bytes, for the

offsetmember of the VkBufferViewCreateInfo structure for texel buffers. -

If the

texelBufferAlignmentfeature is enabled, this limit is equivalent to the maximum of theuniformTexelBufferOffsetAlignmentBytesandstorageTexelBufferOffsetAlignmentBytesmembers of VkPhysicalDeviceTexelBufferAlignmentProperties , but smaller alignment is optionally allowed bystorageTexelBufferOffsetSingleTexelAlignmentanduniformTexelBufferOffsetSingleTexelAlignment. -

If the

texelBufferAlignmentfeature is not enabled, VkBufferViewCreateInfo ::offsetmust be a multiple of this value. -

The value must be a power of two.

-

-

-

-

Best practice :

-

Query the GPU at runtime and align your buffer ranges to the reported value.

-

Assert size at compile time:

static_assert(sizeof(MaterialConstants) == 256, "MaterialConstants must be 256 bytes"); -

Default Layouts

-

UBOs :

-

std140.

-

-

SSBOs :

-

std430.

-

-

Push Constants :

-

std430 (Vulkan).

-

Source: GLSL Spec 4.60.8 , page 90.

-

OpenGL Spec 4.6 , page 146 (7.6.2.2).

-

-

Alignment Options

-

There are different alignment requirements depending on the specific resources and on the features enabled.

-

Platform dependency :

-

32-bit IEEE-754

-

The scalar value is 4 bytes.

-

The standard for desktop, mobile, OpenGL ES and Vulkan.

-

-

16-bit half precision :

-

The scalar value is 2 bytes.

-

In rare cases, like embedded or custom OpenGL drivers.

-

-

64-bit IEEE-754 double :

-

The scalar value is 8 bytes.

-

Non-standard case.

-

Would require headers redefining

GLfloatasdouble, not compliant with spec.

-

-

-

C layout ≈

std430only if you manually match packing and alignment. Otherwise, it’s platform-dependent.

| GLSL type | C equivalent | Typical C (x86_64) - Alignment | Typical C (x86_64) - Size | Typical C (x86_64) - Stride | std140 - Base Alignment | std140 - Occupied Size | std140 - Stride | std430 - Base Alignment | std430 - Occupied Size | std430 - Stride |

| -------------------------------- | --------------------------------------------------- | -----------------------------: | -----------------------------------: | --------------------------: | -----------------------------------------------------------------------------------------: | ------------------------------------: | ---------------------------------------: | ----------------------: | ----------------------------------------------------: | ------------------------------------------: |

|

bool

| C

_Bool

(native) — or use

int32_t

to match GLSL |

_Bool

: 1;

int32_t

: 4 |

_Bool

: 1;

int32_t

: 4 |

_Bool

: 1;

int32_t

: 4 | 4 | 4 | 16 (std140 rounds scalar arrays to vec4) | 4 | 4 | 4 |

|

int

/

uint

|

int32_t

/

uint32_t

| 4 | 4 | 4 | 4 | 4 | 16 | 4 | 4 | 4 |

|

float

|

float

| 4 | 4 | 4 | 4 | 4 | 16 | 4 | 4 | 4 |

|

double

|

double

| 8 | 8 | 8 | 8 | 8 | 32 (rounded to dvec4 alignment) | 8 | 8 | 8 |

|

vec2

/

ivec2

|

float[2]

/

int32_t[2]

| 4 | 8 | 8 | 8 | 8 | 16 | 8 | 8 | 8 |

|

vec3

/

ivec3

|

float[3]

/

int32_t[3]

| 4 | 12 | 12 | 16 | 16 | 16 | 16 | 16 | 16 |

|

vec4

/

ivec4

|

float[4]

/

int32_t[4]

| 4 | 16 | 16 | 16 | 16 | 16 | 16 | 16 | 16 |

|

dvec2

|

double[2]

| 8 | 16 | 16 | 16 | 16 | 32 | 16 | 16 | 16 |

|

dvec3

|

double[3]

| 8 | 24 | 24 | 32 | 32 | 32 | 32 | 32 | 32 |

|

dvec4

|

double[4]

| 8 | 32 | 32 | 32 | 32 | 32 | 32 | 32 | 32 |

|

mat2

(2×2 float, column-major) |

float[2][2]

(2 columns of

vec2

) | 4 | 16 | 8 (column size) | 16 | 16 × 2 = 32 | each column has vec4 as stride (16) | 8 | 8 × 2 = 16 | each column has vec2 as stride (8) |

|

mat3

(3×3 float, column-major) |

float[3][3]

(3 columns of

vec3

) | 4 | 36 | 12 (column size) | 16 | 16 × 3 = 48 | each column has vec4 as stride (16) | 16 | 16 × 3 = 48 | each column has vec3 as stride (16) |

|

mat4

(4×4 float) |

float[4][4]

| 4 | 64 | 16 (column size) | 16 | 16 x 4 = 64 | each column has vec4 as stride (16) | 16 | 16 × 4 = 64 | each column has vec4 as stride (16) |

|

T[]

(Array of T) |

T[]

| alignof(T) | sizeof(T) | sizeof(T) | base_align(T), rounded up to vec4 base align (16 for 32-bit scalars; 32 for 64-bit/double) | occupied per element = rounded stride | base_align(T), rounded up to 16 | base_align(T) | occupied per element = sizeof(T) rounded to alignment | base_align(T) |

|

vec3[]

(Array of vec3) |

float[3][]

| 4 | 12 | 12 | 16 | 16 | 16 | 16 | 16 | 16 |

|

struct

|

struct { ... }

| max(member alignment) | struct size padded to that alignment | sizeof(struct) (padded) | max(member align) rounded up to vec4 (16) | struct size padded to multiple of 16 | sizeof(struct) rounded up to 16 | max(member align) | struct size padded to that alignment | sizeof(struct) (padded to member alignment) |

Scalar Alignment

-

Looks like std430 , but its vectors are even more compact?

-

Also known as (?) The spec doesn't say.

-

-

Core in Vulkan 1.2.

-

This extension allows most storage types to be aligned in

scalaralignment. -

Make sure to set

--scalar-block-layoutwhen running the SPIR-V Validator. -

A big difference is being able to straddle the 16-byte boundary.

-

In GLSL this can be used with

scalarkeyword and extension

-

Extended Alignment (std140)

-

Source .

-

Conservative, padded layout used for uniform blocks.

-

Widely supported.

-

Caveats :

-

"Avoiding usage of vec3"

-

Usually applies to std140, because some hardware vendors seem to not follow the spec strictly. Although, everything should work when using std430.

-

Array of

vec3(ARRAY) :-

Alignment will be 4x of a

float. -

Size will be

alignment * amount of elements.

-

-

-

// Scalars

float -> 4 bytes // for 32-bit IEEE-754

int -> 4 bytes // for 32-bit IEEE-754

uint -> 4 bytes // for 32-bit IEEE-754

bool -> 4 bytes // for 32-bit IEEE-754

// Vectors

// Base alignments

vec2 -> 8 bytes // 2 times the underlying scalar type.

vec3 -> 16 bytes // 4 times the underlying scalar type.

vec4 -> 16 bytes // 4 times the underlying scalar type.

// Arrays

// Size of the element type, rounded up to a multiple of the size of `vec4` (behave like `vec4` slots).

// Arrays of types are not necessarily tightly packed.

// An array of floats in such a block will not be the equivalent to an array of floats in C/C++. Arrays will only match their C/C++ definitions if the type is a multiple of 16 bytes.

// Ex: `float arr[N]` uses 16 bytes per element.

// Matrices

// Treated as arrays of vectors.

// They are column-major by default; you can change it with `layout(row_major)` or `layout(column_major)`.

// Struct

// The biggest struct member, rounded up to multiples of the size of `vec4` (behave like `vec4` slots).

// Struct members are effectively padded so that each member starts on a 16-byte boundary when necessary.

// The struct size will be the space needed by its members.

-

Examples :

layout(std140) uniform U { float a[3]; }; // size = 3 * 16 = 48 bytes

Base Alignment (std430)

-

Allowed usage :

-

SSBOs, Push Constants.

-

KHR_uniform_buffer_standard_layout.-

Core in Vulkan 1.2.

-

Allows the use of

std430memory layout in UBOs. -

These memory layout changes are only applied to

Uniforms.

-

-

-

Core in Vulkan 1.1; all Vulkan 1.1+ devices support relaxed block layout.

-

This extension allows implementations to indicate they can support more variation in block

Offsetdecorations. -

This comes up when using

std430memory layout where avec3(which is 12 bytes) is still defined as a 16 byte alignment. -

With relaxed block layout an application can fit a

floaton either side of thevec3and maintain the 16 byte alignment between them. -

Currently there is no way in GLSL to legally express relaxed block layout, but a developer can use the

--hlsl-offsetswithglslangto produce the desired offsets.

-

-

-

Relaxed layout used for shader-storage blocks and allows much tighter packing.

-

Requires newer GLSL 4.3+ or equivalent support.

// Scalars

float -> 4 bytes // for 32-bit IEEE-754

int -> 4 bytes // for 32-bit IEEE-754

uint -> 4 bytes // for 32-bit IEEE-754

bool -> 4 bytes // for 32-bit IEEE-754

// Vectors

// Base alignments

vec2 -> 8 bytes // 2 times the underlying scalar type.

vec3 -> 16 bytes // 4 times the underlying scalar type.

vec4 -> 16 bytes // 4 times the underlying scalar type.

// Arrays

// array stride is the natural alignment of the element.

// Ex: `float arr[N]` uses 4 bytes per element.

// Matrices

// Treated as arrays of vectors.

// They are column-major by default; you can change it with `layout(row_major)` or `layout(column_major)`.

// Struct

// Struct packing is tighter and follows natural alignments (less padding).

-

Examples :

layout(std430, binding=0) buffer B { float a[3]; }; // size = 3 * 4 = 12 bytes

Comparisons

-

Input Attributes vs Uniform Buffers :

-

"If you want a shader to access a resource that is not on vertex buffers, they must go through a descriptor set".

-

With Vertex Buffers we can pass arbitrary attributes to the vertex shader for each vertex.

-

Including global variables as vertex data would be a waste of memory, and it would require us to update the vertex buffer whenever the transformation changes.

-

The right way to tackle this in Vulkan is to use Resource Descriptors

-

-

Uniform Buffers vs Push Constants :

-

ChatGPT...

-

"Lets say I want to send the time, would it be optimal to send it as a ubo or a push constant? The time should not change between draw calls (it's irrelevant) in the same frame. Seems like a UBO I only need to set it once and every object would benefit from it, but a push constant needs to be send for every object?"

-

Exactly. For your “time” value, a Uniform Buffer is generally the more optimal choice in this scenario.

-

Push Constants

-

You must call

vkCmdPushConstantsfor each command buffer section where shaders need it. -

Since push constants are set per draw/dispatch scope, if you have many objects, you’d be redundantly re-sending the same value (time) multiple times in the same frame.

-

There’s no automatic “shared” state — every pipeline that uses it must get the value pushed explicitly.

-

-

Uniform Buffers

-

You can store the time in a uniform buffer once per frame, bind it once in a descriptor set, and then every draw call will see the same value without re-uploading.

-

Works well for “global” frame data (view/proj matrices, time, frame index, etc.).

-

Binding a pre-allocated UBO in a descriptor set has low overhead and avoids per-draw constant pushing.

-

-

Performance implication:

-

If the data is the same for all draws in a frame, a UBO avoids redundant driver calls and state changes, and makes it easier to keep the command buffer lean. Push constants are better suited for per-object or per-draw small data.

-

-

-

-

Storage Image vs. Storage Buffer :

-

While both storage images and storage buffers allow for read-write access in shaders, they have different use cases:

-

Storage Images :

-

Ideal for 2D or 3D data that benefits from texture operations like filtering or addressing modes.

-

-

Storage Buffers :

-

Better for arbitrary structured data or when you need to access data in a non-uniform pattern.

-

-

-

Texel Buffer vs. Storage Buffer :

-

Texel buffers and storage buffers also have different strengths:

-

Texel Buffers :

-

Provide texture-like access to buffer data, allowing for operations like filtering.

-

-

Storage Buffers :

-

More flexible for general-purpose data storage and manipulation.

-

-

-

Do

-

Do keep constant data small, where 128 bytes is a good rule of thumb.

-

Do use push constants if you do not want to set up a descriptor set/UBO system.

-

Do make constant data directly available in the shader if it is pre-determinable, such as with the use of specialization constants.

-

-

Avoid

-

Avoid indexing in the shader if possible, such as dynamically indexing into

bufferoruniformarrays, as this can disable shader optimisations in some platforms.

-

-

Impact

-

Failing to use the correct method of constant data will negatively impact performance, causing either reduced FPS and/or increased BW and load/store activity.

-

On Mali, register mapped uniforms are effectively free. Any spilling to buffers in memory will increase load/store cache accesses to the per thread uniform fetches.

-

Input Attributes

About

-

The only shader stage in core Vulkan that has an input attribute controlled by Vulkan is the vertex shader stage (

SHADER_STAGE_VERTEX).#version 450 layout(location = 0) in vec3 inPosition; void main() { gl_Position = vec4(inPosition, 1.0); } -

Other shader stages, such as a fragment shader stage, have input attributes, but the values are determined from the output of the previous stages run before it.

-

This involves declaring the interface slots when creating the

VkPipelineand then binding theVkBufferbefore draw time with the data to map. -

Before calling

vkCreateGraphicsPipelinesaVkPipelineVertexInputStateCreateInfostruct will need to be filled out with a list ofVkVertexInputAttributeDescriptionmappings to the shader.VkVertexInputAttributeDescription input = {}; input.location = 0; input.binding = 0; input.format = FORMAT_R32G32B32_SFLOAT; // maps to vec3 input.offset = 0; -

The only thing left to do is bind the vertex buffer and optional index buffer prior to the draw call.

vkBeginCommandBuffer(); // ... vkCmdBindVertexBuffer(); vkCmdDraw(); // ... vkCmdBindVertexBuffer(); vkCmdBindIndexBuffer(); vkCmdDrawIndexed(); // ... vkEndCommandBuffer(); -

Limits :

-

maxVertexInputAttributes -

maxVertexInputAttributeOffset

-

Memory Layout

-

.

.

-

.

.

-

.

.

-

Single binding.

-

-

.

.

-

One binding per attribute.

-

-

One binding or many bindings? It doesn't matter that much. In some cases one is better, etc, don't worry too much about it.

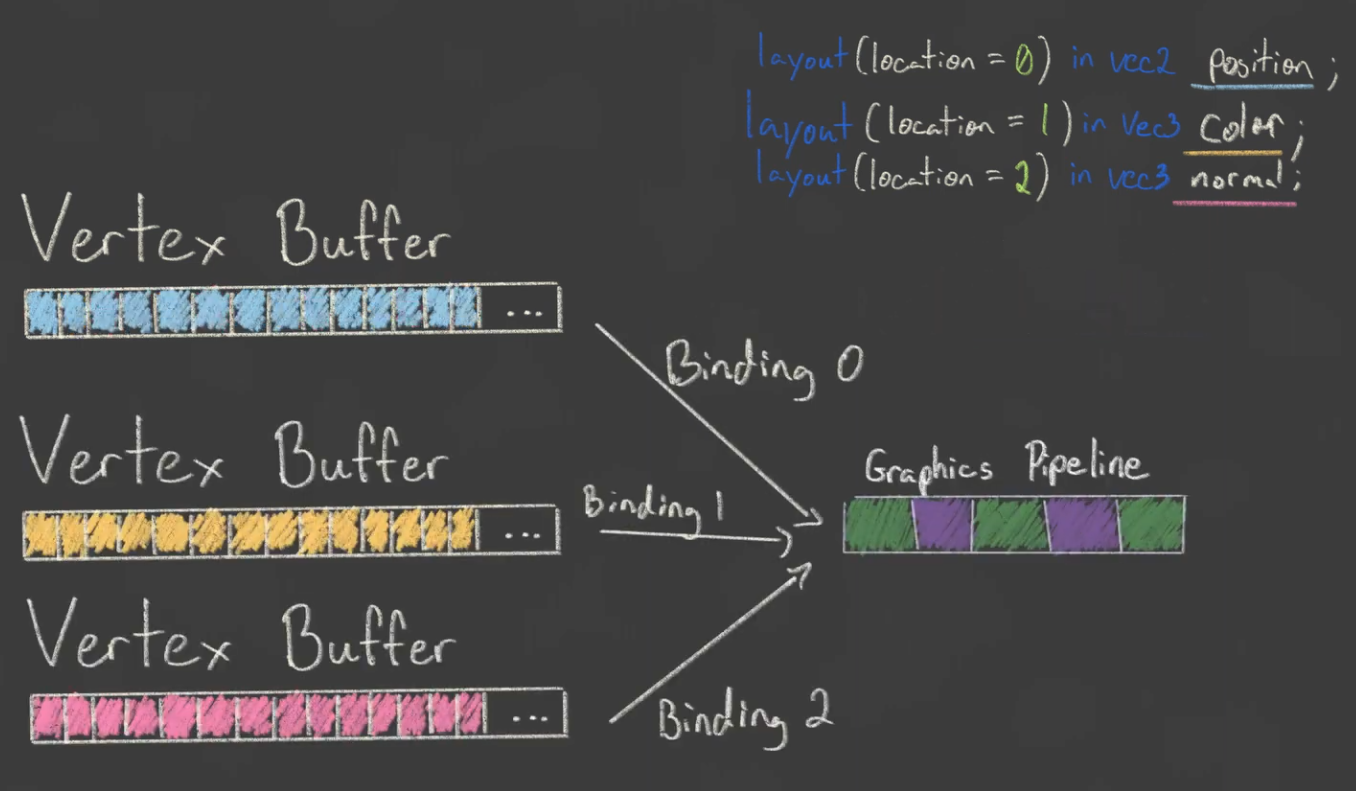

Vertex Input Binding / Vertex Buffer

-

Tell Vulkan how to pass this data format to the vertex shader once it's been uploaded into GPU memory

-

A vertex binding describes at which rate to load data from memory throughout the vertices.

-

It specifies the number of bytes between data entries and whether to move to the next data entry after each vertex or after each instance.

-

VkVertexInputBindingDescription.-

binding-

Specifies the index of the binding in the array of bindings.

-

-

stride-

Specifies the number of bytes from one entry to the next.

-

-

inputRate-

VERTEX_INPUT_RATE_VERTEX-

Move to the next data entry after each vertex.

-

-

VERTEX_INPUT_RATE_INSTANCE-

Move to the next data entry after each instance.

-

-

We're not going to use instanced rendering, so we'll stick to per-vertex data.

-

-

-

VkVertexInputAttributeDescription-

Describes how to handle vertex input.

-

An attribute description struct describes how to extract a vertex attribute from a chunk of vertex data originating from a binding description.

-

We have two attributes, position and color, so we need two attribute description structs.

-

binding-

Tells Vulkan from which binding the per-vertex data comes.

-

-

location-

References the

locationdirective of the input in the vertex shader.-

The input in the vertex shader with location

0is the position, which has two 32-bit float components.

-

-

-

format-

Describes the type of data for the attribute.

-

Implicitly defines the byte size of attribute data.

-

A bit confusingly, the formats are specified using the same enumeration as color formats.

-

The following shader types and formats are commonly used together:

-

float:FORMAT_R32_SFLOAT -

vec2:FORMAT_R32G32_SFLOAT -

vec3:FORMAT_R32G32B32_SFLOAT -

vec4:FORMAT_R32G32B32A32_SFLOAT

-

-

As you can see, you should use the format where the amount of color channels matches the number of components in the shader data type.

-

It is allowed to use more channels than the number of components in the shader, but they will be silently discarded.

-

If the number of channels is lower than the number of components, then the BGA components will use default values of

(0, 0, 1).

-

-

The color type (

SFLOAT,UINT,SINT) and bit width should also match the type of the shader input. See the following examples:-

ivec2:FORMAT_R32G32_SINT, a 2-component vector of 32-bit signed integers -

uvec4:FORMAT_R32G32B32A32_UINT, a 4-component vector of 32-bit unsigned integers -

double:FORMAT_R64_SFLOAT, a double-precision (64-bit) float

-

-

-

offset-

Specifies the number of bytes since the start of the per-vertex data to read from.

-

-

-

Graphics Pipeline Vertex Input Binding :

-

For the following vertices:

Vertex :: struct { pos: eng.Vec2, color: eng.Vec3, } vertices := [?]Vertex{ { { 0.0, -0.5 }, { 1.0, 0.0, 0.0 } }, { { 0.5, 0.5 }, { 0.0, 1.0, 0.0 } }, { { -0.5, 0.5 }, { 0.0, 0.0, 1.0 } }, } -

We setup this in the Graphics Pipeline creation:

vertex_binding_descriptor := vk.VertexInputBindingDescription{ binding = 0, stride = size_of(Vertex), inputRate = .VERTEX, } vertex_attribute_descriptor := [?]vk.VertexInputAttributeDescription{ { binding = 0, location = 0, format = .R32G32_SFLOAT, offset = cast(u32)offset_of(Vertex, pos), }, { binding = 0, location = 1, format = .R32G32B32_SFLOAT, offset = cast(u32)offset_of(Vertex, color), }, } vertex_input_create_info := vk.PipelineVertexInputStateCreateInfo { sType = .PIPELINE_VERTEX_INPUT_STATE_CREATE_INFO, vertexBindingDescriptionCount = 1, pVertexBindingDescriptions = &vertex_binding_descriptor, vertexAttributeDescriptionCount = len(vertex_attribute_descriptor), pVertexAttributeDescriptions = &vertex_attribute_descriptor[0], } -

The pipeline is now ready to accept vertex data in the format of the

verticescontainer and pass it on to our vertex shader.

-

-

Vertex Buffer :

-

If you run the program now with validation layers enabled, you'll see that it complains that there is no vertex buffer bound to the binding.

-

The next step is to create a vertex buffer and move the vertex data to it so the GPU is able to access it.

-

Creating :

-

Follow the tutorial for creating a buffer, specifying

BUFFER_USAGE_VERTEX_BUFFERas theBufferCreateInfousage.

-

-

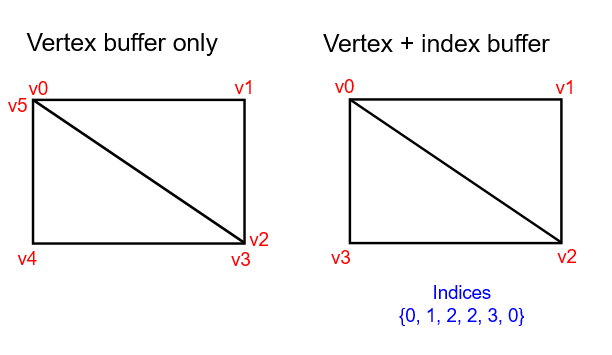

Index Buffer

-

Motivation :

-

Drawing a rectangle takes two triangles, which means that we need a vertex buffer with six vertices. The problem is that the data of two vertices needs to be duplicated, resulting in redundancies.

-

The solution to this problem is to use an index buffer.

-

An index buffer is essentially an array of pointers into the vertex buffer.

-

It allows you to reorder the vertex data, and reuse existing data for multiple vertices.

-

.

.

-

The first three indices define the upper-right triangle, and the last three indices define the vertices for the bottom-left triangle.

-

-

It is possible to use either

uint16_toruint32_tfor your index buffer depending on the number of entries invertices. We can stick touint16_tfor now because we’re using less than 65535 unique vertices. -

Just like the vertex data, the indices need to be uploaded into a

VkBufferfor the GPU to be able to access them.

-

-

Creating :

-

Follow the tutorial for creating a buffer, specifying

BUFFER_USAGE_INDEX_BUFFERas theBufferCreateInfousage.

-

-

Using :

-

We first need to bind the index buffer, just like we did for the vertex buffer.

-

The difference is that you can only have a single index buffer. It’s unfortunately not possible to use different indices for each vertex attribute, so we do still have to completely duplicate vertex data even if just one attribute varies.

-

An index buffer is bound with

vkCmdBindIndexBufferwhich has the index buffer, a byte offset into it, and the type of index data as parameters.-

As mentioned before, the possible types are

INDEX_TYPE_UINT16andINDEX_TYPE_UINT32.

-

-

Just binding an index buffer doesn’t change anything yet, we also need to change the drawing command to tell Vulkan to use the index buffer.

-

Remove the

vkCmdDrawline and replace it withvkCmdDrawIndexed.

-

Push Constants

-

A Push Constant is a small bank of values accessible in shaders.

-

These are designed for small amount (a few dwords) of high frequency data to be updated per-recording of the command buffer.

-

So that the shader can understand where this data will be sent, we specify a special push constants

<layout>in our shader code.

layout(push_constant) uniform MeshData {

mat4 model;

} mesh_data;

-

Choosing to use Push Constants :

-

In early implementations of Vulkan on Arm Mali, this was usually the fastest way of pushing data to your shaders. In more recent times, we have observed on Mali devices that overall they can be slower. If performance is something you are trying to maximise on Mali devices, descriptor sets may be the way to go. However, other devices may still favour push constants.

-

Having said this, descriptor sets are one of the more complex features of Vulkan, making the convenience of push constants still worth considering as a go-to method, especially if working with trivial data.

-

-

Limits :

-

maxPushConstantsSize-

guaranteed at least

128bytes on all devices. -

If you're using Vulkan 1.4 the minimum was increased to 256.

-

-

Offsets

-

.

.

-

Ex1 :

layout(push_constant, std430) uniform pc { layout(offset = 32) vec4 data; }; layout(location = 0) out vec4 outColor; void main() { outColor = data; }VkPushConstantRange range = {}; range.stageFlags = SHADER_STAGE_FRAGMENT; range.offset = 32; range.size = 16;

Updating

-

Ex1 :

-

Push constants can be incrementally updated over the course of a command buffer.

// vkBeginCommandBuffer() vkCmdBindPipeline(); vkCmdPushConstants(offset: 0, size: 16, value = [0, 0, 0, 0]); vkCmdDraw(); // values = [0, 0, 0, 0] vkCmdPushConstants(offset: 4, size: 8, value = [1 ,1]); vkCmdDraw(); // values = [0, 1, 1, 0] vkCmdPushConstants(offset: 8, size: 8, value = [2, 2]); vkCmdDraw(); // values = [0, 1, 2, 2] // vkEndCommandBuffer()-

Interesting how old values are kept. Values that were not changed are preserved.

-

Lifetime

-

vkCmdPushConstantsis tied to theVkPipelineLayoutusage and therefore why they must match before a call to a command such asvkCmdDraw(). -

Because push constants are not tied to descriptors, the use of

vkCmdBindDescriptorSetshas no effect on the lifetime or pipeline layout compatibility of push constants. -

The same way it is possible to bind descriptor sets that are never used by the shader, the same is true for push constants.

CPU Performance

-

Push one struct once per draw instead of many separate vkCmdPushConstants calls (one call writing a small struct is far cheaper).

-

Many small state changes cause the driver to update internal tables, validate, or patch commands — that’s CPU work and cannot be avoided without batching.

-

Observations :

-

5 push calls were taking 7.65us. I groupped all them in 1 single push call, now taking 3.08us.

-

This was substancial, as at the time I was issuing this push calls hundreds of time per frame; I later reduced this number, but anyway, could be significant.

-

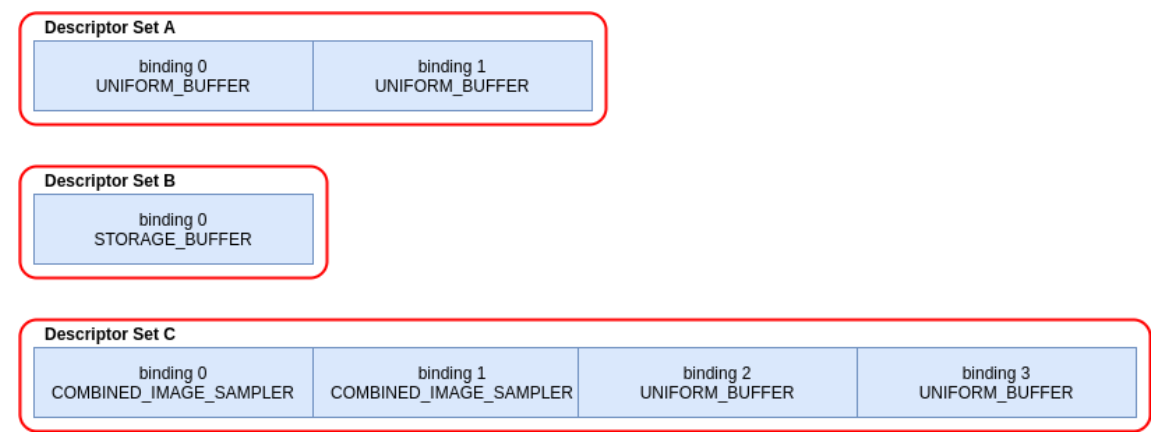

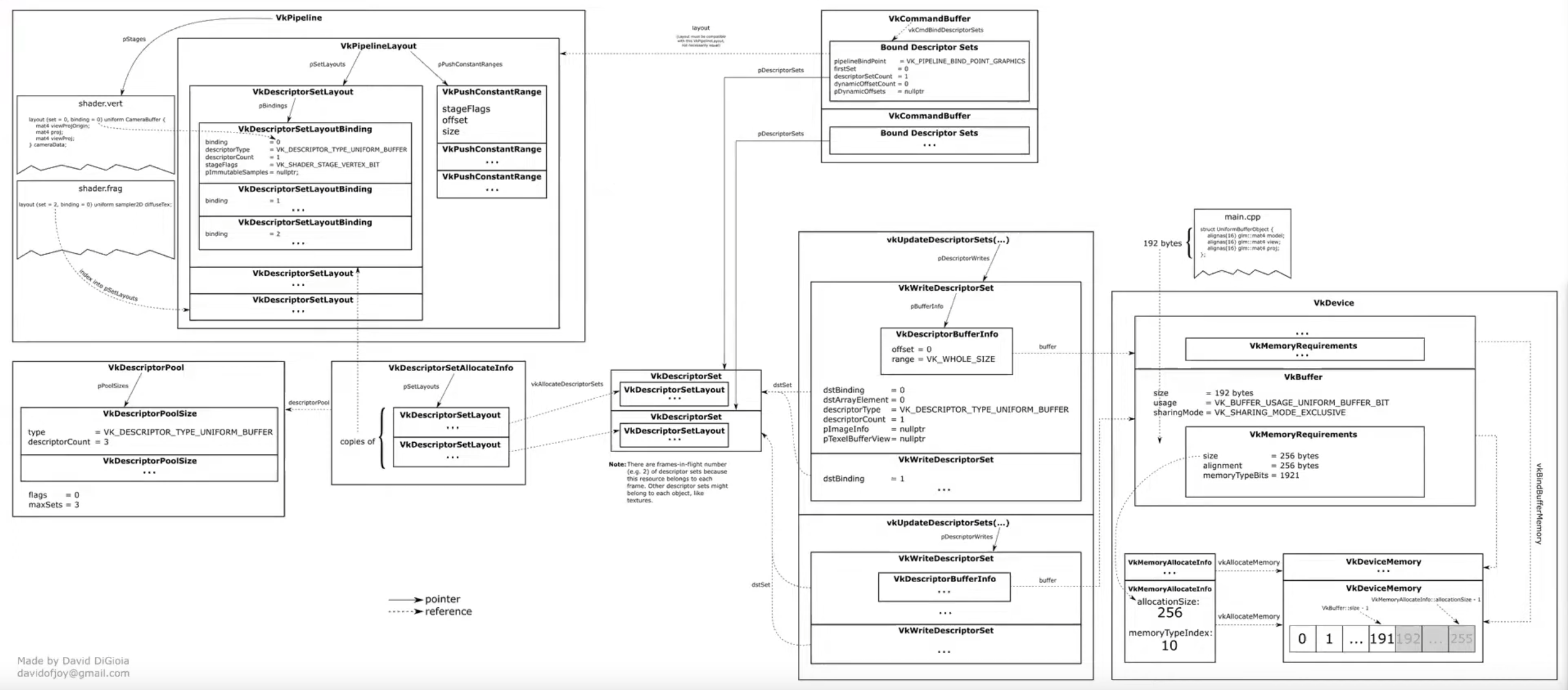

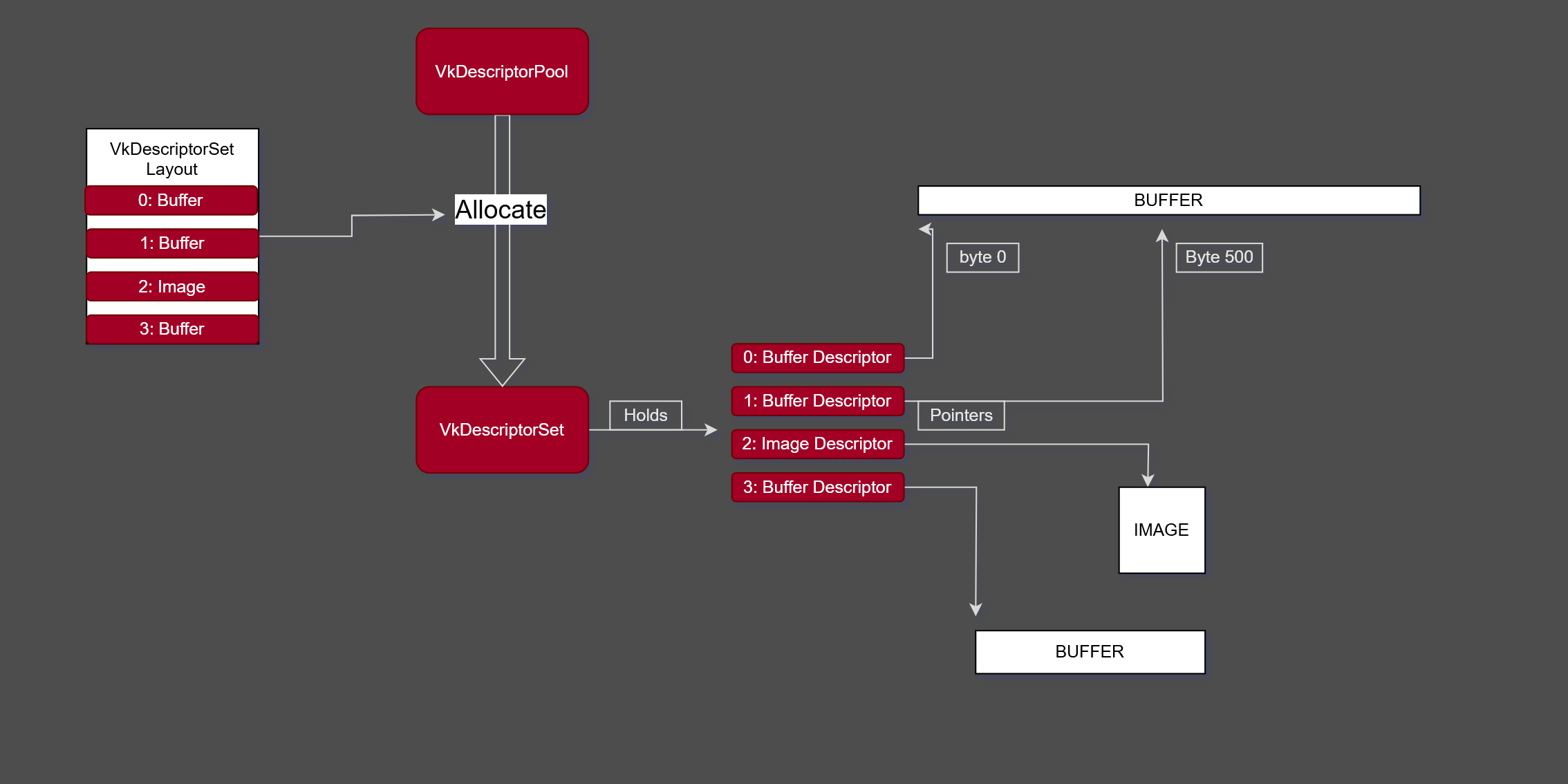

Descriptors Sets

About

-

VkDescriptorSet -

One Descriptor -> One Resource.

-

They are always organized in Descriptor Sets.

-

One or more descriptors contained.

-

Combine descriptors which are used in conjunction.

-

-

A handle or pointer into a resource.

-

Note that is not just a pointer, but a pointer + metadata.

-

-

A core mechanism used to bind resources to shaders.

-

Holds the binding information that connects shader inputs to data such as

VkBufferresources andVkImagetextures. -

Think of it as a set of GPU-side pointers that you bind once.

-

The internal representation of a descriptor set is whatever the driver wants it to be.

-

Content :

-

Where to find a Resource.

-

Usage type of a Resource.

-

Offsets, sometimes.

-

Some metadata, sometimes.

-

-

Example :

-

.

.

// Note - only set 0 and 2 are used in this shader layout(set = 0, binding = 0) uniform sampler2D myTextureSampler; layout(set = 0, binding = 2) uniform uniformBuffer0 { float someData; } ubo_0; layout(set = 0, binding = 3) uniform uniformBuffer1 { float moreData; } ubo_1; layout(set = 2, binding = 0) buffer storageBuffer { float myResults; } ssbo; -

-

API :

-

.

.

-

.

.

-

-

Limits :

-

maxBoundDescriptorSets -

Per stage limit

-

maxPerStageDescriptorSamplers -

maxPerStageDescriptorUniformBuffers -

maxPerStageDescriptorStorageBuffers -

maxPerStageDescriptorSampledImages -

maxPerStageDescriptorStorageImages -

maxPerStageDescriptorInputAttachments -

Per type limit

-

maxPerStageResources -

maxDescriptorSetSamplers -

maxDescriptorSetUniformBuffers -

maxDescriptorSetUniformBuffersDynamic -

maxDescriptorSetStorageBuffers -

maxDescriptorSetStorageBuffersDynamic -

maxDescriptorSetSampledImages -

maxDescriptorSetStorageImages -

maxDescriptorSetInputAttachments -

VkPhysicalDeviceDescriptorIndexingPropertiesif using Descriptor Indexing -

VkPhysicalDeviceInlineUniformBlockPropertiesEXTif using Inline Uniform Block

-

-

Visual explanation {0:00 -> 5:35} .

-

Nice.

-

The rest of the video is meh.

-

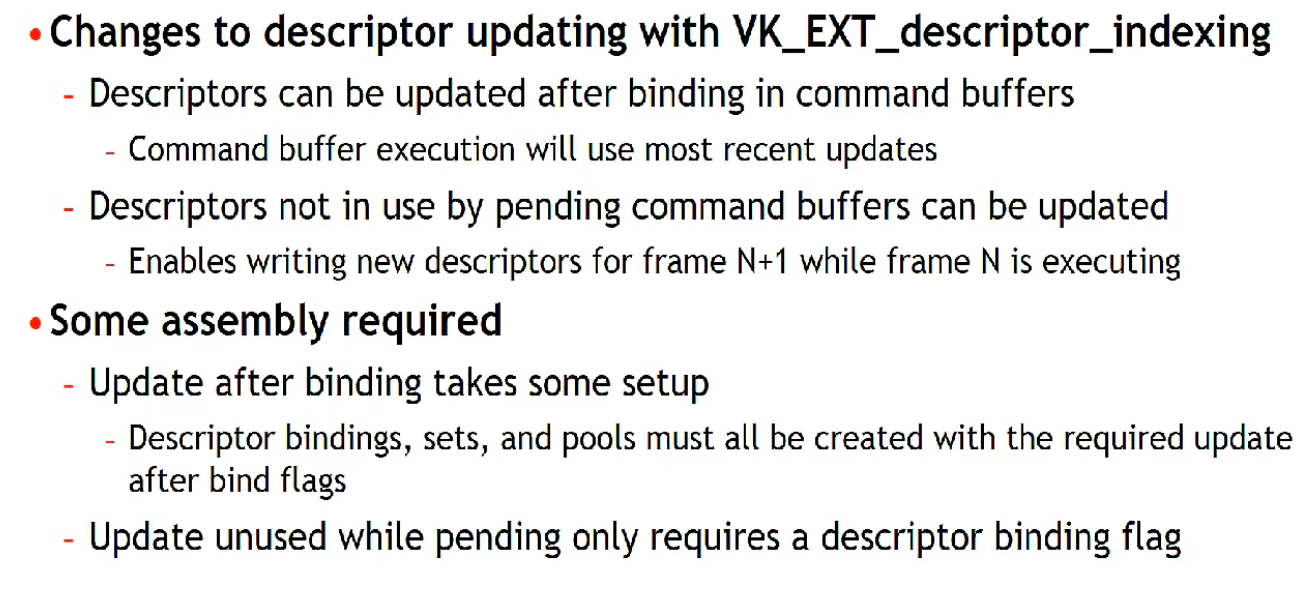

Difficulties

-

Problems :

-

"They are not bad but they very much force a specific rendering style: you have triple / quadrupled nested for loops, binding your things based on usage and then rebind descriptor sets as needed."

-

"Many of us are moving towards bindless rendering, where you just bind everything once in one big descriptor set, and then index into it at will; tho, Vulkan 1.0 does not greatly support, and also the descriptor count for it was quite low".

-

Cannot update descriptors after binding in a command buffer.

-

All descriptors must be valid, even if not used.

-

Descriptor arrays must be sampled uniformly.

-

Different invocations can’t use different indices.

-

Can sample “dynamically uniform”, e.g. runtime-based index.

-

-

Upper limit on descriptor counts.

-

Discourages GPU-driven rendering architectures.

-

Due to the need to set up descriptor sets per draw call it’s hard to adapt any of the aforementioned schemes to GPU-based culling or command submission.

-

-

-

Solutions :

-

Descriptor Indexing :

-

Available in 1.3, optional in 1.2, or

EXT_descriptor_indexing. -

Update descriptors after binding.

-

Update unused descriptors.

-

Relax requirement that all descriptors must be valid, even if unused.

-

Non-uniform array indexing.

-

-

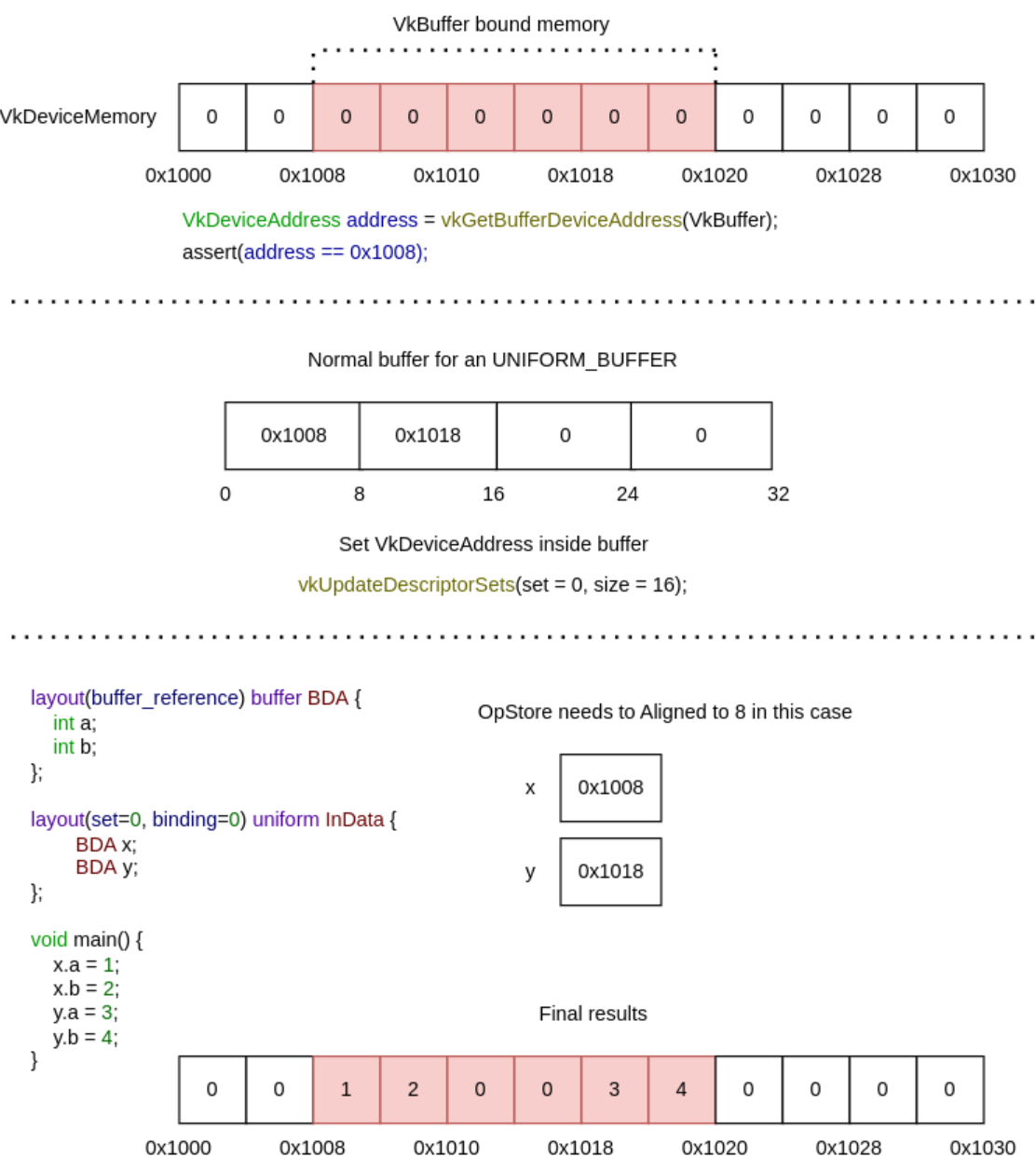

Buffer Device Address :

-

Available in 1.3, optional in 1.2, or

KHR_buffer_device_address. -

Directly access buffers through addresses without a descriptor.

-

See [[#Physical Storage Buffer]] below.

-

-

Descriptor Buffers – EXT_descriptor_buffer :

-

Manage descriptors directly.

-

Similar to D3D12’s descriptor model.

-

-

Allocation

-

A scheme that works well is to use free lists of descriptor set pools; whenever you need a descriptor set pool, you allocate one from the free list and use it for subsequent descriptor set allocations in the current frame on the current thread. Once you run out of descriptor sets in the current pool, you allocate a new pool. Any pools that were used in a given frame need to be kept around; once the frame has finished rendering, as determined by the associated fence objects, the descriptor set pools can reset via

vkResetDescriptorPooland returned to free lists. While it’s possible to free individual descriptors from a pool viaDESCRIPTOR_POOL_CREATE_FREE_DESCRIPTOR_SET, this complicates the memory management on the driver side and is not recommended. -

When a descriptor set pool is created, application specifies the maximum number of descriptor sets allocated from it, as well as the maximum number of descriptors of each type that can be allocated from it. In Vulkan 1.1, the application doesn’t have to handle accounting for these limits – it can just call vkAllocateDescriptorSets and handle the error from that call by switching to a new descriptor set pool. Unfortunately, in Vulkan 1.0 without any extensions, it’s an error to call vkAllocateDescriptorSets if the pool does not have available space, so application must track the number of sets and descriptors of each type to know beforehand when to switch to a different pool.

-

Different pipeline objects may use different numbers of descriptors, which raises the question of pool configuration. A straightforward approach is to create all pools with the same configuration that uses the worst-case number of descriptors for each type – for example, if each set can use at most 16 texture and 8 buffer descriptors, one can allocate all pools with maxSets=1024, and pool sizes 16 1024 for texture descriptors and 8 1024 for buffer descriptors. This approach can work but in practice it can result in very significant memory waste for shaders with different descriptor count – you can’t allocate more than 1024 descriptor sets out of a pool with the aforementioned configuration, so if most of your pipeline objects use 4 textures, you’ll be wasting 75% of texture descriptor memory.

-

Strategies :

-

Two alternatives that provide a better balance memory use:

-

Measure an average number of descriptors used in a shader pipeline per type for a characteristic scene and allocate pool sizes accordingly. For example, if in a given scene we need 3000 descriptor sets, 13400 texture descriptors, and 1700 buffer descriptors, then the average number of descriptors per set is 4.47 textures (rounded up to 5) and 0.57 buffers (rounded up to 1), so a reasonable configuration of a pool is maxSets=1024, 5*1024 texture descriptors, 1024 buffer descriptors. When a pool is out of descriptors of a given type, we allocate a new one – so this scheme is guaranteed to work and should be reasonably efficient on average.

-

Group shader pipeline objects into size classes, approximating common patterns of descriptor use, and pick descriptor set pools using the appropriate size class. This is an extension of the scheme described above to more than one size class. For example, it’s typical to have large numbers of shadow/depth prepass draw calls, and large numbers of regular draw calls in a scene – but these two groups have different numbers of required descriptors, with shadow draw calls typically requiring 0 to 1 textures per set and 0 to 1 buffers when dynamic buffer offsets are used. To optimize memory use, it’s more appropriate to allocate descriptor set pools separately for shadow/depth and other draw calls. Similarly to general-purpose allocators that can have size classes that are optimal for a given application, this can still be managed in a lower-level descriptor set management layer as long as it’s configured with application specific descriptor set usages beforehand.

-

Implementation

-

Descriptors are like pointers, so as any pointer they need to allocate space to live ahead of time.

-

How many :

-

Its possible to have 1 very big descriptor pool that handles the entire engine, but that means we need to know what descriptors we will be using for everything ahead of time.

-

That can be very tricky to do at scale. Instead, we will keep it simpler, and we will have multiple descriptor pools for different parts of the project , and try to be more accurate with them.

-

I don't know what that actually means in practice.

-

-

-

-

Maintains a pool of descriptors, from which descriptor sets are allocated.

-

Descriptor pools are externally synchronized, meaning that the application must not allocate and/or free descriptor sets from the same pool in multiple threads simultaneously.

-

They are very opaque.

-

-

Contains a type of descriptor (same

VkDescriptorTypeas on the bindings above ), alongside a ratio to multiply themaxSetsparameter is. -

This lets us directly control how big the pool is going to be.

maxSetscontrols how manyVkDescriptorSetswe can create from the pool in total, and the pool sizes give how many individual bindings of a given type are owned. -

flags.-

Is a bitmask of VkDescriptorPoolCreateFlagBits specifying certain supported operations on the pool.

-

DESCRIPTOR_POOL_CREATE_FREE_DESCRIPTOR_SET-

Determines if individual descriptor sets can be freed or not:

-

We're not going to touch the descriptor set after creating it, so we don't need this flag. You can leave

flagsto its default value of0.

-

-

DESCRIPTOR_POOL_CREATE_UPDATE_AFTER_BIND-

Descriptor pool creation may fail with the error

ERROR_FRAGMENTATIONif the total number of descriptors across all pools (including this one) created with this bit set exceedsmaxUpdateAfterBindDescriptorsInAllPools, or if fragmentation of the underlying hardware resources occurs.

-

-

-

maxSets-

Is the maximum number of descriptor sets that can be allocated from the pool.

-

-

poolSizeCount-

Is the number of elements in

pPoolSizes.

-

-

pPoolSizes-

Is a pointer to an array of VkDescriptorPoolSize structures, each containing a descriptor type and number of descriptors of that type to be allocated in the pool.

-

If multiple

VkDescriptorPoolSizestructures containing the same descriptor type appear in thepPoolSizesarray then the pool will be created with enough storage for the total number of descriptors of each type. -

-

type-

Is the type of descriptor.

-

-

descriptorCount-

Is the number of descriptors of that type to allocate. If

typeisDESCRIPTOR_TYPE_INLINE_UNIFORM_BLOCKthendescriptorCountis the number of bytes to allocate for descriptors of this type.

-

-

-

-

-

-

-

descriptorPool-

Is the pool which the sets will be allocated from.

-

-

descriptorSetCount-

Determines the number of descriptor sets to be allocated from the pool.

-

-

pSetLayouts-

Is a pointer to an array of descriptor set layouts, with each member specifying how the corresponding descriptor set is allocated.

-

-

-

-

The allocated descriptor sets are returned in

pDescriptorSets. -

When a descriptor set is allocated, the initial state is largely uninitialized and all descriptors are undefined, with the exception that samplers with a non-null

pImmutableSamplersare initialized on allocation. -

Descriptors also become undefined if the underlying resource or view object is destroyed.

-

Descriptor sets containing undefined descriptors can still be bound and used, subject to the following conditions:

-

For descriptor set bindings created with the

PARTIALLY_BOUNDbit set:-

All descriptors in that binding that are dynamically used must have been populated before the descriptor set is consumed .

-

-

For descriptor set bindings created without the

PARTIALLY_BOUNDbit set:-

All descriptors in that binding that are statically used must have been populated before the descriptor set is consumed .

-

-

Descriptor bindings with descriptor type of

DESCRIPTOR_TYPE_INLINE_UNIFORM_BLOCKcan be undefined when the descriptor set is consumed ; though values in that block will be undefined. -

Entries that are not used by a pipeline can have undefined descriptors.

-

-

pAllocateInfo-

Is a pointer to a VkDescriptorSetAllocateInfo structure describing parameters of the allocation.

-

-

pDescriptorSets-

Is a pointer to an array of VkDescriptorSet handles in which the resulting descriptor set objects are returned.

-

-

-

Multithreading :

-

Descriptor pools are externally synchronized, meaning that the application must not allocate and/or free descriptor sets from the same pool in multiple threads simultaneously.

-

Command Pools are used to allocate, free, reset, and update descriptor sets. By creating multiple descriptor pools, each application host thread is able to manage a descriptor set in each descriptor pool at the same time.

-

Best Practices

-

Don’t allocate descriptor sets if nothing in the set changed. In the model with slots that are shared between different stages, this can mean that if no textures are set between two draw calls, you don’t need to allocate the descriptor set with texture descriptors.

-

Don't allocate descriptor sets from descriptor pools on performance critical code paths.

-

Don't allocate, free or update descriptor sets every frame, unless it is necessary.

-

Don't set

DESCRIPTOR_POOL_CREATE_FREE_DESCRIPTOR_SETif you do not need to free individual descriptor sets.-

Setting

DESCRIPTOR_POOL_CREATE_FREE_DESCRIPTOR_SETmay prevent the implementation from using a simpler (and faster) allocator.

-

Descriptor Types

Overview

-

For buffers, application must choose between uniform and storage buffers, and whether to use dynamic offsets or not. Uniform buffers have a limit on the maximum addressable size – on desktop hardware, you get up to 64 KB of data, however on mobile hardware some GPUs only provide 16 KB of data (which is also the guaranteed minimum by the specification). The buffer resource can be larger than that, but shader can only access this much data through one descriptor.

-

On some hardware, there is no difference in access speed between uniform and storage buffers, however for other hardware depending on the access pattern uniform buffers can be significantly faster. Prefer uniform buffers for small to medium sized data especially if the access pattern is fixed (e.g. for a buffer with material or scene constants). Storage buffers are more appropriate when you need large arrays of data that need to be larger than the uniform buffer limit and are indexed dynamically in the shader.

-

For textures, if filtering is required, there is a choice of combined image/sampler descriptor (where, like in OpenGL, descriptor specifies both the source of the texture data, and the filtering/addressing properties), separate image and sampler descriptors (which maps better to Direct3D 11 model), and image descriptor with an immutable sampler descriptor, where the sampler properties must be specified when pipeline object is created.

-

The relative performance of these methods is highly dependent on the usage pattern; however, in general immutable descriptors map better to the recommended usage model in other newer APIs like Direct3D 12, and give driver more freedom to optimize the shader. This does alter renderer design to a certain extent, making it necessary to implement certain dynamic portions of the sampler state, like per-texture LOD bias for texture fade-in during streaming, using shader ALU instructions.

Storage Images

-

DESCRIPTOR_TYPE_STORAGE_IMAGE -

Is a descriptor type that allows shaders to read from and write to an image without using a fixed-function graphics pipeline.

-

This is particularly useful for compute shaders and advanced rendering techniques.

// FORMAT_R32_UINT

layout(set = 0, binding = 0, r32ui) uniform uimage2D storageImage;

// example usage for reading and writing in GLSL

const uvec4 texel = imageLoad(storageImage, ivec2(0, 0));

imageStore(storageImage, ivec2(1, 1), texel);

-

Use cases :

-

Image Processing :

-

Storage images are ideal for image processing tasks like filters, blurs, and other post-processing effects.

-

-

Sampler

-

DESCRIPTOR_TYPE_SAMPLERandDESCRIPTOR_TYPE_SAMPLED_IMAGE.

layout(set = 0, binding = 0) uniform sampler samplerDescriptor;

layout(set = 0, binding = 1) uniform texture2D sampledImage;

// example usage of using texture() in GLSL

vec4 data = texture(sampler2D(sampledImage, samplerDescriptor), vec2(0.0, 0.0));

Combined Image Sampler

-

DESCRIPTOR_TYPE_COMBINED_IMAGE_SAMPLER -

On some implementations, it may be more efficient to sample from an image using a combination of sampler and sampled image that are stored together in the descriptor set in a combined descriptor.

layout(set = 0, binding = 0) uniform sampler2D combinedImageSampler;

// example usage of using texture() in GLSL

vec4 data = texture(combinedImageSampler, vec2(0.0, 0.0));

Uniform Buffer / UBO (Uniform Buffer Object)

-

DESCRIPTOR_TYPE_UNIFORM_BUFFER -

Uniform buffers can also have dynamic offsets at bind time (

DESCRIPTOR_TYPE_UNIFORM_BUFFER_DYNAMIC).

layout(set = 0, binding = 0) uniform uniformBuffer {

float a;

int b;

} ubo;

// example of reading from UBO in GLSL

int x = ubo.b + 1;

vec3 y = vec3(ubo.a);

-

Uniform Buffers commonly use

std140layout (strict alignment rules, predictable padding).-

Source: ChatGPT. I want to confirm.

-

/* UBO: small read-only data (std140) */

layout(set = 0, binding = 0, std140) uniform SceneParams {

mat4 viewProj;

vec4 lightPos;

float time;

} scene;

-

UBO (Uniform Buffer Object) :

-

“Uniform buffer object” is more of an OpenGL-era name, but some Vulkan tutorials and developers still use it informally to mean the same thing — the buffer that holds uniform data.

-

Storage Buffer / SSBO (Shader Storage Buffer Object)

-

DESCRIPTOR_TYPE_STORAGE_BUFFER -

GLSL uses distinct address spaces:

uniform→ UBO,buffer→ SSBO. -

Use

std430layout by default (tighter packing, fewer padding requirements). -

SSBO (Shader Storage Buffer Object) is a OpenGL term.

// Implicit std430 (default)

layout(set = 0, binding = 0) buffer storageBuffer {

float a;

int b;

} ssbo;

// Explicit std430

layout(set = 0, binding = 1, std430) buffer ParticleData {

vec4 pos[];

} particles;

// Reading and writing to a SSBO in GLSL

ssbo.a = ssbo.a + 1.0;

ssbo.b = ssbo.b + 1;

-

BufferBlockandUniformwould have been seen prior toKHR_storage_buffer_storage_class. -

Storage buffers can also have dynamic offsets at bind time

DESCRIPTOR_TYPE_STORAGE_BUFFER_DYNAMIC. -

Why SSBO for dynamic arrays :

-

std430allows tight packing and runtime-sized arrays(T data[]), which is ideal for dynamic-length storage. -

SSBOs allow arbitrary indexing, read/write, and atomics.

-

maxStorageBufferRange is usually much larger than

maxUniformBufferRange. -

You can use

*_DYNAMICdescriptors to bind multiple subranges of one large backing buffer cheaply.

-

-

Many arrays :

-

A buffer block may contain multiple arrays, but only the last member of the block may be a runtime-sized (unsized) array

T x[]. All other arrays must be fixed-size (compile-time constant) or you must implement sizing/offsets yourself.-

This is invalid , even with descriptor indexing:

layout(std430, set = 0, binding = 0) buffer FixedArrays { vec4 A[]; vec2 B[]; mat4 C[]; some_struct D[]; } fixedArrays; -

-

Use a

uint x[]:-

32-bit words; simplest and portable.

-

This is effectively an untyped byte/word blob stored in the SSBO and you manually reinterpret (cast) it in the shader

layout(std430, set = 0, binding = 0) buffer PackedBytes { uint countA; // number of A elements uint offsetA; // offset into data[] in uint words uint countB; uint offsetB; // offset into data[] in uint words uint countC; uint offsetC; uint data[]; // payload in 32-bit words } pb; // helpers float readFloat(uint baseWordIndex) { return uintBitsToFloat(pb.data[baseWordIndex]); } vec2 readVec2(uint baseWordIndex) { return vec2( uintBitsToFloat(pb.data[baseWordIndex + 0]), uintBitsToFloat(pb.data[baseWordIndex + 1]) ); } vec3 readVec3(uint baseWordIndex) { return vec3( uintBitsToFloat(pb.data[baseWordIndex + 0]), uintBitsToFloat(pb.data[baseWordIndex + 1]), uintBitsToFloat(pb.data[baseWordIndex + 2]) ); } vec4 readVec4(uint baseWordIndex) { return vec4( uintBitsToFloat(pb.data[baseWordIndex + 0]), uintBitsToFloat(pb.data[baseWordIndex + 1]), uintBitsToFloat(pb.data[baseWordIndex + 2]), uintBitsToFloat(pb.data[baseWordIndex + 3]) ); } mat4 readMat4(uint baseWordIndex) { // mat4 stored column-major as 16 floats (4 columns of vec4) return mat4( readVec4(baseWordIndex + 0), readVec4(baseWordIndex + 4), readVec4(baseWordIndex + 8), readVec4(baseWordIndex + 12) ); } -

-

Use a

vec4 x[]:-

128-bit blocks; simpler alignment for vec4/mat4 data.

// Pack everything into vec4 blocks for simple alignment layout(std430, set = 0, binding = 0) buffer Packed { uint countA; uint offsetA; // in vec4-blocks uint countB; uint offsetB; // in vec4-blocks uint countC; uint offsetC; // in vec4-blocks uint countD; uint offsetD; // in vec4-blocks vec4 blocks[]; // single runtime-sized array (last member) } packed; // helpers vec4 getA(uint i) { return packed.blocks[packed.offsetA + i]; } vec2 getB(uint i) { return packed.blocks[packed.offsetB + i].xy; // we store each B in one vec4 block } mat4 getC(uint i) { uint base = packed.offsetC + i * 4; // mat4 occupies 4 vec4 blocks return mat4(packed.blocks[base + 0], packed.blocks[base + 1], packed.blocks[base + 2], packed.blocks[base + 3]); } // for some_struct D that we store as 1 vec4 per element: some_struct getD(uint i) { vec4 v = packed.blocks[packed.offsetD + i]; // decode v -> some_struct fields } -

-

Use many SSBOs:

layout(std430, set=0, binding=0) buffer BufA { vec4 A[]; } bufA; layout(std430, set=0, binding=1) buffer BufB { vec2 B[]; } bufB; layout(std430, set=0, binding=2) buffer BufC { mat4 C[]; } bufC; layout(std430, set=0, binding=3) buffer BufD { some_struct D[]; } bufD;

-

Texel Buffer

-

Texel buffers are a way to access buffer data with texture-like operations in shaders.

-

-

The format specified in the shader (SPIR-V Image Format) must exactly match the format used when creating the VkImageView (Vulkan Format).

-

Require exact format matching between the shader and the view. The views must always match the shader exactly.

-

-

Uniform Texel Buffer :

-

DESCRIPTOR_TYPE_UNIFORM_TEXEL_BUFFER -

Read-only access.

layout(set = 0, binding = 0) uniform textureBuffer uniformTexelBuffer; // example of reading texel buffer in GLSL vec4 data = texelFetch(uniformTexelBuffer, 0);-

Use cases :

-

Lookup Tables :

-

Uniform texel buffers are useful for implementing lookup tables that need to be accessed with texture-like operations.

-

-

-

-

Storage Texel Buffer :

-

DESCRIPTOR_TYPE_STORAGE_TEXEL_BUFFER -

Read-write access.

// FORMAT_R8G8B8A8_UINT layout(set = 0, binding = 0, rgba8ui) uniform uimageBuffer storageTexelBuffer; // example of reading and writing texel buffer in GLSL int offset = int(gl_GlobalInvocationID.x); vec4 data = imageLoad(storageTexelBuffer, offset); imageStore(storageTexelBuffer, offset, uvec4(0));-

Use cases :

-

Particle Systems :

-

Storage texel buffers can be used to store and update particle data in a compute shader, which can then be read by a vertex shader for rendering.

-

-

-

Input Attachment

-

DESCRIPTOR_TYPE_INPUT_ATTACHMENT

layout (input_attachment_index = 0, set = 0, binding = 0) uniform subpassInput inputAttachment;

// example loading the attachment data in GLSL

vec4 data = subpassLoad(inputAttachment);

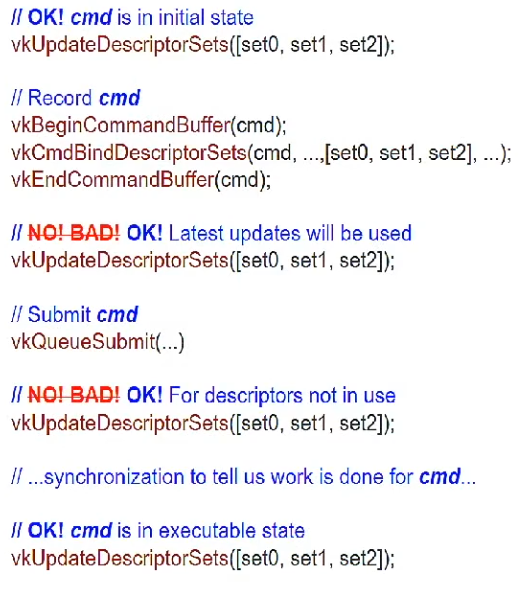

Updates

Implementation

-

A Descriptor Set, even though created and allocated, is still empty. We need to fill it up with data.

-

Updates must happen outside of a command record and execution.

-

No update after

vkCmdBindDescriptorSets(). -

Usually you update before

vkBeginCommandBuffer()or after thevkQueueSubmit()(if we know the sync is done for cmd).

-

-

If using Descriptor Indexing :

-

Descriptors can be updated after binding in command buffers.

-

Command buffer execution will use most recent updates.

-

-

.

.

-

-

-

dstSet-

Is the destination descriptor set to update.

-

-

dstBinding-

Is the descriptor binding within that set.

-

-

dstArrayElement-

Remember that descriptors can be arrays, so we also need to specify the first index in the array that we want to update.

-

If not using an array, the index is simply

0. -

Is the starting element in that array.

-

If the descriptor binding identified by

dstSetanddstBindinghas a descriptor type ofDESCRIPTOR_TYPE_INLINE_UNIFORM_BLOCKthendstArrayElementspecifies the starting byte offset within the binding.

-

-

descriptorCount-

It's a descriptor count, not a descriptor SET count!!

-

Is the number of descriptors to update.

-

If the descriptor binding identified by

dstSetanddstBindinghas a descriptor type ofDESCRIPTOR_TYPE_INLINE_UNIFORM_BLOCK, thendescriptorCountspecifies the number of bytes to update. -

Otherwise,

descriptorCountis one of-

the number of elements in

pImageInfo -

the number of elements in

pBufferInfo -

the number of elements in

pTexelBufferView -

a value matching the

dataSizemember of a VkWriteDescriptorSetInlineUniformBlock structure in thepNextchain -

a value matching the

accelerationStructureCountof a VkWriteDescriptorSetAccelerationStructureKHR or VkWriteDescriptorSetAccelerationStructureNV structure in thepNextchain -

a value matching the

descriptorCountof a VkWriteDescriptorSetTensorARM structure in thepNextchain

-

-

-

descriptorType-

We need to specify the type of descriptor again

-

Is a VkDescriptorType specifying the type of each descriptor in

pImageInfo,pBufferInfo, orpTexelBufferView. -

It must be the same type as the

descriptorTypespecified inVkDescriptorSetLayoutBindingfordstSetatdstBinding, except ifVkDescriptorSetLayoutBindingfordstSetatdstBindingis equal toDESCRIPTOR_TYPE_MUTABLE_EXT. -

The type of the descriptor also controls which array the descriptors are taken from.

-

-

pBufferInfo-

Is a pointer to an array of VkDescriptorBufferInfo structures or is ignored, as described below.

-

-

Structure specifying descriptor buffer information

-

Specifies the buffer and the region within it that contains the data for the descriptor.

-

buffer-

Is the buffer resource or NULL_HANDLE .

-

-

offset-

Is the offset in bytes from the start of

buffer. -

Access to buffer memory via this descriptor uses addressing that is relative to this starting offset.

-

For

DESCRIPTOR_TYPE_UNIFORM_BUFFER_DYNAMICandDESCRIPTOR_TYPE_STORAGE_BUFFER_DYNAMICdescriptor types:-

offsetis the base offset from which the dynamic offset is applied.

-

-

-

range-

Is the size in bytes that is used for this descriptor update, or

WHOLE_SIZEto use the range fromoffsetto the end of the buffer.-

When

rangeisWHOLE_SIZEthe effective range is calculated at vkUpdateDescriptorSets by taking the size ofbufferminus theoffset.

-

-

For

DESCRIPTOR_TYPE_UNIFORM_BUFFER_DYNAMICandDESCRIPTOR_TYPE_STORAGE_BUFFER_DYNAMICdescriptor types:-

rangeis the static size used for all dynamic offsets.

-

-

-

-

-

pImageInfo-

Is a pointer to an array of VkDescriptorImageInfo structures or is ignored, as described below.

-

-

imageLayout-

Is the layout that the image subresources accessible from

imageViewwill be in at the time this descriptor is accessed. -

Is used in descriptor updates for types

DESCRIPTOR_TYPE_SAMPLED_IMAGE,DESCRIPTOR_TYPE_STORAGE_IMAGE,DESCRIPTOR_TYPE_COMBINED_IMAGE_SAMPLER, andDESCRIPTOR_TYPE_INPUT_ATTACHMENT.

-

-

imageView-

Is an image view handle or NULL_HANDLE .

-

Is used in descriptor updates for types

DESCRIPTOR_TYPE_SAMPLED_IMAGE,DESCRIPTOR_TYPE_STORAGE_IMAGE,DESCRIPTOR_TYPE_COMBINED_IMAGE_SAMPLER, andDESCRIPTOR_TYPE_INPUT_ATTACHMENT.

-

-

sampler-

Is a sampler handle.

-

Is used in descriptor updates for types

DESCRIPTOR_TYPE_SAMPLERandDESCRIPTOR_TYPE_COMBINED_IMAGE_SAMPLERif the binding being updated does not use immutable samplers.

-

-

-

-

pTexelBufferView-

Is a pointer to an array of VkBufferView handles as described in the Buffer Views section or is ignored, as described below.

-

-

-

-

descriptorWriteCount-

Is the number of elements in the

pDescriptorWritesarray.

-

-

pDescriptorWrites-

Is a pointer to an array of VkWriteDescriptorSet structures describing the descriptor sets to write to.

-

-

descriptorCopyCount-

Is the number of elements in the

pDescriptorCopiesarray.

-

-

pDescriptorCopies-

Is a pointer to an array of VkCopyDescriptorSet structures describing the descriptor sets to copy between.

-

-

Best Practices

-

Don’t update descriptor sets if nothing in the set changed. In the model with slots that are shared between different stages, this can mean that if no textures are set between two draw calls, you don’t need to update the descriptor set with texture descriptors.

-

When rendering dynamic objects the application will need to push some amount of per-object data to the GPU, such as the MVP matrix. This data may not fit into the push constant limit for the device, so it becomes necessary to send it to the GPU by putting it into a

VkBufferand binding a descriptor set that points to it. -

Materials also need their own descriptor sets, which point to the textures they use. We can either bind per-material and per-object descriptor sets separately or collate them into a single set. Either way, complex applications will have a large amount of descriptor sets that may need to change on the fly, for example due to textures being streamed in or out.

-

Not-good Solution: One or more pools per-frame, resetting the pool :

-

The simplest approach to circumvent the issue is to have one or more

VkDescriptorPools per frame, reset them at the beginning of the frame and allocate the required descriptor sets from it. This approach will consist of a vkResetDescriptorPool() call at the beginning, followed by a series of vkAllocateDescriptorSets() and vkUpdateDescriptorSets() to fill them with data. -

This is very useful for things like per-frame descriptors. That way we can have descriptors that are used just for one frame, allocated dynamically, and then before we start the frame we completely delete all of them in one go.

-

This is confirmed to be a fast path by GPU vendors, and recommended to use when you need to handle per-frame descriptor sets.

-

The issue is that these calls can add a significant overhead to the CPU frame time, especially on mobile. In the worst cases, for example calling vkUpdateDescriptorSets() for each draw call, the time it takes to update descriptors can be longer than the time of the draws themselves.

-

-

Solution: Caching descriptor sets :

-

A major way to reduce descriptor set updates is to re-use them as much as possible. Instead of calling vkResetDescriptorPool() every frame, the app will keep the

VkDescriptorSethandles stored with some caching mechanism to access them. -

The cache could be a hashmap with the contents of the descriptor set (images, buffers) as key. This approach is used in our framework by default. It is possible to remove another level of indirection by storing descriptor set handles directly in the materials and/or meshes.

-

Caching descriptor sets has a dramatic effect on frame time for our CPU-heavy scene.

-

In this game on a 2019 mobile phone it went from 44ms (23fps) to 27ms (37fps). This is a 38% decrease in frame time.

-

This system is reasonably easy to implement for a static scene, but it becomes harder when you need to delete descriptor sets. Complex engines may implement techniques to figure out which descriptor sets have not been accessed for a certain number of frames, so they can be removed from the map.

-

This may correspond to calling vkFreeDescriptorSets() , but this solution poses another issue: in order to free individual descriptor sets the pool has to be created with the

DESCRIPTOR_POOL_CREATE_FREE_DESCRIPTOR_SETflag. Mobile implementations may use a simpler allocator if that flag is not set, relying on the fact that pool memory will only be recycled in block. -

It is possible to avoid using that flag by updating descriptor sets instead of deleting them. The application can keep track of recycled descriptor sets and re-use one of them when a new one is requested.

-

-

Solution: One buffer per-frame :

-

We will now explore an alternative approach, that is complementary to descriptor caching in some way. Especially for applications in which descriptor caching is not quite feasible, buffer management is another lever for optimizing performance.

-

As discussed at the beginning, each rendered object will typically need some uniform data along with it, that needs to be pushed to the GPU somehow. A straightforward approach is to store a

VkBufferper object and update that data for each frame. -

This already poses an interesting question: is one buffer enough? The problem is that this data will change dynamically and will be in use by the GPU while the frame is in flight.

-

Since we do not want to flush the GPU pipeline between each frame, we will need to keep several copies of each buffer, one for each frame in flight.

-

Another similar option is to use just one buffer per object, but with a size equal to

num_frames * buffer_size, then offset it dynamically based on the frame index.-

For each frame, one buffer per object is created and filled with data. This means that we will have many descriptor sets to create, since every object will need one that points to its

VkBuffer. Furthermore, we will have to update many buffers separately, meaning we cannot control their memory layout and we might lose some optimization opportunities with caching.

-

-

We can address both problems by reverting the approach: instead of having a

VkBufferper object containing per-frame data, we will have aVkBufferper frame containing per-object data. The buffer will be cleared at the beginning of the frame, then each object will record its data and will receive a dynamic offset to be used at vkCmdBindDescriptorSets() time. -

With this approach we will need fewer descriptor sets, as more objects can share the same one: they will all reference the same

VkBuffer, but at different dynamic offsets. Furthermore, we can control the memory layout within the buffer. -

Using a single large

VkBufferin this case shows a performance improvement similar to descriptor set caching. -

For this relatively simple scene stacking the two approaches does not provide a further performance boost, but for a more complex case they do stack nicely:

-

Descriptor caching is necessary when the number of descriptor sets is not just due to

VkBuffers with uniform data, for example if the scene uses a large amount of materials/textures. -

Buffer management will help reduce the overall number of descriptor sets, thus cache pressure will be reduced and the cache itself will be smaller.

-

-

(2025-09-08)

-

I personally liked this technique much more than descriptor caching.

-

It sounds more concrete than fiddling with descriptor sets.

-

Reminds me of Buffer Device Address.

-

-

-

Do

-

Update already allocated but no longer referenced descriptor sets, instead of resetting descriptor pools and reallocating new descriptor sets.

-

Prefer reusing already allocated descriptor sets, and not updating them with the same information every time.

-

Consider caching your descriptor sets when feasible.

-

Consider using a single (or few)

VkBufferper frame with dynamic offsets. -

Batch calls to vkAllocateDescriptorSets if possible – on some drivers, each call has measurable overhead, so if you need to update multiple sets, allocating both in one call can be faster;

-

To update descriptor sets, either use vkUpdateDescriptorSets with descriptor write array, or use

vkUpdateDescriptorSetWithTemplatefrom Vulkan 1.1. Using the descriptor copy functionality ofvkUpdateDescriptorSetsis tempting with dynamic descriptor management for copying most descriptors out of a previously allocated array, but this can be slow on drivers that allocate descriptors out of write-combined memory. Descriptor templates can reduce the amount of work application needs to do to perform updates – since in this scheme you need to read descriptor information out of shadow state maintained by application, descriptor templates allow you to tell the driver the layout of your shadow state, making updates substantially faster on some drivers. -

Prefer dynamic uniform buffers to updating uniform buffer descriptors. Dynamic uniform buffers allow to specify offsets into buffer objects using pDynamicOffsets argument of vkCmdBindDescriptorSets without allocating and updating new descriptors. This works well with dynamic constant management where constants for draw calls are allocated out of large uniform buffers, substantially reduce CPU overhead, and can be more efficient on GPU. While on some GPUs the number of dynamic buffers must be kept small to avoid extra overhead in the driver, one or two dynamic uniform buffers should work well in this scheme on all architectures.

-

On some drivers, unfortunately the allocate & update path is not very optimal – on some mobile hardware, it may make sense to cache descriptor sets based on the descriptors they contain if they can be reused later in the frame.

-

Descriptor Set Layout

-

Contains the information about what that descriptor set holds.

-

Specifies the types of resources that are going to be accessed by the pipeline, just like a render pass specifies the types of attachments that will be accessed.

-

How many :

-

You need to specify a descriptor set layout for each descriptor set when creating the pipeline layout.

-

You can use this feature to put descriptors that vary per-object and descriptors that are shared into separate descriptor sets.

-

In that case, you avoid rebinding most of the descriptors across draw calls which are potentially more efficient.

-

-

Since the buffer structure is identical across frames, one layout suffices.

-

Create only 1 descriptor set layout, regardless of frames in-flight.

-

This layout defines the type of resource (e.g.,

VKDESCRIPTORTYPEUNIFORMBUFFER) and its binding point.

-

-

-

-

Opaque handle to a descriptor set layout object.

-

Is defined by an array of zero or more descriptor bindings.

-

Where it's used :

-

VkDescriptorSetLayoutBinding.-

Structure specifying a descriptor set layout binding.

-

Each individual descriptor binding is specified by a descriptor type, a count (array size) of the number of descriptors in the binding, a set of shader stages that can access the binding, and (if using immutable samplers) an array of sampler descriptors.

-

Bindings that are not specified have a

descriptorCountandstageFlagsof zero, and the value ofdescriptorTypeis undefined. -

binding-

Is the binding number of this entry and corresponds to a resource of the same binding number in the shader stages.

-

Used in the shader and the type of descriptor, which is a uniform buffer object.

-

-

descriptorType-

Is a VkDescriptorType specifying which type of resource descriptors are used for this binding.

-

-

descriptorCount-

Insight :

-

It's a descriptor count, not a descriptor SET count !! It's just to specify how many resources is expected to be in that binding.

-

It makes complete sense to be used for arrays.

-

Caio:

-

What happens if the values don't match? For example, trying to get the index 5 of the array, when the binding was described having

descriptorCount = 1?

-

-

Oni:

-

I don't know if this is specified. I guess it's only going to update the first element. So you're going to read bogus data. Maybe it changes between different drivers, no idea.

-

-

-

What value to use :

-

A MVP transformation is in a single uniform buffer, so we using a

descriptorCountof1. -

In other words, a whole struct counts as

1.

-

-

Is the number of descriptors contained in the binding, accessed in a shader as an array.

-

Except if

descriptorTypeisDESCRIPTOR_TYPE_INLINE_UNIFORM_BLOCKin which casedescriptorCountis the size in bytes of the inline uniform block.

-

-

If

descriptorCountis zero this binding entry is reserved and the resource must not be accessed from any stage via this binding within any pipeline using the set layout. -

It is possible for the shader variable to represent an array of uniform buffer objects, and this property specifies the number of values in the array.

-

Examples :

-

This could be used to specify a transformation for each of the bones in a skeleton for skeletal animation.

-

-

-

stageFlags-

Is a bitmask of VkShaderStageFlagBits specifying which pipeline shader stages can access a resource for this binding.

-

SHADER_STAGE_ALLis a shorthand specifying all defined shader stages, including any additional stages defined by extensions.

-

-

If a shader stage is not included in

stageFlags, then a resource must not be accessed from that stage via this binding within any pipeline using the set layout. -

Other than input attachments which are limited to the fragment shader, there are no limitations on what combinations of stages can use a descriptor binding, and in particular a binding can be used by both graphics stages and the compute stage.

-

-

pImmutableSamplers-

Affects initialization of samplers.

-

If

descriptorTypespecifies aDESCRIPTOR_TYPE_SAMPLERorDESCRIPTOR_TYPE_COMBINED_IMAGE_SAMPLERtype descriptor, thenpImmutableSamplerscan be used to initialize a set of immutable samplers . -

If

descriptorTypeis not one of these descriptor types, thenpImmutableSamplersis ignored . -

Immutable samplers are permanently bound into the set layout and must not be changed; updating a

DESCRIPTOR_TYPE_SAMPLERdescriptor with immutable samplers is not allowed and updates to aDESCRIPTOR_TYPE_COMBINED_IMAGE_SAMPLERdescriptor with immutable samplers does not modify the samplers (the image views are updated, but the sampler updates are ignored). -

If

pImmutableSamplersis notNULL, then it is a pointer to an array of sampler handles that will be copied into the set layout and used for the corresponding binding. Only the sampler handles are copied; the sampler objects must not be destroyed before the final use of the set layout and any descriptor pools and sets created using it. -

If

pImmutableSamplersisNULL, then the sampler slots are dynamic and sampler handles must be bound into descriptor sets using this layout. ]

-

-

-

VkDescriptorSetLayoutCreateInfo.-

pBindings-

A pointer to an array of

VkDescriptorSetLayoutBindingstructures.

-

-

bindingCount-

Is the number of elements in

pBindings.

-

-

flags-

Is a bitmask of VkDescriptorSetLayoutCreateFlagBits specifying options for descriptor set layout creation.

-

-

-

vkCreateDescriptorSetLayout().-

Create a new descriptor set layout.

-

pCreateInfo-

Is a pointer to a VkDescriptorSetLayoutCreateInfo structure specifying the state of the descriptor set layout object.

-

-

pAllocator-

Controls host memory allocation as described in the Memory Allocation chapter.

-

-

pSetLayout-

Is a pointer to a VkDescriptorSetLayout handle in which the resulting descriptor set layout object is returned.

-

-

-

-

-

Structure specifying the parameters of a newly created pipeline layout object

-

setLayoutCount-

Is the number of descriptor sets included in the pipeline layout.

-

How it works :

-

It's possible to have multiple descriptor sets (

set = 0,set = 1, etc). -

"You can have set = 0 being a set that is always bound and never changes, set = 1 is something specific to the current object being rendered, etc."

-

-

-

pSetLayouts-

Is a pointer to an array of

VkDescriptorSetLayoutobjects. -

The implementation must not access these objects outside of the duration of the command this structure is passed to.

-

-

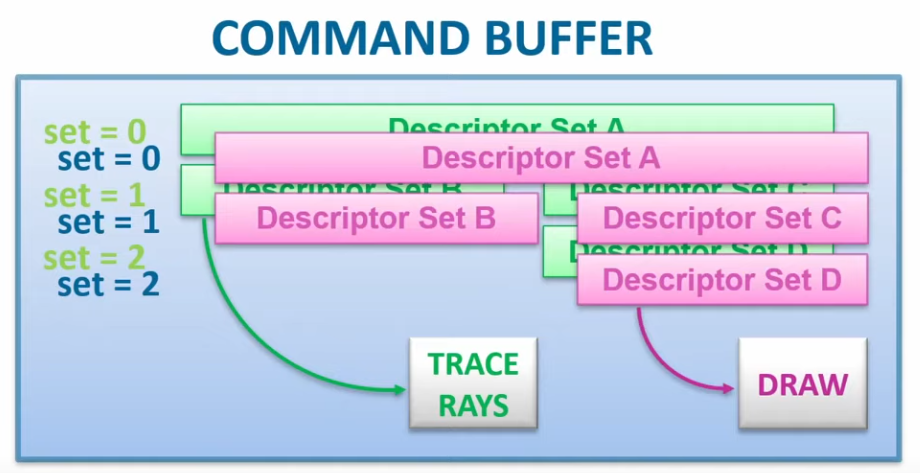

Binding

-

A Descriptor state is tracked only inside a command buffer; they are always bound at command buffer level; their state is local to command buffers.

-

They are not bound at queue level or global level, only to command buffers.

-

-

.

.

-

Which set index to choose :

-

According to GPU vendors, each descriptor set slot has a cost, so the fewer we have, the better.

-

"Organize shader inputs into "sets" by update frequency."

-

Rarely changes -> low index.

-

Changes frequently -> high index.

-

Usually Descriptor Set 0 is used to always bind some global scene data, which will contain some uniform buffers and some special textures, and Descriptor Set 1 will be used for per-object data.

-

-

-

It needs to be done before the

vkCmdDrawIndexed()calls, for example. -

commandBuffer-

Is the command buffer that the descriptor sets will be bound to.

-

-

pipelineBindPoint-

Is a VkPipelineBindPoint indicating the type of the pipeline that will use the descriptors. There is a separate set of bind points for each pipeline type, so binding one does not disturb the others.

-

Unlike vertex and index buffers, descriptor sets are not unique to graphics pipelines, therefore, we need to specify if we want to bind descriptor sets to the graphics or compute pipeline.

-

Indicates the type of the pipeline that will use the descriptor.

-

There is a separate set of bind points for each pipeline type, so binding one does not disturb the others.

-

.

.

-

A raytracing command takes the currently bound descriptors from the raytracing bind point.

-

A draw command takes the currently bound descriptors from the graphics bind point.

-

The two don't interfere with each other.

-

-

-

layout-

Is a VkPipelineLayout object used to program the bindings.